mirror of

https://github.com/QwenLM/qwen-code.git

synced 2026-01-18 14:56:20 +00:00

Compare commits

1 Commits

chore/no-t

...

fix/mcp-se

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

6f33d92b2c |

@@ -201,11 +201,6 @@ If you encounter issues, check the [troubleshooting guide](https://qwenlm.github

|

||||

|

||||

To report a bug from within the CLI, run `/bug` and include a short title and repro steps.

|

||||

|

||||

## Connect with Us

|

||||

|

||||

- Discord: https://discord.gg/ycKBjdNd

|

||||

- Dingtalk: https://qr.dingtalk.com/action/joingroup?code=v1,k1,+FX6Gf/ZDlTahTIRi8AEQhIaBlqykA0j+eBKKdhLeAE=&_dt_no_comment=1&origin=1

|

||||

|

||||

## Acknowledgments

|

||||

|

||||

This project is based on [Google Gemini CLI](https://github.com/google-gemini/gemini-cli). We acknowledge and appreciate the excellent work of the Gemini CLI team. Our main contribution focuses on parser-level adaptations to better support Qwen-Coder models.

|

||||

|

||||

@@ -202,7 +202,7 @@ This is the most critical stage where files are moved and transformed into their

|

||||

- Copies README.md and LICENSE to dist/

|

||||

- Copies locales folder for internationalization

|

||||

- Creates a clean package.json for distribution with only necessary dependencies

|

||||

- Keeps distribution dependencies minimal (no bundled runtime deps)

|

||||

- Includes runtime dependencies like tiktoken

|

||||

- Maintains optional dependencies for node-pty

|

||||

|

||||

2. The JavaScript Bundle is Created:

|

||||

|

||||

@@ -480,7 +480,7 @@ Arguments passed directly when running the CLI can override other configurations

|

||||

| `--telemetry-otlp-protocol` | | Sets the OTLP protocol for telemetry (`grpc` or `http`). | | Defaults to `grpc`. See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--telemetry-log-prompts` | | Enables logging of prompts for telemetry. | | See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--checkpointing` | | Enables [checkpointing](../features/checkpointing). | | |

|

||||

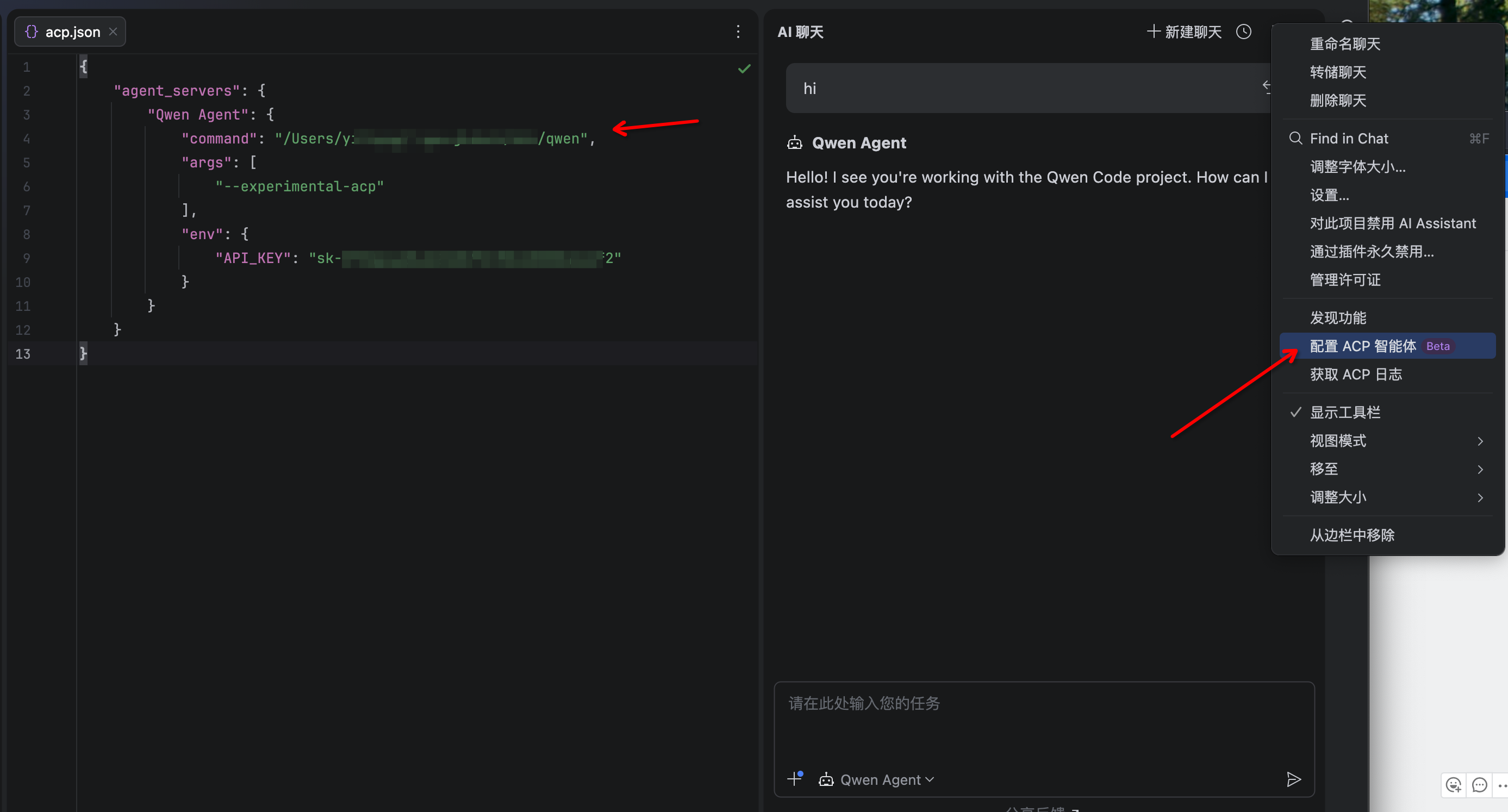

| `--acp` | | Enables ACP mode (Agent Client Protocol). Useful for IDE/editor integrations like [Zed](../integration-zed). | | Stable. Replaces the deprecated `--experimental-acp` flag. |

|

||||

| `--acp` | | Enables ACP mode (Agent Control Protocol). Useful for IDE/editor integrations like [Zed](../integration-zed). | | Stable. Replaces the deprecated `--experimental-acp` flag. |

|

||||

| `--experimental-skills` | | Enables experimental [Agent Skills](../features/skills) (registers the `skill` tool and loads Skills from `.qwen/skills/` and `~/.qwen/skills/`). | | Experimental. |

|

||||

| `--extensions` | `-e` | Specifies a list of extensions to use for the session. | Extension names | If not provided, all available extensions are used. Use the special term `qwen -e none` to disable all extensions. Example: `qwen -e my-extension -e my-other-extension` |

|

||||

| `--list-extensions` | `-l` | Lists all available extensions and exits. | | |

|

||||

|

||||

BIN

docs/users/images/jetbrains-acp.png

Normal file

BIN

docs/users/images/jetbrains-acp.png

Normal file

Binary file not shown.

|

After Width: | Height: | Size: 36 KiB |

@@ -1,11 +1,11 @@

|

||||

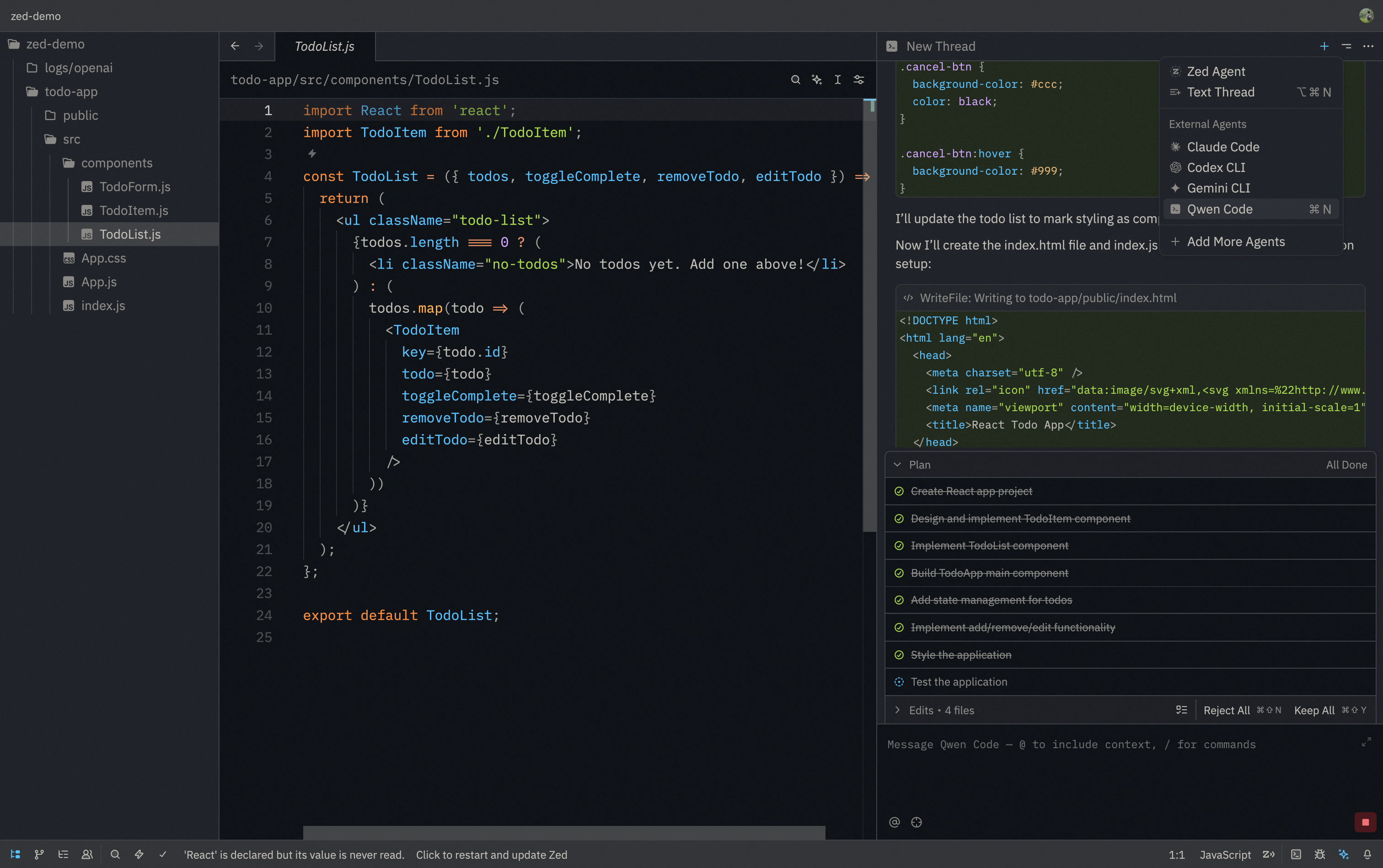

# JetBrains IDEs

|

||||

|

||||

> JetBrains IDEs provide native support for AI coding assistants through the Agent Client Protocol (ACP). This integration allows you to use Qwen Code directly within your JetBrains IDE with real-time code suggestions.

|

||||

> JetBrains IDEs provide native support for AI coding assistants through the Agent Control Protocol (ACP). This integration allows you to use Qwen Code directly within your JetBrains IDE with real-time code suggestions.

|

||||

|

||||

### Features

|

||||

|

||||

- **Native agent experience**: Integrated AI assistant panel within your JetBrains IDE

|

||||

- **Agent Client Protocol**: Full support for ACP enabling advanced IDE interactions

|

||||

- **Agent Control Protocol**: Full support for ACP enabling advanced IDE interactions

|

||||

- **Symbol management**: #-mention files to add them to the conversation context

|

||||

- **Conversation history**: Access to past conversations within the IDE

|

||||

|

||||

@@ -40,7 +40,7 @@

|

||||

|

||||

4. The Qwen Code agent should now be available in the AI Assistant panel

|

||||

|

||||

|

||||

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

|

||||

@@ -22,7 +22,13 @@

|

||||

|

||||

### Installation

|

||||

|

||||

Download and install the extension from the [Visual Studio Code Extension Marketplace](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion).

|

||||

1. Install Qwen Code CLI:

|

||||

|

||||

```bash

|

||||

npm install -g qwen-code

|

||||

```

|

||||

|

||||

2. Download and install the extension from the [Visual Studio Code Extension Marketplace](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion).

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

# Zed Editor

|

||||

|

||||

> Zed Editor provides native support for AI coding assistants through the Agent Client Protocol (ACP). This integration allows you to use Qwen Code directly within Zed's interface with real-time code suggestions.

|

||||

> Zed Editor provides native support for AI coding assistants through the Agent Control Protocol (ACP). This integration allows you to use Qwen Code directly within Zed's interface with real-time code suggestions.

|

||||

|

||||

|

||||

|

||||

@@ -20,9 +20,9 @@

|

||||

|

||||

1. Install Qwen Code CLI:

|

||||

|

||||

```bash

|

||||

npm install -g @qwen-code/qwen-code

|

||||

```

|

||||

```bash

|

||||

npm install -g qwen-code

|

||||

```

|

||||

|

||||

2. Download and install [Zed Editor](https://zed.dev/)

|

||||

|

||||

|

||||

@@ -33,6 +33,7 @@ const external = [

|

||||

'@lydell/node-pty-linux-x64',

|

||||

'@lydell/node-pty-win32-arm64',

|

||||

'@lydell/node-pty-win32-x64',

|

||||

'tiktoken',

|

||||

];

|

||||

|

||||

esbuild

|

||||

|

||||

@@ -831,7 +831,7 @@ describe('Permission Control (E2E)', () => {

|

||||

TEST_TIMEOUT,

|

||||

);

|

||||

|

||||

it.skip(

|

||||

it(

|

||||

'should execute dangerous commands without confirmation',

|

||||

async () => {

|

||||

const q = query({

|

||||

|

||||

10

package-lock.json

generated

10

package-lock.json

generated

@@ -15682,6 +15682,12 @@

|

||||

"tslib": "^2"

|

||||

}

|

||||

},

|

||||

"node_modules/tiktoken": {

|

||||

"version": "1.0.22",

|

||||

"resolved": "https://registry.npmjs.org/tiktoken/-/tiktoken-1.0.22.tgz",

|

||||

"integrity": "sha512-PKvy1rVF1RibfF3JlXBSP0Jrcw2uq3yXdgcEXtKTYn3QJ/cBRBHDnrJ5jHky+MENZ6DIPwNUGWpkVx+7joCpNA==",

|

||||

"license": "MIT"

|

||||

},

|

||||

"node_modules/tinybench": {

|

||||

"version": "2.9.0",

|

||||

"resolved": "https://registry.npmjs.org/tinybench/-/tinybench-2.9.0.tgz",

|

||||

@@ -17984,6 +17990,7 @@

|

||||

"shell-quote": "^1.8.3",

|

||||

"simple-git": "^3.28.0",

|

||||

"strip-ansi": "^7.1.0",

|

||||

"tiktoken": "^1.0.21",

|

||||

"undici": "^6.22.0",

|

||||

"uuid": "^9.0.1",

|

||||

"ws": "^8.18.0"

|

||||

@@ -18581,10 +18588,11 @@

|

||||

},

|

||||

"packages/sdk-typescript": {

|

||||

"name": "@qwen-code/sdk",

|

||||

"version": "0.1.3",

|

||||

"version": "0.1.2",

|

||||

"license": "Apache-2.0",

|

||||

"dependencies": {

|

||||

"@modelcontextprotocol/sdk": "^1.25.1",

|

||||

"tiktoken": "^1.0.21",

|

||||

"zod": "^3.25.0"

|

||||

},

|

||||

"devDependencies": {

|

||||

|

||||

@@ -38,15 +38,14 @@

|

||||

"dependencies": {

|

||||

"@google/genai": "1.30.0",

|

||||

"@iarna/toml": "^2.2.5",

|

||||

"@modelcontextprotocol/sdk": "^1.25.1",

|

||||

"@qwen-code/qwen-code-core": "file:../core",

|

||||

"@modelcontextprotocol/sdk": "^1.25.1",

|

||||

"@types/update-notifier": "^6.0.8",

|

||||

"ansi-regex": "^6.2.2",

|

||||

"command-exists": "^1.2.9",

|

||||

"comment-json": "^4.2.5",

|

||||

"diff": "^7.0.0",

|

||||

"dotenv": "^17.1.0",

|

||||

"extract-zip": "^2.0.1",

|

||||

"fzf": "^0.5.2",

|

||||

"glob": "^10.5.0",

|

||||

"highlight.js": "^11.11.1",

|

||||

@@ -66,6 +65,7 @@

|

||||

"strip-json-comments": "^3.1.1",

|

||||

"tar": "^7.5.2",

|

||||

"undici": "^6.22.0",

|

||||

"extract-zip": "^2.0.1",

|

||||

"update-notifier": "^7.3.1",

|

||||

"wrap-ansi": "9.0.2",

|

||||

"yargs": "^17.7.2",

|

||||

@@ -74,7 +74,6 @@

|

||||

"devDependencies": {

|

||||

"@babel/runtime": "^7.27.6",

|

||||

"@google/gemini-cli-test-utils": "file:../test-utils",

|

||||

"@qwen-code/qwen-code-test-utils": "file:../test-utils",

|

||||

"@testing-library/react": "^16.3.0",

|

||||

"@types/archiver": "^6.0.3",

|

||||

"@types/command-exists": "^1.2.3",

|

||||

@@ -93,7 +92,8 @@

|

||||

"pretty-format": "^30.0.2",

|

||||

"react-dom": "^19.1.0",

|

||||

"typescript": "^5.3.3",

|

||||

"vitest": "^3.1.1"

|

||||

"vitest": "^3.1.1",

|

||||

"@qwen-code/qwen-code-test-utils": "file:../test-utils"

|

||||

},

|

||||

"engines": {

|

||||

"node": ">=20"

|

||||

|

||||

@@ -83,26 +83,12 @@ export const useAuthCommand = (

|

||||

async (authType: AuthType, credentials?: OpenAICredentials) => {

|

||||

try {

|

||||

const authTypeScope = getPersistScopeForModelSelection(settings);

|

||||

|

||||

// Persist authType

|

||||

settings.setValue(

|

||||

authTypeScope,

|

||||

'security.auth.selectedType',

|

||||

authType,

|

||||

);

|

||||

|

||||

// Persist model from ContentGenerator config (handles fallback cases)

|

||||

// This ensures that when syncAfterAuthRefresh falls back to default model,

|

||||

// it gets persisted to settings.json

|

||||

const contentGeneratorConfig = config.getContentGeneratorConfig();

|

||||

if (contentGeneratorConfig?.model) {

|

||||

settings.setValue(

|

||||

authTypeScope,

|

||||

'model.name',

|

||||

contentGeneratorConfig.model,

|

||||

);

|

||||

}

|

||||

|

||||

// Only update credentials if not switching to QWEN_OAUTH,

|

||||

// so that OpenAI credentials are preserved when switching to QWEN_OAUTH.

|

||||

if (authType !== AuthType.QWEN_OAUTH && credentials) {

|

||||

@@ -120,6 +106,9 @@ export const useAuthCommand = (

|

||||

credentials.baseUrl,

|

||||

);

|

||||

}

|

||||

if (credentials?.model != null) {

|

||||

settings.setValue(authTypeScope, 'model.name', credentials.model);

|

||||

}

|

||||

}

|

||||

} catch (error) {

|

||||

handleAuthFailure(error);

|

||||

|

||||

@@ -8,7 +8,10 @@ import { describe, it, expect, beforeEach, afterEach, vi } from 'vitest';

|

||||

import * as fs from 'node:fs';

|

||||

import * as path from 'node:path';

|

||||

import * as os from 'node:os';

|

||||

import { updateSettingsFilePreservingFormat } from './commentJson.js';

|

||||

import {

|

||||

updateSettingsFilePreservingFormat,

|

||||

applyUpdates,

|

||||

} from './commentJson.js';

|

||||

|

||||

describe('commentJson', () => {

|

||||

let tempDir: string;

|

||||

@@ -180,3 +183,18 @@ describe('commentJson', () => {

|

||||

});

|

||||

});

|

||||

});

|

||||

|

||||

describe('applyUpdates', () => {

|

||||

it('should apply updates correctly', () => {

|

||||

const original = { a: 1, b: { c: 2 } };

|

||||

const updates = { b: { c: 3 } };

|

||||

const result = applyUpdates(original, updates);

|

||||

expect(result).toEqual({ a: 1, b: { c: 3 } });

|

||||

});

|

||||

it('should apply updates correctly when empty', () => {

|

||||

const original = { a: 1, b: { c: 2 } };

|

||||

const updates = { b: {} };

|

||||

const result = applyUpdates(original, updates);

|

||||

expect(result).toEqual({ a: 1, b: {} });

|

||||

});

|

||||

});

|

||||

|

||||

@@ -38,7 +38,7 @@ export function updateSettingsFilePreservingFormat(

|

||||

fs.writeFileSync(filePath, updatedContent, 'utf-8');

|

||||

}

|

||||

|

||||

function applyUpdates(

|

||||

export function applyUpdates(

|

||||

current: Record<string, unknown>,

|

||||

updates: Record<string, unknown>,

|

||||

): Record<string, unknown> {

|

||||

@@ -50,6 +50,7 @@ function applyUpdates(

|

||||

typeof value === 'object' &&

|

||||

value !== null &&

|

||||

!Array.isArray(value) &&

|

||||

Object.keys(value).length > 0 &&

|

||||

typeof result[key] === 'object' &&

|

||||

result[key] !== null &&

|

||||

!Array.isArray(result[key])

|

||||

|

||||

@@ -120,7 +120,7 @@ export function resolveCliGenerationConfig(

|

||||

|

||||

// Log warnings if any

|

||||

for (const warning of resolved.warnings) {

|

||||

console.warn(warning);

|

||||

console.warn(`[modelProviderUtils] ${warning}`);

|

||||

}

|

||||

|

||||

// Resolve OpenAI logging config (CLI-specific, not part of core resolver)

|

||||

|

||||

@@ -63,6 +63,7 @@

|

||||

"shell-quote": "^1.8.3",

|

||||

"simple-git": "^3.28.0",

|

||||

"strip-ansi": "^7.1.0",

|

||||

"tiktoken": "^1.0.21",

|

||||

"undici": "^6.22.0",

|

||||

"uuid": "^9.0.1",

|

||||

"ws": "^8.18.0"

|

||||

|

||||

@@ -19,7 +19,9 @@ const mockTokenizer = {

|

||||

};

|

||||

|

||||

vi.mock('../../utils/request-tokenizer/index.js', () => ({

|

||||

RequestTokenEstimator: vi.fn(() => mockTokenizer),

|

||||

getDefaultTokenizer: vi.fn(() => mockTokenizer),

|

||||

DefaultRequestTokenizer: vi.fn(() => mockTokenizer),

|

||||

disposeDefaultTokenizer: vi.fn(),

|

||||

}));

|

||||

|

||||

type AnthropicCreateArgs = [unknown, { signal?: AbortSignal }?];

|

||||

@@ -350,7 +352,9 @@ describe('AnthropicContentGenerator', () => {

|

||||

};

|

||||

|

||||

const result = await generator.countTokens(request);

|

||||

expect(mockTokenizer.calculateTokens).toHaveBeenCalledWith(request);

|

||||

expect(mockTokenizer.calculateTokens).toHaveBeenCalledWith(request, {

|

||||

textEncoding: 'cl100k_base',

|

||||

});

|

||||

expect(result.totalTokens).toBe(50);

|

||||

});

|

||||

|

||||

|

||||

@@ -25,7 +25,7 @@ type MessageCreateParamsNonStreaming =

|

||||

Anthropic.MessageCreateParamsNonStreaming;

|

||||

type MessageCreateParamsStreaming = Anthropic.MessageCreateParamsStreaming;

|

||||

type RawMessageStreamEvent = Anthropic.RawMessageStreamEvent;

|

||||

import { RequestTokenEstimator } from '../../utils/request-tokenizer/index.js';

|

||||

import { getDefaultTokenizer } from '../../utils/request-tokenizer/index.js';

|

||||

import { safeJsonParse } from '../../utils/safeJsonParse.js';

|

||||

import { AnthropicContentConverter } from './converter.js';

|

||||

|

||||

@@ -105,8 +105,10 @@ export class AnthropicContentGenerator implements ContentGenerator {

|

||||

request: CountTokensParameters,

|

||||

): Promise<CountTokensResponse> {

|

||||

try {

|

||||

const estimator = new RequestTokenEstimator();

|

||||

const result = await estimator.calculateTokens(request);

|

||||

const tokenizer = getDefaultTokenizer();

|

||||

const result = await tokenizer.calculateTokens(request, {

|

||||

textEncoding: 'cl100k_base',

|

||||

});

|

||||

|

||||

return {

|

||||

totalTokens: result.totalTokens,

|

||||

|

||||

@@ -153,26 +153,6 @@ vi.mock('../telemetry/loggers.js', () => ({

|

||||

logNextSpeakerCheck: vi.fn(),

|

||||

}));

|

||||

|

||||

// Mock RequestTokenizer to use simple character-based estimation

|

||||

vi.mock('../utils/request-tokenizer/requestTokenizer.js', () => ({

|

||||

RequestTokenizer: class {

|

||||

async calculateTokens(request: { contents: unknown }) {

|

||||

// Simple estimation: count characters in JSON and divide by 4

|

||||

const totalChars = JSON.stringify(request.contents).length;

|

||||

return {

|

||||

totalTokens: Math.floor(totalChars / 4),

|

||||

breakdown: {

|

||||

textTokens: Math.floor(totalChars / 4),

|

||||

imageTokens: 0,

|

||||

audioTokens: 0,

|

||||

otherTokens: 0,

|

||||

},

|

||||

processingTime: 0,

|

||||

};

|

||||

}

|

||||

},

|

||||

}));

|

||||

|

||||

/**

|

||||

* Array.fromAsync ponyfill, which will be available in es 2024.

|

||||

*

|

||||

@@ -437,12 +417,6 @@ describe('Gemini Client (client.ts)', () => {

|

||||

] as Content[],

|

||||

originalTokenCount = 1000,

|

||||

summaryText = 'This is a summary.',

|

||||

// Token counts returned in usageMetadata to simulate what the API would return

|

||||

// Default values ensure successful compression:

|

||||

// newTokenCount = originalTokenCount - (compressionInputTokenCount - 1000) + compressionOutputTokenCount

|

||||

// = 1000 - (1600 - 1000) + 50 = 1000 - 600 + 50 = 450 (< 1000, success)

|

||||

compressionInputTokenCount = 1600,

|

||||

compressionOutputTokenCount = 50,

|

||||

} = {}) {

|

||||

const mockOriginalChat: Partial<GeminiChat> = {

|

||||

getHistory: vi.fn((_curated?: boolean) => chatHistory),

|

||||

@@ -464,12 +438,6 @@ describe('Gemini Client (client.ts)', () => {

|

||||

},

|

||||

},

|

||||

],

|

||||

usageMetadata: {

|

||||

promptTokenCount: compressionInputTokenCount,

|

||||

candidatesTokenCount: compressionOutputTokenCount,

|

||||

totalTokenCount:

|

||||

compressionInputTokenCount + compressionOutputTokenCount,

|

||||

},

|

||||

} as unknown as GenerateContentResponse);

|

||||

|

||||

// Calculate what the new history will be

|

||||

@@ -509,13 +477,11 @@ describe('Gemini Client (client.ts)', () => {

|

||||

.fn()

|

||||

.mockResolvedValue(mockNewChat as GeminiChat);

|

||||

|

||||

// New token count formula: originalTokenCount - (compressionInputTokenCount - 1000) + compressionOutputTokenCount

|

||||

const estimatedNewTokenCount = Math.max(

|

||||

const totalChars = newCompressedHistory.reduce(

|

||||

(total, content) => total + JSON.stringify(content).length,

|

||||

0,

|

||||

originalTokenCount -

|

||||

(compressionInputTokenCount - 1000) +

|

||||

compressionOutputTokenCount,

|

||||

);

|

||||

const estimatedNewTokenCount = Math.floor(totalChars / 4);

|

||||

|

||||

return {

|

||||

client,

|

||||

@@ -527,58 +493,49 @@ describe('Gemini Client (client.ts)', () => {

|

||||

|

||||

describe('when compression inflates the token count', () => {

|

||||

it('allows compression to be forced/manual after a failure', async () => {

|

||||

// Call 1 (Fails): Setup with token counts that will inflate

|

||||

// newTokenCount = originalTokenCount - (compressionInputTokenCount - 1000) + compressionOutputTokenCount

|

||||

// = 100 - (1010 - 1000) + 200 = 100 - 10 + 200 = 290 > 100 (inflation)

|

||||

// Call 1 (Fails): Setup with a long summary to inflate tokens

|

||||

const longSummary = 'long summary '.repeat(100);

|

||||

const { client, estimatedNewTokenCount: inflatedTokenCount } = setup({

|

||||

originalTokenCount: 100,

|

||||

summaryText: longSummary,

|

||||

compressionInputTokenCount: 1010,

|

||||

compressionOutputTokenCount: 200,

|

||||

});

|

||||

expect(inflatedTokenCount).toBeGreaterThan(100); // Ensure setup is correct

|

||||

|

||||

await client.tryCompressChat('prompt-id-4', false); // Fails

|

||||

|

||||

// Call 2 (Forced): Re-setup with token counts that will compress

|

||||

// newTokenCount = 100 - (1100 - 1000) + 50 = 100 - 100 + 50 = 50 <= 100 (compression)

|

||||

// Call 2 (Forced): Re-setup with a short summary

|

||||

const shortSummary = 'short';

|

||||

const { estimatedNewTokenCount: compressedTokenCount } = setup({

|

||||

originalTokenCount: 100,

|

||||

summaryText: shortSummary,

|

||||

compressionInputTokenCount: 1100,

|

||||

compressionOutputTokenCount: 50,

|

||||

});

|

||||

expect(compressedTokenCount).toBeLessThanOrEqual(100); // Ensure setup is correct

|

||||

|

||||

const result = await client.tryCompressChat('prompt-id-4', true); // Forced

|

||||

|

||||

expect(result.compressionStatus).toBe(CompressionStatus.COMPRESSED);

|

||||

expect(result.originalTokenCount).toBe(100);

|

||||

// newTokenCount might be clamped to originalTokenCount due to tolerance logic

|

||||

expect(result.newTokenCount).toBeLessThanOrEqual(100);

|

||||

expect(result).toEqual({

|

||||

compressionStatus: CompressionStatus.COMPRESSED,

|

||||

newTokenCount: compressedTokenCount,

|

||||

originalTokenCount: 100,

|

||||

});

|

||||

});

|

||||

|

||||

it('yields the result even if the compression inflated the tokens', async () => {

|

||||

// newTokenCount = 100 - (1010 - 1000) + 200 = 100 - 10 + 200 = 290 > 100 (inflation)

|

||||

const longSummary = 'long summary '.repeat(100);

|

||||

const { client, estimatedNewTokenCount } = setup({

|

||||

originalTokenCount: 100,

|

||||

summaryText: longSummary,

|

||||

compressionInputTokenCount: 1010,

|

||||

compressionOutputTokenCount: 200,

|

||||

});

|

||||

expect(estimatedNewTokenCount).toBeGreaterThan(100); // Ensure setup is correct

|

||||

|

||||

const result = await client.tryCompressChat('prompt-id-4', false);

|

||||

|

||||

expect(result.compressionStatus).toBe(

|

||||

CompressionStatus.COMPRESSION_FAILED_INFLATED_TOKEN_COUNT,

|

||||

);

|

||||

expect(result.originalTokenCount).toBe(100);

|

||||

// The newTokenCount should be higher than original since compression failed due to inflation

|

||||

expect(result.newTokenCount).toBeGreaterThan(100);

|

||||

expect(result).toEqual({

|

||||

compressionStatus:

|

||||

CompressionStatus.COMPRESSION_FAILED_INFLATED_TOKEN_COUNT,

|

||||

newTokenCount: estimatedNewTokenCount,

|

||||

originalTokenCount: 100,

|

||||

});

|

||||

// IMPORTANT: The change in client.ts means setLastPromptTokenCount is NOT called on failure

|

||||

expect(

|

||||

uiTelemetryService.setLastPromptTokenCount,

|

||||

@@ -586,13 +543,10 @@ describe('Gemini Client (client.ts)', () => {

|

||||

});

|

||||

|

||||

it('does not manipulate the source chat', async () => {

|

||||

// newTokenCount = 100 - (1010 - 1000) + 200 = 100 - 10 + 200 = 290 > 100 (inflation)

|

||||

const longSummary = 'long summary '.repeat(100);

|

||||

const { client, mockOriginalChat, estimatedNewTokenCount } = setup({

|

||||

originalTokenCount: 100,

|

||||

summaryText: longSummary,

|

||||

compressionInputTokenCount: 1010,

|

||||

compressionOutputTokenCount: 200,

|

||||

});

|

||||

expect(estimatedNewTokenCount).toBeGreaterThan(100); // Ensure setup is correct

|

||||

|

||||

@@ -603,13 +557,10 @@ describe('Gemini Client (client.ts)', () => {

|

||||

});

|

||||

|

||||

it('will not attempt to compress context after a failure', async () => {

|

||||

// newTokenCount = 100 - (1010 - 1000) + 200 = 100 - 10 + 200 = 290 > 100 (inflation)

|

||||

const longSummary = 'long summary '.repeat(100);

|

||||

const { client, estimatedNewTokenCount } = setup({

|

||||

originalTokenCount: 100,

|

||||

summaryText: longSummary,

|

||||

compressionInputTokenCount: 1010,

|

||||

compressionOutputTokenCount: 200,

|

||||

});

|

||||

expect(estimatedNewTokenCount).toBeGreaterThan(100); // Ensure setup is correct

|

||||

|

||||

@@ -680,7 +631,6 @@ describe('Gemini Client (client.ts)', () => {

|

||||

);

|

||||

|

||||

// Mock the summary response from the chat

|

||||

// newTokenCount = 501 - (1400 - 1000) + 50 = 501 - 400 + 50 = 151 <= 501 (success)

|

||||

const summaryText = 'This is a summary.';

|

||||

mockGenerateContentFn.mockResolvedValue({

|

||||

candidates: [

|

||||

@@ -691,11 +641,6 @@ describe('Gemini Client (client.ts)', () => {

|

||||

},

|

||||

},

|

||||

],

|

||||

usageMetadata: {

|

||||

promptTokenCount: 1400,

|

||||

candidatesTokenCount: 50,

|

||||

totalTokenCount: 1450,

|

||||

},

|

||||

} as unknown as GenerateContentResponse);

|

||||

|

||||

// Mock startChat to complete the compression flow

|

||||

@@ -774,8 +719,13 @@ describe('Gemini Client (client.ts)', () => {

|

||||

.fn()

|

||||

.mockResolvedValue(mockNewChat as GeminiChat);

|

||||

|

||||

const totalChars = newCompressedHistory.reduce(

|

||||

(total, content) => total + JSON.stringify(content).length,

|

||||

0,

|

||||

);

|

||||

const newTokenCount = Math.floor(totalChars / 4);

|

||||

|

||||

// Mock the summary response from the chat

|

||||

// newTokenCount = 501 - (1400 - 1000) + 50 = 501 - 400 + 50 = 151 <= 501 (success)

|

||||

mockGenerateContentFn.mockResolvedValue({

|

||||

candidates: [

|

||||

{

|

||||

@@ -785,11 +735,6 @@ describe('Gemini Client (client.ts)', () => {

|

||||

},

|

||||

},

|

||||

],

|

||||

usageMetadata: {

|

||||

promptTokenCount: 1400,

|

||||

candidatesTokenCount: 50,

|

||||

totalTokenCount: 1450,

|

||||

},

|

||||

} as unknown as GenerateContentResponse);

|

||||

|

||||

const initialChat = client.getChat();

|

||||

@@ -799,11 +744,12 @@ describe('Gemini Client (client.ts)', () => {

|

||||

expect(tokenLimit).toHaveBeenCalled();

|

||||

expect(mockGenerateContentFn).toHaveBeenCalled();

|

||||

|

||||

// Assert that summarization happened

|

||||

expect(result.compressionStatus).toBe(CompressionStatus.COMPRESSED);

|

||||

expect(result.originalTokenCount).toBe(originalTokenCount);

|

||||

// newTokenCount might be clamped to originalTokenCount due to tolerance logic

|

||||

expect(result.newTokenCount).toBeLessThanOrEqual(originalTokenCount);

|

||||

// Assert that summarization happened and returned the correct stats

|

||||

expect(result).toEqual({

|

||||

compressionStatus: CompressionStatus.COMPRESSED,

|

||||

originalTokenCount,

|

||||

newTokenCount,

|

||||

});

|

||||

|

||||

// Assert that the chat was reset

|

||||

expect(newChat).not.toBe(initialChat);

|

||||

@@ -863,8 +809,13 @@ describe('Gemini Client (client.ts)', () => {

|

||||

.fn()

|

||||

.mockResolvedValue(mockNewChat as GeminiChat);

|

||||

|

||||

const totalChars = newCompressedHistory.reduce(

|

||||

(total, content) => total + JSON.stringify(content).length,

|

||||

0,

|

||||

);

|

||||

const newTokenCount = Math.floor(totalChars / 4);

|

||||

|

||||

// Mock the summary response from the chat

|

||||

// newTokenCount = 700 - (1500 - 1000) + 50 = 700 - 500 + 50 = 250 <= 700 (success)

|

||||

mockGenerateContentFn.mockResolvedValue({

|

||||

candidates: [

|

||||

{

|

||||

@@ -874,11 +825,6 @@ describe('Gemini Client (client.ts)', () => {

|

||||

},

|

||||

},

|

||||

],

|

||||

usageMetadata: {

|

||||

promptTokenCount: 1500,

|

||||

candidatesTokenCount: 50,

|

||||

totalTokenCount: 1550,

|

||||

},

|

||||

} as unknown as GenerateContentResponse);

|

||||

|

||||

const initialChat = client.getChat();

|

||||

@@ -888,11 +834,12 @@ describe('Gemini Client (client.ts)', () => {

|

||||

expect(tokenLimit).toHaveBeenCalled();

|

||||

expect(mockGenerateContentFn).toHaveBeenCalled();

|

||||

|

||||

// Assert that summarization happened

|

||||

expect(result.compressionStatus).toBe(CompressionStatus.COMPRESSED);

|

||||

expect(result.originalTokenCount).toBe(originalTokenCount);

|

||||

// newTokenCount might be clamped to originalTokenCount due to tolerance logic

|

||||

expect(result.newTokenCount).toBeLessThanOrEqual(originalTokenCount);

|

||||

// Assert that summarization happened and returned the correct stats

|

||||

expect(result).toEqual({

|

||||

compressionStatus: CompressionStatus.COMPRESSED,

|

||||

originalTokenCount,

|

||||

newTokenCount,

|

||||

});

|

||||

// Assert that the chat was reset

|

||||

expect(newChat).not.toBe(initialChat);

|

||||

|

||||

@@ -940,8 +887,13 @@ describe('Gemini Client (client.ts)', () => {

|

||||

.fn()

|

||||

.mockResolvedValue(mockNewChat as GeminiChat);

|

||||

|

||||

const totalChars = newCompressedHistory.reduce(

|

||||

(total, content) => total + JSON.stringify(content).length,

|

||||

0,

|

||||

);

|

||||

const newTokenCount = Math.floor(totalChars / 4);

|

||||

|

||||

// Mock the summary response from the chat

|

||||

// newTokenCount = 100 - (1060 - 1000) + 20 = 100 - 60 + 20 = 60 <= 100 (success)

|

||||

mockGenerateContentFn.mockResolvedValue({

|

||||

candidates: [

|

||||

{

|

||||

@@ -951,11 +903,6 @@ describe('Gemini Client (client.ts)', () => {

|

||||

},

|

||||

},

|

||||

],

|

||||

usageMetadata: {

|

||||

promptTokenCount: 1060,

|

||||

candidatesTokenCount: 20,

|

||||

totalTokenCount: 1080,

|

||||

},

|

||||

} as unknown as GenerateContentResponse);

|

||||

|

||||

const initialChat = client.getChat();

|

||||

@@ -964,10 +911,11 @@ describe('Gemini Client (client.ts)', () => {

|

||||

|

||||

expect(mockGenerateContentFn).toHaveBeenCalled();

|

||||

|

||||

expect(result.compressionStatus).toBe(CompressionStatus.COMPRESSED);

|

||||

expect(result.originalTokenCount).toBe(originalTokenCount);

|

||||

// newTokenCount might be clamped to originalTokenCount due to tolerance logic

|

||||

expect(result.newTokenCount).toBeLessThanOrEqual(originalTokenCount);

|

||||

expect(result).toEqual({

|

||||

compressionStatus: CompressionStatus.COMPRESSED,

|

||||

originalTokenCount,

|

||||

newTokenCount,

|

||||

});

|

||||

|

||||

// Assert that the chat was reset

|

||||

expect(newChat).not.toBe(initialChat);

|

||||

|

||||

@@ -441,19 +441,47 @@ export class GeminiClient {

|

||||

yield { type: GeminiEventType.ChatCompressed, value: compressed };

|

||||

}

|

||||

|

||||

// Check session token limit after compression.

|

||||

// `lastPromptTokenCount` is treated as authoritative for the (possibly compressed) history;

|

||||

// Check session token limit after compression using accurate token counting

|

||||

const sessionTokenLimit = this.config.getSessionTokenLimit();

|

||||

if (sessionTokenLimit > 0) {

|

||||

const lastPromptTokenCount = uiTelemetryService.getLastPromptTokenCount();

|

||||

if (lastPromptTokenCount > sessionTokenLimit) {

|

||||

// Get all the content that would be sent in an API call

|

||||

const currentHistory = this.getChat().getHistory(true);

|

||||

const userMemory = this.config.getUserMemory();

|

||||

const systemPrompt = getCoreSystemPrompt(

|

||||

userMemory,

|

||||

this.config.getModel(),

|

||||

);

|

||||

const initialHistory = await getInitialChatHistory(this.config);

|

||||

|

||||

// Create a mock request content to count total tokens

|

||||

const mockRequestContent = [

|

||||

{

|

||||

role: 'system' as const,

|

||||

parts: [{ text: systemPrompt }],

|

||||

},

|

||||

...initialHistory,

|

||||

...currentHistory,

|

||||

];

|

||||

|

||||

// Use the improved countTokens method for accurate counting

|

||||

const { totalTokens: totalRequestTokens } = await this.config

|

||||

.getContentGenerator()

|

||||

.countTokens({

|

||||

model: this.config.getModel(),

|

||||

contents: mockRequestContent,

|

||||

});

|

||||

|

||||

if (

|

||||

totalRequestTokens !== undefined &&

|

||||

totalRequestTokens > sessionTokenLimit

|

||||

) {

|

||||

yield {

|

||||

type: GeminiEventType.SessionTokenLimitExceeded,

|

||||

value: {

|

||||

currentTokens: lastPromptTokenCount,

|

||||

currentTokens: totalRequestTokens,

|

||||

limit: sessionTokenLimit,

|

||||

message:

|

||||

`Session token limit exceeded: ${lastPromptTokenCount} tokens > ${sessionTokenLimit} limit. ` +

|

||||

`Session token limit exceeded: ${totalRequestTokens} tokens > ${sessionTokenLimit} limit. ` +

|

||||

'Please start a new session or increase the sessionTokenLimit in your settings.json.',

|

||||

},

|

||||

};

|

||||

|

||||

@@ -708,7 +708,7 @@ describe('GeminiChat', () => {

|

||||

|

||||

// Verify that token counting is called when usageMetadata is present

|

||||

expect(uiTelemetryService.setLastPromptTokenCount).toHaveBeenCalledWith(

|

||||

57,

|

||||

42,

|

||||

);

|

||||

expect(uiTelemetryService.setLastPromptTokenCount).toHaveBeenCalledTimes(

|

||||

1,

|

||||

|

||||

@@ -529,10 +529,10 @@ export class GeminiChat {

|

||||

// Collect token usage for consolidated recording

|

||||

if (chunk.usageMetadata) {

|

||||

usageMetadata = chunk.usageMetadata;

|

||||

const lastPromptTokenCount =

|

||||

usageMetadata.totalTokenCount ?? usageMetadata.promptTokenCount;

|

||||

if (lastPromptTokenCount) {

|

||||

uiTelemetryService.setLastPromptTokenCount(lastPromptTokenCount);

|

||||

if (chunk.usageMetadata.promptTokenCount !== undefined) {

|

||||

uiTelemetryService.setLastPromptTokenCount(

|

||||

chunk.usageMetadata.promptTokenCount,

|

||||

);

|

||||

}

|

||||

}

|

||||

|

||||

|

||||

@@ -22,7 +22,17 @@ const mockTokenizer = {

|

||||

};

|

||||

|

||||

vi.mock('../../../utils/request-tokenizer/index.js', () => ({

|

||||

RequestTokenEstimator: vi.fn(() => mockTokenizer),

|

||||

getDefaultTokenizer: vi.fn(() => mockTokenizer),

|

||||

DefaultRequestTokenizer: vi.fn(() => mockTokenizer),

|

||||

disposeDefaultTokenizer: vi.fn(),

|

||||

}));

|

||||

|

||||

// Mock tiktoken as well for completeness

|

||||

vi.mock('tiktoken', () => ({

|

||||

get_encoding: vi.fn(() => ({

|

||||

encode: vi.fn(() => new Array(50)), // Mock 50 tokens

|

||||

free: vi.fn(),

|

||||

})),

|

||||

}));

|

||||

|

||||

// Now import the modules that depend on the mocked modules

|

||||

@@ -124,7 +134,7 @@ describe('OpenAIContentGenerator (Refactored)', () => {

|

||||

});

|

||||

|

||||

describe('countTokens', () => {

|

||||

it('should count tokens using character-based estimation', async () => {

|

||||

it('should count tokens using tiktoken', async () => {

|

||||

const request: CountTokensParameters = {

|

||||

contents: [{ role: 'user', parts: [{ text: 'Hello world' }] }],

|

||||

model: 'gpt-4',

|

||||

@@ -132,27 +142,26 @@ describe('OpenAIContentGenerator (Refactored)', () => {

|

||||

|

||||

const result = await generator.countTokens(request);

|

||||

|

||||

// 'Hello world' = 11 ASCII chars

|

||||

// 11 / 4 = 2.75 -> ceil = 3 tokens

|

||||

expect(result.totalTokens).toBe(3);

|

||||

expect(result.totalTokens).toBe(50); // Mocked value

|

||||

});

|

||||

|

||||

it('should handle multimodal content', async () => {

|

||||

it('should fall back to character approximation if tiktoken fails', async () => {

|

||||

// Mock tiktoken to throw error

|

||||

vi.doMock('tiktoken', () => ({

|

||||

get_encoding: vi.fn().mockImplementation(() => {

|

||||

throw new Error('Tiktoken failed');

|

||||

}),

|

||||

}));

|

||||

|

||||

const request: CountTokensParameters = {

|

||||

contents: [

|

||||

{

|

||||

role: 'user',

|

||||

parts: [{ text: 'Hello' }, { text: ' world' }],

|

||||

},

|

||||

],

|

||||

contents: [{ role: 'user', parts: [{ text: 'Hello world' }] }],

|

||||

model: 'gpt-4',

|

||||

};

|

||||

|

||||

const result = await generator.countTokens(request);

|

||||

|

||||

// Parts are combined for estimation:

|

||||

// 'Hello world' = 11 ASCII chars -> 11/4 = 2.75 -> ceil = 3 tokens

|

||||

expect(result.totalTokens).toBe(3);

|

||||

// Should use character approximation (content length / 4)

|

||||

expect(result.totalTokens).toBeGreaterThan(0);

|

||||

});

|

||||

});

|

||||

|

||||

|

||||

@@ -12,7 +12,7 @@ import type {

|

||||

import type { PipelineConfig } from './pipeline.js';

|

||||

import { ContentGenerationPipeline } from './pipeline.js';

|

||||

import { EnhancedErrorHandler } from './errorHandler.js';

|

||||

import { RequestTokenEstimator } from '../../utils/request-tokenizer/index.js';

|

||||

import { getDefaultTokenizer } from '../../utils/request-tokenizer/index.js';

|

||||

import type { ContentGeneratorConfig } from '../contentGenerator.js';

|

||||

|

||||

export class OpenAIContentGenerator implements ContentGenerator {

|

||||

@@ -68,9 +68,11 @@ export class OpenAIContentGenerator implements ContentGenerator {

|

||||

request: CountTokensParameters,

|

||||

): Promise<CountTokensResponse> {

|

||||

try {

|

||||

// Use the request token estimator (character-based).

|

||||

const estimator = new RequestTokenEstimator();

|

||||

const result = await estimator.calculateTokens(request);

|

||||

// Use the new high-performance request tokenizer

|

||||

const tokenizer = getDefaultTokenizer();

|

||||

const result = await tokenizer.calculateTokens(request, {

|

||||

textEncoding: 'cl100k_base', // Use GPT-4 encoding for consistency

|

||||

});

|

||||

|

||||

return {

|

||||

totalTokens: result.totalTokens,

|

||||

|

||||

@@ -106,6 +106,15 @@ export const QWEN_OAUTH_MODELS: ModelConfig[] = [

|

||||

description:

|

||||

'The latest Qwen Coder model from Alibaba Cloud ModelStudio (version: qwen3-coder-plus-2025-09-23)',

|

||||

capabilities: { vision: false },

|

||||

generationConfig: {

|

||||

samplingParams: {

|

||||

temperature: 0.7,

|

||||

top_p: 0.9,

|

||||

max_tokens: 8192,

|

||||

},

|

||||

timeout: 60000,

|

||||

maxRetries: 3,

|

||||

},

|

||||

},

|

||||

{

|

||||

id: 'vision-model',

|

||||

@@ -113,5 +122,14 @@ export const QWEN_OAUTH_MODELS: ModelConfig[] = [

|

||||

description:

|

||||

'The latest Qwen Vision model from Alibaba Cloud ModelStudio (version: qwen3-vl-plus-2025-09-23)',

|

||||

capabilities: { vision: true },

|

||||

generationConfig: {

|

||||

samplingParams: {

|

||||

temperature: 0.7,

|

||||

top_p: 0.9,

|

||||

max_tokens: 8192,

|

||||

},

|

||||

timeout: 60000,

|

||||

maxRetries: 3,

|

||||

},

|

||||

},

|

||||

];

|

||||

|

||||

@@ -480,91 +480,6 @@ describe('ModelsConfig', () => {

|

||||

expect(gc.apiKeyEnvKey).toBeUndefined();

|

||||

});

|

||||

|

||||

it('should use default model for new authType when switching from different authType with env vars', () => {

|

||||

// Simulate cold start with OPENAI env vars (OPENAI_MODEL and OPENAI_API_KEY)

|

||||

// This sets the model in generationConfig but no authType is selected yet

|

||||

const modelsConfig = new ModelsConfig({

|

||||

generationConfig: {

|

||||

model: 'gpt-4o', // From OPENAI_MODEL env var

|

||||

apiKey: 'openai-key-from-env',

|

||||

},

|

||||

});

|

||||

|

||||

// User switches to qwen-oauth via AuthDialog

|

||||

// refreshAuth calls syncAfterAuthRefresh with the current model (gpt-4o)

|

||||

// which doesn't exist in qwen-oauth registry, so it should use default

|

||||

modelsConfig.syncAfterAuthRefresh(AuthType.QWEN_OAUTH, 'gpt-4o');

|

||||

|

||||

const gc = currentGenerationConfig(modelsConfig);

|

||||

// Should use default qwen-oauth model (coder-model), not the OPENAI model

|

||||

expect(gc.model).toBe('coder-model');

|

||||

expect(gc.apiKey).toBe('QWEN_OAUTH_DYNAMIC_TOKEN');

|

||||

expect(gc.apiKeyEnvKey).toBeUndefined();

|

||||

});

|

||||

|

||||

it('should clear manual credentials when switching from USE_OPENAI to QWEN_OAUTH', () => {

|

||||

// User manually set credentials for OpenAI

|

||||

const modelsConfig = new ModelsConfig({

|

||||

initialAuthType: AuthType.USE_OPENAI,

|

||||

generationConfig: {

|

||||

model: 'gpt-4o',

|

||||

apiKey: 'manual-openai-key',

|

||||

baseUrl: 'https://manual.example.com/v1',

|

||||

},

|

||||

});

|

||||

|

||||

// Manually set credentials via updateCredentials

|

||||

modelsConfig.updateCredentials({

|

||||

apiKey: 'manual-openai-key',

|

||||

baseUrl: 'https://manual.example.com/v1',

|

||||

model: 'gpt-4o',

|

||||

});

|

||||

|

||||

// User switches to qwen-oauth

|

||||

// Since authType is not USE_OPENAI, manual credentials should be cleared

|

||||

// and default qwen-oauth model should be applied

|

||||

modelsConfig.syncAfterAuthRefresh(AuthType.QWEN_OAUTH, 'gpt-4o');

|

||||

|

||||

const gc = currentGenerationConfig(modelsConfig);

|

||||

// Should use default qwen-oauth model, not preserve manual OpenAI credentials

|

||||

expect(gc.model).toBe('coder-model');

|

||||

expect(gc.apiKey).toBe('QWEN_OAUTH_DYNAMIC_TOKEN');

|

||||

// baseUrl should be set to qwen-oauth default, not preserved from manual OpenAI config

|

||||

expect(gc.baseUrl).toBe('DYNAMIC_QWEN_OAUTH_BASE_URL');

|

||||

expect(gc.apiKeyEnvKey).toBeUndefined();

|

||||

});

|

||||

|

||||

it('should preserve manual credentials when switching to USE_OPENAI', () => {

|

||||

// User manually set credentials

|

||||

const modelsConfig = new ModelsConfig({

|

||||

initialAuthType: AuthType.USE_OPENAI,

|

||||

generationConfig: {

|

||||

model: 'gpt-4o',

|

||||

apiKey: 'manual-openai-key',

|

||||

baseUrl: 'https://manual.example.com/v1',

|

||||

samplingParams: { temperature: 0.9 },

|

||||

},

|

||||

});

|

||||

|

||||

// Manually set credentials via updateCredentials

|

||||

modelsConfig.updateCredentials({

|

||||

apiKey: 'manual-openai-key',

|

||||

baseUrl: 'https://manual.example.com/v1',

|

||||

model: 'gpt-4o',

|

||||

});

|

||||

|

||||

// User switches to USE_OPENAI (same or different model)

|

||||

// Since authType is USE_OPENAI, manual credentials should be preserved

|

||||

modelsConfig.syncAfterAuthRefresh(AuthType.USE_OPENAI, 'gpt-4o');

|

||||

|

||||

const gc = currentGenerationConfig(modelsConfig);

|

||||

// Should preserve manual credentials

|

||||

expect(gc.model).toBe('gpt-4o');

|

||||

expect(gc.apiKey).toBe('manual-openai-key');

|

||||

expect(gc.baseUrl).toBe('https://manual.example.com/v1');

|

||||

expect(gc.samplingParams?.temperature).toBe(0.9); // Preserved from initial config

|

||||

});

|

||||

|

||||

it('should maintain consistency between currentModelId and _generationConfig.model after initialization', () => {

|

||||

const modelProvidersConfig: ModelProvidersConfig = {

|

||||

openai: [

|

||||

|

||||

@@ -600,7 +600,7 @@ export class ModelsConfig {

|

||||

|

||||

// If credentials were manually set, don't apply modelProvider defaults

|

||||

// Just update the authType and preserve the manually set credentials

|

||||

if (preserveManualCredentials && authType === AuthType.USE_OPENAI) {

|

||||

if (preserveManualCredentials) {

|

||||

this.strictModelProviderSelection = false;

|

||||

this.currentAuthType = authType;

|

||||

if (modelId) {

|

||||

@@ -621,17 +621,7 @@ export class ModelsConfig {

|

||||

this.applyResolvedModelDefaults(resolved);

|

||||

}

|

||||

} else {

|

||||

// If the provided modelId doesn't exist in the registry for the new authType,

|

||||

// use the default model for that authType instead of keeping the old model.

|

||||

// This handles the case where switching from one authType (e.g., OPENAI with

|

||||

// env vars) to another (e.g., qwen-oauth) - we should use the default model

|

||||

// for the new authType, not the old model.

|

||||

this.currentAuthType = authType;

|

||||

const defaultModel =

|

||||

this.modelRegistry.getDefaultModelForAuthType(authType);

|

||||

if (defaultModel) {

|

||||

this.applyResolvedModelDefaults(defaultModel);

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

|

||||

@@ -559,109 +559,6 @@ export async function getQwenOAuthClient(

|

||||

}

|

||||

}

|

||||

|

||||

/**

|

||||

* Displays a formatted box with OAuth device authorization URL.

|

||||

* Uses process.stderr.write() to bypass ConsolePatcher and ensure the auth URL

|

||||

* is always visible to users, especially in non-interactive mode.

|

||||

* Using stderr prevents corruption of structured JSON output (which goes to stdout)

|

||||

* and follows the standard Unix convention of user-facing messages to stderr.

|

||||

*/

|

||||

function showFallbackMessage(verificationUriComplete: string): void {

|

||||

const title = 'Qwen OAuth Device Authorization';

|

||||

const url = verificationUriComplete;

|

||||

const minWidth = 70;

|

||||

const maxWidth = 80;

|

||||

const boxWidth = Math.min(Math.max(title.length + 4, minWidth), maxWidth);

|

||||

|

||||

// Calculate the width needed for the box (account for padding)

|

||||

const contentWidth = boxWidth - 4; // Subtract 2 spaces and 2 border chars

|

||||

|

||||

// Helper to wrap text to fit within box width

|

||||

const wrapText = (text: string, width: number): string[] => {

|

||||

// For URLs, break at any character if too long

|

||||

if (text.startsWith('http://') || text.startsWith('https://')) {

|

||||

const lines: string[] = [];

|

||||

for (let i = 0; i < text.length; i += width) {

|

||||

lines.push(text.substring(i, i + width));

|

||||

}

|

||||

return lines;

|

||||

}

|

||||

|

||||

// For regular text, break at word boundaries

|

||||

const words = text.split(' ');

|

||||

const lines: string[] = [];

|

||||

let currentLine = '';

|

||||

|

||||

for (const word of words) {

|

||||

if (currentLine.length + word.length + 1 <= width) {

|

||||

currentLine += (currentLine ? ' ' : '') + word;

|

||||

} else {

|

||||

if (currentLine) {

|

||||

lines.push(currentLine);

|

||||

}

|

||||

currentLine = word.length > width ? word.substring(0, width) : word;

|

||||

}

|

||||

}

|

||||

if (currentLine) {

|

||||

lines.push(currentLine);

|

||||

}

|

||||

return lines;

|

||||

};

|

||||

|

||||

// Build the box borders with title centered in top border

|

||||

// Format: +--- Title ---+

|

||||

const titleWithSpaces = ' ' + title + ' ';

|

||||

const totalDashes = boxWidth - 2 - titleWithSpaces.length; // Subtract corners and title

|

||||

const leftDashes = Math.floor(totalDashes / 2);

|

||||

const rightDashes = totalDashes - leftDashes;

|

||||

const topBorder =

|

||||

'+' +

|

||||

'-'.repeat(leftDashes) +

|

||||

titleWithSpaces +

|

||||

'-'.repeat(rightDashes) +

|

||||

'+';

|

||||

const emptyLine = '|' + ' '.repeat(boxWidth - 2) + '|';

|

||||

const bottomBorder = '+' + '-'.repeat(boxWidth - 2) + '+';

|

||||

|

||||

// Build content lines

|

||||

const instructionLines = wrapText(

|

||||

'Please visit the following URL in your browser to authorize:',

|

||||

contentWidth,

|

||||

);

|

||||

const urlLines = wrapText(url, contentWidth);

|

||||

const waitingLine = 'Waiting for authorization to complete...';

|

||||

|

||||

// Write the box

|

||||

process.stderr.write('\n' + topBorder + '\n');

|

||||

process.stderr.write(emptyLine + '\n');

|

||||

|

||||

// Write instructions

|

||||

for (const line of instructionLines) {

|

||||

process.stderr.write(

|

||||

'| ' + line + ' '.repeat(contentWidth - line.length) + ' |\n',

|

||||

);

|

||||

}

|

||||

|

||||

process.stderr.write(emptyLine + '\n');

|

||||

|

||||

// Write URL

|

||||

for (const line of urlLines) {

|

||||

process.stderr.write(

|

||||

'| ' + line + ' '.repeat(contentWidth - line.length) + ' |\n',

|

||||

);

|

||||

}

|

||||

|

||||

process.stderr.write(emptyLine + '\n');

|

||||

|

||||

// Write waiting message

|

||||

process.stderr.write(

|

||||

'| ' + waitingLine + ' '.repeat(contentWidth - waitingLine.length) + ' |\n',

|

||||

);

|

||||

|

||||

process.stderr.write(emptyLine + '\n');

|

||||

process.stderr.write(bottomBorder + '\n\n');

|

||||

}

|

||||

|

||||

async function authWithQwenDeviceFlow(

|

||||

client: QwenOAuth2Client,

|

||||

config: Config,

|

||||

@@ -674,50 +571,6 @@ async function authWithQwenDeviceFlow(

|

||||

};

|

||||

qwenOAuth2Events.once(QwenOAuth2Event.AuthCancel, cancelHandler);

|

||||

|

||||

// Helper to check cancellation and return appropriate result

|

||||

const checkCancellation = (): AuthResult | null => {

|

||||

if (!isCancelled) {

|

||||

return null;

|

||||

}

|

||||

const message = 'Authentication cancelled by user.';

|

||||

console.debug('\n' + message);

|

||||

qwenOAuth2Events.emit(QwenOAuth2Event.AuthProgress, 'error', message);

|

||||

return { success: false, reason: 'cancelled', message };

|

||||

};

|

||||

|

||||

// Helper to emit auth progress events

|

||||

const emitAuthProgress = (

|

||||

status: 'polling' | 'success' | 'error' | 'timeout' | 'rate_limit',

|

||||

message: string,

|

||||

): void => {

|

||||

qwenOAuth2Events.emit(QwenOAuth2Event.AuthProgress, status, message);

|

||||

};

|

||||

|

||||

// Helper to handle browser launch with error handling

|

||||

const launchBrowser = async (url: string): Promise<void> => {

|

||||

try {

|

||||

const childProcess = await open(url);

|

||||

|

||||

// IMPORTANT: Attach an error handler to the returned child process.

|

||||

// Without this, if `open` fails to spawn a process (e.g., `xdg-open` is not found

|

||||

// in a minimal Docker container), it will emit an unhandled 'error' event,

|

||||

// causing the entire Node.js process to crash.

|

||||

if (childProcess) {

|

||||

childProcess.on('error', (err) => {

|

||||

console.debug(

|

||||

'Browser launch failed:',

|

||||

err.message || 'Unknown error',

|

||||

);

|

||||

});

|

||||

}

|

||||

} catch (err) {

|

||||

console.debug(

|

||||

'Failed to open browser:',

|

||||

err instanceof Error ? err.message : 'Unknown error',

|

||||

);

|

||||

}

|

||||

};

|

||||

|

||||

try {

|

||||

// Generate PKCE code verifier and challenge

|

||||

const { code_verifier, code_challenge } = generatePKCEPair();

|

||||

@@ -740,18 +593,56 @@ async function authWithQwenDeviceFlow(

|

||||

// Emit device authorization event for UI integration immediately

|

||||

qwenOAuth2Events.emit(QwenOAuth2Event.AuthUri, deviceAuth);

|

||||

|

||||

const showFallbackMessage = () => {

|

||||

console.log('\n=== Qwen OAuth Device Authorization ===');

|

||||

console.log(

|

||||

'Please visit the following URL in your browser to authorize:',

|

||||

);

|

||||

console.log(`\n${deviceAuth.verification_uri_complete}\n`);

|

||||

console.log('Waiting for authorization to complete...\n');

|

||||

};

|

||||

|

||||

// Always show the fallback message in non-interactive environments to ensure

|

||||

// users can see the authorization URL even if browser launching is attempted.

|

||||

// This is critical for headless/remote environments where browser launching

|

||||

// may silently fail without throwing an error.

|

||||

showFallbackMessage(deviceAuth.verification_uri_complete);

|

||||

if (config.isBrowserLaunchSuppressed()) {

|

||||

// Browser launch is suppressed, show fallback message

|

||||

showFallbackMessage();

|

||||

} else {

|

||||

// Try to open the URL in browser, but always show the URL as fallback

|

||||

// to handle cases where browser launch silently fails (e.g., headless servers)

|

||||

showFallbackMessage();

|

||||

try {

|

||||

const childProcess = await open(deviceAuth.verification_uri_complete);

|

||||

|

||||

// Try to open browser if not suppressed

|

||||

if (!config.isBrowserLaunchSuppressed()) {

|

||||

await launchBrowser(deviceAuth.verification_uri_complete);

|

||||

// IMPORTANT: Attach an error handler to the returned child process.

|

||||

// Without this, if `open` fails to spawn a process (e.g., `xdg-open` is not found

|

||||

// in a minimal Docker container), it will emit an unhandled 'error' event,

|

||||

// causing the entire Node.js process to crash.

|

||||

if (childProcess) {

|

||||

childProcess.on('error', (err) => {

|

||||

console.debug(

|

||||

'Browser launch failed:',

|

||||

err.message || 'Unknown error',

|

||||

);

|

||||

});

|

||||

}

|

||||

} catch (err) {

|

||||

console.debug(

|

||||

'Failed to open browser:',

|

||||

err instanceof Error ? err.message : 'Unknown error',

|

||||

);

|

||||

}

|

||||

}

|

||||

|

||||

emitAuthProgress('polling', 'Waiting for authorization...');

|

||||

// Emit auth progress event

|

||||

qwenOAuth2Events.emit(

|

||||

QwenOAuth2Event.AuthProgress,

|

||||

'polling',

|

||||

'Waiting for authorization...',

|

||||

);

|

||||

|

||||

console.debug('Waiting for authorization...\n');

|

||||

|

||||

// Poll for the token

|

||||

@@ -762,9 +653,11 @@ async function authWithQwenDeviceFlow(

|

||||

|

||||

for (let attempt = 0; attempt < maxAttempts; attempt++) {

|

||||

// Check if authentication was cancelled

|

||||

const cancellationResult = checkCancellation();

|

||||

if (cancellationResult) {

|

||||

return cancellationResult;

|

||||

if (isCancelled) {

|

||||

const message = 'Authentication cancelled by user.';

|

||||

console.debug('\n' + message);

|

||||

qwenOAuth2Events.emit(QwenOAuth2Event.AuthProgress, 'error', message);

|

||||

return { success: false, reason: 'cancelled', message };

|

||||

}

|

||||

|

||||

try {

|

||||

@@ -807,7 +700,9 @@ async function authWithQwenDeviceFlow(

|

||||

// minimal stub; cache invalidation is best-effort and should not break auth.

|

||||

}

|

||||

|

||||

emitAuthProgress(

|

||||

// Emit auth progress success event

|

||||

qwenOAuth2Events.emit(

|

||||

QwenOAuth2Event.AuthProgress,

|

||||

'success',

|

||||

'Authentication successful! Access token obtained.',

|

||||

);

|

||||

@@ -830,7 +725,9 @@ async function authWithQwenDeviceFlow(

|

||||

pollInterval = 2000; // Reset to default interval

|

||||

}

|

||||

|

||||

emitAuthProgress(

|

||||

// Emit polling progress event

|

||||

qwenOAuth2Events.emit(

|

||||

QwenOAuth2Event.AuthProgress,

|

||||

'polling',

|

||||

`Polling... (attempt ${attempt + 1}/${maxAttempts})`,

|

||||

);

|

||||

@@ -860,9 +757,15 @@ async function authWithQwenDeviceFlow(

|

||||

});

|

||||

|

||||

// Check for cancellation after waiting

|

||||

const cancellationResult = checkCancellation();

|

||||

if (cancellationResult) {

|

||||

return cancellationResult;

|

||||

if (isCancelled) {

|

||||

const message = 'Authentication cancelled by user.';

|

||||

console.debug('\n' + message);

|

||||

qwenOAuth2Events.emit(

|

||||

QwenOAuth2Event.AuthProgress,

|

||||

'error',

|

||||

message,

|

||||

);

|

||||

return { success: false, reason: 'cancelled', message };

|

||||

}

|

||||

|

||||

continue;

|

||||

@@ -890,17 +793,15 @@ async function authWithQwenDeviceFlow(

|

||||

message: string,

|

||||

eventType: 'error' | 'rate_limit' = 'error',

|

||||

): AuthResult => {

|

||||

emitAuthProgress(eventType, message);

|

||||

qwenOAuth2Events.emit(

|

||||

QwenOAuth2Event.AuthProgress,

|

||||

eventType,

|

||||

message,

|

||||

);

|

||||

console.error('\n' + message);

|

||||

return { success: false, reason, message };

|

||||

};

|

||||

|

||||

// Check for cancellation first

|

||||

const cancellationResult = checkCancellation();

|

||||

if (cancellationResult) {

|

||||

return cancellationResult;

|

||||

}

|

||||

|

||||

// Handle credential caching failures - stop polling immediately

|

||||

if (errorMessage.includes('Failed to cache credentials')) {

|

||||

return handleError('error', errorMessage);

|

||||

@@ -924,14 +825,26 @@ async function authWithQwenDeviceFlow(

|

||||

}

|

||||

|

||||

const message = `Error polling for token: ${errorMessage}`;

|

||||

emitAuthProgress('error', message);

|

||||

qwenOAuth2Events.emit(QwenOAuth2Event.AuthProgress, 'error', message);

|

||||

|

||||

if (isCancelled) {

|

||||

const message = 'Authentication cancelled by user.';

|

||||

return { success: false, reason: 'cancelled', message };

|

||||

}

|

||||

|

||||

await new Promise((resolve) => setTimeout(resolve, pollInterval));

|

||||

}

|

||||

}

|

||||

|

||||

const timeoutMessage = 'Authorization timeout, please restart the process.';

|

||||

emitAuthProgress('timeout', timeoutMessage);

|

||||

|

||||

// Emit timeout error event

|

||||

qwenOAuth2Events.emit(

|

||||

QwenOAuth2Event.AuthProgress,

|

||||

'timeout',

|

||||

timeoutMessage,

|

||||

);

|

||||

|

||||

console.error('\n' + timeoutMessage);

|

||||

return { success: false, reason: 'timeout', message: timeoutMessage };

|

||||

} catch (error: unknown) {

|

||||

@@ -940,7 +853,7 @@ async function authWithQwenDeviceFlow(

|

||||

});

|

||||

const message = `Device authorization flow failed: ${fullErrorMessage}`;

|

||||

|

||||

emitAuthProgress('error', message);

|

||||

qwenOAuth2Events.emit(QwenOAuth2Event.AuthProgress, 'error', message);

|

||||

console.error(message);

|

||||

return { success: false, reason: 'error', message };

|

||||

} finally {

|

||||

|

||||

@@ -15,11 +15,13 @@ import { uiTelemetryService } from '../telemetry/uiTelemetry.js';

|

||||

import { tokenLimit } from '../core/tokenLimits.js';

|

||||

import type { GeminiChat } from '../core/geminiChat.js';

|

||||

import type { Config } from '../config/config.js';

|

||||

import { getInitialChatHistory } from '../utils/environmentContext.js';

|

||||

import type { ContentGenerator } from '../core/contentGenerator.js';

|

||||

|

||||