mirror of

https://github.com/QwenLM/qwen-code.git

synced 2025-12-24 18:49:13 +00:00

Compare commits

107 Commits

docs-fix

...

release/v0

| Author | SHA1 | Date | |
|---|---|---|---|
| | 01da2e3620 | | |
| | bc2a7efcb3 | | |
| | 1dfd880e17 | | |
| | 10a0c843c1 | | |
| | 955547d523 | | |
| | 3bc862df89 | | |
| | 642dda0315 | | |
| | bbbdeb280d | | |
| | 0d43ddee2a | | |
| | 50e03f2dd6 | | |
| | f440ff2f7f | | |
| | 9a6b0abc37 | | |
| | 00547ba439 | | |
| | fc1dac9dc7 | | |
| | 338eb9038d | | |
| | 87d8d82be7 | | |
| | e0b9044833 | | |
| | f33f43e2f7 | | |
| | fefc138485 | | |
| | 4e7929850c | | |
| | 9cc5c3ed8f | | |
| | a92be72e88 | | |
| | 52cd1da4a7 | | |
| | c5c556a326 | | |
| | a8a863581b | | |
| | e4468cfcbc | | |
| | 3bf30ead67 | | |
| | a786f61e49 | | |
| | b8a16d362a | | |
| | 17129024f4 | | |
| | fa7d857945 | | |
| | 90489933fd | | |
| | 3354b56a05 | | |
| | d40447cee4 | | |
| | ba87cf63f6 | | |
| | 00a8c6a924 | | |
| | 156134d3d4 | | |
| | b720209888 | | |
| | dfe667c364 | | |
| | 1386fba278 | | |
| | d942250905 | | |
| | ec32a24508 | | |
| | c2b59038ae | | |
| | 27bf42b4f5 | | |
| | d07ae35c90 | | |
| | cb59b5a9dc | | |
| | 01e62a2120 | | |
| | d464f61b72 | | |
| | f866f7f071 | | |
| | 7eabf543b4 | | |
| | 8106a6b0f4 | | |
| | c0839dceac | | |
| | 12f84fb730 | | |
| | e274b4469a | | |
| | 0a39c91264 | | |
| | 8fd7490d8f | | |
| | 4f1766e19a | | |
| | bf52c6db0f | | |
| | 9267677d38 | | |
| | fb8412a96a | | |
| | 2837aa6b7c | | |
| | 49b3e0dc92 | | |
| | 25d9c4f1a7 | | |
| | 9942b2b877 | | |
| | 850c52dc79 | | |
| | 61e378644e | | |
| | bd3bdd82ea | | |
| | fc58291c5c | | |
| | 633148b257 | | |
| | f9da1b819e | | |
| | f47c762620 | | |
| | 573c33f68a | | |
| | 32c085cf7d | | |
| | 725843f9b3 | | |
| | 07fb6faf5f | | |
| | 1956507d90 | | |
| | 54fd63f04b | | |
| | 59c3d3d0f9 | | |
| | 5d94763581 | | |
| | 5bd1822b7d | | |
| | 65392a057d | | |
| | 3b9d38a325 | | |
| | 4930a24d07 | | |
| | 7a97fcd5f1 | | |
| | 4504c7a0ac | | |
| | 56a62bcb2a | | |
| | 1098c23b26 | | |
| | e76f47512c | | |
| | f5c868702b | | |
| | 0d40cf2213 | | |
| | 12877ac849 | | |
| | 2de50ae436 | | |
| | a761be80a5 | | |
| | 6c77303172 | | |
| | 4f2b2d0a3e | | |
| | 44794121a8 | | |
| | bf905dcc17 | | |
| | 95d3e5b744 | | |
| | 6d3cf4cd98 | | |
| | 68295d0bbf | | |
| | 84cccfe99a | | |
| | b6a3ab11e0 | | |
| | 2f0fa267c8 | | |
| | fa6ae0a324 | | |
| | 387be44866 | | |
| | 51b82771da | | |
| | 629cd14fad | | |

9

.github/workflows/e2e.yml

vendored

@@ -18,8 +18,6 @@ jobs:

|

||||

- 'sandbox:docker'

|

||||

node-version:

|

||||

- '20.x'

|

||||

- '22.x'

|

||||

- '24.x'

|

||||

steps:

|

||||

- name: 'Checkout'

|

||||

uses: 'actions/checkout@08c6903cd8c0fde910a37f88322edcfb5dd907a8' # ratchet:actions/checkout@v5

|

||||

@@ -67,10 +65,13 @@ jobs:

|

||||

OPENAI_BASE_URL: '${{ secrets.OPENAI_BASE_URL }}'

|

||||

OPENAI_MODEL: '${{ secrets.OPENAI_MODEL }}'

|

||||

KEEP_OUTPUT: 'true'

|

||||

SANDBOX: '${{ matrix.sandbox }}'

|

||||

VERBOSE: 'true'

|

||||

run: |-

|

||||

npm run "test:integration:${SANDBOX}"

|

||||

if [[ "${{ matrix.sandbox }}" == "sandbox:docker" ]]; then

|

||||

npm run test:integration:sandbox:docker

|

||||

else

|

||||

npm run test:integration:sandbox:none

|

||||

fi

|

||||

|

||||

e2e-test-macos:

|

||||

name: 'E2E Test - macOS'

|

||||

|

||||

12

.github/workflows/release-sdk.yml

vendored

@@ -121,6 +121,11 @@ jobs:

|

||||

IS_PREVIEW: '${{ steps.vars.outputs.is_preview }}'

|

||||

MANUAL_VERSION: '${{ inputs.version }}'

|

||||

|

||||

- name: 'Build CLI Bundle'

|

||||

run: |

|

||||

npm run build

|

||||

npm run bundle

|

||||

|

||||

- name: 'Run Tests'

|

||||

if: |-

|

||||

${{ github.event.inputs.force_skip_tests != 'true' }}

|

||||

@@ -132,13 +137,6 @@ jobs:

|

||||

OPENAI_BASE_URL: '${{ secrets.OPENAI_BASE_URL }}'

|

||||

OPENAI_MODEL: '${{ secrets.OPENAI_MODEL }}'

|

||||

|

||||

- name: 'Build CLI for Integration Tests'

|

||||

if: |-

|

||||

${{ github.event.inputs.force_skip_tests != 'true' }}

|

||||

run: |

|

||||

npm run build

|

||||

npm run bundle

|

||||

|

||||

- name: 'Run SDK Integration Tests'

|

||||

if: |-

|

||||

${{ github.event.inputs.force_skip_tests != 'true' }}

|

||||

|

||||

4

.github/workflows/release.yml

vendored

@@ -133,8 +133,8 @@ jobs:

|

||||

${{ github.event.inputs.force_skip_tests != 'true' }}

|

||||

run: |

|

||||

npm run preflight

|

||||

npm run test:integration:sandbox:none

|

||||

npm run test:integration:sandbox:docker

|

||||

npm run test:integration:cli:sandbox:none

|

||||

npm run test:integration:cli:sandbox:docker

|

||||

env:

|

||||

OPENAI_API_KEY: '${{ secrets.OPENAI_API_KEY }}'

|

||||

OPENAI_BASE_URL: '${{ secrets.OPENAI_BASE_URL }}'

|

||||

|

||||

110

CONTRIBUTING.md

@@ -2,27 +2,6 @@

|

||||

|

||||

We would love to accept your patches and contributions to this project.

|

||||

|

||||

## Before you begin

|

||||

|

||||

### Sign our Contributor License Agreement

|

||||

|

||||

Contributions to this project must be accompanied by a

|

||||

[Contributor License Agreement](https://cla.developers.google.com/about) (CLA).

|

||||

You (or your employer) retain the copyright to your contribution; this simply

|

||||

gives us permission to use and redistribute your contributions as part of the

|

||||

project.

|

||||

|

||||

If you or your current employer have already signed the Google CLA (even if it

|

||||

was for a different project), you probably don't need to do it again.

|

||||

|

||||

Visit <https://cla.developers.google.com/> to see your current agreements or to

|

||||

sign a new one.

|

||||

|

||||

### Review our Community Guidelines

|

||||

|

||||

This project follows [Google's Open Source Community

|

||||

Guidelines](https://opensource.google/conduct/).

|

||||

|

||||

## Contribution Process

|

||||

|

||||

### Code Reviews

|

||||

@@ -74,12 +53,6 @@ Your PR should have a clear, descriptive title and a detailed description of the

|

||||

|

||||

In the PR description, explain the "why" behind your changes and link to the relevant issue (e.g., `Fixes #123`).

|

||||

|

||||

## Forking

|

||||

|

||||

If you are forking the repository you will be able to run the Build, Test and Integration test workflows. However, to make the integration tests run you'll need to add a [GitHub Repository Secret](https://docs.github.com/en/actions/security-for-github-actions/security-guides/using-secrets-in-github-actions#creating-secrets-for-a-repository) named `GEMINI_API_KEY` and set it to a valid API key that you have available. Your key and secret are private to your repo; no one without access can see your key and you cannot see any secrets related to this repo.

|

||||

|

||||

Additionally, you will need to click on the `Actions` tab and enable workflows for your repository; it's the large blue button in the center of the screen.

|

||||

|

||||

## Development Setup and Workflow

|

||||

|

||||

This section guides contributors on how to build, modify, and understand the development setup of this project.

|

||||

@@ -98,8 +71,8 @@ This section guides contributors on how to build, modify, and understand the dev

|

||||

To clone the repository:

|

||||

|

||||

```bash

|

||||

git clone https://github.com/google-gemini/gemini-cli.git # Or your fork's URL

|

||||

cd gemini-cli

|

||||

git clone https://github.com/QwenLM/qwen-code.git # Or your fork's URL

|

||||

cd qwen-code

|

||||

```

|

||||

|

||||

To install dependencies defined in `package.json` as well as root dependencies:

|

||||

@@ -118,9 +91,9 @@ This command typically compiles TypeScript to JavaScript, bundles assets, and pr

|

||||

|

||||

### Enabling Sandboxing

|

||||

|

||||

[Sandboxing](#sandboxing) is highly recommended and requires, at a minimum, setting `GEMINI_SANDBOX=true` in your `~/.env` and ensuring a sandboxing provider (e.g. `macOS Seatbelt`, `docker`, or `podman`) is available. See [Sandboxing](#sandboxing) for details.

|

||||

[Sandboxing](#sandboxing) is highly recommended and requires, at a minimum, setting `QWEN_SANDBOX=true` in your `~/.env` and ensuring a sandboxing provider (e.g. `macOS Seatbelt`, `docker`, or `podman`) is available. See [Sandboxing](#sandboxing) for details.

|

||||

|

||||

To build both the `gemini` CLI utility and the sandbox container, run `build:all` from the root directory:

|

||||

To build both the `qwen-code` CLI utility and the sandbox container, run `build:all` from the root directory:

|

||||

|

||||

```bash

|

||||

npm run build:all

|

||||

@@ -130,13 +103,13 @@ To skip building the sandbox container, you can use `npm run build` instead.

|

||||

|

||||

### Running

|

||||

|

||||

To start the Gemini CLI from the source code (after building), run the following command from the root directory:

|

||||

To start the Qwen Code application from the source code (after building), run the following command from the root directory:

|

||||

|

||||

```bash

|

||||

npm start

|

||||

```

|

||||

|

||||

If you'd like to run the source build outside of the gemini-cli folder, you can utilize `npm link path/to/gemini-cli/packages/cli` (see: [docs](https://docs.npmjs.com/cli/v9/commands/npm-link)) or `alias gemini="node path/to/gemini-cli/packages/cli"` to run with `gemini`

|

||||

If you'd like to run the source build outside of the qwen-code folder, you can utilize `npm link path/to/qwen-code/packages/cli` (see: [docs](https://docs.npmjs.com/cli/v9/commands/npm-link)) to run with `qwen-code`

|

||||

|

||||

### Running Tests

|

||||

|

||||

@@ -154,7 +127,7 @@ This will run tests located in the `packages/core` and `packages/cli` directorie

|

||||

|

||||

#### Integration Tests

|

||||

|

||||

The integration tests are designed to validate the end-to-end functionality of the Gemini CLI. They are not run as part of the default `npm run test` command.

|

||||

The integration tests are designed to validate the end-to-end functionality of Qwen Code. They are not run as part of the default `npm run test` command.

|

||||

|

||||

To run the integration tests, use the following command:

|

||||

|

||||

@@ -209,19 +182,61 @@ npm run lint

|

||||

### Coding Conventions

|

||||

|

||||

- Please adhere to the coding style, patterns, and conventions used throughout the existing codebase.

|

||||

- Consult [QWEN.md](https://github.com/QwenLM/qwen-code/blob/main/QWEN.md) (typically found in the project root) for specific instructions related to AI-assisted development, including conventions for React, comments, and Git usage.

|

||||

- **Imports:** Pay special attention to import paths. The project uses ESLint to enforce restrictions on relative imports between packages.

|

||||

|

||||

### Project Structure

|

||||

|

||||

- `packages/`: Contains the individual sub-packages of the project.

|

||||

- `cli/`: The command-line interface.

|

||||

- `core/`: The core backend logic for the Gemini CLI.

|

||||

- `core/`: The core backend logic for Qwen Code.

|

||||

- `docs/`: Contains all project documentation.

|

||||

- `scripts/`: Utility scripts for building, testing, and development tasks.

|

||||

|

||||

For more detailed architecture, see `docs/architecture.md`.

|

||||

|

||||

## Documentation Development

|

||||

|

||||

This section describes how to develop and preview the documentation locally.

|

||||

|

||||

### Prerequisites

|

||||

|

||||

1. Ensure you have Node.js (version 18+) installed

|

||||

2. Have npm or yarn available

|

||||

|

||||

### Setup Documentation Site Locally

|

||||

|

||||

To work on the documentation and preview changes locally:

|

||||

|

||||

1. Navigate to the `docs-site` directory:

|

||||

|

||||

```bash

|

||||

cd docs-site

|

||||

```

|

||||

|

||||

2. Install dependencies:

|

||||

|

||||

```bash

|

||||

npm install

|

||||

```

|

||||

|

||||

3. Link the documentation content from the main `docs` directory:

|

||||

|

||||

```bash

|

||||

npm run link

|

||||

```

|

||||

|

||||

This creates a symbolic link from `../docs` to `content` in the docs-site project, allowing the documentation content to be served by the Next.js site.

|

||||

|

||||

4. Start the development server:

|

||||

|

||||

```bash

|

||||

npm run dev

|

||||

```

|

||||

|

||||

5. Open [http://localhost:3000](http://localhost:3000) in your browser to see the documentation site with live updates as you make changes.

|

||||

|

||||

Any changes made to the documentation files in the main `docs` directory will be reflected immediately in the documentation site.

|

||||

|

||||

## Debugging

|

||||

|

||||

### VS Code:

|

||||

@@ -231,7 +246,7 @@ For more detailed architecture, see `docs/architecture.md`.

|

||||

```bash

|

||||

npm run debug

|

||||

```

|

||||

This command runs `node --inspect-brk dist/gemini.js` within the `packages/cli` directory, pausing execution until a debugger attaches. You can then open `chrome://inspect` in your Chrome browser to connect to the debugger.

|

||||

This command runs `node --inspect-brk dist/index.js` within the `packages/cli` directory, pausing execution until a debugger attaches. You can then open `chrome://inspect` in your Chrome browser to connect to the debugger.

|

||||

2. In VS Code, use the "Attach" launch configuration (found in `.vscode/launch.json`).

|

||||

|

||||

Alternatively, you can use the "Launch Program" configuration in VS Code if you prefer to launch the currently open file directly, but 'F5' is generally recommended.

|

||||

@@ -239,16 +254,16 @@ Alternatively, you can use the "Launch Program" configuration in VS Code if you

|

||||

To hit a breakpoint inside the sandbox container run:

|

||||

|

||||

```bash

|

||||

DEBUG=1 gemini

|

||||

DEBUG=1 qwen-code

|

||||

```

|

||||

|

||||

**Note:** If you have `DEBUG=true` in a project's `.env` file, it won't affect gemini-cli due to automatic exclusion. Use `.gemini/.env` files for gemini-cli specific debug settings.

|

||||

**Note:** If you have `DEBUG=true` in a project's `.env` file, it won't affect qwen-code due to automatic exclusion. Use `.qwen-code/.env` files for qwen-code specific debug settings.

|

||||

|

||||

### React DevTools

|

||||

|

||||

To debug the CLI's React-based UI, you can use React DevTools. Ink, the library used for the CLI's interface, is compatible with React DevTools version 4.x.

|

||||

|

||||

1. **Start the Gemini CLI in development mode:**

|

||||

1. **Start the Qwen Code application in development mode:**

|

||||

|

||||

```bash

|

||||

DEV=true npm start

|

||||

@@ -270,23 +285,10 @@ To debug the CLI's React-based UI, you can use React DevTools. Ink, the library

|

||||

```

|

||||

|

||||

Your running CLI application should then connect to React DevTools.

|

||||

|

||||

|

||||

## Sandboxing

|

||||

|

||||

### macOS Seatbelt

|

||||

|

||||

On macOS, `qwen` uses Seatbelt (`sandbox-exec`) under a `permissive-open` profile (see `packages/cli/src/utils/sandbox-macos-permissive-open.sb`) that restricts writes to the project folder but otherwise allows all other operations and outbound network traffic ("open") by default. You can switch to a `restrictive-closed` profile (see `packages/cli/src/utils/sandbox-macos-restrictive-closed.sb`) that declines all operations and outbound network traffic ("closed") by default by setting `SEATBELT_PROFILE=restrictive-closed` in your environment or `.env` file. Available built-in profiles are `{permissive,restrictive}-{open,closed,proxied}` (see below for proxied networking). You can also switch to a custom profile `SEATBELT_PROFILE=<profile>` if you also create a file `.qwen/sandbox-macos-<profile>.sb` under your project settings directory `.qwen`.
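
The profile lookup described above can be sketched in TypeScript as follows. This is only an illustration of the rule as stated in the paragraph: the helper names `builtInProfiles` and `seatbeltProfilePath` are hypothetical, and the CLI's actual resolution logic may differ.

```typescript
// Hypothetical helpers: they illustrate the lookup rule described above,
// not the CLI's actual implementation.
const MODES = ["permissive", "restrictive"];
const NETWORKS = ["open", "closed", "proxied"];

// Expand {permissive,restrictive}-{open,closed,proxied} into six names.
function builtInProfiles(): string[] {
  const names: string[] = [];
  for (const mode of MODES) {
    for (const network of NETWORKS) {
      names.push(`${mode}-${network}`);
    }
  }
  return names;
}

// Map a SEATBELT_PROFILE value to the .sb file it refers to.
// The default mirrors the documented default profile, "permissive-open".
function seatbeltProfilePath(profile: string = "permissive-open"): string {
  if (builtInProfiles().indexOf(profile) !== -1) {
    // Built-in profiles ship with the CLI package.
    return `packages/cli/src/utils/sandbox-macos-${profile}.sb`;
  }
  // Custom profiles live in the project settings directory.
  return `.qwen/sandbox-macos-${profile}.sb`;
}
```

For example, `seatbeltProfilePath("restrictive-closed")` resolves to the built-in file under `packages/cli/src/utils/`, while an unrecognized name such as `"custom"` resolves to `.qwen/sandbox-macos-custom.sb`.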

|

||||

|

||||

### Container-based Sandboxing (All Platforms)

|

||||

|

||||

For stronger container-based sandboxing on macOS or other platforms, you can set `GEMINI_SANDBOX=true|docker|podman|<command>` in your environment or `.env` file. The specified command (or if `true` then either `docker` or `podman`) must be installed on the host machine. Once enabled, `npm run build:all` will build a minimal container ("sandbox") image and `npm start` will launch inside a fresh instance of that container. The first build can take 20-30s (mostly due to downloading of the base image) but after that both build and start overhead should be minimal. Default builds (`npm run build`) will not rebuild the sandbox.

|

||||

|

||||

Container-based sandboxing mounts the project directory (and system temp directory) with read-write access and is started/stopped/removed automatically as you start/stop Gemini CLI. Files created within the sandbox should be automatically mapped to your user/group on host machine. You can easily specify additional mounts, ports, or environment variables by setting `SANDBOX_{MOUNTS,PORTS,ENV}` as needed. You can also fully customize the sandbox for your projects by creating the files `.qwen/sandbox.Dockerfile` and/or `.qwen/sandbox.bashrc` under your project settings directory (`.qwen`) and running `qwen` with `BUILD_SANDBOX=1` to trigger building of your custom sandbox.

|

||||

|

||||

#### Proxied Networking

|

||||

|

||||

All sandboxing methods, including macOS Seatbelt using `*-proxied` profiles, support restricting outbound network traffic through a custom proxy server that can be specified as `GEMINI_SANDBOX_PROXY_COMMAND=<command>`, where `<command>` must start a proxy server that listens on `:::8877` for relevant requests. See `docs/examples/proxy-script.md` for a minimal proxy that only allows `HTTPS` connections to `example.com:443` (e.g. `curl https://example.com`) and declines all other requests. The proxy is started and stopped automatically alongside the sandbox.

|

||||

> TBD

|

||||

|

||||

## Manual Publish

|

||||

|

||||

|

||||

10

Makefile

@@ -1,9 +1,9 @@

|

||||

# Makefile for gemini-cli

|

||||

# Makefile for qwen-code

|

||||

|

||||

.PHONY: help install build build-sandbox build-all test lint format preflight clean start debug release run-npx create-alias

|

||||

|

||||

help:

|

||||

@echo "Makefile for gemini-cli"

|

||||

@echo "Makefile for qwen-code"

|

||||

@echo ""

|

||||

@echo "Usage:"

|

||||

@echo " make install - Install npm dependencies"

|

||||

@@ -14,11 +14,11 @@ help:

|

||||

@echo " make format - Format the code"

|

||||

@echo " make preflight - Run formatting, linting, and tests"

|

||||

@echo " make clean - Remove generated files"

|

||||

@echo " make start - Start the Gemini CLI"

|

||||

@echo " make debug - Start the Gemini CLI in debug mode"

|

||||

@echo " make start - Start the Qwen Code CLI"

|

||||

@echo " make debug - Start the Qwen Code CLI in debug mode"

|

||||

@echo ""

|

||||

@echo " make run-npx - Run the CLI using npx (for testing the published package)"

|

||||

@echo " make create-alias - Create a 'gemini' alias for your shell"

|

||||

@echo " make create-alias - Create a 'qwen' alias for your shell"

|

||||

|

||||

install:

|

||||

npm install

|

||||

|

||||

181

README.md

@@ -1,108 +1,150 @@

|

||||

# Qwen Code

|

||||

|

||||

<div align="center">

|

||||

|

||||

|

||||

|

||||

[](https://www.npmjs.com/package/@qwen-code/qwen-code)

|

||||

[](./LICENSE)

|

||||

[](https://nodejs.org/)

|

||||

[](https://www.npmjs.com/package/@qwen-code/qwen-code)

|

||||

|

||||

**AI-powered command-line workflow tool for developers**

|

||||

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/zh/">中文</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/de/">Deutsch</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/fr">français</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/ja/">日本語</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/ru">Русский</a>

|

||||

|

||||

[Installation](#install-from-npm) • [Quick Start](#-quick-start) • [Features](#-why-qwen-code) • [Documentation](https://qwenlm.github.io/qwen-code-docs/en/users/overview/) • [Contributing](https://qwenlm.github.io/qwen-code-docs/en/developers/contributing/)

|

||||

**An open-source AI agent that lives in your terminal.**

|

||||

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/zh/users/overview">中文</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/de/users/overview">Deutsch</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/fr/users/overview">français</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/ja/users/overview">日本語</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/ru/users/overview">Русский</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/pt-BR/users/overview">Português (Brasil)</a>

|

||||

|

||||

</div>

|

||||

|

||||

Qwen Code is a powerful command-line AI workflow tool adapted from [**Gemini CLI**](https://github.com/google-gemini/gemini-cli), specifically optimized for [Qwen3-Coder](https://github.com/QwenLM/Qwen3-Coder) models. It enhances your development workflow with advanced code understanding, automated tasks, and intelligent assistance.

|

||||

Qwen Code is an open-source AI agent for the terminal, optimized for [Qwen3-Coder](https://github.com/QwenLM/Qwen3-Coder). It helps you understand large codebases, automate tedious work, and ship faster.

|

||||

|

||||

|

||||

|

||||

## Why Qwen Code?

|

||||

|

||||

## 📌 Why Qwen Code?

|

||||

- **OpenAI-compatible, OAuth free tier**: use an OpenAI-compatible API, or sign in with Qwen OAuth to get 2,000 free requests/day.

|

||||

- **Open-source, co-evolving**: both the framework and the Qwen3-Coder model are open-source—and they ship and evolve together.

|

||||

- **Agentic workflow, feature-rich**: rich built-in tools (Skills, SubAgents, Plan Mode) for a full agentic workflow and a Claude Code-like experience.

|

||||

- **Terminal-first, IDE-friendly**: built for developers who live in the command line, with optional integration for VS Code and Zed.

|

||||

|

||||

- 🎯 Free Access Available: Get started with 2,000 free requests per day via Qwen OAuth.

|

||||

- 🧠 Code Understanding & Editing - Query and edit large codebases beyond traditional context window limits

|

||||

- 🤖 Workflow Automation - Automate operational tasks like handling pull requests and complex rebases

|

||||

- 💻 Terminal-first: Designed for developers who live in the command line.

|

||||

- 🧰 VS Code: Install the VS Code extension to seamlessly integrate into your existing workflow.

|

||||

- 📦 Simple Setup: Easy installation with npm, Homebrew, or source for quick deployment.

|

||||

## Installation

|

||||

|

||||

>👉 Know more [workflows](https://qwenlm.github.io/qwen-code-docs/en/users/common-workflow/)

|

||||

>

|

||||

> 📦 The extension is currently in development. For installation, features, and development guide, see the [VS Code Extension README](./packages/vscode-ide-companion/README.md).

|

||||

|

||||

|

||||

## ❓ How to use Qwen Code?

|

||||

|

||||

### Prerequisites

|

||||

|

||||

Ensure you have [Node.js version 20](https://nodejs.org/en/download) or higher installed.

|

||||

#### Prerequisites

|

||||

|

||||

```bash

|

||||

# Node.js 20+

|

||||

curl -qL https://www.npmjs.com/install.sh | sh

|

||||

```

|

||||

|

||||

### Install from npm

|

||||

#### NPM (recommended)

|

||||

|

||||

```bash

|

||||

npm install -g @qwen-code/qwen-code@latest

|

||||

```

|

||||

|

||||

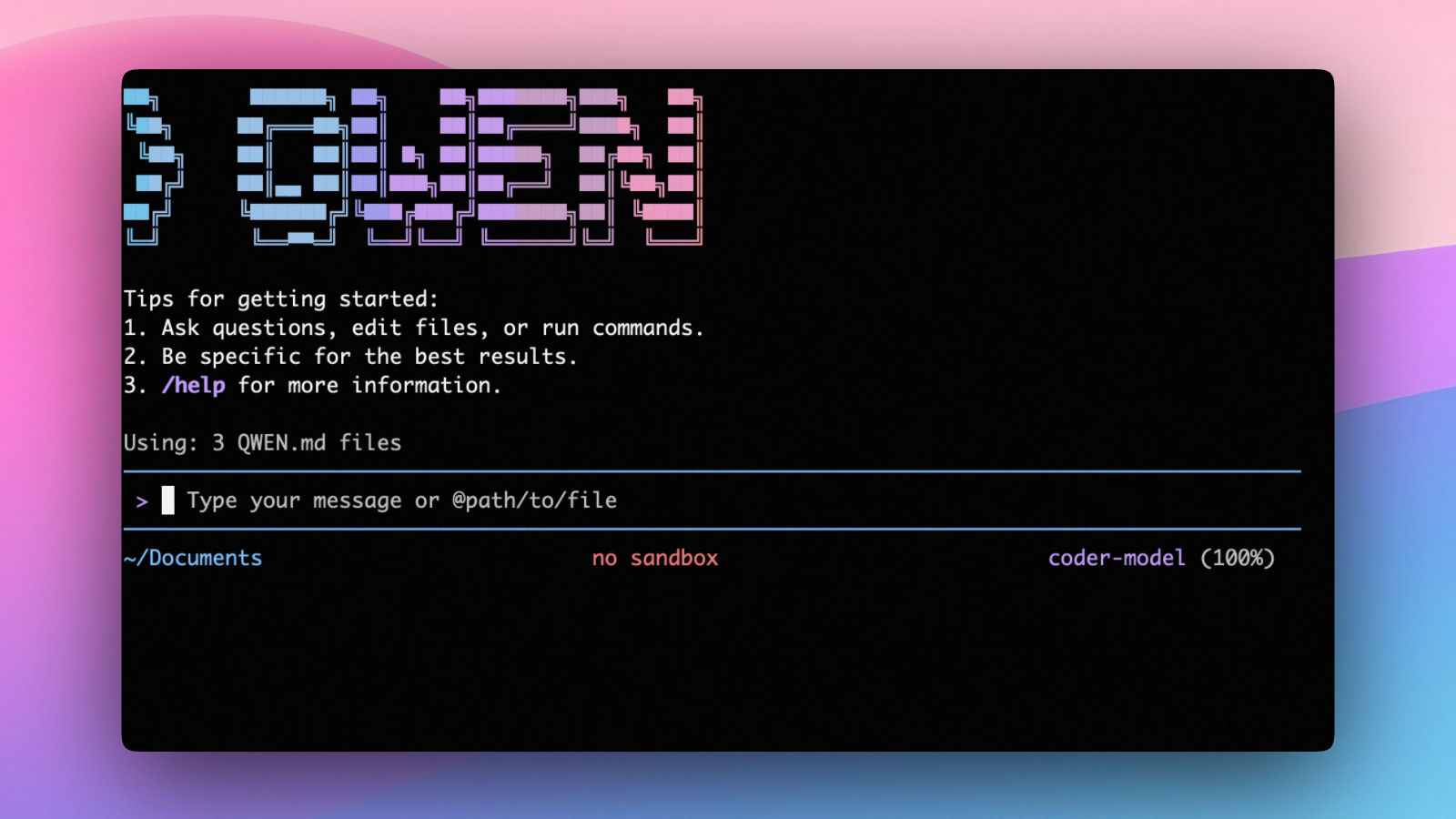

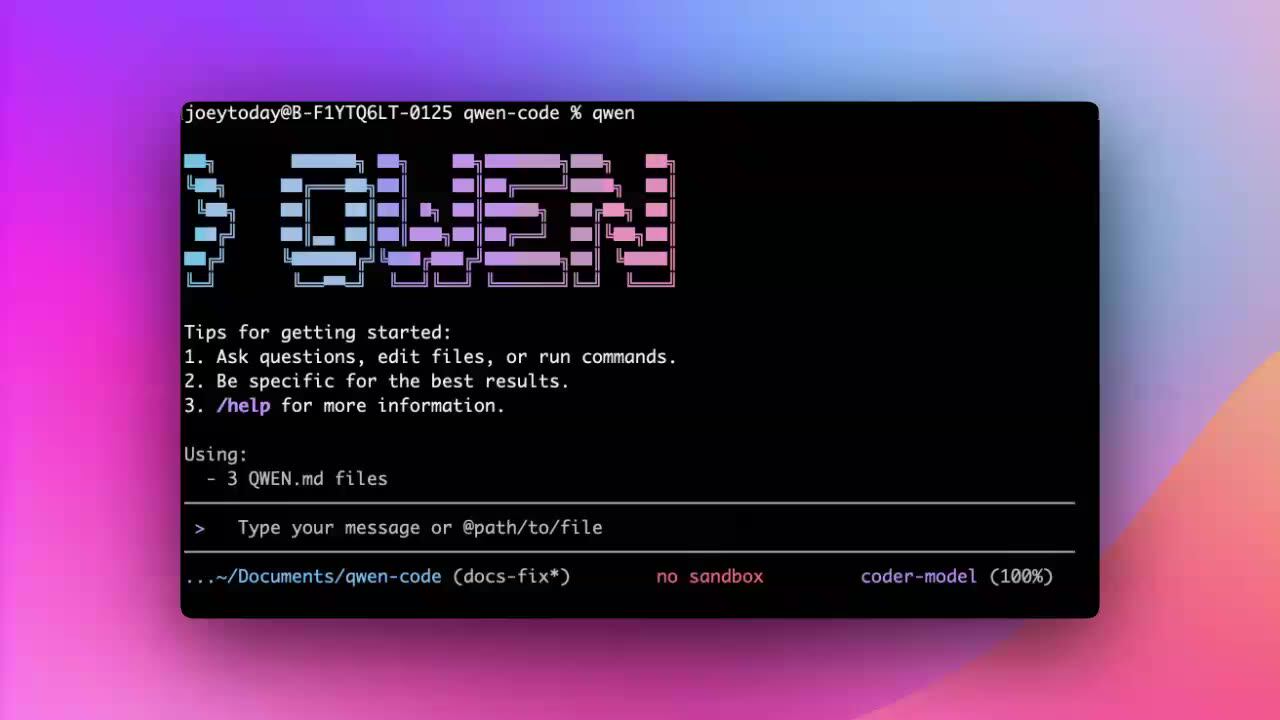

## 🚀 Quick Start

|

||||

#### Homebrew (macOS, Linux)

|

||||

|

||||

```bash

|

||||

# Start Qwen Code

|

||||

qwen

|

||||

|

||||

# Example commands

|

||||

> What does this project do?

|

||||

> Explain this codebase structure

|

||||

> Help me refactor this function

|

||||

> Generate unit tests for this module

|

||||

brew install qwen-code

|

||||

```

|

||||

|

||||

👇 Click to play video

|

||||

## Quick Start

|

||||

|

||||

[](https://cloud.video.taobao.com/vod/HLfyppnCHplRV9Qhz2xSqeazHeRzYtG-EYJnHAqtzkQ.mp4)

|

||||

```bash

|

||||

# Start Qwen Code (interactive)

|

||||

qwen

|

||||

|

||||

# Then, in the session:

|

||||

/help

|

||||

/auth

|

||||

```

|

||||

|

||||

## Usage Examples

|

||||

On first use, you'll be prompted to sign in. You can run `/auth` anytime to switch authentication methods.

|

||||

|

||||

### 1️⃣ Interactive Mode

|

||||

Example prompts:

|

||||

|

||||

```text

|

||||

What does this project do?

|

||||

Explain the codebase structure.

|

||||

Help me refactor this function.

|

||||

Generate unit tests for this module.

|

||||

```

|

||||

|

||||

<details>

|

||||

<summary>Click to watch a demo video</summary>

|

||||

|

||||

<video src="https://cloud.video.taobao.com/vod/HLfyppnCHplRV9Qhz2xSqeazHeRzYtG-EYJnHAqtzkQ.mp4" controls>

|

||||

Your browser does not support the video tag.

|

||||

</video>

|

||||

|

||||

</details>

|

||||

|

||||

## Authentication

|

||||

|

||||

Qwen Code supports two authentication methods:

|

||||

|

||||

- **Qwen OAuth (recommended & free)**: sign in with your `qwen.ai` account in a browser.

|

||||

- **OpenAI-compatible API**: use `OPENAI_API_KEY` (and optionally a custom base URL / model).

|

||||

|

||||

#### Qwen OAuth (recommended)

|

||||

|

||||

Start `qwen`, then run:

|

||||

|

||||

```bash

|

||||

/auth

|

||||

```

|

||||

|

||||

Choose **Qwen OAuth** and complete the browser flow. Your credentials are cached locally so you usually won't need to log in again.

|

||||

|

||||

#### OpenAI-compatible API (API key)

|

||||

|

||||

Environment variables (recommended for CI / headless environments):

|

||||

|

||||

```bash

|

||||

export OPENAI_API_KEY="your-api-key-here"

|

||||

export OPENAI_BASE_URL="https://api.openai.com/v1" # optional

|

||||

export OPENAI_MODEL="gpt-4o" # optional

|

||||

```

|

||||

|

||||

For details (including `.qwen/.env` loading and security notes), see the [authentication guide](https://qwenlm.github.io/qwen-code-docs/en/users/configuration/auth/).

|

||||

|

||||

## Usage

|

||||

|

||||

Qwen Code is an open-source terminal agent that you can use in four primary ways:

|

||||

|

||||

1. Interactive mode (terminal UI)

|

||||

2. Headless mode (scripts, CI)

|

||||

3. IDE integration (VS Code, Zed)

|

||||

4. TypeScript SDK

|

||||

|

||||

#### Interactive mode

|

||||

|

||||

```bash

|

||||

cd your-project/

|

||||

qwen

|

||||

```

|

||||

|

||||

Navigate to your project folder and type `qwen` to launch Qwen Code. Start a conversation and use `@` to reference files within the folder.

|

||||

Run `qwen` in your project folder to launch the interactive terminal UI. Use `@` to reference local files (for example `@src/main.ts`).

|

||||

|

||||

If you want to learn more about common workflows, click [Common Workflows](https://qwenlm.github.io/qwen-code-docs/en/users/common-workflow/) to view.

|

||||

|

||||

### 2️⃣ Headless Mode

|

||||

#### Headless mode

|

||||

|

||||

```bash

|

||||

cd your-project/

|

||||

qwen -p "your question"

|

||||

```

|

||||

[Headless mode](https://qwenlm.github.io/qwen-code-docs/en/users/features/headless) allows you to run Qwen Code programmatically from command line scripts and automation tools without any interactive UI. This is ideal for scripting, automation, CI/CD pipelines, and building AI-powered tools.

|

||||

|

||||

### 3️⃣ Use in IDE

|

||||

If you prefer to integrate Qwen Code into your current editor, we now support VS Code and Zed. For details, please refer to:

|

||||

Use `-p` to run Qwen Code without the interactive UI—ideal for scripts, automation, and CI/CD. Learn more: [Headless mode](https://qwenlm.github.io/qwen-code-docs/en/users/features/headless).

|

||||

|

||||

#### IDE integration

|

||||

|

||||

Use Qwen Code inside your editor (VS Code and Zed):

|

||||

|

||||

- [Use in VS Code](https://qwenlm.github.io/qwen-code-docs/en/users/integration-vscode/)

|

||||

- [Use in Zed](https://qwenlm.github.io/qwen-code-docs/en/users/integration-zed/)

|

||||

|

||||

### 4️⃣ SDK

|

||||

Qwen Code now supports an SDK designed to simplify integration with the Qwen Code platform. It provides a set of easy-to-use APIs and tools enabling developers to efficiently build, test, and deploy applications. For details, please refer to:

|

||||

#### TypeScript SDK

|

||||

|

||||

Build on top of Qwen Code with the TypeScript SDK:

|

||||

|

||||

- [Use the Qwen Code SDK](./packages/sdk-typescript/README.md)

|

||||

|

||||

@@ -114,6 +156,7 @@ Qwen Code now supports an SDK designed to simplify integration with the Qwen Cod

|

||||

- `/clear` - Clear conversation history

|

||||

- `/compress` - Compress history to save tokens

|

||||

- `/stats` - Show current session information

|

||||

- `/bug` - Submit a bug report

|

||||

- `/exit` or `/quit` - Exit Qwen Code

|

||||

|

||||

### Keyboard Shortcuts

|

||||

@@ -122,10 +165,18 @@ Qwen Code now supports an SDK designed to simplify integration with the Qwen Cod

|

||||

- `Ctrl+D` - Exit (on empty line)

|

||||

- `Up/Down` - Navigate command history

|

||||

|

||||

|

||||

> 👉 Know more about [Commands](https://qwenlm.github.io/qwen-code-docs/en/users/features/commands/)

|

||||

> Learn more about [Commands](https://qwenlm.github.io/qwen-code-docs/en/users/features/commands/)

|

||||

>

|

||||

> 💡 **Tip**: In YOLO mode (`--yolo`), vision switching happens automatically without prompts when images are detected. Know more about [Approval Mode](https://qwenlm.github.io/qwen-code-docs/en/users/features/approval-mode/)

|

||||

> **Tip**: In YOLO mode (`--yolo`), vision switching happens automatically without prompts when images are detected. Learn more about [Approval Mode](https://qwenlm.github.io/qwen-code-docs/en/users/features/approval-mode/)

|

||||

|

||||

## Configuration

|

||||

|

||||

Qwen Code can be configured via `settings.json`, environment variables, and CLI flags.

|

||||

|

||||

- **User settings**: `~/.qwen/settings.json`

|

||||

- **Project settings**: `.qwen/settings.json`

|

||||

|

||||

See [settings](https://qwenlm.github.io/qwen-code-docs/en/users/configuration/settings/) for available options and precedence.

|

||||

|

||||

## Benchmark Results

|

||||

|

||||

@@ -136,24 +187,18 @@ Qwen Code now supports an SDK designed to simplify integration with the Qwen Cod

|

||||

| Qwen Code | Qwen3-Coder-480A35 | 37.5% |

|

||||

| Qwen Code | Qwen3-Coder-30BA3B | 31.3% |

|

||||

|

||||

## Development & Contributing

|

||||

## Ecosystem

|

||||

|

||||

See [CONTRIBUTING.md](https://qwenlm.github.io/qwen-code-docs/en/developers/contributing/) to learn how to contribute to the project.

|

||||

Looking for a graphical interface?

|

||||

|

||||

For detailed authentication setup, see the [authentication guide](https://qwenlm.github.io/qwen-code-docs/en/users/configuration/auth/).

|

||||

- [**Gemini CLI Desktop**](https://github.com/Piebald-AI/gemini-cli-desktop): a cross-platform desktop/web/mobile UI for Qwen Code

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

If you encounter issues, check the [troubleshooting guide](https://qwenlm.github.io/qwen-code-docs/en/users/support/troubleshooting/).

|

||||

|

||||

To report a bug from within the CLI, run `/bug` and include a short title and repro steps.

|

||||

|

||||

## Acknowledgments

|

||||

|

||||

This project is based on [Google Gemini CLI](https://github.com/google-gemini/gemini-cli). We acknowledge and appreciate the excellent work of the Gemini CLI team. Our main contribution focuses on parser-level adaptations to better support Qwen-Coder models.

|

||||

|

||||

## License

|

||||

|

||||

[LICENSE](./LICENSE)

|

||||

|

||||

## Star History

|

||||

|

||||

[](https://www.star-history.com/#QwenLM/qwen-code&Date)

|

||||

|

||||

@@ -627,7 +627,12 @@ The MCP integration tracks several states:

|

||||

|

||||

### Schema Compatibility

|

||||

|

||||

- **Property stripping:** The system automatically removes certain schema properties (`$schema`, `additionalProperties`) for Qwen API compatibility

|

||||

- **Schema compliance mode:** By default (`schemaCompliance: "auto"`), tool schemas are passed through as-is. Set `"model": { "generationConfig": { "schemaCompliance": "openapi_30" } }` in your `settings.json` to convert tool schemas to strict OpenAPI 3.0 format.

|

||||

- **OpenAPI 3.0 transformations:** When `openapi_30` mode is enabled, the system handles:

|

||||

- Nullable types: `["string", "null"]` -> `type: "string", nullable: true`

|

||||

- Const values: `const: "foo"` -> `enum: ["foo"]`

|

||||

- Exclusive limits: numeric `exclusiveMinimum` -> boolean form with `minimum`

|

||||

- Keyword removal: `$schema`, `$id`, `dependencies`, `patternProperties`

|

||||

- **Name sanitization:** Tool names are automatically sanitized to meet API requirements

|

||||

- **Conflict resolution:** Tool name conflicts between servers are resolved through automatic prefixing
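The `openapi_30` transformations listed above can be pictured with a small sketch. This is only an illustration of the documented rewrites, not the code Qwen Code actually runs: the names `toOpenApi30`, `JsonSchema`, and `REMOVED_KEYWORDS` are hypothetical, and handling `exclusiveMaximum` symmetrically with `exclusiveMinimum` is an assumption.

```typescript
// Illustrative sketch only: the function and constant names are hypothetical,
// not Qwen Code's internal API.
type JsonSchema = { [key: string]: unknown };

// Keywords the list above names under "Keyword removal".
const REMOVED_KEYWORDS = ['$schema', '$id', 'dependencies', 'patternProperties'];

function toOpenApi30(schema: JsonSchema): JsonSchema {
  // Copy every keyword except the removed ones.
  const out: JsonSchema = {};
  for (const key of Object.keys(schema)) {
    if (REMOVED_KEYWORDS.indexOf(key) === -1) out[key] = schema[key];
  }

  // Nullable types: ["string", "null"] -> type: "string", nullable: true
  const type = out.type;
  if (Array.isArray(type)) {
    const nonNull = type.filter((t) => t !== 'null');
    if (nonNull.length === 1 && nonNull.length < type.length) {
      out.type = nonNull[0];
      out.nullable = true;
    }
  }

  // Const values: const: "foo" -> enum: ["foo"]
  if ('const' in out) {
    out.enum = [out.const];
    delete out.const;
  }

  // Exclusive limits: numeric exclusiveMinimum -> minimum plus a boolean flag.
  if (typeof out.exclusiveMinimum === 'number') {
    out.minimum = out.exclusiveMinimum;
    out.exclusiveMinimum = true;
  }
  // Assumption: exclusiveMaximum is treated the same way.
  if (typeof out.exclusiveMaximum === 'number') {
    out.maximum = out.exclusiveMaximum;
    out.exclusiveMaximum = true;
  }

  // Recurse into nested property schemas.
  const props = out.properties;
  if (props !== null && typeof props === 'object' && !Array.isArray(props)) {
    const source = props as { [name: string]: JsonSchema };
    const converted: { [name: string]: JsonSchema } = {};
    for (const name of Object.keys(source)) {
      converted[name] = toOpenApi30(source[name]);
    }
    out.properties = converted;
  }
  return out;
}
```

Property names inside `properties` are left untouched; only schema keywords are removed, so a tool parameter that happens to be called `dependencies` would survive the conversion in this sketch.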

|

||||

|

||||

|

||||

@@ -5,6 +5,7 @@ Welcome to the Qwen Code documentation. Qwen Code is an agentic coding tool that

|

||||

## Documentation Sections

|

||||

|

||||

### [User Guide](./users/overview)

|

||||

|

||||

Learn how to use Qwen Code as an end user. This section covers:

|

||||

|

||||

- Basic installation and setup

|

||||

|

||||

@@ -20,9 +20,9 @@ You can update your `.qwenignore` file at any time. To apply the changes, you mu

|

||||

|

||||

## How to use `.qwenignore`

|

||||

|

||||

| Step | Description |

|

||||

| ---------------------- | ------------------------------------------------------------ |

|

||||

| **Enable .qwenignore** | Create a file named `.qwenignore` in your project root directory |

|

||||

| Step | Description |

|

||||

| ---------------------- | -------------------------------------------------------------------------------------- |

|

||||

| **Enable .qwenignore** | Create a file named `.qwenignore` in your project root directory |

|

||||

| **Add ignore rules** | Open the `.qwenignore` file and add the paths to ignore, for example `/archive/` or `apikeys.txt` |

|

||||

|

||||

### `.qwenignore` examples

|

||||

|

||||

@@ -50,13 +50,14 @@ Settings are organized into categories. All settings should be placed within the

|

||||

|

||||

#### general

|

||||

|

||||

| Setting | Type | Description | Default |

|

||||

| ------------------------------- | ------- | ------------------------------------------ | ----------- |

|

||||

| `general.preferredEditor` | string | The preferred editor to open files in. | `undefined` |

|

||||

| `general.vimMode` | boolean | Enable Vim keybindings. | `false` |

|

||||

| `general.disableAutoUpdate` | boolean | Disable automatic updates. | `false` |

|

||||

| `general.disableUpdateNag` | boolean | Disable update notification prompts. | `false` |

|

||||

| `general.checkpointing.enabled` | boolean | Enable session checkpointing for recovery. | `false` |

|

||||

| Setting | Type | Description | Default |
| ------------------------------- | ------- | ---------------------------------------------------------------------------------------------------------- | ----------- |
| `general.preferredEditor` | string | The preferred editor to open files in. | `undefined` |
| `general.vimMode` | boolean | Enable Vim keybindings. | `false` |
| `general.disableAutoUpdate` | boolean | Disable automatic updates. | `false` |
| `general.disableUpdateNag` | boolean | Disable update notification prompts. | `false` |
| `general.gitCoAuthor` | boolean | Automatically add a Co-authored-by trailer to git commit messages when commits are made through Qwen Code. | `true` |
| `general.checkpointing.enabled` | boolean | Enable session checkpointing for recovery. | `false` |
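As a rough illustration of how the `general` settings and their defaults fit together, the sketch below models the table as a TypeScript type and fills in the documented defaults for anything left unset. The type and function names (`GeneralSettings`, `withGeneralDefaults`) are hypothetical, and the nesting of `checkpointing.enabled` is inferred from the dotted key names rather than taken from the Qwen Code source.

```typescript
// Hypothetical model of the `general` category; field names follow the table above.
interface GeneralSettings {
  preferredEditor?: string; // documented default: undefined
  vimMode: boolean;
  disableAutoUpdate: boolean;
  disableUpdateNag: boolean;
  gitCoAuthor: boolean;
  checkpointing: { enabled: boolean };
}

// User-supplied overrides: every field, including checkpointing.enabled, is optional.
type GeneralOverrides = Partial<Omit<GeneralSettings, 'checkpointing'>> & {
  checkpointing?: { enabled?: boolean };
};

// Fill in the defaults listed in the table for any value the user did not set.
function withGeneralDefaults(overrides: GeneralOverrides): GeneralSettings {
  const checkpointing = overrides.checkpointing ?? {};
  return {
    preferredEditor: overrides.preferredEditor,
    vimMode: overrides.vimMode ?? false,
    disableAutoUpdate: overrides.disableAutoUpdate ?? false,
    disableUpdateNag: overrides.disableUpdateNag ?? false,
    gitCoAuthor: overrides.gitCoAuthor ?? true,
    checkpointing: { enabled: checkpointing.enabled ?? false },
  };
}

// Example: opt out of the Co-authored-by trailer and keep every other default.
const general = withGeneralDefaults({ gitCoAuthor: false });
console.log(JSON.stringify({ general }, null, 2));
```

The printed object has the same shape as a `general` block in `settings.json`, so it can serve as a starting point for a hand-written file.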

|

||||

|

||||

#### output

|

||||

|

||||

@@ -68,7 +69,7 @@ Settings are organized into categories. All settings should be placed within the

|

||||

|

||||

| Setting | Type | Description | Default |

|

||||

| ---------------------------------------- | ---------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | ----------- |

|

||||

| `ui.theme` | string | The color theme for the UI. See [Themes](../configuration/themes) for available options. | `undefined` |

|

||||

| `ui.theme` | string | The color theme for the UI. See [Themes](../configuration/themes) for available options. | `undefined` |

|

||||

| `ui.customThemes` | object | Custom theme definitions. | `{}` |

|

||||

| `ui.hideWindowTitle` | boolean | Hide the window title bar. | `false` |

|

||||

| `ui.hideTips` | boolean | Hide helpful tips in the UI. | `false` |

|

||||

@@ -356,38 +357,38 @@ Arguments passed directly when running the CLI can override other configurations

|

||||

|

||||

### Command-Line Arguments Table

|

||||

|

||||

| Argument | Alias | Description | Possible Values | Notes |

|

||||

| ---------------------------- | ----- | --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | -------------------------------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

|

||||

| `--model` | `-m` | Specifies the Qwen model to use for this session. | Model name | Example: `npm start -- --model qwen3-coder-plus` |

|

||||

| `--prompt` | `-p` | Used to pass a prompt directly to the command. This invokes Qwen Code in a non-interactive mode. | Your prompt text | For scripting examples, use the `--output-format json` flag to get structured output. |

|

||||

| `--prompt-interactive` | `-i` | Starts an interactive session with the provided prompt as the initial input. | Your prompt text | The prompt is processed within the interactive session, not before it. Cannot be used when piping input from stdin. Example: `qwen -i "explain this code"` |

|

||||

| Argument | Alias | Description | Possible Values | Notes |

|

||||

| ---------------------------- | ----- | --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | -------------------------------------- | --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

|

||||

| `--model` | `-m` | Specifies the Qwen model to use for this session. | Model name | Example: `npm start -- --model qwen3-coder-plus` |

|

||||

| `--prompt` | `-p` | Used to pass a prompt directly to the command. This invokes Qwen Code in a non-interactive mode. | Your prompt text | For scripting examples, use the `--output-format json` flag to get structured output. |

|

||||

| `--prompt-interactive` | `-i` | Starts an interactive session with the provided prompt as the initial input. | Your prompt text | The prompt is processed within the interactive session, not before it. Cannot be used when piping input from stdin. Example: `qwen -i "explain this code"` |

|

||||

| `--output-format` | `-o` | Specifies the format of the CLI output for non-interactive mode. | `text`, `json`, `stream-json` | `text`: (Default) The standard human-readable output. `json`: A machine-readable JSON output emitted at the end of execution. `stream-json`: Streaming JSON messages emitted as they occur during execution. For structured output and scripting, use the `--output-format json` or `--output-format stream-json` flag. See [Headless Mode](../features/headless) for detailed information. |

|

||||

| `--input-format` | | Specifies the format consumed from standard input. | `text`, `stream-json` | `text`: (Default) Standard text input from stdin or command-line arguments. `stream-json`: JSON message protocol via stdin for bidirectional communication. Requirement: `--input-format stream-json` requires `--output-format stream-json` to be set. When using `stream-json`, stdin is reserved for protocol messages. See [Headless Mode](../features/headless) for detailed information. |

|

||||

| `--include-partial-messages` | | Include partial assistant messages when using `stream-json` output format. When enabled, emits stream events (message_start, content_block_delta, etc.) as they occur during streaming. | | Default: `false`. Requirement: Requires `--output-format stream-json` to be set. See [Headless Mode](../features/headless) for detailed information about stream events. |

|

||||

| `--sandbox` | `-s` | Enables sandbox mode for this session. | | |

|

||||

| `--sandbox-image` | | Sets the sandbox image URI. | | |

|

||||

| `--debug` | `-d` | Enables debug mode for this session, providing more verbose output. | | |

|

||||

| `--all-files` | `-a` | If set, recursively includes all files within the current directory as context for the prompt. | | |

|

||||

| `--help` | `-h` | Displays help information about command-line arguments. | | |

|

||||

| `--show-memory-usage` | | Displays the current memory usage. | | |

|

||||

| `--yolo` | | Enables YOLO mode, which automatically approves all tool calls. | | |

|

||||

| `--sandbox` | `-s` | Enables sandbox mode for this session. | | |

|

||||

| `--sandbox-image` | | Sets the sandbox image URI. | | |

|

||||

| `--debug` | `-d` | Enables debug mode for this session, providing more verbose output. | | |

|

||||

| `--all-files` | `-a` | If set, recursively includes all files within the current directory as context for the prompt. | | |

|

||||

| `--help` | `-h` | Displays help information about command-line arguments. | | |

|

||||

| `--show-memory-usage` | | Displays the current memory usage. | | |

|

||||

| `--yolo` | | Enables YOLO mode, which automatically approves all tool calls. | | |

|

||||

| `--approval-mode` | | Sets the approval mode for tool calls. | `plan`, `default`, `auto-edit`, `yolo` | Supported modes: `plan`: Analyze only—do not modify files or execute commands. `default`: Require approval for file edits or shell commands (default behavior). `auto-edit`: Automatically approve edit tools (edit, write_file) while prompting for others. `yolo`: Automatically approve all tool calls (equivalent to `--yolo`). Cannot be used together with `--yolo`. Use `--approval-mode=yolo` instead of `--yolo` for the new unified approach. Example: `qwen --approval-mode auto-edit`<br>See more about [Approval Mode](../features/approval-mode). |

|

||||

| `--allowed-tools` | | A comma-separated list of tool names that will bypass the confirmation dialog. | Tool names | Example: `qwen --allowed-tools "Shell(git status)"` |

|

||||

| `--telemetry` | | Enables [telemetry](/developers/development/telemetry). | | |

|

||||

| `--telemetry-target` | | Sets the telemetry target. | | See [telemetry](/developers/development/telemetry) for more information. |

|

||||

| `--telemetry-otlp-endpoint` | | Sets the OTLP endpoint for telemetry. | | See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--telemetry-otlp-protocol` | | Sets the OTLP protocol for telemetry (`grpc` or `http`). | | Defaults to `grpc`. See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--telemetry-log-prompts` | | Enables logging of prompts for telemetry. | | See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--checkpointing` | | Enables [checkpointing](../features/checkpointing). | | |

|

||||

| `--extensions` | `-e` | Specifies a list of extensions to use for the session. | Extension names | If not provided, all available extensions are used. Use the special term `qwen -e none` to disable all extensions. Example: `qwen -e my-extension -e my-other-extension` |

|

||||

| `--list-extensions` | `-l` | Lists all available extensions and exits. | | |

|

||||

| `--proxy` | | Sets the proxy for the CLI. | Proxy URL | Example: `--proxy http://localhost:7890`. |

|

||||

| `--include-directories` | | Includes additional directories in the workspace for multi-directory support. | Directory paths | Can be specified multiple times or as comma-separated values. 5 directories can be added at maximum. Example: `--include-directories /path/to/project1,/path/to/project2` or `--include-directories /path/to/project1 --include-directories /path/to/project2` |

|

||||

| `--screen-reader` | | Enables screen reader mode, which adjusts the TUI for better compatibility with screen readers. | | |

|

||||

| `--version` | | Displays the version of the CLI. | | |

|

||||

| `--openai-logging` | | Enables logging of OpenAI API calls for debugging and analysis. | | This flag overrides the `enableOpenAILogging` setting in `settings.json`. |

|

||||

| `--openai-logging-dir` | | Sets a custom directory path for OpenAI API logs. | Directory path | This flag overrides the `openAILoggingDir` setting in `settings.json`. Supports absolute paths, relative paths, and `~` expansion. Example: `qwen --openai-logging-dir "~/qwen-logs" --openai-logging` |

|

||||

| `--tavily-api-key` | | Sets the Tavily API key for web search functionality for this session. | API key | Example: `qwen --tavily-api-key tvly-your-api-key-here` |

|

||||

| `--allowed-tools` | | A comma-separated list of tool names that will bypass the confirmation dialog. | Tool names | Example: `qwen --allowed-tools "Shell(git status)"` |

|

||||

| `--telemetry` | | Enables [telemetry](/developers/development/telemetry). | | |

|

||||

| `--telemetry-target` | | Sets the telemetry target. | | See [telemetry](/developers/development/telemetry) for more information. |

|

||||

| `--telemetry-otlp-endpoint` | | Sets the OTLP endpoint for telemetry. | | See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--telemetry-otlp-protocol` | | Sets the OTLP protocol for telemetry (`grpc` or `http`). | | Defaults to `grpc`. See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--telemetry-log-prompts` | | Enables logging of prompts for telemetry. | | See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--checkpointing` | | Enables [checkpointing](../features/checkpointing). | | |

|

||||

| `--extensions` | `-e` | Specifies a list of extensions to use for the session. | Extension names | If not provided, all available extensions are used. Use the special term `qwen -e none` to disable all extensions. Example: `qwen -e my-extension -e my-other-extension` |

|

||||

| `--list-extensions` | `-l` | Lists all available extensions and exits. | | |

|

||||

| `--proxy` | | Sets the proxy for the CLI. | Proxy URL | Example: `--proxy http://localhost:7890`. |

|

||||

| `--include-directories` | | Includes additional directories in the workspace for multi-directory support. | Directory paths | Can be specified multiple times or as comma-separated values. 5 directories can be added at maximum. Example: `--include-directories /path/to/project1,/path/to/project2` or `--include-directories /path/to/project1 --include-directories /path/to/project2` |

|

||||

| `--screen-reader` | | Enables screen reader mode, which adjusts the TUI for better compatibility with screen readers. | | |

|

||||

| `--version` | | Displays the version of the CLI. | | |

|

||||

| `--openai-logging` | | Enables logging of OpenAI API calls for debugging and analysis. | | This flag overrides the `enableOpenAILogging` setting in `settings.json`. |

|

||||

| `--openai-logging-dir` | | Sets a custom directory path for OpenAI API logs. | Directory path | This flag overrides the `openAILoggingDir` setting in `settings.json`. Supports absolute paths, relative paths, and `~` expansion. Example: `qwen --openai-logging-dir "~/qwen-logs" --openai-logging` |

|

||||

| `--tavily-api-key` | | Sets the Tavily API key for web search functionality for this session. | API key | Example: `qwen --tavily-api-key tvly-your-api-key-here` |
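Several flags in the table constrain each other: `--input-format stream-json` and `--include-partial-messages` both require `--output-format stream-json`, and `--approval-mode` cannot be combined with `--yolo`. A wrapper script might check these rules before launching the CLI. The sketch below is one way to do that; the `HeadlessOptions` type and `buildQwenArgs` function are hypothetical helpers, and only the flag names and allowed values come from the table.

```typescript
type OutputFormat = 'text' | 'json' | 'stream-json';
type InputFormat = 'text' | 'stream-json';
type ApprovalMode = 'plan' | 'default' | 'auto-edit' | 'yolo';

// Hypothetical option bag for a wrapper script; not part of Qwen Code itself.
interface HeadlessOptions {
  prompt?: string;
  outputFormat?: OutputFormat;
  inputFormat?: InputFormat;
  includePartialMessages?: boolean;
  approvalMode?: ApprovalMode;
  yolo?: boolean;
}

// Validate the documented flag constraints, then build the argument list.
function buildQwenArgs(options: HeadlessOptions): string[] {
  if (options.inputFormat === 'stream-json' && options.outputFormat !== 'stream-json') {
    throw new Error('--input-format stream-json requires --output-format stream-json');
  }
  if (options.includePartialMessages && options.outputFormat !== 'stream-json') {
    throw new Error('--include-partial-messages requires --output-format stream-json');
  }
  if (options.yolo && options.approvalMode !== undefined) {
    throw new Error('--approval-mode cannot be used together with --yolo');
  }

  const args: string[] = [];
  if (options.prompt !== undefined) args.push('--prompt', options.prompt);
  if (options.outputFormat) args.push('--output-format', options.outputFormat);
  if (options.inputFormat) args.push('--input-format', options.inputFormat);
  if (options.includePartialMessages) args.push('--include-partial-messages');
  if (options.approvalMode) args.push('--approval-mode', options.approvalMode);
  if (options.yolo) args.push('--yolo');
  return args;
}
```

The resulting array can be handed to a process launcher such as Node's `child_process.spawn('qwen', args)`. The CLI performs its own validation; a check like this only surfaces mistakes earlier in a script.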

|

||||

|

||||

## Context Files (Hierarchical Instructional Context)

|

||||

|

||||

|

||||

@@ -140,8 +140,6 @@ The theme file must be a valid JSON file that follows the same structure as a cu

|

||||

|

||||

### Example Custom Theme

|

||||

|

||||

|

||||

|

||||

<img src="https://gw.alicdn.com/imgextra/i1/O1CN01Em30Hc1jYXAdIgls3_!!6000000004560-2-tps-1009-629.png" alt=" " style="zoom:100%;text-align:center;margin: 0 auto;" />

|

||||

|

||||

### Using Your Custom Theme

|

||||

@@ -150,15 +148,13 @@ The theme file must be a valid JSON file that follows the same structure as a cu

|

||||

- Or, set it as the default by adding `"theme": "MyCustomTheme"` to the `ui` object in your `settings.json`.

|

||||

- Custom themes can be set at the user, project, or system level, and follow the same [configuration precedence](../configuration/settings) as other settings.

|

||||

|

||||

|

||||

|

||||

## Themes Preview

|

||||

|

||||

| Dark Theme | Preview | Light Theme | Preview |

|

||||

| :-: | :-: | :-: | :-: |

|

||||

| ANSI | <img src="https://gw.alicdn.com/imgextra/i2/O1CN01ZInJiq1GdSZc9gHsI_!!6000000000645-2-tps-1140-934.png" style="zoom:30%;text-align:center;margin: 0 auto;" /> | ANSI Light | <img src="https://gw.alicdn.com/imgextra/i2/O1CN01IiJQFC1h9E3MXQj6W_!!6000000004234-2-tps-1140-934.png" style="zoom:30%;text-align:center;margin: 0 auto;" /> |

|

||||

| Atom OneDark | <img src="https://gw.alicdn.com/imgextra/i2/O1CN01Zlx1SO1Sw21SkTKV3_!!6000000002310-2-tps-1140-934.png" style="zoom:30%;text-align:center;margin: 0 auto;" /> | Ayu Light | <img src="https://gw.alicdn.com/imgextra/i3/O1CN01zEUc1V1jeUJsnCgQb_!!6000000004573-2-tps-1140-934.png" alt=" " style="zoom:30%;text-align:center;margin: 0 auto;" /> |

|

||||

| Ayu | <img src="https://gw.alicdn.com/imgextra/i3/O1CN019upo6v1SmPhmRjzfN_!!6000000002289-2-tps-1140-934.png" alt=" " style="zoom:30%;text-align:center;margin: 0 auto;" /> | Default Light | <img src="https://gw.alicdn.com/imgextra/i4/O1CN01RHjrEs1u7TXq3M6l3_!!6000000005990-2-tps-1140-934.png" alt=" " style="zoom:30%;text-align:center;margin: 0 auto;" /> |

|

||||

| Default | <img src="https://gw.alicdn.com/imgextra/i4/O1CN016pIeXz1pFC8owmR4Q_!!6000000005330-2-tps-1140-934.png" style="zoom:30%;text-align:center;margin: 0 auto;" /> | GitHub Light | <img src="https://gw.alicdn.com/imgextra/i4/O1CN01US2b0g1VETCPAVWLA_!!6000000002621-2-tps-1140-934.png" alt=" " style="zoom:30%;text-align:center;margin: 0 auto;" /> |

|

||||

| Dracula | <img src="https://gw.alicdn.com/imgextra/i4/O1CN016htnWH20c3gd2LpUR_!!6000000006869-2-tps-1140-934.png" style="zoom:30%;text-align:center;margin: 0 auto;" /> | Google Code | <img src="https://gw.alicdn.com/imgextra/i1/O1CN01Ng29ab23iQ2BuYKz8_!!6000000007289-2-tps-1140-934.png" alt=" " style="zoom:30%;text-align:center;margin: 0 auto;" /> |

|

||||

| GitHub | <img src="https://gw.alicdn.com/imgextra/i4/O1CN01fFCRda1IQIQ9qDNqv_!!6000000000887-2-tps-1140-934.png" alt=" " style="zoom:30%;text-align:center;margin: 0 auto;" /> | Xcode | <img src="https://gw.alicdn.com/imgextra/i1/O1CN010E3QAi1Huh5o1E9LN_!!6000000000818-2-tps-1140-934.png" alt=" " style="zoom:30%;text-align:center;margin: 0 auto;" /> |

|

||||

| Dark Theme | Preview | Light Theme | Preview |

|

||||

| :----------: | :-------------------------------------------------------------------------------------------------------------------------------------------------------------------: | :-----------: | :-------------------------------------------------------------------------------------------------------------------------------------------------------------------: |

|

||||

| ANSI | <img src="https://gw.alicdn.com/imgextra/i2/O1CN01ZInJiq1GdSZc9gHsI_!!6000000000645-2-tps-1140-934.png" style="zoom:30%;text-align:center;margin: 0 auto;" /> | ANSI Light | <img src="https://gw.alicdn.com/imgextra/i2/O1CN01IiJQFC1h9E3MXQj6W_!!6000000004234-2-tps-1140-934.png" style="zoom:30%;text-align:center;margin: 0 auto;" /> |

|

||||

| Atom OneDark | <img src="https://gw.alicdn.com/imgextra/i2/O1CN01Zlx1SO1Sw21SkTKV3_!!6000000002310-2-tps-1140-934.png" style="zoom:30%;text-align:center;margin: 0 auto;" /> | Ayu Light | <img src="https://gw.alicdn.com/imgextra/i3/O1CN01zEUc1V1jeUJsnCgQb_!!6000000004573-2-tps-1140-934.png" alt=" " style="zoom:30%;text-align:center;margin: 0 auto;" /> |

|

||||

| Ayu | <img src="https://gw.alicdn.com/imgextra/i3/O1CN019upo6v1SmPhmRjzfN_!!6000000002289-2-tps-1140-934.png" alt=" " style="zoom:30%;text-align:center;margin: 0 auto;" /> | Default Light | <img src="https://gw.alicdn.com/imgextra/i4/O1CN01RHjrEs1u7TXq3M6l3_!!6000000005990-2-tps-1140-934.png" alt=" " style="zoom:30%;text-align:center;margin: 0 auto;" /> |

|

||||

| Default | <img src="https://gw.alicdn.com/imgextra/i4/O1CN016pIeXz1pFC8owmR4Q_!!6000000005330-2-tps-1140-934.png" style="zoom:30%;text-align:center;margin: 0 auto;" /> | GitHub Light | <img src="https://gw.alicdn.com/imgextra/i4/O1CN01US2b0g1VETCPAVWLA_!!6000000002621-2-tps-1140-934.png" alt=" " style="zoom:30%;text-align:center;margin: 0 auto;" /> |

|

||||

| Dracula | <img src="https://gw.alicdn.com/imgextra/i4/O1CN016htnWH20c3gd2LpUR_!!6000000006869-2-tps-1140-934.png" style="zoom:30%;text-align:center;margin: 0 auto;" /> | Google Code | <img src="https://gw.alicdn.com/imgextra/i1/O1CN01Ng29ab23iQ2BuYKz8_!!6000000007289-2-tps-1140-934.png" alt=" " style="zoom:30%;text-align:center;margin: 0 auto;" /> |

|

||||

| GitHub | <img src="https://gw.alicdn.com/imgextra/i4/O1CN01fFCRda1IQIQ9qDNqv_!!6000000000887-2-tps-1140-934.png" alt=" " style="zoom:30%;text-align:center;margin: 0 auto;" /> | Xcode | <img src="https://gw.alicdn.com/imgextra/i1/O1CN010E3QAi1Huh5o1E9LN_!!6000000000818-2-tps-1140-934.png" alt=" " style="zoom:30%;text-align:center;margin: 0 auto;" /> |

|

||||

|

||||

@@ -20,10 +20,11 @@ These commands help you save, restore, and summarize work progress.

|

||||

|

||||

| Command | Description | Usage Examples |

|

||||

| ----------- | --------------------------------------------------------- | ------------------------------------ |

|

||||

| `/init` | Analyze current directory and create initial context file | `/init` |

|

||||

| `/summary` | Generate project summary based on conversation history | `/summary` |

|

||||

| `/compress` | Replace chat history with summary to save tokens | `/compress` |

|

||||

| `/resume` | Resume a previous conversation session | `/resume` |

|

||||

| `/restore` | Restore files to state before tool execution | `/restore` (list) or `/restore <ID>` |

|

||||

| `/init` | Analyze current directory and create initial context file | `/init` |

|

||||

|

||||

### 1.2 Interface and Workspace Control

|

||||

|

||||

|

||||

@@ -16,16 +16,15 @@ The plugin **MUST** run a local HTTP server that implements the **Model Context

|

||||

- **Endpoint:** The server should expose a single endpoint (e.g., `/mcp`) for all MCP communication.

|

||||

- **Port:** The server **MUST** listen on a dynamically assigned port (i.e., listen on port `0`).

|

||||

|

||||

### 2. Discovery Mechanism: The Port File

|

||||

### 2. Discovery Mechanism: The Lock File

|

||||

|

||||

For Qwen Code to connect, it needs to discover which IDE instance it's running in and what port your server is using. The plugin **MUST** facilitate this by creating a "discovery file."

|

||||

For Qwen Code to connect, it needs to discover what port your server is using. The plugin **MUST** facilitate this by creating a "lock file" and setting the port environment variable.

|

||||

|

||||

- **How the CLI Finds the File:** The CLI determines the Process ID (PID) of the IDE it's running in by traversing the process tree. It then looks for a discovery file that contains this PID in its name.

|

||||

- **File Location:** The file must be created in a specific directory: `os.tmpdir()/qwen/ide/`. Your plugin must create this directory if it doesn't exist.

|

||||

- **How the CLI Finds the File:** The CLI reads the port from `QWEN_CODE_IDE_SERVER_PORT`, then reads `~/.qwen/ide/<PORT>.lock`. (Legacy fallbacks exist for older extensions; see note below.)

|

||||

- **File Location:** The file must be created in a specific directory: `~/.qwen/ide/`. Your plugin must create this directory if it doesn't exist.

|

||||

- **File Naming Convention:** The filename is critical and **MUST** follow the pattern:

|

||||

`qwen-code-ide-server-${PID}-${PORT}.json`

|

||||

- `${PID}`: The process ID of the parent IDE process. Your plugin must determine this PID and include it in the filename.

|

||||

- `${PORT}`: The port your MCP server is listening on.

|

||||

`<PORT>.lock`

|

||||

- `<PORT>`: The port your MCP server is listening on.

|

||||

- **File Content & Workspace Validation:** The file **MUST** contain a JSON object with the following structure:

|

||||

|

||||

```json

|

||||

@@ -33,21 +32,20 @@ For Qwen Code to connect, it needs to discover which IDE instance it's running i

|

||||

"port": 12345,

|

||||

"workspacePath": "/path/to/project1:/path/to/project2",

|

||||

"authToken": "a-very-secret-token",

|

||||

"ideInfo": {

|

||||

"name": "vscode",

|

||||

"displayName": "VS Code"

|

||||

}

|

||||

"ppid": 1234,

|

||||

"ideName": "VS Code"

|

||||

}

|

||||

```

|

||||

- `port` (number, required): The port of the MCP server.

|

||||

- `workspacePath` (string, required): A list of all open workspace root paths, delimited by the OS-specific path separator (`:` for Linux/macOS, `;` for Windows). The CLI uses this path to ensure it's running in the same project folder that's open in the IDE. If the CLI's current working directory is not a sub-directory of `workspacePath`, the connection will be rejected. Your plugin **MUST** provide the correct, absolute path(s) to the root of the open workspace(s).

|

||||

- `authToken` (string, required): A secret token for securing the connection. The CLI will include this token in an `Authorization: Bearer <token>` header on all requests.

|

||||

- `ideInfo` (object, required): Information about the IDE.

|

||||

- `name` (string, required): A short, lowercase identifier for the IDE (e.g., `vscode`, `jetbrains`).

|

||||

- `displayName` (string, required): A user-friendly name for the IDE (e.g., `VS Code`, `JetBrains IDE`).

|

||||

- `ppid` (number, required): The parent process ID of the IDE process.

|

||||

- `ideName` (string, required): A user-friendly name for the IDE (e.g., `VS Code`, `JetBrains IDE`).

|

||||

|

||||

- **Authentication:** To secure the connection, the plugin **MUST** generate a unique, secret token and include it in the discovery file. The CLI will then include this token in the `Authorization` header for all requests to the MCP server (e.g., `Authorization: Bearer a-very-secret-token`). Your server **MUST** validate this token on every request and reject any that are unauthorized.

|

||||

- **Tie-Breaking with Environment Variables (Recommended):** For the most reliable experience, your plugin **SHOULD** both create the discovery file and set the `QWEN_CODE_IDE_SERVER_PORT` environment variable in the integrated terminal. The file serves as the primary discovery mechanism, but the environment variable is crucial for tie-breaking. If a user has multiple IDE windows open for the same workspace, the CLI uses the `QWEN_CODE_IDE_SERVER_PORT` variable to identify and connect to the correct window's server.

|

||||

- **Environment Variables (Required):** Your plugin **MUST** set `QWEN_CODE_IDE_SERVER_PORT` in the integrated terminal so the CLI can locate the correct `<PORT>.lock` file.

|

||||

|

||||

**Legacy note:** For extensions older than v0.5.1, Qwen Code may fall back to reading JSON files in the system temp directory named `qwen-code-ide-server-<PID>.json` or `qwen-code-ide-server-<PORT>.json`. New integrations should not rely on these legacy files.
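To make the lock-file contract more concrete, here is a minimal sketch of the plugin-side pieces: computing the `~/.qwen/ide/<PORT>.lock` path, assembling the JSON payload, and the kind of workspace check the CLI is described as performing. All function names are hypothetical, the field set follows the newer `ppid`/`ideName` layout, and treating the workspace root itself as "inside" the workspace is an assumption. The helpers take the home directory and separators as parameters so they stay free of platform-specific calls.

```typescript
// Hypothetical shape of the lock file, based on the fields described above.
interface IdeLockFile {
  port: number;
  workspacePath: string;
  authToken: string;
  ppid: number;
  ideName: string;
}

// Path of the lock file: <home>/.qwen/ide/<PORT>.lock
function lockFilePath(homeDir: string, port: number, sep: string = '/'): string {
  return [homeDir, '.qwen', 'ide', `${port}.lock`].join(sep);
}

// Join all open workspace roots with the OS path delimiter (":" or ";").
function buildLockFile(
  port: number,
  workspaceRoots: string[],
  authToken: string,
  ppid: number,
  ideName: string,
  delimiter: string = ':',
): IdeLockFile {
  return { port, workspacePath: workspaceRoots.join(delimiter), authToken, ppid, ideName };
}

// Sketch of the workspace validation: the working directory must be one of the
// workspace roots or lie below one of them.
function isCwdInWorkspace(
  cwd: string,
  workspacePath: string,
  delimiter: string = ':',
  sep: string = '/',
): boolean {
  return workspacePath
    .split(delimiter)
    .filter((root) => root.length > 0)
    .some((root) => {
      const trimmed = root.endsWith(sep) ? root.slice(0, -1) : root;
      return cwd === trimmed || cwd.startsWith(trimmed + sep);
    });
}

const lock = buildLockFile(12345, ['/path/to/project1', '/path/to/project2'], 'a-very-secret-token', 1234, 'VS Code');
console.log(lockFilePath('/home/user', lock.port)); // /home/user/.qwen/ide/12345.lock
console.log(JSON.stringify(lock, null, 2));
```

In a real plugin the home directory, path separator, and delimiter would come from the platform (for example Node's `os.homedir()`, `path.sep`, and `path.delimiter`), the directory would be created before writing, and `QWEN_CODE_IDE_SERVER_PORT` would be set in the integrated terminal to the same port.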

|

||||

|

||||

## II. The Context Interface

|

||||

|

||||

|

||||

@@ -84,12 +84,12 @@ This guide provides solutions to common issues and debugging tips, including top

|

||||

|

||||

Qwen Code uses specific exit codes to indicate the reason for termination. This is especially useful for scripting and automation.

|

||||

|

||||

| Exit Code | Error Type | Description |

|

||||

| --------- | -------------------------- | ------------------------------------------------------------ |

|

||||

| 41 | `FatalAuthenticationError` | An error occurred during the authentication process. |

|

||||

| 42 | `FatalInputError` | Invalid or missing input was provided to the CLI. (non-interactive mode only) |

|

||||

| 44 | `FatalSandboxError` | An error occurred with the sandboxing environment (e.g. Docker, Podman, or Seatbelt). |

|

||||

| 52 | `FatalConfigError` | A configuration file (`settings.json`) is invalid or contains errors. |

|

||||

| Exit Code | Error Type | Description |
| --------- | -------------------------- | --------------------------------------------------------------------------------------------------- |
| 41 | `FatalAuthenticationError` | An error occurred during the authentication process. |
| 42 | `FatalInputError` | Invalid or missing input was provided to the CLI. (non-interactive mode only) |
| 44 | `FatalSandboxError` | An error occurred with the sandboxing environment (e.g. Docker, Podman, or Seatbelt). |
| 52 | `FatalConfigError` | A configuration file (`settings.json`) is invalid or contains errors. |
| 53 | `FatalTurnLimitedError` | The maximum number of conversational turns for the session was reached. (non-interactive mode only) |
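For automation, a script can translate these exit codes back into the error types from the table. The following is a minimal sketch; the `QWEN_EXIT_CODES` map and `describeExit` helper are hypothetical names, and only the code-to-error pairs are taken from the table.

```typescript
// Exit codes and error types as listed in the table above.
const QWEN_EXIT_CODES: { [code: number]: string } = {
  41: 'FatalAuthenticationError',
  42: 'FatalInputError',
  44: 'FatalSandboxError',
  52: 'FatalConfigError',
  53: 'FatalTurnLimitedError',
};

// Turn a process exit code into a short label for logs or CI output.
function describeExit(code: number): string {
  if (code === 0) return 'success';
  return QWEN_EXIT_CODES[code] ?? `unknown error (exit code ${code})`;
}

console.log(describeExit(42)); // FatalInputError
console.log(describeExit(53)); // FatalTurnLimitedError
```

A CI job could, for instance, retry on `FatalTurnLimitedError` with a higher turn limit but fail immediately on `FatalConfigError`.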

|

||||

|

||||

## Debugging Tips

|

||||

|

||||

@@ -5,8 +5,8 @@

|

||||

*/

|

||||

|

||||

import { describe, it, expect, beforeAll, afterAll } from 'vitest';

|

||||

import { writeFileSync, readFileSync } from 'node:fs';

|

||||

import { join, resolve } from 'node:path';

|

||||

import { writeFileSync } from 'node:fs';

|

||||

import { join } from 'node:path';

|

||||

import { TestRig } from './test-helper.js';

|

||||

|

||||

// Windows skip (Option A: avoid infra scope)

|

||||

@@ -121,21 +121,4 @@ d('BOM end-to-end integration', () => {

|

||||

'BOM_OK UTF-32BE',

|

||||

);

|

||||

});

|

||||

|

||||

it('Can describe a PNG file', async () => {

|

||||

const imagePath = resolve(

|

||||

process.cwd(),

|

||||

'docs/assets/gemini-screenshot.png',

|

||||

);

|

||||