Mirror of https://github.com/QwenLM/qwen-code.git (synced 2025-12-28 04:29:15 +00:00)

Compare commits: feat/gemin ... fix/integr (54 Commits)

| Author | SHA1 | Date | |
|---|---|---|---|
| | 7233d37bd1 | | |
| | f7d04323f3 | | |
| | 257c6705e1 | | |
| | 27e7438b75 | | |
| | 8a3ff8db12 | | |
| | 26f8b67d4f | | |
| | b64d636280 | | |
| | 781c57b438 | | |
| | c53bdde747 | | |
| | 99db18069d | | |
| | a0a5b831d4 | | |
| | 8f74dd224c | | |
| | b931d28f35 | | |
| | 9f65bd3b39 | | |
| | 2b3830cf83 | | |
| | 2b9140940d | | |
| | 4efdea0981 | | |
| | 05791d4200 | | |
| | add35d2904 | | |
| | bc2a7efcb3 | | |
| | 4f970c9987 | | |
| | 251031cfc5 | | |
| | 77c257d9d0 | | |
| | 4311af96eb | | |
| | b49c11e9a2 | | |
| | 642dda0315 | | |
| | bbbdeb280d | | |
| | 0d43ddee2a | | |
| | 50e03f2dd6 | | |
| | f440ff2f7f | | |
| | 9a6b0abc37 | | |
| | 9cdd85c62a | | |
| | 00547ba439 | | |
| | fc1dac9dc7 | | |
| | 338eb9038d | | |
| | e0b9044833 | | |
| | f33f43e2f7 | | |
| | 18e9b2340b | | |
| | ad427da340 | | |
| | 484e0fd943 | | |
| | 80bb2890df | | |
| | abd9ee2a7b | | |
| | b8df689e31 | | |
| | e610578ecc | | |
| | 235159216e | | |
| | 93b30cca29 | | |
| | 177fc42f04 | | |
| | 2560c2d1a2 | | |
| | bd6e16d41b | | |
| | 2f0fa267c8 | | |
| | fa6ae0a324 | | |
| | 387be44866 | | |
| | 51b82771da | | |
| | 629cd14fad | | |

9

.github/workflows/e2e.yml

vendored


@@ -18,8 +18,6 @@ jobs:
- 'sandbox:docker'
node-version:
- '20.x'
- '22.x'
- '24.x'
steps:
- name: 'Checkout'
uses: 'actions/checkout@08c6903cd8c0fde910a37f88322edcfb5dd907a8' # ratchet:actions/checkout@v5

@@ -67,10 +65,13 @@ jobs:
OPENAI_BASE_URL: '${{ secrets.OPENAI_BASE_URL }}'
OPENAI_MODEL: '${{ secrets.OPENAI_MODEL }}'
KEEP_OUTPUT: 'true'
SANDBOX: '${{ matrix.sandbox }}'
VERBOSE: 'true'
run: |-
npm run "test:integration:${SANDBOX}"
if [[ "${{ matrix.sandbox }}" == "sandbox:docker" ]]; then
npm run test:integration:sandbox:docker
else
npm run test:integration:sandbox:none
fi

e2e-test-macos:
name: 'E2E Test - macOS'
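The `run` step in the hunk above chooses an npm script from the matrix sandbox value. A minimal shell sketch of that selection follows. The helper name `integration_script` is hypothetical (it does not appear in the workflow), and the fallback to the non-sandbox script for any other value is an assumption read off the `else` branch.

```shell
#!/bin/sh
# Hypothetical helper: maps a matrix sandbox value to an npm script name.
integration_script() {
  case "$1" in
    sandbox:docker) echo "test:integration:sandbox:docker" ;;
    *)              echo "test:integration:sandbox:none" ;;
  esac
}

# Print the commands instead of running them, so the sketch has no side effects.
echo "npm run $(integration_script "sandbox:docker")"
echo "npm run $(integration_script "sandbox:none")"
```

Keeping the mapping in one function makes the fallback explicit and easy to check in isolation.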

54

.github/workflows/release-sdk.yml

vendored


@@ -33,6 +33,10 @@ on:
type: 'boolean'
default: false

concurrency:
group: '${{ github.workflow }}'
cancel-in-progress: false

jobs:
release-sdk:
runs-on: 'ubuntu-latest'

@@ -46,6 +50,7 @@ jobs:
packages: 'write'
id-token: 'write'
issues: 'write'
pull-requests: 'write'
outputs:
RELEASE_TAG: '${{ steps.version.outputs.RELEASE_TAG }}'

@@ -163,11 +168,11 @@ jobs:
echo "BRANCH_NAME=${BRANCH_NAME}" >> "${GITHUB_OUTPUT}"

- name: 'Update package version'
working-directory: 'packages/sdk-typescript'
env:
RELEASE_VERSION: '${{ steps.version.outputs.RELEASE_VERSION }}'
run: |-
npm version "${RELEASE_VERSION}" --no-git-tag-version --allow-same-version
# Use npm workspaces so the root lockfile is updated consistently.
npm version -w @qwen-code/sdk "${RELEASE_VERSION}" --no-git-tag-version --allow-same-version

- name: 'Commit and Conditionally Push package version'
env:

@@ -175,7 +180,7 @@ jobs:
IS_DRY_RUN: '${{ steps.vars.outputs.is_dry_run }}'
RELEASE_TAG: '${{ steps.version.outputs.RELEASE_TAG }}'
run: |-
git add packages/sdk-typescript/package.json
git add packages/sdk-typescript/package.json package-lock.json
if git diff --staged --quiet; then
echo "No version changes to commit"
else

@@ -222,6 +227,49 @@ jobs:
--notes-start-tag "sdk-typescript-${PREVIOUS_RELEASE_TAG}" \
--generate-notes

- name: 'Create PR to merge release branch into main'
if: |-
${{ steps.vars.outputs.is_dry_run == 'false' }}
id: 'pr'
env:
GITHUB_TOKEN: '${{ secrets.GITHUB_TOKEN }}'
RELEASE_BRANCH: '${{ steps.release_branch.outputs.BRANCH_NAME }}'
RELEASE_TAG: '${{ steps.version.outputs.RELEASE_TAG }}'
run: |-
set -euo pipefail

pr_url="$(gh pr list --head "${RELEASE_BRANCH}" --base main --json url --jq '.[0].url')"
if [[ -z "${pr_url}" ]]; then
pr_url="$(gh pr create \
--base main \
--head "${RELEASE_BRANCH}" \
--title "chore(release): sdk-typescript ${RELEASE_TAG}" \
--body "Automated release PR for sdk-typescript ${RELEASE_TAG}.")"
fi

echo "PR_URL=${pr_url}" >> "${GITHUB_OUTPUT}"

- name: 'Wait for CI checks to complete'
if: |-
${{ steps.vars.outputs.is_dry_run == 'false' }}
env:
GITHUB_TOKEN: '${{ secrets.GITHUB_TOKEN }}'
PR_URL: '${{ steps.pr.outputs.PR_URL }}'
run: |-
set -euo pipefail
echo "Waiting for CI checks to complete..."
gh pr checks "${PR_URL}" --watch --interval 30

- name: 'Enable auto-merge for release PR'
if: |-
${{ steps.vars.outputs.is_dry_run == 'false' }}
env:
GITHUB_TOKEN: '${{ secrets.GITHUB_TOKEN }}'
PR_URL: '${{ steps.pr.outputs.PR_URL }}'
run: |-
set -euo pipefail
gh pr merge "${PR_URL}" --merge --auto

- name: 'Create Issue on Failure'
if: |-
${{ failure() }}
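The 'Create PR to merge release branch into main' step above follows a reuse-or-create pattern: look up an existing PR for the release branch, and open a new one only when the lookup comes back empty. A minimal sketch of that control flow follows. `find_existing_pr`, `create_pr`, and `ensure_pr` are hypothetical stand-ins that echo canned values, so the sketch runs without the `gh` CLI or network access; the URLs and branch names are placeholders.

```shell
#!/bin/sh
set -eu

# Hypothetical stand-in for the PR lookup: prints a URL if a PR for
# branch "$1" exists, and prints nothing otherwise.
find_existing_pr() {
  if [ "$1" = "release/has-pr" ]; then
    echo "https://example.invalid/pr/1"
  fi
}

# Hypothetical stand-in for PR creation: always prints a new URL.
create_pr() {
  echo "https://example.invalid/pr/2"
}

# Reuse the open PR when one is found; create one only when the lookup is empty.
ensure_pr() {
  url="$(find_existing_pr "$1")"
  if [ -z "$url" ]; then
    url="$(create_pr "$1")"
  fi
  echo "$url"
}

ensure_pr "release/has-pr"
ensure_pr "release/new"
```

Because the lookup runs first, re-running the release job for the same branch would not open a second PR.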

110

CONTRIBUTING.md


@@ -2,27 +2,6 @@

We would love to accept your patches and contributions to this project.

## Before you begin

### Sign our Contributor License Agreement

Contributions to this project must be accompanied by a
[Contributor License Agreement](https://cla.developers.google.com/about) (CLA).
You (or your employer) retain the copyright to your contribution; this simply
gives us permission to use and redistribute your contributions as part of the
project.

If you or your current employer have already signed the Google CLA (even if it
was for a different project), you probably don't need to do it again.

Visit <https://cla.developers.google.com/> to see your current agreements or to
sign a new one.

### Review our Community Guidelines

This project follows [Google's Open Source Community
Guidelines](https://opensource.google/conduct/).

## Contribution Process

### Code Reviews

@@ -74,12 +53,6 @@ Your PR should have a clear, descriptive title and a detailed description of the

In the PR description, explain the "why" behind your changes and link to the relevant issue (e.g., `Fixes #123`).

## Forking

If you are forking the repository you will be able to run the Build, Test and Integration test workflows. However in order to make the integration tests run you'll need to add a [GitHub Repository Secret](https://docs.github.com/en/actions/security-for-github-actions/security-guides/using-secrets-in-github-actions#creating-secrets-for-a-repository) with a value of `GEMINI_API_KEY` and set that to a valid API key that you have available. Your key and secret are private to your repo; no one without access can see your key and you cannot see any secrets related to this repo.

Additionally you will need to click on the `Actions` tab and enable workflows for your repository, you'll find it's the large blue button in the center of the screen.

## Development Setup and Workflow

This section guides contributors on how to build, modify, and understand the development setup of this project.

@@ -98,8 +71,8 @@ This section guides contributors on how to build, modify, and understand the dev
To clone the repository:

```bash
git clone https://github.com/google-gemini/gemini-cli.git # Or your fork's URL
cd gemini-cli
git clone https://github.com/QwenLM/qwen-code.git # Or your fork's URL
cd qwen-code
```

To install dependencies defined in `package.json` as well as root dependencies:

@@ -118,9 +91,9 @@ This command typically compiles TypeScript to JavaScript, bundles assets, and pr

### Enabling Sandboxing

[Sandboxing](#sandboxing) is highly recommended and requires, at a minimum, setting `GEMINI_SANDBOX=true` in your `~/.env` and ensuring a sandboxing provider (e.g. `macOS Seatbelt`, `docker`, or `podman`) is available. See [Sandboxing](#sandboxing) for details.
[Sandboxing](#sandboxing) is highly recommended and requires, at a minimum, setting `QWEN_SANDBOX=true` in your `~/.env` and ensuring a sandboxing provider (e.g. `macOS Seatbelt`, `docker`, or `podman`) is available. See [Sandboxing](#sandboxing) for details.

To build both the `gemini` CLI utility and the sandbox container, run `build:all` from the root directory:
To build both the `qwen-code` CLI utility and the sandbox container, run `build:all` from the root directory:

```bash
npm run build:all

@@ -130,13 +103,13 @@ To skip building the sandbox container, you can use `npm run build` instead.

### Running

To start the Gemini CLI from the source code (after building), run the following command from the root directory:
To start the Qwen Code application from the source code (after building), run the following command from the root directory:

```bash
npm start
```

If you'd like to run the source build outside of the gemini-cli folder, you can utilize `npm link path/to/gemini-cli/packages/cli` (see: [docs](https://docs.npmjs.com/cli/v9/commands/npm-link)) or `alias gemini="node path/to/gemini-cli/packages/cli"` to run with `gemini`
If you'd like to run the source build outside of the qwen-code folder, you can utilize `npm link path/to/qwen-code/packages/cli` (see: [docs](https://docs.npmjs.com/cli/v9/commands/npm-link)) to run with `qwen-code`

### Running Tests

@@ -154,7 +127,7 @@ This will run tests located in the `packages/core` and `packages/cli` directorie

#### Integration Tests

The integration tests are designed to validate the end-to-end functionality of the Gemini CLI. They are not run as part of the default `npm run test` command.
The integration tests are designed to validate the end-to-end functionality of Qwen Code. They are not run as part of the default `npm run test` command.

To run the integration tests, use the following command:

@@ -209,19 +182,61 @@ npm run lint
### Coding Conventions

- Please adhere to the coding style, patterns, and conventions used throughout the existing codebase.
- Consult [QWEN.md](https://github.com/QwenLM/qwen-code/blob/main/QWEN.md) (typically found in the project root) for specific instructions related to AI-assisted development, including conventions for React, comments, and Git usage.
- **Imports:** Pay special attention to import paths. The project uses ESLint to enforce restrictions on relative imports between packages.

### Project Structure

- `packages/`: Contains the individual sub-packages of the project.
- `cli/`: The command-line interface.
- `core/`: The core backend logic for the Gemini CLI.
- `core/`: The core backend logic for Qwen Code.
- `docs/`: Contains all project documentation.
- `scripts/`: Utility scripts for building, testing, and development tasks.

For more detailed architecture, see `docs/architecture.md`.

## Documentation Development

This section describes how to develop and preview the documentation locally.

### Prerequisites

1. Ensure you have Node.js (version 18+) installed
2. Have npm or yarn available

### Setup Documentation Site Locally

To work on the documentation and preview changes locally:

1. Navigate to the `docs-site` directory:

```bash
cd docs-site
```

2. Install dependencies:

```bash
npm install
```

3. Link the documentation content from the main `docs` directory:

```bash
npm run link
```

This creates a symbolic link from `../docs` to `content` in the docs-site project, allowing the documentation content to be served by the Next.js site.

4. Start the development server:

```bash
npm run dev
```

5. Open [http://localhost:3000](http://localhost:3000) in your browser to see the documentation site with live updates as you make changes.

Any changes made to the documentation files in the main `docs` directory will be reflected immediately in the documentation site.
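The linking step above is described as a symbolic link from `../docs` to `content`. The `link` script itself is not shown in this diff, so the sketch below only assumes it amounts to a relative `ln -s`; it runs in a throwaway directory and the file name `index.md` is a placeholder.

```shell
#!/bin/sh
set -eu

# Work in a throwaway directory so nothing in a real checkout is touched.
workdir="$(mktemp -d)"
mkdir -p "$workdir/docs" "$workdir/docs-site"
echo "# Overview" > "$workdir/docs/index.md"

# Assumed equivalent of `npm run link`: expose ../docs as ./content.
cd "$workdir/docs-site"
ln -s ../docs content

# Files written under docs/ are visible through the link without copying.
cat content/index.md
```

A symlink (rather than a copy) is what would make edits under `docs` show up in the site immediately, as the text describes.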

## Debugging

### VS Code:

@@ -231,7 +246,7 @@ For more detailed architecture, see `docs/architecture.md`.
```bash
npm run debug
```
This command runs `node --inspect-brk dist/gemini.js` within the `packages/cli` directory, pausing execution until a debugger attaches. You can then open `chrome://inspect` in your Chrome browser to connect to the debugger.
This command runs `node --inspect-brk dist/index.js` within the `packages/cli` directory, pausing execution until a debugger attaches. You can then open `chrome://inspect` in your Chrome browser to connect to the debugger.
2. In VS Code, use the "Attach" launch configuration (found in `.vscode/launch.json`).

Alternatively, you can use the "Launch Program" configuration in VS Code if you prefer to launch the currently open file directly, but 'F5' is generally recommended.

@@ -239,16 +254,16 @@ Alternatively, you can use the "Launch Program" configuration in VS Code if you
To hit a breakpoint inside the sandbox container run:

```bash
DEBUG=1 gemini
DEBUG=1 qwen-code
```

**Note:** If you have `DEBUG=true` in a project's `.env` file, it won't affect gemini-cli due to automatic exclusion. Use `.gemini/.env` files for gemini-cli specific debug settings.
**Note:** If you have `DEBUG=true` in a project's `.env` file, it won't affect qwen-code due to automatic exclusion. Use `.qwen-code/.env` files for qwen-code specific debug settings.

### React DevTools

To debug the CLI's React-based UI, you can use React DevTools. Ink, the library used for the CLI's interface, is compatible with React DevTools version 4.x.

1. **Start the Gemini CLI in development mode:**
1. **Start the Qwen Code application in development mode:**

```bash
DEV=true npm start

@@ -270,23 +285,10 @@ To debug the CLI's React-based UI, you can use React DevTools. Ink, the library
```

Your running CLI application should then connect to React DevTools.

## Sandboxing

### macOS Seatbelt

On macOS, `qwen` uses Seatbelt (`sandbox-exec`) under a `permissive-open` profile (see `packages/cli/src/utils/sandbox-macos-permissive-open.sb`) that restricts writes to the project folder but otherwise allows all other operations and outbound network traffic ("open") by default. You can switch to a `restrictive-closed` profile (see `packages/cli/src/utils/sandbox-macos-restrictive-closed.sb`) that declines all operations and outbound network traffic ("closed") by default by setting `SEATBELT_PROFILE=restrictive-closed` in your environment or `.env` file. Available built-in profiles are `{permissive,restrictive}-{open,closed,proxied}` (see below for proxied networking). You can also switch to a custom profile `SEATBELT_PROFILE=<profile>` if you also create a file `.qwen/sandbox-macos-<profile>.sb` under your project settings directory `.qwen`.
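The profile naming scheme in the paragraph above can be spelled out in a few lines of shell. This is an illustrative sketch only: `builtin_profiles` and `profile_source` are hypothetical helpers, not part of the CLI, and the lookup merely mirrors the rule stated above (six built-in names, otherwise a custom `.qwen/sandbox-macos-<profile>.sb` file).

```shell
#!/bin/sh
# Enumerate the built-in profile names described above:
# {permissive,restrictive}-{open,closed,proxied}
builtin_profiles() {
  for mode in permissive restrictive; do
    for net in open closed proxied; do
      echo "${mode}-${net}"
    done
  done
}

# Hypothetical check: a profile is either built in, or it needs a custom
# .qwen/sandbox-macos-<profile>.sb file in the project settings directory.
profile_source() {
  if builtin_profiles | grep -qxF "$1"; then
    echo "built-in"
  else
    echo ".qwen/sandbox-macos-$1.sb"
  fi
}

# Falls back to permissive-open, the default profile named in the text.
profile_source "${SEATBELT_PROFILE:-permissive-open}"
profile_source "my-team"
```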

### Container-based Sandboxing (All Platforms)

For stronger container-based sandboxing on macOS or other platforms, you can set `GEMINI_SANDBOX=true|docker|podman|<command>` in your environment or `.env` file. The specified command (or if `true` then either `docker` or `podman`) must be installed on the host machine. Once enabled, `npm run build:all` will build a minimal container ("sandbox") image and `npm start` will launch inside a fresh instance of that container. The first build can take 20-30s (mostly due to downloading of the base image) but after that both build and start overhead should be minimal. Default builds (`npm run build`) will not rebuild the sandbox.

Container-based sandboxing mounts the project directory (and system temp directory) with read-write access and is started/stopped/removed automatically as you start/stop Gemini CLI. Files created within the sandbox should be automatically mapped to your user/group on host machine. You can easily specify additional mounts, ports, or environment variables by setting `SANDBOX_{MOUNTS,PORTS,ENV}` as needed. You can also fully customize the sandbox for your projects by creating the files `.qwen/sandbox.Dockerfile` and/or `.qwen/sandbox.bashrc` under your project settings directory (`.qwen`) and running `qwen` with `BUILD_SANDBOX=1` to trigger building of your custom sandbox.

#### Proxied Networking

All sandboxing methods, including macOS Seatbelt using `*-proxied` profiles, support restricting outbound network traffic through a custom proxy server that can be specified as `GEMINI_SANDBOX_PROXY_COMMAND=<command>`, where `<command>` must start a proxy server that listens on `:::8877` for relevant requests. See `docs/examples/proxy-script.md` for a minimal proxy that only allows `HTTPS` connections to `example.com:443` (e.g. `curl https://example.com`) and declines all other requests. The proxy is started and stopped automatically alongside the sandbox.
> TBD

## Manual Publish

10

Makefile


@@ -1,9 +1,9 @@
# Makefile for gemini-cli
# Makefile for qwen-code

.PHONY: help install build build-sandbox build-all test lint format preflight clean start debug release run-npx create-alias

help:
@echo "Makefile for gemini-cli"
@echo "Makefile for qwen-code"
@echo ""
@echo "Usage:"
@echo " make install - Install npm dependencies"

@@ -14,11 +14,11 @@ help:
@echo " make format - Format the code"
@echo " make preflight - Run formatting, linting, and tests"
@echo " make clean - Remove generated files"
@echo " make start - Start the Gemini CLI"
@echo " make debug - Start the Gemini CLI in debug mode"
@echo " make start - Start the Qwen Code CLI"
@echo " make debug - Start the Qwen Code CLI in debug mode"
@echo ""
@echo " make run-npx - Run the CLI using npx (for testing the published package)"
@echo " make create-alias - Create a 'gemini' alias for your shell"
@echo " make create-alias - Create a 'qwen' alias for your shell"

install:
npm install

410

README.md


@@ -1,382 +1,152 @@

|

||||

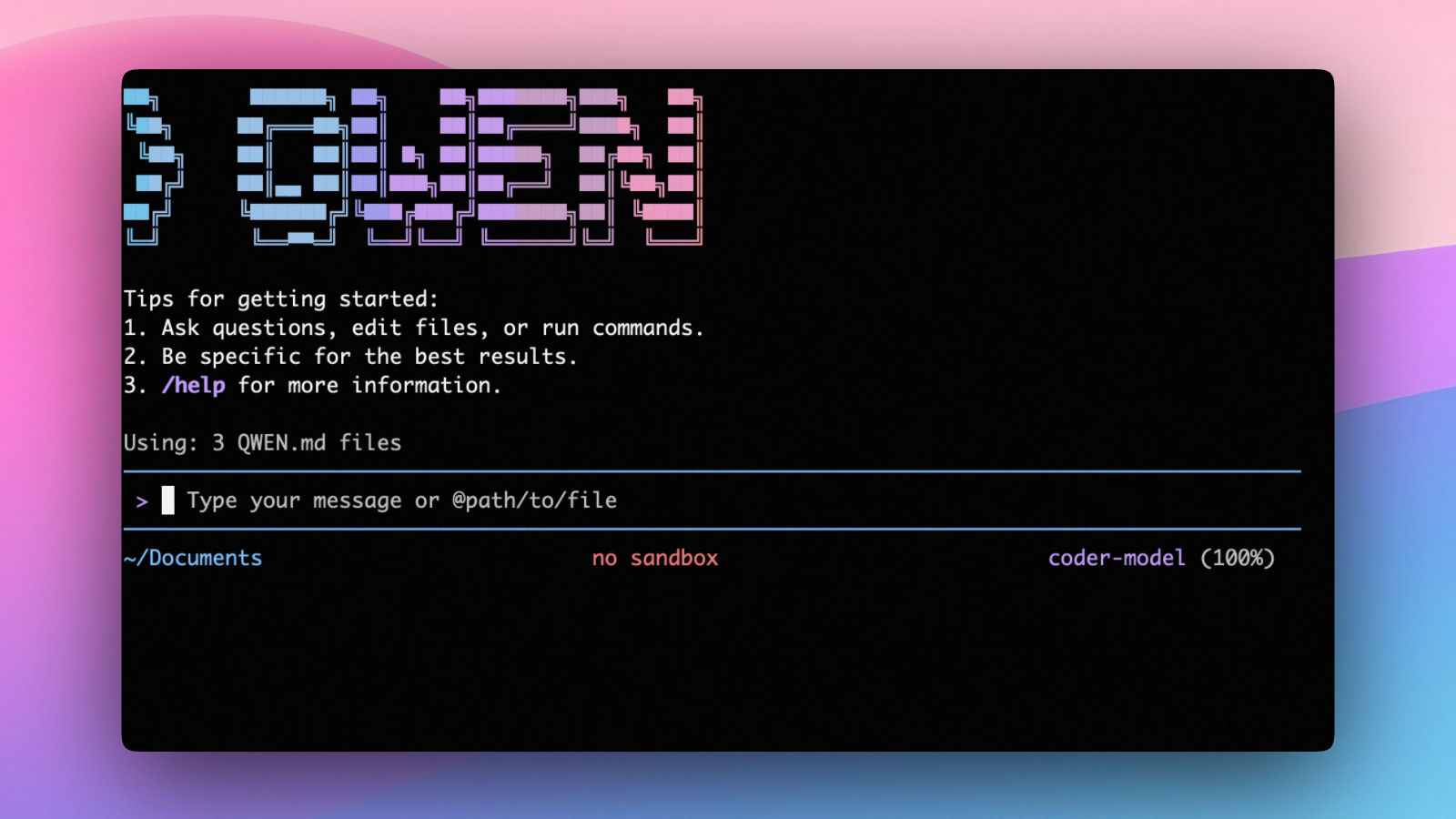

# Qwen Code

|

||||

|

||||

<div align="center">

|

||||

|

||||

|

||||

|

||||

[](https://www.npmjs.com/package/@qwen-code/qwen-code)

|

||||

[](./LICENSE)

|

||||

[](https://nodejs.org/)

|

||||

[](https://www.npmjs.com/package/@qwen-code/qwen-code)

|

||||

|

||||

**AI-powered command-line workflow tool for developers**

|

||||

**An open-source AI agent that lives in your terminal.**

|

||||

|

||||

[Installation](#installation) • [Quick Start](#quick-start) • [Features](#key-features) • [Documentation](./docs/) • [Contributing](./CONTRIBUTING.md)

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/zh/users/overview">中文</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/de/users/overview">Deutsch</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/fr/users/overview">français</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/ja/users/overview">日本語</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/ru/users/overview">Русский</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/pt-BR/users/overview">Português (Brasil)</a>

|

||||

|

||||

</div>

|

||||

|

||||

<div align="center">

|

||||

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/de/">Deutsch</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/fr">français</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/ja/">日本語</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/ru">Русский</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/zh/">中文</a>

|

||||

|

||||

</div>

|

||||

Qwen Code is an open-source AI agent for the terminal, optimized for [Qwen3-Coder](https://github.com/QwenLM/Qwen3-Coder). It helps you understand large codebases, automate tedious work, and ship faster.

Qwen Code is a powerful command-line AI workflow tool adapted from [**Gemini CLI**](https://github.com/google-gemini/gemini-cli), specifically optimized for [Qwen3-Coder](https://github.com/QwenLM/Qwen3-Coder) models. It enhances your development workflow with advanced code understanding, automated tasks, and intelligent assistance.

## 💡 Free Options Available
## Why Qwen Code?

Get started with Qwen Code at no cost using any of these free options:

### 🔥 Qwen OAuth (Recommended)

- **2,000 requests per day** with no token limits
- **60 requests per minute** rate limit
- Simply run `qwen` and authenticate with your qwen.ai account
- Automatic credential management and refresh
- Use `/auth` command to switch to Qwen OAuth if you have initialized with OpenAI compatible mode

### 🌏 Regional Free Tiers

- **Mainland China**: ModelScope offers **2,000 free API calls per day**
- **International**: OpenRouter provides **up to 1,000 free API calls per day** worldwide

For detailed setup instructions, see [Authorization](#authorization).

> [!WARNING]
> **Token Usage Notice**: Qwen Code may issue multiple API calls per cycle, resulting in higher token usage (similar to Claude Code). We're actively optimizing API efficiency.

## Key Features

- **Code Understanding & Editing** - Query and edit large codebases beyond traditional context window limits
- **Workflow Automation** - Automate operational tasks like handling pull requests and complex rebases
- **Enhanced Parser** - Adapted parser specifically optimized for Qwen-Coder models
- **Vision Model Support** - Automatically detect images in your input and seamlessly switch to vision-capable models for multimodal analysis
- **OpenAI-compatible, OAuth free tier**: use an OpenAI-compatible API, or sign in with Qwen OAuth to get 2,000 free requests/day.
- **Open-source, co-evolving**: both the framework and the Qwen3-Coder model are open-source—and they ship and evolve together.
- **Agentic workflow, feature-rich**: rich built-in tools (Skills, SubAgents, Plan Mode) for a full agentic workflow and a Claude Code-like experience.
- **Terminal-first, IDE-friendly**: built for developers who live in the command line, with optional integration for VS Code and Zed.

## Installation

### Prerequisites

Ensure you have [Node.js version 20](https://nodejs.org/en/download) or higher installed.
#### Prerequisites

```bash
# Node.js 20+
curl -qL https://www.npmjs.com/install.sh | sh
```

### Install from npm
#### NPM (recommended)

```bash
npm install -g @qwen-code/qwen-code@latest
qwen --version
```

### Install from source

```bash
git clone https://github.com/QwenLM/qwen-code.git
cd qwen-code
npm install
npm install -g .
```

### Install globally with Homebrew (macOS/Linux)
#### Homebrew (macOS, Linux)

```bash
brew install qwen-code
```

## VS Code Extension

In addition to the CLI tool, Qwen Code also provides a **VS Code extension** that brings AI-powered coding assistance directly into your editor with features like file system operations, native diffing, interactive chat, and more.

> 📦 The extension is currently in development. For installation, features, and development guide, see the [VS Code Extension README](./packages/vscode-ide-companion/README.md).

## Quick Start

```bash
# Start Qwen Code
# Start Qwen Code (interactive)
qwen

# Example commands
> Explain this codebase structure
> Help me refactor this function
> Generate unit tests for this module
# Then, in the session:
/help
/auth
```

### Session Management
On first use, you'll be prompted to sign in. You can run `/auth` anytime to switch authentication methods.

Control your token usage with configurable session limits to optimize costs and performance.
Example prompts:

#### Configure Session Token Limit

Create or edit `.qwen/settings.json` in your home directory:

```json
{
"sessionTokenLimit": 32000
}
```text
What does this project do?
Explain the codebase structure.
Help me refactor this function.
Generate unit tests for this module.
```

#### Session Commands

- **`/compress`** - Compress conversation history to continue within token limits
- **`/clear`** - Clear all conversation history and start fresh
- **`/stats`** - Check current token usage and limits

> 📝 **Note**: Session token limit applies to a single conversation, not cumulative API calls.

### Vision Model Configuration

Qwen Code includes intelligent vision model auto-switching that detects images in your input and can automatically switch to vision-capable models for multimodal analysis. **This feature is enabled by default** - when you include images in your queries, you'll see a dialog asking how you'd like to handle the vision model switch.

#### Skip the Switch Dialog (Optional)

If you don't want to see the interactive dialog each time, configure the default behavior in your `.qwen/settings.json`:

```json
{
"experimental": {
"vlmSwitchMode": "once"
}
}
```

**Available modes:**

- **`"once"`** - Switch to vision model for this query only, then revert
- **`"session"`** - Switch to vision model for the entire session
- **`"persist"`** - Continue with current model (no switching)
- **Not set** - Show interactive dialog each time (default)

#### Command Line Override

You can also set the behavior via command line:

```bash
# Switch once per query
qwen --vlm-switch-mode once

# Switch for entire session
qwen --vlm-switch-mode session

# Never switch automatically
qwen --vlm-switch-mode persist
```

#### Disable Vision Models (Optional)

To completely disable vision model support, add to your `.qwen/settings.json`:

```json
{
"experimental": {
"visionModelPreview": false
}
}
```

> 💡 **Tip**: In YOLO mode (`--yolo`), vision switching happens automatically without prompts when images are detected.

### Authorization

Choose your preferred authentication method based on your needs:

#### 1. Qwen OAuth (🚀 Recommended - Start in 30 seconds)

The easiest way to get started - completely free with generous quotas:

```bash
# Just run this command and follow the browser authentication
qwen
```

**What happens:**

1. **Instant Setup**: CLI opens your browser automatically
2. **One-Click Login**: Authenticate with your qwen.ai account
3. **Automatic Management**: Credentials cached locally for future use
4. **No Configuration**: Zero setup required - just start coding!

**Free Tier Benefits:**

- ✅ **2,000 requests/day** (no token counting needed)
- ✅ **60 requests/minute** rate limit
- ✅ **Automatic credential refresh**
- ✅ **Zero cost** for individual users
- ℹ️ **Note**: Model fallback may occur to maintain service quality

#### 2. OpenAI-Compatible API

Use API keys for OpenAI or other compatible providers:

**Configuration Methods:**

1. **Environment Variables**

```bash
export OPENAI_API_KEY="your_api_key_here"
export OPENAI_BASE_URL="your_api_endpoint"
export OPENAI_MODEL="your_model_choice"
```

2. **Project `.env` File**
Create a `.env` file in your project root:
```env
OPENAI_API_KEY=your_api_key_here
OPENAI_BASE_URL=your_api_endpoint
OPENAI_MODEL=your_model_choice
```
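Both configuration methods above set the same three variables. As a rough illustration, a pre-flight check before launching `qwen` could look like the sketch below. `check_openai_env` is a hypothetical helper, not part of Qwen Code, and treating the base URL and model as optional is an assumption taken from the newer README wording ("optionally a custom base URL / model").

```shell
#!/bin/sh
# Hypothetical pre-flight check; not part of Qwen Code itself.
check_openai_env() {
  if [ -z "${OPENAI_API_KEY:-}" ]; then
    echo "OPENAI_API_KEY is not set" >&2
    return 1
  fi
  # Base URL and model are treated as optional; report what would be used.
  echo "base_url=${OPENAI_BASE_URL:-<provider default>}"
  echo "model=${OPENAI_MODEL:-<provider default>}"
}

# Run in a subshell so the placeholder key does not leak into the caller.
( OPENAI_API_KEY="your_api_key_here"; check_openai_env )
```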

**API Provider Options**

> ⚠️ **Regional Notice:**
>
> - **Mainland China**: Use Alibaba Cloud Bailian or ModelScope
> - **International**: Use Alibaba Cloud ModelStudio or OpenRouter

<details>
<summary><b>🇨🇳 For Users in Mainland China</b></summary>
<summary>Click to watch a demo video</summary>

**Option 1: Alibaba Cloud Bailian** ([Apply for API Key](https://bailian.console.aliyun.com/))

```bash
export OPENAI_API_KEY="your_api_key_here"
export OPENAI_BASE_URL="https://dashscope.aliyuncs.com/compatible-mode/v1"
export OPENAI_MODEL="qwen3-coder-plus"
```

**Option 2: ModelScope (Free Tier)** ([Apply for API Key](https://modelscope.cn/docs/model-service/API-Inference/intro))

- ✅ **2,000 free API calls per day**
- ⚠️ Connect your Aliyun account to avoid authentication errors

```bash
export OPENAI_API_KEY="your_api_key_here"
export OPENAI_BASE_URL="https://api-inference.modelscope.cn/v1"
export OPENAI_MODEL="Qwen/Qwen3-Coder-480B-A35B-Instruct"
```
<video src="https://cloud.video.taobao.com/vod/HLfyppnCHplRV9Qhz2xSqeazHeRzYtG-EYJnHAqtzkQ.mp4" controls>
Your browser does not support the video tag.
</video>

</details>

<details>
<summary><b>🌍 For International Users</b></summary>
## Authentication

**Option 1: Alibaba Cloud ModelStudio** ([Apply for API Key](https://modelstudio.console.alibabacloud.com/))
Qwen Code supports two authentication methods:

- **Qwen OAuth (recommended & free)**: sign in with your `qwen.ai` account in a browser.
- **OpenAI-compatible API**: use `OPENAI_API_KEY` (and optionally a custom base URL / model).

#### Qwen OAuth (recommended)

Start `qwen`, then run:

```bash
export OPENAI_API_KEY="your_api_key_here"
export OPENAI_BASE_URL="https://dashscope-intl.aliyuncs.com/compatible-mode/v1"

||||

export OPENAI_MODEL="qwen3-coder-plus"

|

||||

/auth

|

||||

```

|

||||

|

||||

**Option 2: OpenRouter (Free Tier Available)** ([Apply for API Key](https://openrouter.ai/))

|

||||

Choose **Qwen OAuth** and complete the browser flow. Your credentials are cached locally so you usually won't need to log in again.

|

||||

|

||||

#### OpenAI-compatible API (API key)

|

||||

|

||||

Environment variables (recommended for CI / headless environments):

|

||||

|

||||

```bash

|

||||

export OPENAI_API_KEY="your_api_key_here"

|

||||

export OPENAI_BASE_URL="https://openrouter.ai/api/v1"

|

||||

export OPENAI_MODEL="qwen/qwen3-coder:free"

|

||||

export OPENAI_API_KEY="your-api-key-here"

|

||||

export OPENAI_BASE_URL="https://api.openai.com/v1" # optional

|

||||

export OPENAI_MODEL="gpt-4o" # optional

|

||||

```

|

||||

|

||||

</details>

|

||||

For details (including `.qwen/.env` loading and security notes), see the [authentication guide](https://qwenlm.github.io/qwen-code-docs/en/users/configuration/auth/).
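
As a rough illustration of which variables are required and which are optional, the sketch below reads the three variables in TypeScript. `readOpenAIEnv` and `OpenAIEnvConfig` are hypothetical names invented for this example, not part of Qwen Code; the sketch only assumes that `OPENAI_API_KEY` must be present and that the other two may be omitted.

```typescript
// Hypothetical helper for illustration only; not a Qwen Code API.
interface OpenAIEnvConfig {
  apiKey: string;
  baseUrl?: string; // from OPENAI_BASE_URL, optional
  model?: string; // from OPENAI_MODEL, optional
}

function readOpenAIEnv(
  env: Record<string, string | undefined>,
): OpenAIEnvConfig {
  const apiKey = env['OPENAI_API_KEY'];
  if (apiKey === undefined || apiKey === '') {
    throw new Error('OPENAI_API_KEY is not set');
  }

  const config: OpenAIEnvConfig = { apiKey };

  // Copy the optional values only when they are actually set.
  const baseUrl = env['OPENAI_BASE_URL'];
  if (baseUrl !== undefined && baseUrl !== '') {
    config.baseUrl = baseUrl;
  }
  const model = env['OPENAI_MODEL'];
  if (model !== undefined && model !== '') {
    config.model = model;
  }
  return config;
}
```

In a Node.js script this could be called as `readOpenAIEnv(process.env)` before launching `qwen` in a CI job, so a missing key fails early with a clear message.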

|

||||

|

||||

## Usage Examples

|

||||

## Usage

|

||||

|

||||

### 🔍 Explore Codebases

|

||||

As an open-source terminal agent, you can use Qwen Code in four primary ways:

|

||||

|

||||

1. Interactive mode (terminal UI)

|

||||

2. Headless mode (scripts, CI)

|

||||

3. IDE integration (VS Code, Zed)

|

||||

4. TypeScript SDK

|

||||

|

||||

#### Interactive mode

|

||||

|

||||

```bash

|

||||

cd your-project/

|

||||

qwen

|

||||

|

||||

# Architecture analysis

|

||||

> Describe the main pieces of this system's architecture

|

||||

> What are the key dependencies and how do they interact?

|

||||

> Find all API endpoints and their authentication methods

|

||||

```

|

||||

|

||||

### 💻 Code Development

|

||||

Run `qwen` in your project folder to launch the interactive terminal UI. Use `@` to reference local files (for example `@src/main.ts`).

|

||||

|

||||

#### Headless mode

|

||||

|

||||

```bash

|

||||

# Refactoring

|

||||

> Refactor this function to improve readability and performance

|

||||

> Convert this class to use dependency injection

|

||||

> Split this large module into smaller, focused components

|

||||

|

||||

# Code generation

|

||||

> Create a REST API endpoint for user management

|

||||

> Generate unit tests for the authentication module

|

||||

> Add error handling to all database operations

|

||||

cd your-project/

|

||||

qwen -p "your question"

|

||||

```

|

||||

|

||||

### 🔄 Automate Workflows

|

||||

Use `-p` to run Qwen Code without the interactive UI—ideal for scripts, automation, and CI/CD. Learn more: [Headless mode](https://qwenlm.github.io/qwen-code-docs/en/users/features/headless).

|

||||

|

||||

```bash

|

||||

# Git automation

|

||||

> Analyze git commits from the last 7 days, grouped by feature

|

||||

> Create a changelog from recent commits

|

||||

> Find all TODO comments and create GitHub issues

|

||||

#### IDE integration

|

||||

|

||||

# File operations

|

||||

> Convert all images in this directory to PNG format

|

||||

> Rename all test files to follow the *.test.ts pattern

|

||||

> Find and remove all console.log statements

|

||||

```

|

||||

Use Qwen Code inside your editor (VS Code and Zed):

|

||||

|

||||

### 🐛 Debugging & Analysis

|

||||

- [Use in VS Code](https://qwenlm.github.io/qwen-code-docs/en/users/integration-vscode/)

|

||||

- [Use in Zed](https://qwenlm.github.io/qwen-code-docs/en/users/integration-zed/)

|

||||

|

||||

```bash

|

||||

# Performance analysis

|

||||

> Identify performance bottlenecks in this React component

|

||||

> Find all N+1 query problems in the codebase

|

||||

#### TypeScript SDK

|

||||

|

||||

# Security audit

|

||||

> Check for potential SQL injection vulnerabilities

|

||||

> Find all hardcoded credentials or API keys

|

||||

```

|

||||

Build on top of Qwen Code with the TypeScript SDK:

|

||||

|

||||

## Popular Tasks

|

||||

|

||||

### 📚 Understand New Codebases

|

||||

|

||||

```text

|

||||

> What are the core business logic components?

|

||||

> What security mechanisms are in place?

|

||||

> How does the data flow through the system?

|

||||

> What are the main design patterns used?

|

||||

> Generate a dependency graph for this module

|

||||

```

|

||||

|

||||

### 🔨 Code Refactoring & Optimization

|

||||

|

||||

```text

|

||||

> What parts of this module can be optimized?

|

||||

> Help me refactor this class to follow SOLID principles

|

||||

> Add proper error handling and logging

|

||||

> Convert callbacks to async/await pattern

|

||||

> Implement caching for expensive operations

|

||||

```

|

||||

|

||||

### 📝 Documentation & Testing

|

||||

|

||||

```text

|

||||

> Generate comprehensive JSDoc comments for all public APIs

|

||||

> Write unit tests with edge cases for this component

|

||||

> Create API documentation in OpenAPI format

|

||||

> Add inline comments explaining complex algorithms

|

||||

> Generate a README for this module

|

||||

```

|

||||

|

||||

### 🚀 Development Acceleration

|

||||

|

||||

```text

|

||||

> Set up a new Express server with authentication

|

||||

> Create a React component with TypeScript and tests

|

||||

> Implement a rate limiter middleware

|

||||

> Add database migrations for new schema

|

||||

> Configure CI/CD pipeline for this project

|

||||

```

|

||||

- [Use the Qwen Code SDK](./packages/sdk-typescript/README.md)

|

||||

|

||||

## Commands & Shortcuts

|

||||

|

||||

@@ -386,6 +156,7 @@ qwen

|

||||

- `/clear` - Clear conversation history

|

||||

- `/compress` - Compress history to save tokens

|

||||

- `/stats` - Show current session information

|

||||

- `/bug` - Submit a bug report

|

||||

- `/exit` or `/quit` - Exit Qwen Code

|

||||

|

||||

### Keyboard Shortcuts

|

||||

@@ -394,6 +165,19 @@ qwen

|

||||

- `Ctrl+D` - Exit (on empty line)

|

||||

- `Up/Down` - Navigate command history

|

||||

|

||||

> Learn more about [Commands](https://qwenlm.github.io/qwen-code-docs/en/users/features/commands/)

|

||||

>

|

||||

> **Tip**: In YOLO mode (`--yolo`), vision switching happens automatically without prompts when images are detected. Learn more about [Approval Mode](https://qwenlm.github.io/qwen-code-docs/en/users/features/approval-mode/)

|

||||

|

||||

## Configuration

|

||||

|

||||

Qwen Code can be configured via `settings.json`, environment variables, and CLI flags.

|

||||

|

||||

- **User settings**: `~/.qwen/settings.json`

|

||||

- **Project settings**: `.qwen/settings.json`

|

||||

|

||||

See [settings](https://qwenlm.github.io/qwen-code-docs/en/users/configuration/settings/) for available options and precedence.

|

||||

|

||||

## Benchmark Results

|

||||

|

||||

### Terminal-Bench Performance

|

||||

@@ -403,24 +187,18 @@ qwen

|

||||

| Qwen Code | Qwen3-Coder-480A35 | 37.5% |

|

||||

| Qwen Code | Qwen3-Coder-30BA3B | 31.3% |

|

||||

|

||||

## Development & Contributing

|

||||

## Ecosystem

|

||||

|

||||

See [CONTRIBUTING.md](./CONTRIBUTING.md) to learn how to contribute to the project.

|

||||

Looking for a graphical interface?

|

||||

|

||||

For detailed authentication setup, see the [authentication guide](./docs/cli/authentication.md).

|

||||

- [**Gemini CLI Desktop**](https://github.com/Piebald-AI/gemini-cli-desktop) A cross-platform desktop/web/mobile UI for Qwen Code

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

If you encounter issues, check the [troubleshooting guide](docs/troubleshooting.md).

|

||||

If you encounter issues, check the [troubleshooting guide](https://qwenlm.github.io/qwen-code-docs/en/users/support/troubleshooting/).

|

||||

|

||||

To report a bug from within the CLI, run `/bug` and include a short title and repro steps.

|

||||

|

||||

## Acknowledgments

|

||||

|

||||

This project is based on [Google Gemini CLI](https://github.com/google-gemini/gemini-cli). We acknowledge and appreciate the excellent work of the Gemini CLI team. Our main contribution focuses on parser-level adaptations to better support Qwen-Coder models.

|

||||

|

||||

## License

|

||||

|

||||

[LICENSE](./LICENSE)

|

||||

|

||||

## Star History

|

||||

|

||||

[](https://www.star-history.com/#QwenLM/qwen-code&Date)

|

||||

|

||||

@@ -43,6 +43,7 @@ Qwen Code uses JSON settings files for persistent configuration. There are four

|

||||

In addition to a project settings file, a project's `.qwen` directory can contain other project-specific files related to Qwen Code's operation, such as:

|

||||

|

||||

- [Custom sandbox profiles](../features/sandbox) (e.g. `.qwen/sandbox-macos-custom.sb`, `.qwen/sandbox.Dockerfile`).

|

||||

- [Agent Skills](../features/skills) (experimental) under `.qwen/skills/` (each Skill is a directory containing a `SKILL.md`).

|

||||

|

||||

### Available settings in `settings.json`

|

||||

|

||||

@@ -380,6 +381,8 @@ Arguments passed directly when running the CLI can override other configurations

|

||||

| `--telemetry-otlp-protocol` | | Sets the OTLP protocol for telemetry (`grpc` or `http`). | | Defaults to `grpc`. See [telemetry](../../developers/development/telemetry) for more information. |
| `--telemetry-log-prompts` | | Enables logging of prompts for telemetry. | | See [telemetry](../../developers/development/telemetry) for more information. |
| `--checkpointing` | | Enables [checkpointing](../features/checkpointing). | | |
| `--experimental-acp` | | Enables ACP mode (Agent Client Protocol). Useful for IDE/editor integrations like [Zed](../integration-zed). | | Experimental. |
| `--experimental-skills` | | Enables experimental [Agent Skills](../features/skills) (registers the `skill` tool and loads Skills from `.qwen/skills/` and `~/.qwen/skills/`). | | Experimental. |
| `--extensions` | `-e` | Specifies a list of extensions to use for the session. | Extension names | If not provided, all available extensions are used. Use the special term `qwen -e none` to disable all extensions. Example: `qwen -e my-extension -e my-other-extension` |
| `--list-extensions` | `-l` | Lists all available extensions and exits. | | |
| `--proxy` | | Sets the proxy for the CLI. | Proxy URL | Example: `--proxy http://localhost:7890`. |

|

||||

|

||||

@@ -1,6 +1,7 @@

|

||||

export default {

|

||||

commands: 'Commands',

|

||||

'sub-agents': 'SubAgents',

|

||||

skills: 'Skills (Experimental)',

|

||||

headless: 'Headless Mode',

|

||||

checkpointing: {

|

||||

display: 'hidden',

|

||||

|

||||

@@ -189,19 +189,20 @@ qwen -p "Write code" --output-format stream-json --include-partial-messages | jq

|

||||

|

||||

Key command-line options for headless usage:

|

||||

|

||||

| Option | Description | Example |
| ---------------------------- | --------------------------------------------------- | ------------------------------------------------------------------------ |
| `--prompt`, `-p` | Run in headless mode | `qwen -p "query"` |
| `--output-format`, `-o` | Specify output format (text, json, stream-json) | `qwen -p "query" --output-format json` |
| `--input-format` | Specify input format (text, stream-json) | `qwen --input-format text --output-format stream-json` |
| `--include-partial-messages` | Include partial messages in stream-json output | `qwen -p "query" --output-format stream-json --include-partial-messages` |
| `--debug`, `-d` | Enable debug mode | `qwen -p "query" --debug` |
| `--all-files`, `-a` | Include all files in context | `qwen -p "query" --all-files` |
| `--include-directories` | Include additional directories | `qwen -p "query" --include-directories src,docs` |
| `--yolo`, `-y` | Auto-approve all actions | `qwen -p "query" --yolo` |
| `--approval-mode` | Set approval mode | `qwen -p "query" --approval-mode auto_edit` |
| `--continue` | Resume the most recent session for this project | `qwen --continue -p "Pick up where we left off"` |
| `--resume [sessionId]` | Resume a specific session (or choose interactively) | `qwen --resume 123e... -p "Finish the refactor"` |

|

||||

| Option | Description | Example |
| ---------------------------- | ------------------------------------------------------- | ------------------------------------------------------------------------ |
| `--prompt`, `-p` | Run in headless mode | `qwen -p "query"` |
| `--output-format`, `-o` | Specify output format (text, json, stream-json) | `qwen -p "query" --output-format json` |
| `--input-format` | Specify input format (text, stream-json) | `qwen --input-format text --output-format stream-json` |
| `--include-partial-messages` | Include partial messages in stream-json output | `qwen -p "query" --output-format stream-json --include-partial-messages` |
| `--debug`, `-d` | Enable debug mode | `qwen -p "query" --debug` |
| `--all-files`, `-a` | Include all files in context | `qwen -p "query" --all-files` |
| `--include-directories` | Include additional directories | `qwen -p "query" --include-directories src,docs` |
| `--yolo`, `-y` | Auto-approve all actions | `qwen -p "query" --yolo` |
| `--approval-mode` | Set approval mode | `qwen -p "query" --approval-mode auto_edit` |
| `--continue` | Resume the most recent session for this project | `qwen --continue -p "Pick up where we left off"` |
| `--resume [sessionId]` | Resume a specific session (or choose interactively) | `qwen --resume 123e... -p "Finish the refactor"` |
| `--experimental-skills` | Enable experimental Skills (registers the `skill` tool) | `qwen --experimental-skills -p "What Skills are available?"` |

|

||||

|

||||

For complete details on all available configuration options, settings files, and environment variables, see the [Configuration Guide](../configuration/settings).
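
When headless runs are driven from a script, it can help to assemble the argument list in one place. The following is a minimal sketch, assuming only flags listed in the options table above; `HeadlessOptions` and `buildHeadlessArgs` are hypothetical names for this example, not part of Qwen Code or its SDK. Rejecting `--include-partial-messages` without `stream-json` is a choice made by this sketch, based on the table's description of that flag.

```typescript
// Hypothetical helper for illustration only; not a Qwen Code API.
interface HeadlessOptions {
  prompt: string;
  outputFormat?: 'text' | 'json' | 'stream-json';
  includePartialMessages?: boolean;
  approvalMode?: string; // e.g. 'auto_edit', as in the table above
  yolo?: boolean;
  experimentalSkills?: boolean;
}

function buildHeadlessArgs(opts: HeadlessOptions): string[] {
  // `-p` switches the CLI into headless mode.
  const args: string[] = ['-p', opts.prompt];

  if (opts.outputFormat !== undefined) {
    args.push('--output-format', opts.outputFormat);
  }
  if (opts.includePartialMessages === true) {
    // Sketch-level guard: the flag is documented for stream-json output.
    if (opts.outputFormat !== 'stream-json') {
      throw new Error(
        '--include-partial-messages applies to stream-json output',
      );
    }
    args.push('--include-partial-messages');
  }
  if (opts.approvalMode !== undefined) {
    args.push('--approval-mode', opts.approvalMode);
  }
  if (opts.yolo === true) {
    args.push('--yolo');
  }
  if (opts.experimentalSkills === true) {
    args.push('--experimental-skills');
  }
  return args;
}
```

The resulting array can be passed as the argument list to Node's `child_process.spawn` with `qwen` as the command, which avoids shell-quoting problems in the prompt text.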

|

||||

|

||||

|

||||

282

docs/users/features/skills.md

Normal file

@@ -0,0 +1,282 @@

|

||||

# Agent Skills (Experimental)

|

||||

|

||||

> Create, manage, and share Skills to extend Qwen Code’s capabilities.

|

||||

|

||||

This guide shows you how to create, use, and manage Agent Skills in **Qwen Code**. Skills are modular capabilities that extend the model’s effectiveness through organized folders containing instructions (and optionally scripts/resources).

|

||||

|

||||

> [!note]

|

||||

>

|

||||

> Skills are currently **experimental** and must be enabled with `--experimental-skills`.

|

||||

|

||||

## Prerequisites

|

||||

|

||||

- Qwen Code (recent version)

|

||||

- Run with the experimental flag enabled:

|

||||

|

||||

```bash

|

||||

qwen --experimental-skills

|

||||

```

|

||||

|

||||

- Basic familiarity with Qwen Code ([Quickstart](../quickstart.md))

|

||||

|

||||

## What are Agent Skills?

|

||||

|

||||

Agent Skills package expertise into discoverable capabilities. Each Skill consists of a `SKILL.md` file with instructions that the model can load when relevant, plus optional supporting files like scripts and templates.

|

||||

|

||||

### How Skills are invoked

|

||||

|

||||

Skills are **model-invoked** — the model autonomously decides when to use them based on your request and the Skill’s description. This is different from slash commands, which are **user-invoked** (you explicitly type `/command`).

|

||||

|

||||

### Benefits

|

||||

|

||||

- Extend Qwen Code for your workflows

|

||||

- Share expertise across your team via git

|

||||

- Reduce repetitive prompting

|

||||

- Compose multiple Skills for complex tasks

|

||||

|

||||

## Create a Skill

|

||||

|

||||

Skills are stored as directories containing a `SKILL.md` file.

|

||||

|

||||

### Personal Skills

|

||||

|

||||

Personal Skills are available across all your projects. Store them in `~/.qwen/skills/`:

|

||||

|

||||

```bash

|

||||

mkdir -p ~/.qwen/skills/my-skill-name

|

||||

```

|

||||

|

||||

Use personal Skills for:

|

||||

|

||||

- Your individual workflows and preferences

|

||||

- Experimental Skills you’re developing

|

||||

- Personal productivity helpers

|

||||

|

||||

### Project Skills

|

||||

|

||||

Project Skills are shared with your team. Store them in `.qwen/skills/` within your project:

|

||||

|

||||

```bash

|

||||

mkdir -p .qwen/skills/my-skill-name

|

||||

```

|

||||

|

||||

Use project Skills for:

|

||||

|

||||

- Team workflows and conventions

|

||||

- Project-specific expertise

|

||||

- Shared utilities and scripts

|

||||

|

||||

Project Skills can be checked into git and automatically become available to teammates.

|

||||

|

||||

## Write `SKILL.md`

|

||||

|

||||

Create a `SKILL.md` file with YAML frontmatter and Markdown content:

|

||||

|

||||

```yaml
---
name: your-skill-name
description: Brief description of what this Skill does and when to use it
---

# Your Skill Name

## Instructions
Provide clear, step-by-step guidance for Qwen Code.

## Examples
Show concrete examples of using this Skill.
```

|

||||

|

||||

### Field requirements

|

||||

|

||||

Qwen Code currently validates that:

|

||||

|

||||

- `name` is a non-empty string

|

||||

- `description` is a non-empty string

|

||||

|

||||

Recommended conventions (not strictly enforced yet):

|

||||

|

||||

- Use lowercase letters, numbers, and hyphens in `name`

|

||||

- Make `description` specific: include both **what** the Skill does and **when** to use it (key words users will naturally mention)
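
The rules above can be sketched as a small check that separates the two validated requirements from the recommended naming convention. This is an illustration only: `checkSkillMetadata`, `SkillCheck`, and the regular expression are assumptions made for this example and do not describe how Qwen Code implements its validation. Treating a whitespace-only value as empty is also an assumption.

```typescript
// Hypothetical helper for illustration only; not Qwen Code's validator.
interface SkillMetadata {
  name?: unknown;
  description?: unknown;
}

interface SkillCheck {
  errors: string[]; // violations of the two validated rules
  warnings: string[]; // departures from the recommended conventions
}

// Assumed pattern for "lowercase letters, numbers, and hyphens".
const NAME_CONVENTION = /^[a-z0-9-]+$/;

function checkSkillMetadata(meta: SkillMetadata): SkillCheck {
  const errors: string[] = [];
  const warnings: string[] = [];

  if (typeof meta.name !== 'string' || meta.name.trim() === '') {
    errors.push('name must be a non-empty string');
  } else if (!NAME_CONVENTION.test(meta.name)) {
    // Convention only: reported as a warning, not an error.
    warnings.push('name should use lowercase letters, numbers, and hyphens');
  }

  if (
    typeof meta.description !== 'string' ||
    meta.description.trim() === ''
  ) {
    errors.push('description must be a non-empty string');
  }

  return { errors, warnings };
}
```

A name such as `My Skill` would pass the validated rules in this sketch but produce a warning, which mirrors the "not strictly enforced yet" wording above.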

|

||||

|

||||

## Add supporting files

|

||||

|

||||

Create additional files alongside `SKILL.md`:

|

||||

|

||||

```text
my-skill/
├── SKILL.md (required)
├── reference.md (optional documentation)
├── examples.md (optional examples)
├── scripts/
│   └── helper.py (optional utility)
└── templates/
    └── template.txt (optional template)
```

|

||||

|

||||

Reference these files from `SKILL.md`:

|

||||

|

||||

````markdown
For advanced usage, see [reference.md](reference.md).

Run the helper script:

```bash
python scripts/helper.py input.txt
```
````

|

||||

|

||||

## View available Skills

|

||||

|

||||

When `--experimental-skills` is enabled, Qwen Code discovers Skills from:

|

||||

|

||||

- Personal Skills: `~/.qwen/skills/`

|

||||

- Project Skills: `.qwen/skills/`
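
To make the two locations concrete, here is a minimal sketch that resolves both directories and lists every subdirectory containing a `SKILL.md`. The function names `skillRoots` and `listSkills` are hypothetical and exist only in this example; Qwen Code's own discovery logic may differ (for instance in how it handles name conflicts, which this sketch does not address).

```typescript
import { existsSync, readdirSync } from 'node:fs';
import * as os from 'node:os';
import * as path from 'node:path';

// Hypothetical helpers for illustration only; not Qwen Code APIs.

// Resolve the personal and project Skill directories named above.
function skillRoots(
  projectDir: string,
  homeDir: string = os.homedir(),
): { personal: string; project: string } {
  return {
    personal: path.join(homeDir, '.qwen', 'skills'),
    project: path.join(projectDir, '.qwen', 'skills'),
  };
}

// List subdirectories of `skillsRoot` that contain a SKILL.md file.
function listSkills(skillsRoot: string): string[] {
  if (!existsSync(skillsRoot)) {
    return [];
  }
  return readdirSync(skillsRoot, { withFileTypes: true })
    .filter((entry) => entry.isDirectory())
    .map((entry) => entry.name)
    .filter((name) => existsSync(path.join(skillsRoot, name, 'SKILL.md')))
    .sort();
}
```

Calling `listSkills(skillRoots(process.cwd()).project)` from a project root would return the names of the project Skills that have the required file in place.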

|

||||

|

||||

To view available Skills, ask Qwen Code directly:

|

||||

|

||||

```text

|

||||

What Skills are available?

|

||||

```

|

||||

|

||||

Or inspect the filesystem:

|

||||

|

||||

```bash

|

||||

# List personal Skills

|

||||

ls ~/.qwen/skills/

|

||||

|

||||

# List project Skills (if in a project directory)

|

||||

ls .qwen/skills/

|

||||

|

||||

# View a specific Skill’s content

|

||||

cat ~/.qwen/skills/my-skill/SKILL.md

|

||||

```

|

||||

|

||||

## Test a Skill

|

||||

|

||||

After creating a Skill, test it by asking questions that match your description.

|

||||

|

||||

Example: if your description mentions “PDF files”:

|

||||

|

||||

```text

|

||||

Can you help me extract text from this PDF?

|

||||

```

|

||||

|

||||

The model autonomously decides to use your Skill if it matches the request — you don’t need to explicitly invoke it.

|

||||

|

||||

## Debug a Skill

|

||||

|

||||

If Qwen Code doesn’t use your Skill, check these common issues:

|

||||

|

||||

### Make the description specific

|

||||

|

||||

Too vague:

|

||||

|

||||

```yaml

|

||||

description: Helps with documents

|

||||

```

|

||||

|

||||

Specific:

|

||||

|

||||

```yaml

|

||||

description: Extract text and tables from PDF files, fill forms, merge documents. Use when working with PDFs, forms, or document extraction.

|

||||

```

|

||||

|

||||

### Verify file path

|

||||

|

||||

- Personal Skills: `~/.qwen/skills/<skill-name>/SKILL.md`

|

||||

- Project Skills: `.qwen/skills/<skill-name>/SKILL.md`

|

||||

|

||||

```bash

|

||||

# Personal

|

||||

ls ~/.qwen/skills/my-skill/SKILL.md

|

||||

|

||||

# Project

|

||||

ls .qwen/skills/my-skill/SKILL.md

|

||||

```

|

||||

|

||||

### Check YAML syntax

|

||||

|

||||

Invalid YAML prevents the Skill metadata from loading correctly.

|

||||

|

||||

```bash
head -n 15 SKILL.md
```

|

||||

|

||||

Ensure:

|

||||

|

||||

- Opening `---` on line 1

|

||||

- Closing `---` before Markdown content

|

||||

- Valid YAML syntax (no tabs, correct indentation)
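
The checklist above can be approximated with a short structural check. This is a sketch under stated assumptions: `checkFrontmatter` is a hypothetical function for this example, it only looks at the delimiters and tab characters, and it does not parse YAML, so a file that passes may still contain invalid YAML.

```typescript
// Hypothetical helper for illustration only; not a Qwen Code API.
// Returns a list of structural problems found in a SKILL.md file's text.
function checkFrontmatter(text: string): string[] {
  const problems: string[] = [];
  const isDelimiter = (line: string): boolean => line.trimEnd() === '---';
  const lines = text.split(/\r?\n/);

  // Opening delimiter must be the very first line.
  if (!isDelimiter(lines[0] ?? '')) {
    problems.push('opening --- must be on line 1');
    return problems;
  }

  // Closing delimiter must appear somewhere after line 1.
  const closing = lines.findIndex((line, i) => i > 0 && isDelimiter(line));
  if (closing === -1) {
    problems.push('closing --- not found before Markdown content');
    return problems;
  }

  // Tabs inside the frontmatter are a common cause of YAML errors.
  lines.slice(1, closing).forEach((line, offset) => {
    if (line.includes('\t')) {
      problems.push(`tab character on line ${offset + 2}`);
    }
  });
  return problems;
}
```

An empty result means only that the frontmatter is delimited correctly and tab-free; running `qwen --experimental-skills --debug` remains the way to see actual loading errors.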

|

||||

|

||||

### View errors

|

||||

|

||||

Run Qwen Code with debug mode to see Skill loading errors:

|

||||

|

||||

```bash

|

||||

qwen --experimental-skills --debug

|

||||

```

|

||||

|

||||

## Share Skills with your team

|

||||

|

||||

You can share Skills through project repositories:

|

||||

|

||||

1. Add the Skill under `.qwen/skills/`

|

||||

2. Commit and push

|

||||

3. Teammates pull the changes and run with `--experimental-skills`

|

||||

|

||||

```bash

|

||||

git add .qwen/skills/

|

||||

git commit -m "Add team Skill for PDF processing"

|

||||

git push

|

||||

```

|

||||

|

||||

## Update a Skill

|

||||

|

||||

Edit `SKILL.md` directly:

|

||||

|

||||

```bash

|

||||

# Personal Skill

|

||||

code ~/.qwen/skills/my-skill/SKILL.md

|

||||

|

||||

# Project Skill

|

||||

code .qwen/skills/my-skill/SKILL.md

|

||||

```

|

||||

|

||||

Changes take effect the next time you start Qwen Code. If Qwen Code is already running, restart it to load the updates.

|

||||

|

||||

## Remove a Skill

|

||||

|

||||

Delete the Skill directory:

|

||||

|

||||

```bash
# Personal
rm -rf ~/.qwen/skills/my-skill

# Project
rm -rf .qwen/skills/my-skill
# -a stages the deletion of tracked files before committing
git commit -a -m "Remove unused Skill"
```

|

||||

|

||||

## Best practices

|

||||

|

||||

### Keep Skills focused

|

||||

|

||||

One Skill should address one capability:

|

||||

|

||||

- Focused: “PDF form filling”, “Excel analysis”, “Git commit messages”

|

||||

- Too broad: “Document processing” (split into smaller Skills)

|

||||

|

||||

### Write clear descriptions

|

||||

|

||||

Help the model discover when to use Skills by including specific triggers:

|

||||

|

||||

```yaml

|

||||

description: Analyze Excel spreadsheets, create pivot tables, and generate charts. Use when working with Excel files, spreadsheets, or .xlsx data.

|

||||

```

|

||||

|

||||

### Test with your team

|

||||

|

||||

- Does the Skill activate when expected?

|

||||

- Are the instructions clear?

|

||||

- Are there missing examples or edge cases?

|

||||

@@ -1,4 +1,6 @@

|

||||

# Qwen Code overview

|

||||

[](https://npm-compare.com/@qwen-code/qwen-code)

|

||||

[](https://www.npmjs.com/package/@qwen-code/qwen-code)

|

||||

|

||||

> Learn about Qwen Code, Qwen's agentic coding tool that lives in your terminal and helps you turn ideas into code faster than ever before.

|

||||

|

||||

@@ -46,7 +48,7 @@ You'll be prompted to log in on first use. That's it! [Continue with Quickstart

|

||||

|

||||

> [!note]

|

||||

>

|

||||

> **New VS Code Extension (Beta)**: Prefer a graphical interface? Our new **VS Code extension** provides an easy-to-use native IDE experience without requiring terminal familiarity. Simply install from the marketplace and start coding with Qwen Code directly in your sidebar. You can search for **Qwen Code** in the VS Code Marketplace and download it.

|

||||

> **New VS Code Extension (Beta)**: Prefer a graphical interface? Our new **VS Code extension** provides an easy-to-use native IDE experience without requiring terminal familiarity. Simply install from the marketplace and start coding with Qwen Code directly in your sidebar. Download and install the [Qwen Code Companion](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion) now.

|

||||

|

||||

## What Qwen Code does for you

|

||||

|

||||

|

||||

@@ -5,8 +5,6 @@

|

||||

*/

|

||||

|

||||

import { describe, it, expect } from 'vitest';

|

||||

import { existsSync } from 'node:fs';

|

||||

import * as path from 'node:path';

|

||||

import { TestRig, printDebugInfo, validateModelOutput } from './test-helper.js';

|

||||

|

||||

describe('file-system', () => {

|

||||

@@ -202,8 +200,8 @@ describe('file-system', () => {

|

||||

const readAttempt = toolLogs.find(

|

||||

(log) => log.toolRequest.name === 'read_file',

|

||||

);

|

||||

const writeAttempt = toolLogs.find(

|

||||

(log) => log.toolRequest.name === 'write_file',

|

||||