mirror of https://github.com/QwenLM/qwen-code.git
synced 2026-01-21 08:16:21 +00:00

Compare commits: feat/multi ... mingholy/f (1 commit)

| Author | SHA1 | Date |
|---|---|---|
| | 9457870c93 | |

.gitignore (vendored): 2 lines changed

@@ -12,7 +12,7 @@

!.gemini/config.yaml
!.gemini/commands/

# Note: .qwen-clipboard/ is NOT in gitignore so Gemini can access pasted images
# Note: .gemini-clipboard/ is NOT in gitignore so Gemini can access pasted images

# Dependency directory
node_modules

@@ -5,13 +5,11 @@ Qwen Code supports two authentication methods. Pick the one that matches how you

|

||||

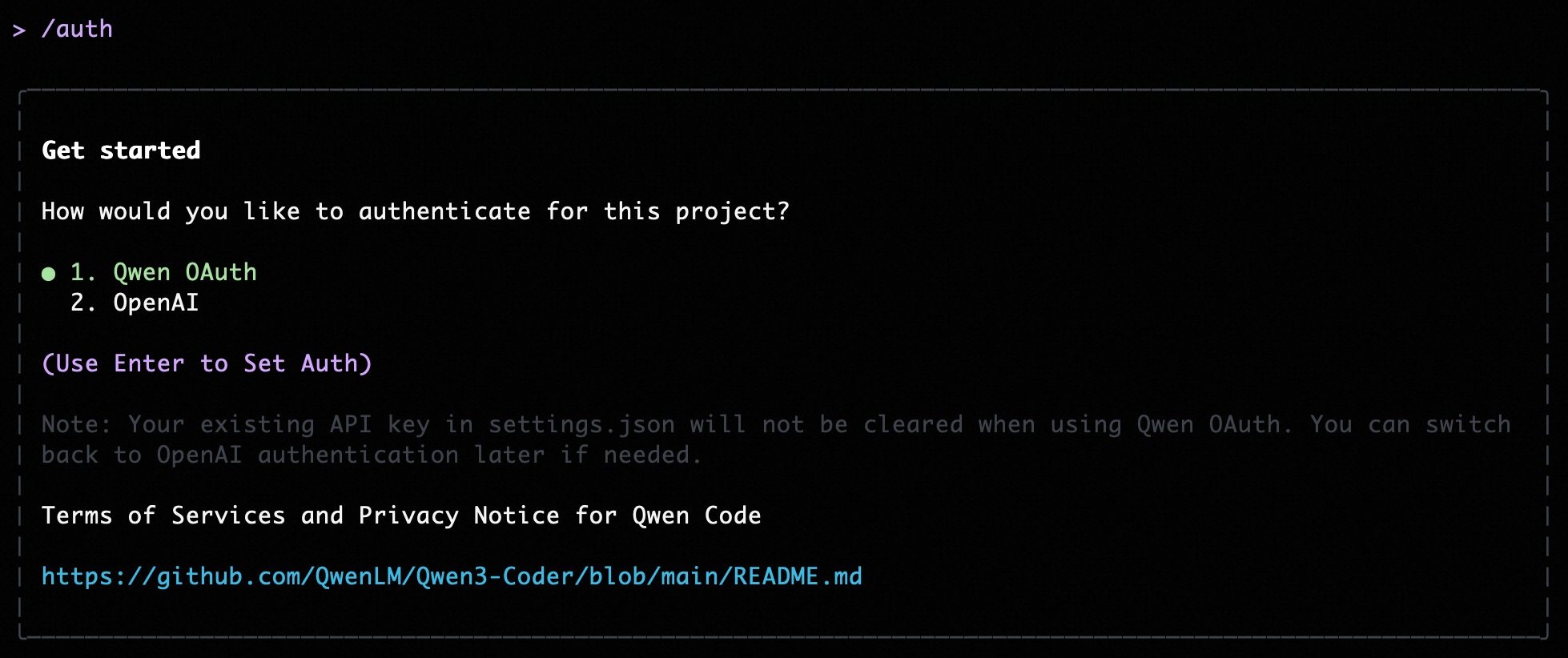

- **Qwen OAuth (recommended)**: sign in with your `qwen.ai` account in a browser.

|

||||

- **OpenAI-compatible API**: use an API key (OpenAI or any OpenAI-compatible provider / endpoint).

|

||||

|

||||

|

||||

|

||||

## Option 1: Qwen OAuth (recommended & free) 👍

|

||||

|

||||

Use this if you want the simplest setup and you're using Qwen models.

|

||||

Use this if you want the simplest setup and you’re using Qwen models.

|

||||

|

||||

- **How it works**: on first start, Qwen Code opens a browser login page. After you finish, credentials are cached locally so you usually won't need to log in again.

|

||||

- **How it works**: on first start, Qwen Code opens a browser login page. After you finish, credentials are cached locally so you usually won’t need to log in again.

|

||||

- **Requirements**: a `qwen.ai` account + internet access (at least for the first login).

|

||||

- **Benefits**: no API key management, automatic credential refresh.

|

||||

- **Cost & quota**: free, with a quota of **60 requests/minute** and **2,000 requests/day**.

|

||||
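The free-tier numbers above lend themselves to a quick arithmetic check. The sketch below is hypothetical (`withinFreeQuota` is not part of Qwen Code). It models only the documented 60 requests/minute and 2,000 requests/day, treats "per day" as a rolling 24-hour window (an assumption), and leaves the server as the authority on quota.

```typescript
// Hypothetical client-side check against the documented Qwen OAuth free quota.
// Treating "per day" as a rolling 24-hour window is an assumption.
const PER_MINUTE_LIMIT = 60;
const PER_DAY_LIMIT = 2000;
const MINUTE_MS = 60 * 1000;
const DAY_MS = 24 * 60 * 60 * 1000;

// True if one more request at `nowMs` would stay inside both windows,
// given the timestamps (in ms) of requests already sent.
function withinFreeQuota(sentAtMs: number[], nowMs: number): boolean {
  const lastMinute = sentAtMs.filter((t) => nowMs - t < MINUTE_MS).length;
  const lastDay = sentAtMs.filter((t) => nowMs - t < DAY_MS).length;
  return lastMinute < PER_MINUTE_LIMIT && lastDay < PER_DAY_LIMIT;
}

// 60 requests in the last 30 seconds: the next one would exceed the per-minute quota.
const now = Date.now();
const recent = Array.from({ length: 60 }, (_, i) => now - i * 500);
console.log(withinFreeQuota(recent, now)); // false
```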

@@ -26,54 +24,15 @@ qwen

Use this if you want to use OpenAI models or any provider that exposes an OpenAI-compatible API (e.g. OpenAI, Azure OpenAI, OpenRouter, ModelScope, Alibaba Cloud Bailian, or a self-hosted compatible endpoint).

### Recommended: Coding Plan (subscription-based) 🚀
### Quick start (interactive, recommended for local use)

Use this if you want predictable costs with higher usage quotas for the qwen3-coder-plus model.
When you choose the OpenAI-compatible option in the CLI, it will prompt you for:

> [!IMPORTANT]
>
> Coding Plan is only available for users in China mainland (Beijing region).
- **API key**
- **Base URL** (default: `https://api.openai.com/v1`)
- **Model** (default: `gpt-4o`)

- **How it works**: subscribe to the Coding Plan with a fixed monthly fee, then configure Qwen Code to use the dedicated endpoint and your subscription API key.
- **Requirements**: an active Coding Plan subscription from [Alibaba Cloud Bailian](https://bailian.console.aliyun.com/cn-beijing/?tab=globalset#/efm/coding_plan).
- **Benefits**: higher usage quotas, predictable monthly costs, access to latest qwen3-coder-plus model.
- **Cost & quota**: varies by plan (see table below).

#### Coding Plan Pricing & Quotas

| Feature | Lite Basic Plan | Pro Advanced Plan |
| :------------------ | :-------------------- | :-------------------- |
| **Price** | ¥40/month | ¥200/month |
| **5-Hour Limit** | Up to 1,200 requests | Up to 6,000 requests |
| **Weekly Limit** | Up to 9,000 requests | Up to 45,000 requests |
| **Monthly Limit** | Up to 18,000 requests | Up to 90,000 requests |
| **Supported Model** | qwen3-coder-plus | qwen3-coder-plus |

#### Quick Setup for Coding Plan

When you select the OpenAI-compatible option in the CLI, enter these values:

- **API key**: `sk-sp-xxxxx`
- **Base URL**: `https://coding.dashscope.aliyuncs.com/v1`
- **Model**: `qwen3-coder-plus`

> **Note**: Coding Plan API keys have the format `sk-sp-xxxxx`, which is different from standard Alibaba Cloud API keys.

#### Configure via Environment Variables

Set these environment variables to use Coding Plan:

```bash
export OPENAI_API_KEY="your-coding-plan-api-key" # Format: sk-sp-xxxxx
export OPENAI_BASE_URL="https://coding.dashscope.aliyuncs.com/v1"
export OPENAI_MODEL="qwen3-coder-plus"
```

For more details about Coding Plan, including subscription options and troubleshooting, see the [full Coding Plan documentation](https://bailian.console.aliyun.com/cn-beijing/?tab=doc#/doc/?type=model&url=3005961).

### Other OpenAI-compatible Providers

If you are using other providers (OpenAI, Azure, local LLMs, etc.), use the following configuration methods.
> **Note:** the CLI may display the key in plain text for verification. Make sure your terminal is not being recorded or shared.

### Configure via command-line arguments
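The environment-variable route above can be sketched as a small resolver. This is a hypothetical helper, not Qwen Code's configuration loader. The names `resolveOpenAIConfig` and `looksLikeCodingPlanKey` are illustrative. Falling back to the interactive prompt's defaults (`https://api.openai.com/v1`, `gpt-4o`) when `OPENAI_BASE_URL` or `OPENAI_MODEL` is unset is also an assumption. The environment is passed in as a plain object so the sketch does not depend on Node typings.

```typescript
// Hypothetical resolver for OpenAI-compatible settings; not Qwen Code's loader.
interface OpenAICompatibleConfig {
  apiKey: string;
  baseUrl: string;
  model: string;
}

// Defaults borrowed from the interactive prompt; applying them here is an assumption.
const DEFAULT_BASE_URL = "https://api.openai.com/v1";
const DEFAULT_MODEL = "gpt-4o";

function resolveOpenAIConfig(
  env: Record<string, string | undefined>,
): OpenAICompatibleConfig {
  const apiKey = env["OPENAI_API_KEY"]?.trim();
  if (!apiKey) {
    throw new Error("OPENAI_API_KEY is not set");
  }
  return {
    apiKey,
    baseUrl: env["OPENAI_BASE_URL"]?.trim() || DEFAULT_BASE_URL,
    model: env["OPENAI_MODEL"]?.trim() || DEFAULT_MODEL,
  };
}

// Coding Plan keys are documented as `sk-sp-xxxxx`; this prefix check is only a heuristic.
function looksLikeCodingPlanKey(apiKey: string): boolean {
  return apiKey.startsWith("sk-sp-");
}

const codingPlan = resolveOpenAIConfig({
  OPENAI_API_KEY: "sk-sp-xxxxx",
  OPENAI_BASE_URL: "https://coding.dashscope.aliyuncs.com/v1",
  OPENAI_MODEL: "qwen3-coder-plus",
});
console.log(codingPlan.model, looksLikeCodingPlanKey(codingPlan.apiKey)); // qwen3-coder-plus true
```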

@@ -241,6 +241,7 @@ Per-field precedence for `generationConfig`:


| ------------------------------------------------- | -------------------------- | --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | ----------- |

| `context.fileName` | string or array of strings | The name of the context file(s). | `undefined` |
| `context.importFormat` | string | The format to use when importing memory. | `undefined` |
| `context.discoveryMaxDirs` | number | Maximum number of directories to search for memory. | `200` |
| `context.includeDirectories` | array | Additional directories to include in the workspace context. Specifies an array of additional absolute or relative paths to include in the workspace context. Missing directories will be skipped with a warning by default. Paths can use `~` to refer to the user's home directory. This setting can be combined with the `--include-directories` command-line flag. | `[]` |
| `context.loadFromIncludeDirectories` | boolean | Controls the behavior of the `/memory refresh` command. If set to `true`, `QWEN.md` files should be loaded from all directories that are added. If set to `false`, `QWEN.md` should only be loaded from the current directory. | `false` |
| `context.fileFiltering.respectGitIgnore` | boolean | Respect .gitignore files when searching. | `true` |
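The `context.includeDirectories` row above packs in several path rules: absolute or relative entries, `~` for the home directory, and skip-with-warning for missing directories. The following is a minimal sketch of those rules. It assumes POSIX paths and assumes that relative entries resolve against the workspace root, which the table does not specify. `resolveIncludeDirectories` and its injected `exists`/`warn` callbacks are hypothetical.

```typescript
// Hypothetical normalization of `context.includeDirectories` entries.
// Assumptions: POSIX paths; relative entries resolve against `workspaceRoot`.

// Collapse ".", "..", and repeated slashes in an absolute POSIX path.
function normalizePosix(absPath: string): string {
  const segments: string[] = [];
  for (const seg of absPath.split("/")) {
    if (seg === "" || seg === ".") continue;
    if (seg === "..") segments.pop();
    else segments.push(seg);
  }
  return "/" + segments.join("/");
}

function resolveIncludeDirectory(entry: string, homeDir: string, workspaceRoot: string): string {
  const p = entry.trim();
  // `~` and `~/...` refer to the user's home directory.
  if (p === "~" || p.startsWith("~/")) return normalizePosix(homeDir + p.slice(1));
  if (p.startsWith("/")) return normalizePosix(p);
  return normalizePosix(`${workspaceRoot}/${p}`);
}

// Missing directories are skipped with a warning, as the settings table describes.
function resolveIncludeDirectories(
  entries: string[],
  homeDir: string,
  workspaceRoot: string,
  exists: (dir: string) => boolean,
  warn: (message: string) => void,
): string[] {
  const resolved: string[] = [];
  for (const entry of entries) {
    const dir = resolveIncludeDirectory(entry, homeDir, workspaceRoot);
    if (exists(dir)) resolved.push(dir);
    else warn(`Skipping missing include directory: ${dir}`);
  }
  return resolved;
}

const warnings: string[] = [];
const dirs = resolveIncludeDirectories(
  ["~/notes", "../shared", "/opt/missing"],
  "/home/ana",
  "/work/app",
  (dir) => dir !== "/opt/missing",
  (msg) => warnings.push(msg),
);
console.log(dirs.join(" ")); // /home/ana/notes /work/shared
```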

@@ -310,12 +311,6 @@ If you are experiencing performance issues with file searching (e.g., with `@` c

>
> **Note about advanced.tavilyApiKey:** This is a legacy configuration format. For Qwen OAuth users, DashScope provider is automatically available without any configuration. For other authentication types, configure Tavily or Google providers using the new `webSearch` configuration format.

#### experimental

| Setting | Type | Description | Default |
| --------------------- | ------- | -------------------------------- | ------- |
| `experimental.skills` | boolean | Enable experimental Agent Skills | `false` |

#### mcpServers

Configures connections to one or more Model-Context Protocol (MCP) servers for discovering and using custom tools. Qwen Code attempts to connect to each configured MCP server to discover available tools. If multiple MCP servers expose a tool with the same name, the tool names will be prefixed with the server alias you defined in the configuration (e.g., `serverAlias__actualToolName`) to avoid conflicts. Note that the system might strip certain schema properties from MCP tool definitions for compatibility. At least one of `command`, `url`, or `httpUrl` must be provided. If multiple are specified, the order of precedence is `httpUrl`, then `url`, then `command`.
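The precedence and naming rules in the `mcpServers` paragraph above can be sketched as two small functions. Both are hypothetical (`pickTransport`, `qualifyToolNames`). The paragraph does not say whether the first server to register a name keeps it unprefixed, so this sketch prefixes every colliding copy.

```typescript
// Hypothetical subset of an `mcpServers` entry: only the transport fields.
interface McpServerEntry {
  command?: string;
  url?: string;
  httpUrl?: string;
}

type TransportField = "httpUrl" | "url" | "command";

// Documented precedence: httpUrl, then url, then command.
function pickTransport(
  alias: string,
  server: McpServerEntry,
): { field: TransportField; value: string } {
  if (server.httpUrl) return { field: "httpUrl", value: server.httpUrl };
  if (server.url) return { field: "url", value: server.url };
  if (server.command) return { field: "command", value: server.command };
  throw new Error(`MCP server "${alias}" needs one of command, url, or httpUrl`);
}

// Prefix a tool name with `serverAlias__` when more than one server exposes it.
// Assumption: every colliding copy is prefixed, including the first one seen.
function qualifyToolNames(toolsByServer: Record<string, string[]>): string[] {
  const counts = new Map<string, number>();
  for (const alias of Object.keys(toolsByServer)) {
    for (const tool of toolsByServer[alias]) {
      counts.set(tool, (counts.get(tool) ?? 0) + 1);
    }
  }
  const qualified: string[] = [];
  for (const alias of Object.keys(toolsByServer)) {
    for (const tool of toolsByServer[alias]) {
      qualified.push((counts.get(tool) ?? 0) > 1 ? `${alias}__${tool}` : tool);
    }
  }
  return qualified;
}

console.log(
  qualifyToolNames({ github: ["search", "create_issue"], docs: ["search"] }).join(", "),
); // github__search, create_issue, docs__search
```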

@@ -534,13 +529,16 @@ Here's a conceptual example of what a context file at the root of a TypeScript p

This example demonstrates how you can provide general project context, specific coding conventions, and even notes about particular files or components. The more relevant and precise your context files are, the better the AI can assist you. Project-specific context files are highly encouraged to establish conventions and context.

- **Hierarchical Loading and Precedence:** The CLI implements a hierarchical memory system by loading context files (e.g., `QWEN.md`) from several locations. Content from files lower in this list (more specific) typically overrides or supplements content from files higher up (more general). The exact concatenation order and final context can be inspected using the `/memory show` command. The typical loading order is:
- **Hierarchical Loading and Precedence:** The CLI implements a sophisticated hierarchical memory system by loading context files (e.g., `QWEN.md`) from several locations. Content from files lower in this list (more specific) typically overrides or supplements content from files higher up (more general). The exact concatenation order and final context can be inspected using the `/memory show` command. The typical loading order is:
1. **Global Context File:**
- Location: `~/.qwen/<configured-context-filename>` (e.g., `~/.qwen/QWEN.md` in your user home directory).
- Scope: Provides default instructions for all your projects.
2. **Project Root & Ancestors Context Files:**
- Location: The CLI searches for the configured context file in the current working directory and then in each parent directory up to either the project root (identified by a `.git` folder) or your home directory.
- Scope: Provides context relevant to the entire project or a significant portion of it.
3. **Sub-directory Context Files (Contextual/Local):**
- Location: The CLI also scans for the configured context file in subdirectories _below_ the current working directory (respecting common ignore patterns like `node_modules`, `.git`, etc.). The breadth of this search is limited to 200 directories by default, but can be configured with the `context.discoveryMaxDirs` setting in your `settings.json` file.
- Scope: Allows for highly specific instructions relevant to a particular component, module, or subsection of your project.
- **Concatenation & UI Indication:** The contents of all found context files are concatenated (with separators indicating their origin and path) and provided as part of the system prompt. The CLI footer displays the count of loaded context files, giving you a quick visual cue about the active instructional context.
- **Importing Content:** You can modularize your context files by importing other Markdown files using the `@path/to/file.md` syntax. For more details, see the [Memory Import Processor documentation](../configuration/memory).
- **Commands for Memory Management:**
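The three-tier loading order described in the hunk above can be sketched as follows. Filesystem access goes through a hypothetical `DiscoveryIo` interface so the sketch stays pure. Two details are assumptions rather than documented behavior: the subdirectory scan is breadth-first, and only directories below the working directory count toward `maxDirs`.

```typescript
// Hypothetical I/O hooks; a real implementation would hit the filesystem.
interface DiscoveryIo {
  hasContextFile(dir: string): boolean;
  isProjectRoot(dir: string): boolean; // e.g. the directory contains `.git`
  listSubdirs(dir: string): string[]; // assumed to skip node_modules, .git, etc.
}

// Parent of an absolute POSIX path, or null at the filesystem root.
function parentOf(dir: string): string | null {
  if (dir === "/") return null;
  const idx = dir.lastIndexOf("/");
  return idx <= 0 ? "/" : dir.slice(0, idx);
}

// Returns directories whose context file would be loaded, most general first.
function discoverContextDirs(cwd: string, home: string, io: DiscoveryIo, maxDirs = 200): string[] {
  const ordered: string[] = [];

  // 1. Global context file under ~/.qwen.
  const globalDir = `${home}/.qwen`;
  if (io.hasContextFile(globalDir)) ordered.push(globalDir);

  // 2. cwd and its ancestors, stopping at the project root or the home directory.
  const ancestors: string[] = [];
  let cursor: string | null = cwd;
  while (cursor !== null) {
    ancestors.push(cursor);
    if (io.isProjectRoot(cursor) || cursor === home) break;
    cursor = parentOf(cursor);
  }
  // Reverse so the most general (outermost) directory comes first.
  for (const dir of ancestors.reverse()) {
    if (io.hasContextFile(dir)) ordered.push(dir);
  }

  // 3. Subdirectories below cwd, breadth-first (assumed), capped at maxDirs visits.
  const queue = io.listSubdirs(cwd).slice();
  let visited = 0;
  while (queue.length > 0 && visited < maxDirs) {
    const dir = queue.shift()!;
    visited++;
    if (io.hasContextFile(dir)) ordered.push(dir);
    queue.push(...io.listSubdirs(dir));
  }
  return ordered;
}

const withContext = new Set(["/home/ana/.qwen", "/home/ana/proj", "/home/ana/proj/src", "/home/ana/proj/src/ui"]);
const io: DiscoveryIo = {
  hasContextFile: (dir) => withContext.has(dir),
  isProjectRoot: (dir) => dir === "/home/ana/proj",
  listSubdirs: (dir) => (dir === "/home/ana/proj/src" ? ["/home/ana/proj/src/ui"] : []),
};
console.log(discoverContextDirs("/home/ana/proj/src", "/home/ana", io).join(" "));
// /home/ana/.qwen /home/ana/proj /home/ana/proj/src /home/ana/proj/src/ui
```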

@@ -11,29 +11,12 @@ This guide shows you how to create, use, and manage Agent Skills in **Qwen Code*

## Prerequisites

- Qwen Code (recent version)

## How to enable

### Via CLI flag
- Run with the experimental flag enabled:

```bash
qwen --experimental-skills
```

### Via settings.json

Add to your `~/.qwen/settings.json` or project's `.qwen/settings.json`:

```json
{
  "tools": {
    "experimental": {
      "skills": true
    }
  }
}
```

- Basic familiarity with Qwen Code ([Quickstart](../quickstart.md))

## What are Agent Skills?

@@ -311,9 +311,9 @@ function setupAcpTest(

}
});

it('returns modes on initialize and allows setting mode and model', async () => {
it('returns modes on initialize and allows setting approval mode', async () => {
const rig = new TestRig();
rig.setup('acp mode and model');
rig.setup('acp approval mode');

const { sendRequest, cleanup, stderr } = setupAcpTest(rig);

@@ -366,14 +366,8 @@ function setupAcpTest(

const newSession = (await sendRequest('session/new', {
cwd: rig.testDir!,
mcpServers: [],
})) as {
sessionId: string;
models: {
availableModels: Array<{ modelId: string }>;
};
};
})) as { sessionId: string };
expect(newSession.sessionId).toBeTruthy();
expect(newSession.models.availableModels.length).toBeGreaterThan(0);

// Test 4: Set approval mode to 'yolo'
const setModeResult = (await sendRequest('session/set_mode', {

@@ -398,15 +392,6 @@ function setupAcpTest(

})) as { modeId: string };
expect(setModeResult3).toBeDefined();
expect(setModeResult3.modeId).toBe('default');

// Test 7: Set model using first available model
const firstModel = newSession.models.availableModels[0];
const setModelResult = (await sendRequest('session/set_model', {
sessionId: newSession.sessionId,
modelId: firstModel.modelId,
})) as { modelId: string };
expect(setModelResult).toBeDefined();
expect(setModelResult.modelId).toBeTruthy();
} catch (e) {
if (stderr.length) {
console.error('Agent stderr:', stderr.join(''));
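The ACP test above drives the agent with `sendRequest('session/set_model', { sessionId, modelId })`. ACP runs over JSON-RPC 2.0 (the error codes elsewhere in this diff are the standard JSON-RPC ones), so such a call can be framed roughly as shown here. `makeRequest` and its id counter are hypothetical stand-ins, not the test rig's helper, and the session id is a placeholder.

```typescript
// Hypothetical JSON-RPC 2.0 request framing; not the test rig's `sendRequest`.
interface JsonRpcRequest<P> {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params: P;
}

let nextRequestId = 1;

function makeRequest<P>(method: string, params: P): JsonRpcRequest<P> {
  // Each request gets a fresh id so its response can be matched to it.
  return { jsonrpc: "2.0", id: nextRequestId++, method, params };
}

// Method name and param names mirror the `session/set_model` call in the test.
const setModelRequest = makeRequest("session/set_model", {
  sessionId: "session-1",
  modelId: "qwen3-coder-plus",
});

console.log(JSON.stringify(setModelRequest));
// {"jsonrpc":"2.0","id":1,"method":"session/set_model","params":{"sessionId":"session-1","modelId":"qwen3-coder-plus"}}
```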

package-lock.json (generated): 12 lines changed

@@ -1,12 +1,12 @@

{
"name": "@qwen-code/qwen-code",
"version": "0.7.1",
"version": "0.7.0",
"lockfileVersion": 3,
"requires": true,
"packages": {
"": {
"name": "@qwen-code/qwen-code",
"version": "0.7.1",
"version": "0.7.0",
"workspaces": [
"packages/*"
],

@@ -17310,7 +17310,7 @@

},
"packages/cli": {
"name": "@qwen-code/qwen-code",
"version": "0.7.1",
"version": "0.7.0",
"dependencies": {
"@google/genai": "1.30.0",
"@iarna/toml": "^2.2.5",

@@ -17947,7 +17947,7 @@

},
"packages/core": {
"name": "@qwen-code/qwen-code-core",
"version": "0.7.1",
"version": "0.7.0",
"hasInstallScript": true,
"dependencies": {
"@anthropic-ai/sdk": "^0.36.1",

@@ -21408,7 +21408,7 @@

},
"packages/test-utils": {
"name": "@qwen-code/qwen-code-test-utils",
"version": "0.7.1",
"version": "0.7.0",
"dev": true,
"license": "Apache-2.0",
"devDependencies": {

@@ -21420,7 +21420,7 @@

},
"packages/vscode-ide-companion": {
"name": "qwen-code-vscode-ide-companion",
"version": "0.7.1",
"version": "0.7.0",
"license": "LICENSE",
"dependencies": {
"@modelcontextprotocol/sdk": "^1.25.1",

@@ -1,6 +1,6 @@

{
"name": "@qwen-code/qwen-code",
"version": "0.7.1",
"version": "0.7.0",
"engines": {
"node": ">=20.0.0"
},

@@ -13,7 +13,7 @@

"url": "git+https://github.com/QwenLM/qwen-code.git"
},
"config": {
"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.1"
"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.0"
},
"scripts": {
"start": "cross-env node scripts/start.js",

@@ -1,6 +1,6 @@

{
"name": "@qwen-code/qwen-code",
"version": "0.7.1",
"version": "0.7.0",
"description": "Qwen Code",
"repository": {
"type": "git",

@@ -33,7 +33,7 @@

"dist"
],
"config": {
"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.1"
"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.0"
},
"dependencies": {
"@google/genai": "1.30.0",

@@ -8,7 +8,6 @@

import { z } from 'zod';
import * as schema from './schema.js';
import { ACP_ERROR_CODES } from './errorCodes.js';
export * from './schema.js';

import type { WritableStream, ReadableStream } from 'node:stream/web';

@@ -71,13 +70,6 @@ export class AgentSideConnection implements Client {

const validatedParams = schema.setModeRequestSchema.parse(params);
return agent.setMode(validatedParams);
}
case schema.AGENT_METHODS.session_set_model: {
if (!agent.setModel) {
throw RequestError.methodNotFound();
}
const validatedParams = schema.setModelRequestSchema.parse(params);
return agent.setModel(validatedParams);
}
default:
throw RequestError.methodNotFound(method);
}

@@ -350,51 +342,27 @@ export class RequestError extends Error {

}

static parseError(details?: string): RequestError {
return new RequestError(
ACP_ERROR_CODES.PARSE_ERROR,
'Parse error',
details,
);
return new RequestError(-32700, 'Parse error', details);
}

static invalidRequest(details?: string): RequestError {
return new RequestError(
ACP_ERROR_CODES.INVALID_REQUEST,
'Invalid request',
details,
);
return new RequestError(-32600, 'Invalid request', details);
}

static methodNotFound(details?: string): RequestError {
return new RequestError(
ACP_ERROR_CODES.METHOD_NOT_FOUND,
'Method not found',
details,
);
return new RequestError(-32601, 'Method not found', details);
}

static invalidParams(details?: string): RequestError {
return new RequestError(
ACP_ERROR_CODES.INVALID_PARAMS,
'Invalid params',
details,
);
return new RequestError(-32602, 'Invalid params', details);
}

static internalError(details?: string): RequestError {
return new RequestError(
ACP_ERROR_CODES.INTERNAL_ERROR,
'Internal error',
details,
);
return new RequestError(-32603, 'Internal error', details);
}

static authRequired(details?: string): RequestError {
return new RequestError(
ACP_ERROR_CODES.AUTH_REQUIRED,
'Authentication required',
details,
);
return new RequestError(-32000, 'Authentication required', details);
}

toResult<T>(): Result<T> {

@@ -440,5 +408,4 @@ export interface Agent {

prompt(params: schema.PromptRequest): Promise<schema.PromptResponse>;
cancel(params: schema.CancelNotification): Promise<void>;
setMode?(params: schema.SetModeRequest): Promise<schema.SetModeResponse>;
setModel?(params: schema.SetModelRequest): Promise<schema.SetModelResponse>;
}

@@ -165,11 +165,30 @@ class GeminiAgent {

this.setupFileSystem(config);

const session = await this.createAndStoreSession(config);
const availableModels = this.buildAvailableModels(config);
const configuredModel = (
config.getModel() ||
this.config.getModel() ||
''
).trim();
const modelId = configuredModel || 'default';
const modelName = configuredModel || modelId;

return {
sessionId: session.getId(),
models: availableModels,
models: {
currentModelId: modelId,
availableModels: [
{
modelId,
name: modelName,
description: null,
_meta: {
contextLimit: tokenLimit(modelId),
},
},
],
_meta: null,
},
};
}

@@ -286,29 +305,15 @@ class GeminiAgent {

async setMode(params: acp.SetModeRequest): Promise<acp.SetModeResponse> {
const session = this.sessions.get(params.sessionId);
if (!session) {
throw acp.RequestError.invalidParams(
`Session not found for id: ${params.sessionId}`,
);
throw new Error(`Session not found: ${params.sessionId}`);
}
return session.setMode(params);
}

async setModel(params: acp.SetModelRequest): Promise<acp.SetModelResponse> {
const session = this.sessions.get(params.sessionId);
if (!session) {
throw acp.RequestError.invalidParams(
`Session not found for id: ${params.sessionId}`,
);
}
return session.setModel(params);
}

private async ensureAuthenticated(config: Config): Promise<void> {
const selectedType = this.settings.merged.security?.auth?.selectedType;
if (!selectedType) {
throw acp.RequestError.authRequired(
'Use Qwen Code CLI to authenticate first.',
);
throw acp.RequestError.authRequired('No Selected Type');
}

try {

@@ -377,43 +382,4 @@ class GeminiAgent {

return session;
}

private buildAvailableModels(
config: Config,
): acp.NewSessionResponse['models'] {
const currentModelId = (
config.getModel() ||
this.config.getModel() ||
''
).trim();
const availableModels = config.getAvailableModels();

const mappedAvailableModels = availableModels.map((model) => ({
modelId: model.id,
name: model.label,
description: model.description ?? null,
_meta: {
contextLimit: tokenLimit(model.id),
},
}));

if (
currentModelId &&
!mappedAvailableModels.some((model) => model.modelId === currentModelId)
) {
mappedAvailableModels.unshift({
modelId: currentModelId,
name: currentModelId,
description: null,
_meta: {
contextLimit: tokenLimit(currentModelId),
},
});
}

return {
currentModelId,
availableModels: mappedAvailableModels,
};
}
}
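The `buildAvailableModels` method shown above reduces to a pure function. It maps the configured models to ACP model entries and, if the trimmed current model is not among them, prepends it. The sketch below restates that logic with hypothetical types. An injected `tokenLimit` stub stands in for the real `tokenLimit` and `config.getAvailableModels()`, which are not shown in this diff, and the limit value in the example is a placeholder.

```typescript
// Hypothetical types mirroring the fields used by buildAvailableModels above.
interface ConfiguredModel {
  id: string;
  label: string;
  description?: string;
}

interface AcpModelInfo {
  modelId: string;
  name: string;
  description: string | null;
  _meta: { contextLimit: number };
}

function buildAvailableModels(
  currentModel: string,
  configured: ConfiguredModel[],
  tokenLimit: (modelId: string) => number, // injected stand-in for the real tokenLimit()
): { currentModelId: string; availableModels: AcpModelInfo[] } {
  const currentModelId = currentModel.trim();
  const availableModels: AcpModelInfo[] = configured.map((model) => ({
    modelId: model.id,
    name: model.label,
    description: model.description ?? null,
    _meta: { contextLimit: tokenLimit(model.id) },
  }));

  // If the current model is not in the configured list, prepend a bare entry for it.
  if (currentModelId && !availableModels.some((m) => m.modelId === currentModelId)) {
    availableModels.unshift({
      modelId: currentModelId,
      name: currentModelId,
      description: null,
      _meta: { contextLimit: tokenLimit(currentModelId) },
    });
  }
  return { currentModelId, availableModels };
}

const fakeLimit = () => 131072; // placeholder value, not a real model limit
const state = buildAvailableModels(
  "  my-local-model ",
  [{ id: "qwen3-coder-plus", label: "Qwen3 Coder Plus" }],
  fakeLimit,
);
console.log(state.availableModels.map((m) => m.modelId).join(", ")); // my-local-model, qwen3-coder-plus
```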

@@ -1,25 +0,0 @@

/**
* @license
* Copyright 2025 Google LLC
* SPDX-License-Identifier: Apache-2.0
*/

export const ACP_ERROR_CODES = {
// Parse error: invalid JSON received by server.
PARSE_ERROR: -32700,
// Invalid request: JSON is not a valid Request object.
INVALID_REQUEST: -32600,
// Method not found: method does not exist or is unavailable.
METHOD_NOT_FOUND: -32601,
// Invalid params: invalid method parameter(s).
INVALID_PARAMS: -32602,
// Internal error: implementation-defined server error.
INTERNAL_ERROR: -32603,
// Authentication required: must authenticate before operation.
AUTH_REQUIRED: -32000,
// Resource not found: e.g. missing file.
RESOURCE_NOT_FOUND: -32002,
} as const;

export type AcpErrorCode =
(typeof ACP_ERROR_CODES)[keyof typeof ACP_ERROR_CODES];
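The `ACP_ERROR_CODES` table above follows JSON-RPC 2.0. -32700 and -32600 to -32603 are the specification's predefined codes, and -32000 to -32099 is the range the specification reserves for implementation-defined server errors, where `AUTH_REQUIRED` and `RESOURCE_NOT_FOUND` sit. Below is a hypothetical reduction of the `RequestError` factories to a serializable error object. The `data: { details }` wire shape is an assumption, because `toResult` is cut off in this diff.

```typescript
// Codes as listed in errorCodes.ts above.
const ACP_ERROR_CODES = {
  PARSE_ERROR: -32700,
  INVALID_REQUEST: -32600,
  METHOD_NOT_FOUND: -32601,
  INVALID_PARAMS: -32602,
  INTERNAL_ERROR: -32603,
  AUTH_REQUIRED: -32000,
  RESOURCE_NOT_FOUND: -32002,
} as const;

type AcpErrorCode = (typeof ACP_ERROR_CODES)[keyof typeof ACP_ERROR_CODES];

// Assumed wire shape: a JSON-RPC error object with optional `data.details`.
interface JsonRpcErrorObject {
  code: AcpErrorCode;
  message: string;
  data?: { details: string };
}

// Hypothetical reduction of RequestError's static factories (plain class, not an Error subclass).
class AcpErrorSketch {
  readonly code: AcpErrorCode;
  readonly message: string;
  readonly details?: string;

  constructor(code: AcpErrorCode, message: string, details?: string) {
    this.code = code;
    this.message = message;
    this.details = details;
  }

  static methodNotFound(details?: string): AcpErrorSketch {
    return new AcpErrorSketch(ACP_ERROR_CODES.METHOD_NOT_FOUND, "Method not found", details);
  }

  static authRequired(details?: string): AcpErrorSketch {
    return new AcpErrorSketch(ACP_ERROR_CODES.AUTH_REQUIRED, "Authentication required", details);
  }

  toErrorObject(): JsonRpcErrorObject {
    const base = { code: this.code, message: this.message };
    // Only attach `data` when there is something to report.
    return this.details === undefined ? base : { ...base, data: { details: this.details } };
  }
}

// JSON-RPC 2.0 reserves -32099..-32000 for implementation-defined server errors.
function isServerDefinedError(code: number): boolean {
  return code >= -32099 && code <= -32000;
}

console.log(JSON.stringify(AcpErrorSketch.authRequired("Use Qwen Code CLI to authenticate first.").toErrorObject()));
// {"code":-32000,"message":"Authentication required","data":{"details":"Use Qwen Code CLI to authenticate first."}}
```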

@@ -15,7 +15,6 @@ export const AGENT_METHODS = {

session_prompt: 'session/prompt',
session_list: 'session/list',
session_set_mode: 'session/set_mode',
session_set_model: 'session/set_model',
};

export const CLIENT_METHODS = {

@@ -267,18 +266,6 @@ export const modelInfoSchema = z.object({

name: z.string(),
});

export const setModelRequestSchema = z.object({
sessionId: z.string(),
modelId: z.string(),
});

export const setModelResponseSchema = z.object({
modelId: z.string(),
});

export type SetModelRequest = z.infer<typeof setModelRequestSchema>;
export type SetModelResponse = z.infer<typeof setModelResponseSchema>;

export const sessionModelStateSchema = z.object({
_meta: acpMetaSchema,
availableModels: z.array(modelInfoSchema),

@@ -605,7 +592,6 @@ export const agentResponseSchema = z.union([

promptResponseSchema,
listSessionsResponseSchema,
setModeResponseSchema,
setModelResponseSchema,
]);

export const requestPermissionRequestSchema = z.object({

@@ -638,7 +624,6 @@ export const agentRequestSchema = z.union([

promptRequestSchema,
listSessionsRequestSchema,
setModeRequestSchema,
setModelRequestSchema,
]);

export const agentNotificationSchema = sessionNotificationSchema;

@@ -7,7 +7,6 @@

import { describe, expect, it, vi } from 'vitest';
import type { FileSystemService } from '@qwen-code/qwen-code-core';
import { AcpFileSystemService } from './filesystem.js';
import { ACP_ERROR_CODES } from '../errorCodes.js';

const createFallback = (): FileSystemService => ({
readTextFile: vi.fn(),

@@ -17,13 +16,11 @@ const createFallback = (): FileSystemService => ({

describe('AcpFileSystemService', () => {
describe('readTextFile ENOENT handling', () => {
it('converts RESOURCE_NOT_FOUND error to ENOENT', async () => {
const resourceNotFoundError = {
code: ACP_ERROR_CODES.RESOURCE_NOT_FOUND,
message: 'File not found',
};
it('parses path from ACP ENOENT message (quoted)', async () => {
const client = {
readTextFile: vi.fn().mockRejectedValue(resourceNotFoundError),
readTextFile: vi
.fn()
.mockResolvedValue({ content: 'ERROR: ENOENT: "/remote/file.txt"' }),
} as unknown as import('../acp.js').Client;

const svc = new AcpFileSystemService(

@@ -33,20 +30,15 @@ describe('AcpFileSystemService', () => {

createFallback(),
);

await expect(svc.readTextFile('/some/file.txt')).rejects.toMatchObject({
await expect(svc.readTextFile('/local/file.txt')).rejects.toMatchObject({
code: 'ENOENT',
errno: -2,
path: '/some/file.txt',
path: '/remote/file.txt',
});
});

it('re-throws other errors unchanged', async () => {
const otherError = {
code: ACP_ERROR_CODES.INTERNAL_ERROR,
message: 'Internal error',
};
it('falls back to requested path when none provided', async () => {
const client = {
readTextFile: vi.fn().mockRejectedValue(otherError),
readTextFile: vi.fn().mockResolvedValue({ content: 'ERROR: ENOENT:' }),
} as unknown as import('../acp.js').Client;

const svc = new AcpFileSystemService(

@@ -56,34 +48,12 @@ describe('AcpFileSystemService', () => {

createFallback(),
);

await expect(svc.readTextFile('/some/file.txt')).rejects.toMatchObject({
code: ACP_ERROR_CODES.INTERNAL_ERROR,
message: 'Internal error',
await expect(
svc.readTextFile('/fallback/path.txt'),
).rejects.toMatchObject({
code: 'ENOENT',
path: '/fallback/path.txt',
});
});

it('uses fallback when readTextFile capability is disabled', async () => {
const client = {
readTextFile: vi.fn(),
} as unknown as import('../acp.js').Client;

const fallback = createFallback();
(fallback.readTextFile as ReturnType<typeof vi.fn>).mockResolvedValue(
'fallback content',
);

const svc = new AcpFileSystemService(
client,
'session-3',
{ readTextFile: false, writeTextFile: true },
fallback,
);

const result = await svc.readTextFile('/some/file.txt');

expect(result).toBe('fallback content');
expect(fallback.readTextFile).toHaveBeenCalledWith('/some/file.txt');
expect(client.readTextFile).not.toHaveBeenCalled();
});
});
});

@@ -6,7 +6,6 @@

import type { FileSystemService } from '@qwen-code/qwen-code-core';
import type * as acp from '../acp.js';
import { ACP_ERROR_CODES } from '../errorCodes.js';

/**
* ACP client-based implementation of FileSystemService

@@ -24,31 +23,25 @@ export class AcpFileSystemService implements FileSystemService {

return this.fallback.readTextFile(filePath);
}

let response: { content: string };
try {
response = await this.client.readTextFile({
path: filePath,
sessionId: this.sessionId,
line: null,
limit: null,
});
} catch (error) {
const errorCode =
typeof error === 'object' && error !== null && 'code' in error
? (error as { code?: unknown }).code
: undefined;
const response = await this.client.readTextFile({
path: filePath,
sessionId: this.sessionId,
line: null,
limit: null,
});

if (errorCode === ACP_ERROR_CODES.RESOURCE_NOT_FOUND) {
const err = new Error(
`File not found: ${filePath}`,
) as NodeJS.ErrnoException;
err.code = 'ENOENT';
err.errno = -2;
err.path = filePath;
throw err;
}

throw error;
if (response.content.startsWith('ERROR: ENOENT:')) {
// Treat ACP error strings as structured ENOENT errors without
// assuming a specific platform format.
const match = /^ERROR:\s*ENOENT:\s*(?<path>.*)$/i.exec(response.content);
const err = new Error(response.content) as NodeJS.ErrnoException;
err.code = 'ENOENT';
err.errno = -2;
const rawPath = match?.groups?.['path']?.trim();
err['path'] = rawPath
? rawPath.replace(/^['"]|['"]$/g, '') || filePath
: filePath;
throw err;
}

return response.content;
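The `readTextFile` hunk above interleaves two ways of surfacing a missing file. One maps a `RESOURCE_NOT_FOUND` (-32002) rejection to ENOENT. The other parses an `ERROR: ENOENT: <path>` string out of a successful response. Both are restated below as standalone functions. `ErrnoLikeError`, `fromRejection`, and `fromContent` are hypothetical names, and the interface stands in for `NodeJS.ErrnoException` so the sketch needs no Node typings. The behavior matches the cases the accompanying tests check.

```typescript
// Stand-in for NodeJS.ErrnoException so the sketch needs no Node typings.
interface ErrnoLikeError extends Error {
  code?: string;
  errno?: number;
  path?: string;
}

const RESOURCE_NOT_FOUND = -32002; // ACP_ERROR_CODES.RESOURCE_NOT_FOUND in the diff

function enoent(message: string, path: string): ErrnoLikeError {
  const err: ErrnoLikeError = new Error(message);
  err.code = "ENOENT";
  err.errno = -2;
  err.path = path;
  return err;
}

// Path A: the client rejects with an ACP error object carrying a numeric code.
// Anything other than RESOURCE_NOT_FOUND is returned unchanged for re-throwing.
function fromRejection(error: unknown, filePath: string): unknown {
  const code =
    typeof error === "object" && error !== null && "code" in error
      ? (error as { code?: unknown }).code
      : undefined;
  return code === RESOURCE_NOT_FOUND ? enoent(`File not found: ${filePath}`, filePath) : error;
}

// Path B: the client resolves with content like `ERROR: ENOENT: "/some/path"`.
// Returns null when the content is not an ENOENT marker.
function fromContent(content: string, filePath: string): ErrnoLikeError | null {
  if (!content.startsWith("ERROR: ENOENT:")) return null;
  const match = /^ERROR:\s*ENOENT:\s*(.*)$/i.exec(content);
  const rawPath = match?.[1]?.trim();
  // Strip one leading and one trailing quote; fall back to the requested path.
  const path = rawPath ? rawPath.replace(/^['"]|['"]$/g, "") || filePath : filePath;
  return enoent(content, path);
}

const parsed = fromContent('ERROR: ENOENT: "/remote/file.txt"', "/local/file.txt");
console.log(parsed?.code, parsed?.path); // ENOENT /remote/file.txt
```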

@@ -1,174 +0,0 @@
/**
 * @license
 * Copyright 2025 Qwen
 * SPDX-License-Identifier: Apache-2.0
 */

import { describe, it, expect, vi, beforeEach } from 'vitest';
import { Session } from './Session.js';
import type { Config, GeminiChat } from '@qwen-code/qwen-code-core';
import { ApprovalMode } from '@qwen-code/qwen-code-core';
import type * as acp from '../acp.js';
import type { LoadedSettings } from '../../config/settings.js';
import * as nonInteractiveCliCommands from '../../nonInteractiveCliCommands.js';

vi.mock('../../nonInteractiveCliCommands.js', () => ({
  getAvailableCommands: vi.fn(),
  handleSlashCommand: vi.fn(),
}));

describe('Session', () => {
  let mockChat: GeminiChat;
  let mockConfig: Config;
  let mockClient: acp.Client;
  let mockSettings: LoadedSettings;
  let session: Session;
  let currentModel: string;
  let setModelSpy: ReturnType<typeof vi.fn>;
  let getAvailableCommandsSpy: ReturnType<typeof vi.fn>;

  beforeEach(() => {
    currentModel = 'qwen3-code-plus';
    setModelSpy = vi.fn().mockImplementation(async (modelId: string) => {
      currentModel = modelId;
    });

    mockChat = {
      sendMessageStream: vi.fn(),
      addHistory: vi.fn(),
    } as unknown as GeminiChat;

    mockConfig = {
      setApprovalMode: vi.fn(),
      setModel: setModelSpy,
      getModel: vi.fn().mockImplementation(() => currentModel),
    } as unknown as Config;

    mockClient = {
      sessionUpdate: vi.fn().mockResolvedValue(undefined),
      requestPermission: vi.fn().mockResolvedValue({
        outcome: { outcome: 'selected', optionId: 'proceed_once' },
      }),
      sendCustomNotification: vi.fn().mockResolvedValue(undefined),
    } as unknown as acp.Client;

    mockSettings = {
      merged: {},
    } as LoadedSettings;

    getAvailableCommandsSpy = vi.mocked(nonInteractiveCliCommands)
      .getAvailableCommands as unknown as ReturnType<typeof vi.fn>;
    getAvailableCommandsSpy.mockResolvedValue([]);

    session = new Session(
      'test-session-id',
      mockChat,
      mockConfig,
      mockClient,
      mockSettings,
    );
  });

  describe('setMode', () => {
    it.each([
      ['plan', ApprovalMode.PLAN],
      ['default', ApprovalMode.DEFAULT],
      ['auto-edit', ApprovalMode.AUTO_EDIT],
      ['yolo', ApprovalMode.YOLO],
    ] as const)('maps %s mode', async (modeId, expected) => {
      const result = await session.setMode({
        sessionId: 'test-session-id',
        modeId,
      });

      expect(mockConfig.setApprovalMode).toHaveBeenCalledWith(expected);
      expect(result).toEqual({ modeId });
    });
  });

  describe('setModel', () => {
    it('sets model via config and returns current model', async () => {
      const result = await session.setModel({
        sessionId: 'test-session-id',
        modelId: ' qwen3-coder-plus ',
      });

      expect(mockConfig.setModel).toHaveBeenCalledWith('qwen3-coder-plus', {
        reason: 'user_request_acp',
        context: 'session/set_model',
      });
      expect(mockConfig.getModel).toHaveBeenCalled();
      expect(result).toEqual({ modelId: 'qwen3-coder-plus' });
    });

    it('rejects empty/whitespace model IDs', async () => {
      await expect(
        session.setModel({
          sessionId: 'test-session-id',
          modelId: ' ',
        }),
      ).rejects.toThrow('Invalid params');

      expect(mockConfig.setModel).not.toHaveBeenCalled();
    });

    it('propagates errors from config.setModel', async () => {
      const configError = new Error('Invalid model');
      setModelSpy.mockRejectedValueOnce(configError);

      await expect(
        session.setModel({
          sessionId: 'test-session-id',
          modelId: 'invalid-model',
        }),
      ).rejects.toThrow('Invalid model');
    });
  });

  describe('sendAvailableCommandsUpdate', () => {
    it('sends available_commands_update from getAvailableCommands()', async () => {
      getAvailableCommandsSpy.mockResolvedValueOnce([
        {
          name: 'init',
          description: 'Initialize project context',
        },
      ]);

      await session.sendAvailableCommandsUpdate();

      expect(getAvailableCommandsSpy).toHaveBeenCalledWith(
        mockConfig,
        expect.any(AbortSignal),
      );
      expect(mockClient.sessionUpdate).toHaveBeenCalledWith({
        sessionId: 'test-session-id',
        update: {
          sessionUpdate: 'available_commands_update',
          availableCommands: [
            {
              name: 'init',
              description: 'Initialize project context',
              input: null,
            },
          ],
        },
      });
    });

    it('swallows errors and does not throw', async () => {
      const consoleErrorSpy = vi
        .spyOn(console, 'error')
        .mockImplementation(() => undefined);
      getAvailableCommandsSpy.mockRejectedValueOnce(
        new Error('Command discovery failed'),
      );

      await expect(
        session.sendAvailableCommandsUpdate(),
      ).resolves.toBeUndefined();
      expect(mockClient.sessionUpdate).not.toHaveBeenCalled();
      expect(consoleErrorSpy).toHaveBeenCalled();
      consoleErrorSpy.mockRestore();
    });
  });
});

@@ -52,8 +52,6 @@ import type {
  AvailableCommandsUpdate,
  SetModeRequest,
  SetModeResponse,
  SetModelRequest,
  SetModelResponse,
  ApprovalModeValue,
  CurrentModeUpdate,
} from '../schema.js';

@@ -350,31 +348,6 @@ export class Session implements SessionContext {
    return { modeId: params.modeId };
  }

  /**
   * Sets the model for the current session.
   * Validates the model ID and switches the model via Config.
   */
  async setModel(params: SetModelRequest): Promise<SetModelResponse> {
    const modelId = params.modelId.trim();

    if (!modelId) {
      throw acp.RequestError.invalidParams('modelId cannot be empty');
    }

    // Attempt to set the model using config
    await this.config.setModel(modelId, {
      reason: 'user_request_acp',
      context: 'session/set_model',
    });

    // Get updated model info
    const currentModel = this.config.getModel();

    return {
      modelId: currentModel,
    };
  }

  /**
   * Sends a current_mode_update notification to the client.
   * Called after the agent switches modes (e.g., from exit_plan_mode tool).

@@ -1196,6 +1196,11 @@ describe('Hierarchical Memory Loading (config.ts) - Placeholder Suite', () => {
        ],
        true,
        'tree',
        {
          respectGitIgnore: false,
          respectQwenIgnore: true,
        },
        undefined, // maxDirs
      );
    });

@@ -9,6 +9,7 @@ import {
  AuthType,
  Config,
  DEFAULT_QWEN_EMBEDDING_MODEL,
  DEFAULT_MEMORY_FILE_FILTERING_OPTIONS,
  FileDiscoveryService,
  getCurrentGeminiMdFilename,
  loadServerHierarchicalMemory,

@@ -21,6 +22,7 @@ import {
  isToolEnabled,
  SessionService,
  type ResumedSessionData,
  type FileFilteringOptions,
  type MCPServerConfig,
  type ToolName,
  EditTool,

@@ -332,14 +334,7 @@ export async function parseArguments(settings: Settings): Promise<CliArgs> {
    .option('experimental-skills', {
      type: 'boolean',
      description: 'Enable experimental Skills feature',
      default: (() => {
        const legacySkills = (
          settings as Settings & {
            tools?: { experimental?: { skills?: boolean } };
          }
        ).tools?.experimental?.skills;
        return settings.experimental?.skills ?? legacySkills ?? false;
      })(),
      default: false,
    })
    .option('channel', {
      type: 'string',

@@ -648,6 +643,7 @@ export async function loadHierarchicalGeminiMemory(
  extensionContextFilePaths: string[] = [],
  folderTrust: boolean,
  memoryImportFormat: 'flat' | 'tree' = 'tree',
  fileFilteringOptions?: FileFilteringOptions,
): Promise<{ memoryContent: string; fileCount: number }> {
  // FIX: Use real, canonical paths for a reliable comparison to handle symlinks.
  const realCwd = fs.realpathSync(path.resolve(currentWorkingDirectory));

@@ -673,6 +669,8 @@ export async function loadHierarchicalGeminiMemory(
    extensionContextFilePaths,
    folderTrust,
    memoryImportFormat,
    fileFilteringOptions,
    settings.context?.discoveryMaxDirs,
  );
}

@@ -742,6 +740,11 @@ export async function loadCliConfig(

  const fileService = new FileDiscoveryService(cwd);

  const fileFiltering = {
    ...DEFAULT_MEMORY_FILE_FILTERING_OPTIONS,
    ...settings.context?.fileFiltering,
  };

  const includeDirectories = (settings.context?.includeDirectories || [])
    .map(resolvePath)
    .concat((argv.includeDirectories || []).map(resolvePath));
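
The `fileFiltering` object above layers user settings over defaults with two object spreads. A minimal sketch of that merge, assuming a two-field options shape; the `FileFilteringOptions` interface, the `DEFAULTS` values (borrowed from the test expectation earlier in this diff), and `mergeFileFiltering` are hypothetical and may not match the real `DEFAULT_MEMORY_FILE_FILTERING_OPTIONS`.

```typescript
// Hypothetical options shape and defaults, for illustration only.
interface FileFilteringOptions {
  respectGitIgnore?: boolean;
  respectQwenIgnore?: boolean;
}

const DEFAULTS: FileFilteringOptions = {
  respectGitIgnore: false,
  respectQwenIgnore: true,
};

// Later spreads win key by key. Spreading undefined is a no-op, so a missing
// settings.context.fileFiltering section simply yields the defaults.
function mergeFileFiltering(
  userOverrides?: FileFilteringOptions,
): FileFilteringOptions {
  return { ...DEFAULTS, ...userOverrides };
}

console.log(mergeFileFiltering());
console.log(mergeFileFiltering({ respectGitIgnore: true }));
```

Because spread copies own enumerable properties as they are, an override that sets a key explicitly to `undefined` replaces the default with `undefined`; only an absent key keeps the default.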

@@ -758,6 +761,7 @@ export async function loadCliConfig(
    extensionContextFilePaths,
    trustedFolder,
    memoryImportFormat,
    fileFiltering,
  );

  let mcpServers = mergeMcpServers(settings, activeExtensions);

@@ -870,10 +874,11 @@ export async function loadCliConfig(
    }
  };

  // ACP mode check: must include both --acp (current) and --experimental-acp (deprecated).
  // Without this check, edit, write_file, run_shell_command would be excluded in ACP mode.
  const isAcpMode = argv.acp || argv.experimentalAcp;
  if (!interactive && !isAcpMode && inputFormat !== InputFormat.STREAM_JSON) {
  if (
    !interactive &&
    !argv.experimentalAcp &&
    inputFormat !== InputFormat.STREAM_JSON
  ) {
    switch (approvalMode) {
      case ApprovalMode.PLAN:
      case ApprovalMode.DEFAULT:

@@ -122,10 +122,9 @@ export const defaultKeyBindings: KeyBindingConfig = {

  // Auto-completion
  [Command.ACCEPT_SUGGESTION]: [{ key: 'tab' }, { key: 'return', ctrl: false }],
  // Completion navigation uses only arrow keys
  // Ctrl+P/N are reserved for history navigation (HISTORY_UP/DOWN)
  [Command.COMPLETION_UP]: [{ key: 'up' }],
  [Command.COMPLETION_DOWN]: [{ key: 'down' }],
  // Completion navigation (arrow or Ctrl+P/N)
  [Command.COMPLETION_UP]: [{ key: 'up' }, { key: 'p', ctrl: true }],
  [Command.COMPLETION_DOWN]: [{ key: 'down' }, { key: 'n', ctrl: true }],

  // Text input
  // Must also exclude shift to allow shift+enter for newline
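
The hunk above shows two versions of `COMPLETION_UP`/`COMPLETION_DOWN`: arrow keys only, or arrow keys plus Ctrl+P/N, with a comment saying Ctrl+P/N are reserved for history navigation. A minimal sketch of checking two binding lists for overlap, assuming a simplified binding shape where an omitted `ctrl` matches either modifier state. `KeyBinding`, `KeyPress`, `matches`, `overlaps`, and the `historyUp` binding (inferred from that comment) are all hypothetical, not the project's real key-matcher API.

```typescript
// Hypothetical, simplified binding shape; the real type may carry more modifiers.
interface KeyBinding {
  key: string;
  ctrl?: boolean;
}

interface KeyPress {
  name: string;
  ctrl: boolean;
}

// Assumed rule: ctrl undefined matches either state; true/false must match exactly.
function matches(binding: KeyBinding, press: KeyPress): boolean {
  return (
    binding.key === press.name &&
    (binding.ctrl === undefined || binding.ctrl === press.ctrl)
  );
}

// True when a single key press could trigger a binding from both lists.
function overlaps(a: KeyBinding[], b: KeyBinding[]): boolean {
  return a.some((x) =>
    b.some(
      (y) =>
        x.key === y.key &&
        (x.ctrl === undefined || y.ctrl === undefined || x.ctrl === y.ctrl),
    ),
  );
}

const historyUp: KeyBinding[] = [{ key: 'p', ctrl: true }]; // assumed
const arrowsOnly: KeyBinding[] = [{ key: 'up' }];
const arrowsAndCtrlP: KeyBinding[] = [{ key: 'up' }, { key: 'p', ctrl: true }];

console.log(overlaps(arrowsOnly, historyUp)); // false
console.log(overlaps(arrowsAndCtrlP, historyUp)); // true
console.log(matches({ key: 'up' }, { name: 'up', ctrl: true })); // true
```

Under these assumptions, only the arrow-only version keeps Ctrl+P/N free for history navigation, which is what the comment on that version relies on.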

@@ -106,6 +106,7 @@ const MIGRATION_MAP: Record<string, string> = {
  mcpServers: 'mcpServers',
  mcpServerCommand: 'mcp.serverCommand',
  memoryImportFormat: 'context.importFormat',
  memoryDiscoveryMaxDirs: 'context.discoveryMaxDirs',
  model: 'model.name',
  preferredEditor: 'general.preferredEditor',
  sandbox: 'tools.sandbox',

@@ -921,21 +922,6 @@ export function migrateDeprecatedSettings(

      loadedSettings.setValue(scope, 'extensions', newExtensionsValue);
    }

    const legacySkills = (
      settings as Settings & {
        tools?: { experimental?: { skills?: boolean } };
      }
    ).tools?.experimental?.skills;
    if (
      legacySkills !== undefined &&
      settings.experimental?.skills === undefined
    ) {
      console.log(
        `Migrating deprecated tools.experimental.skills setting from ${scope} settings...`,
      );
      loadedSettings.setValue(scope, 'experimental.skills', legacySkills);
    }
  };

  processScope(SettingScope.User);
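
The `--experimental-skills` default and the migration above both reconcile the deprecated `tools.experimental.skills` key with `experimental.skills`. A minimal sketch of those two rules as pure functions; `SkillsSettings`, `resolveSkillsFlag`, and `needsSkillsMigration` are hypothetical names, and the settings shape is trimmed down to the two keys involved.

```typescript
// Hypothetical, trimmed-down settings shape: only the two keys involved.
interface SkillsSettings {
  experimental?: { skills?: boolean };
  tools?: { experimental?: { skills?: boolean } }; // deprecated location
}

// Precedence from the CLI default: new key, then legacy key, then false.
function resolveSkillsFlag(settings: SkillsSettings): boolean {
  return (
    settings.experimental?.skills ??
    settings.tools?.experimental?.skills ??
    false
  );
}

// Migration rule: copy the legacy value only if it exists and the new key is unset.
function needsSkillsMigration(settings: SkillsSettings): boolean {
  return (
    settings.tools?.experimental?.skills !== undefined &&
    settings.experimental?.skills === undefined
  );
}

const legacyOnly: SkillsSettings = { tools: { experimental: { skills: true } } };
const bothSet: SkillsSettings = {
  experimental: { skills: false },
  tools: { experimental: { skills: true } },
};

console.log(resolveSkillsFlag({})); // false
console.log(resolveSkillsFlag(legacyOnly)); // true
console.log(resolveSkillsFlag(bothSet)); // false
console.log(needsSkillsMigration(legacyOnly)); // true
console.log(needsSkillsMigration(bothSet)); // false
```

`??` only falls through on `null` or `undefined`, so an explicit `experimental.skills: false` wins over a legacy `true`; with `||` the legacy value would leak through.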

@@ -434,16 +434,6 @@ const SETTINGS_SCHEMA = {
          'Show welcome back dialog when returning to a project with conversation history.',
        showInDialog: true,
      },
      enableUserFeedback: {
        type: 'boolean',
        label: 'Enable User Feedback',
        category: 'UI',
        requiresRestart: false,
        default: true,
        description:
          'Show optional feedback dialog after conversations to help improve Qwen performance.',
        showInDialog: true,
      },
      accessibility: {
        type: 'object',
        label: 'Accessibility',

@@ -474,15 +464,6 @@ const SETTINGS_SCHEMA = {
          },
        },
      },
      feedbackLastShownTimestamp: {
        type: 'number',
        label: 'Feedback Last Shown Timestamp',
        category: 'UI',
        requiresRestart: false,
        default: 0,
        description: 'The last time the feedback dialog was shown.',
        showInDialog: false,
      },
    },
  },

@@ -741,6 +722,15 @@ const SETTINGS_SCHEMA = {
        description: 'The format to use when importing memory.',
        showInDialog: false,
      },
      discoveryMaxDirs: {
        type: 'number',
        label: 'Memory Discovery Max Dirs',
        category: 'Context',
        requiresRestart: false,
        default: 200,
        description: 'Maximum number of directories to search for memory.',
        showInDialog: true,
      },
      includeDirectories: {
        type: 'array',
        label: 'Include Directories',

@@ -1217,16 +1207,6 @@ const SETTINGS_SCHEMA = {
    description: 'Setting to enable experimental features',
    showInDialog: false,
    properties: {
      skills: {
        type: 'boolean',
        label: 'Skills',
        category: 'Experimental',
        requiresRestart: true,
        default: false,
        description:
          'Enable experimental Agent Skills feature. When enabled, Qwen Code can use Skills from .qwen/skills/ and ~/.qwen/skills/.',
        showInDialog: true,
      },
      extensionManagement: {
        type: 'boolean',
        label: 'Extension Management',

@@ -289,13 +289,6 @@ export default {
  'Show Citations': 'Quellenangaben anzeigen',
  'Custom Witty Phrases': 'Benutzerdefinierte Witzige Sprüche',
  'Enable Welcome Back': 'Willkommen-zurück aktivieren',
  'Enable User Feedback': 'Benutzerfeedback aktivieren',
  'How is Qwen doing this session? (optional)':
    'Wie macht sich Qwen in dieser Sitzung? (optional)',
  Bad: 'Schlecht',
  Good: 'Gut',
  'Not Sure Yet': 'Noch nicht sicher',
  'Any other key': 'Beliebige andere Taste',
  'Disable Loading Phrases': 'Ladesprüche deaktivieren',
  'Screen Reader Mode': 'Bildschirmleser-Modus',
  'IDE Mode': 'IDE-Modus',

@@ -286,13 +286,6 @@ export default {
  'Show Citations': 'Show Citations',
  'Custom Witty Phrases': 'Custom Witty Phrases',
  'Enable Welcome Back': 'Enable Welcome Back',
  'Enable User Feedback': 'Enable User Feedback',
  'How is Qwen doing this session? (optional)':
    'How is Qwen doing this session? (optional)',
  Bad: 'Bad',
  Good: 'Good',
  'Not Sure Yet': 'Not Sure Yet',
  'Any other key': 'Any other key',
  'Disable Loading Phrases': 'Disable Loading Phrases',
  'Screen Reader Mode': 'Screen Reader Mode',
  'IDE Mode': 'IDE Mode',

@@ -289,13 +289,6 @@ export default {
  'Show Citations': 'Показывать цитаты',
  'Custom Witty Phrases': 'Пользовательские остроумные фразы',
  'Enable Welcome Back': 'Включить приветствие при возврате',
  'Enable User Feedback': 'Включить отзывы пользователей',
  'How is Qwen doing this session? (optional)':
    'Как дела у Qwen в этой сессии? (необязательно)',
  Bad: 'Плохо',
  Good: 'Хорошо',
  'Not Sure Yet': 'Пока не уверен',
  'Any other key': 'Любая другая клавиша',
  'Disable Loading Phrases': 'Отключить фразы при загрузке',
  'Screen Reader Mode': 'Режим программы чтения с экрана',
  'IDE Mode': 'Режим IDE',

@@ -277,12 +277,6 @@ export default {
  'Show Citations': '显示引用',
  'Custom Witty Phrases': '自定义诙谐短语',
  'Enable Welcome Back': '启用欢迎回来',
  'Enable User Feedback': '启用用户反馈',
  'How is Qwen doing this session? (optional)': 'Qwen 这次表现如何?(可选)',
  Bad: '不满意',
  Good: '满意',
  'Not Sure Yet': '暂不评价',
  'Any other key': '任意其他键',
  'Disable Loading Phrases': '禁用加载短语',
  'Screen Reader Mode': '屏幕阅读器模式',
  'IDE Mode': 'IDE 模式',

@@ -879,11 +873,11 @@ export default {
  'Session Stats': '会话统计',
  'Model Usage': '模型使用情况',
  Reqs: '请求数',
  'Input Tokens': '输入 token 数',
  'Output Tokens': '输出 token 数',
  'Input Tokens': '输入令牌',
  'Output Tokens': '输出令牌',
  'Savings Highlight:': '节省亮点:',
  'of input tokens were served from the cache, reducing costs.':
    '从缓存载入 token ,降低了成本',
    '的输入令牌来自缓存,降低了成本',
  'Tip: For a full token breakdown, run `/stats model`.':
    '提示:要查看完整的令牌明细,请运行 `/stats model`',
  'Model Stats For Nerds': '模型统计(技术细节)',

@@ -18,6 +18,7 @@ import { copyCommand } from '../ui/commands/copyCommand.js';
import { docsCommand } from '../ui/commands/docsCommand.js';
import { directoryCommand } from '../ui/commands/directoryCommand.js';
import { editorCommand } from '../ui/commands/editorCommand.js';
import { exportCommand } from '../ui/commands/exportCommand.js';
import { extensionsCommand } from '../ui/commands/extensionsCommand.js';
import { helpCommand } from '../ui/commands/helpCommand.js';
import { ideCommand } from '../ui/commands/ideCommand.js';

@@ -67,6 +68,7 @@ export class BuiltinCommandLoader implements ICommandLoader {
      docsCommand,
      directoryCommand,
      editorCommand,
      exportCommand,
      extensionsCommand,
      helpCommand,
      await ideCommand(),

@@ -45,7 +45,6 @@ import process from 'node:process';
import { useHistory } from './hooks/useHistoryManager.js';
import { useMemoryMonitor } from './hooks/useMemoryMonitor.js';
import { useThemeCommand } from './hooks/useThemeCommand.js';
import { useFeedbackDialog } from './hooks/useFeedbackDialog.js';
import { useAuthCommand } from './auth/useAuth.js';
import { useEditorSettings } from './hooks/useEditorSettings.js';
import { useSettingsCommand } from './hooks/useSettingsCommand.js';

@@ -576,6 +575,7 @@ export const AppContainer = (props: AppContainerProps) => {
        config.getExtensionContextFilePaths(),
        config.isTrustedFolder(),
        settings.merged.context?.importFormat || 'tree', // Use setting or default to 'tree'
        config.getFileFilteringOptions(),
      );

      config.setUserMemory(memoryContent);

@@ -1196,19 +1196,6 @@ export const AppContainer = (props: AppContainerProps) => {
    isApprovalModeDialogOpen ||
    isResumeDialogOpen;

  const {
    isFeedbackDialogOpen,
    openFeedbackDialog,
    closeFeedbackDialog,
    submitFeedback,
  } = useFeedbackDialog({
    config,
    settings,
    streamingState,
    history: historyManager.history,
    sessionStats,
  });

  const pendingHistoryItems = useMemo(
    () => [...pendingSlashCommandHistoryItems, ...pendingGeminiHistoryItems],
    [pendingSlashCommandHistoryItems, pendingGeminiHistoryItems],

@@ -1305,8 +1292,6 @@ export const AppContainer = (props: AppContainerProps) => {
      // Subagent dialogs
      isSubagentCreateDialogOpen,
      isAgentsManagerDialogOpen,
      // Feedback dialog
      isFeedbackDialogOpen,
    }),
    [
      isThemeDialogOpen,

@@ -1397,8 +1382,6 @@ export const AppContainer = (props: AppContainerProps) => {
      // Subagent dialogs
      isSubagentCreateDialogOpen,
      isAgentsManagerDialogOpen,
      // Feedback dialog
      isFeedbackDialogOpen,
    ],
  );

@@ -1439,10 +1422,6 @@ export const AppContainer = (props: AppContainerProps) => {
      openResumeDialog,
      closeResumeDialog,
      handleResume,
      // Feedback dialog
      openFeedbackDialog,
      closeFeedbackDialog,
      submitFeedback,
    }),
    [
      handleThemeSelect,

@@ -1478,10 +1457,6 @@ export const AppContainer = (props: AppContainerProps) => {
      openResumeDialog,
      closeResumeDialog,
      handleResume,
      // Feedback dialog
      openFeedbackDialog,
      closeFeedbackDialog,
      submitFeedback,
    ],
  );

@@ -1,61 +0,0 @@
import { Box, Text } from 'ink';
import type React from 'react';
import { t } from '../i18n/index.js';
import { useUIActions } from './contexts/UIActionsContext.js';
import { useUIState } from './contexts/UIStateContext.js';
import { useKeypress } from './hooks/useKeypress.js';

const FEEDBACK_OPTIONS = {
  GOOD: 1,
  BAD: 2,
  NOT_SURE: 3,
} as const;

const FEEDBACK_OPTION_KEYS = {
  [FEEDBACK_OPTIONS.GOOD]: '1',
  [FEEDBACK_OPTIONS.BAD]: '2',
  [FEEDBACK_OPTIONS.NOT_SURE]: 'any',
} as const;

export const FEEDBACK_DIALOG_KEYS = ['1', '2'] as const;

export const FeedbackDialog: React.FC = () => {
  const uiState = useUIState();
  const uiActions = useUIActions();

  useKeypress(
    (key) => {
      if (key.name === FEEDBACK_OPTION_KEYS[FEEDBACK_OPTIONS.GOOD]) {
        uiActions.submitFeedback(FEEDBACK_OPTIONS.GOOD);
      } else if (key.name === FEEDBACK_OPTION_KEYS[FEEDBACK_OPTIONS.BAD]) {
        uiActions.submitFeedback(FEEDBACK_OPTIONS.BAD);
      } else {
        uiActions.submitFeedback(FEEDBACK_OPTIONS.NOT_SURE);
      }

      uiActions.closeFeedbackDialog();
    },
    { isActive: uiState.isFeedbackDialogOpen },
  );

  return (
    <Box flexDirection="column" marginY={1}>
      <Box>
        <Text color="cyan">● </Text>
        <Text bold>{t('How is Qwen doing this session? (optional)')}</Text>
      </Box>
      <Box marginTop={1}>
        <Text color="cyan">
          {FEEDBACK_OPTION_KEYS[FEEDBACK_OPTIONS.GOOD]}:{' '}
        </Text>
        <Text>{t('Good')}</Text>
        <Text> </Text>
        <Text color="cyan">{FEEDBACK_OPTION_KEYS[FEEDBACK_OPTIONS.BAD]}: </Text>
        <Text>{t('Bad')}</Text>
        <Text> </Text>
        <Text color="cyan">{t('Any other key')}: </Text>
        <Text>{t('Not Sure Yet')}</Text>
      </Box>
    </Box>
  );
};
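
The deleted `FeedbackDialog` maps key presses to ratings inside its `useKeypress` callback. A minimal sketch of that mapping extracted as a pure function, so it can be checked without Ink or React. `feedbackForKey` and the `FeedbackOption` type are hypothetical; the `FEEDBACK_OPTIONS` values mirror the deleted constants.

```typescript
// Mirrors the FEEDBACK_OPTIONS constants from the deleted component.
const FEEDBACK_OPTIONS = { GOOD: 1, BAD: 2, NOT_SURE: 3 } as const;
type FeedbackOption = (typeof FEEDBACK_OPTIONS)[keyof typeof FEEDBACK_OPTIONS];

// Hypothetical pure helper: '1' is Good, '2' is Bad, any other key is Not Sure Yet.
function feedbackForKey(keyName: string | undefined): FeedbackOption {
  if (keyName === '1') return FEEDBACK_OPTIONS.GOOD;
  if (keyName === '2') return FEEDBACK_OPTIONS.BAD;
  return FEEDBACK_OPTIONS.NOT_SURE;
}

console.log(feedbackForKey('1')); // 1
console.log(feedbackForKey('2')); // 2
console.log(feedbackForKey('escape')); // 3
```

In the component, `closeFeedbackDialog()` runs after every key press, so the dialog cannot be dismissed without recording a rating: any key other than 1 or 2 is submitted as Not Sure Yet.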

@@ -4,11 +4,7 @@
 * SPDX-License-Identifier: Apache-2.0
 */

import type {
  Config,
  ContentGeneratorConfig,
  ModelProvidersConfig,
} from '@qwen-code/qwen-code-core';
import type { Config, ModelProvidersConfig } from '@qwen-code/qwen-code-core';
import {