mirror of

https://github.com/QwenLM/qwen-code.git

synced 2026-01-07 01:19:13 +00:00

Compare commits: feat/suppo ... v0.5.1-nig (1 commit)

| Author | SHA1 | Date |
|---|---|---|
| | 7ecd2520ff | |

9

.github/workflows/e2e.yml

vendored


@@ -18,6 +18,8 @@ jobs:
- 'sandbox:docker'
node-version:
- '20.x'
- '22.x'
- '24.x'
steps:
- name: 'Checkout'
uses: 'actions/checkout@08c6903cd8c0fde910a37f88322edcfb5dd907a8' # ratchet:actions/checkout@v5

@@ -65,13 +67,10 @@ jobs:
OPENAI_BASE_URL: '${{ secrets.OPENAI_BASE_URL }}'
OPENAI_MODEL: '${{ secrets.OPENAI_MODEL }}'
KEEP_OUTPUT: 'true'
SANDBOX: '${{ matrix.sandbox }}'
VERBOSE: 'true'
run: |-
if [[ "${{ matrix.sandbox }}" == "sandbox:docker" ]]; then
npm run test:integration:sandbox:docker
else
npm run test:integration:sandbox:none
fi
npm run "test:integration:${SANDBOX}"

e2e-test-macos:
name: 'E2E Test - macOS'

143

.github/workflows/release-sdk.yml

vendored


@@ -33,10 +33,6 @@ on:
type: 'boolean'
default: false

concurrency:
group: '${{ github.workflow }}'
cancel-in-progress: false

jobs:
release-sdk:
runs-on: 'ubuntu-latest'

@@ -50,7 +46,6 @@ jobs:
packages: 'write'
id-token: 'write'
issues: 'write'
pull-requests: 'write'
outputs:
RELEASE_TAG: '${{ steps.version.outputs.RELEASE_TAG }}'

@@ -91,8 +86,6 @@ jobs:
with:
node-version-file: '.nvmrc'
cache: 'npm'
registry-url: 'https://registry.npmjs.org'
scope: '@qwen-code'

- name: 'Install Dependencies'
run: |-

@@ -128,19 +121,6 @@ jobs:
IS_PREVIEW: '${{ steps.vars.outputs.is_preview }}'
MANUAL_VERSION: '${{ inputs.version }}'

- name: 'Set SDK package version (local only)'
env:
RELEASE_VERSION: '${{ steps.version.outputs.RELEASE_VERSION }}'
run: |-
# Ensure the package version matches the computed release version.
# This is required for nightly/preview because npm does not allow re-publishing the same version.
npm version -w @qwen-code/sdk "${RELEASE_VERSION}" --no-git-tag-version --allow-same-version

- name: 'Build CLI Bundle'
run: |
npm run build
npm run bundle

- name: 'Run Tests'
if: |-
${{ github.event.inputs.force_skip_tests != 'true' }}

@@ -152,6 +132,13 @@ jobs:
OPENAI_BASE_URL: '${{ secrets.OPENAI_BASE_URL }}'
OPENAI_MODEL: '${{ secrets.OPENAI_MODEL }}'

- name: 'Build CLI for Integration Tests'
if: |-
${{ github.event.inputs.force_skip_tests != 'true' }}
run: |
npm run build
npm run bundle

- name: 'Run SDK Integration Tests'
if: |-
${{ github.event.inputs.force_skip_tests != 'true' }}

@@ -168,21 +155,7 @@ jobs:
git config user.name "github-actions[bot]"
git config user.email "github-actions[bot]@users.noreply.github.com"

- name: 'Build SDK'
working-directory: 'packages/sdk-typescript'
run: |-
npm run build

- name: 'Publish @qwen-code/sdk'
working-directory: 'packages/sdk-typescript'
run: |-
npm publish --access public --tag=${{ steps.version.outputs.NPM_TAG }} ${{ steps.vars.outputs.is_dry_run == 'true' && '--dry-run' || '' }}
env:
NODE_AUTH_TOKEN: '${{ secrets.NPM_TOKEN }}'

- name: 'Create and switch to a release branch'
if: |-
${{ steps.vars.outputs.is_dry_run == 'false' && steps.vars.outputs.is_nightly == 'false' && steps.vars.outputs.is_preview == 'false' }}
id: 'release_branch'
env:
RELEASE_TAG: '${{ steps.version.outputs.RELEASE_TAG }}'

@@ -191,22 +164,50 @@ jobs:
git switch -c "${BRANCH_NAME}"
echo "BRANCH_NAME=${BRANCH_NAME}" >> "${GITHUB_OUTPUT}"

- name: 'Commit and Push package version (stable only)'
if: |-
${{ steps.vars.outputs.is_dry_run == 'false' && steps.vars.outputs.is_nightly == 'false' && steps.vars.outputs.is_preview == 'false' }}
- name: 'Update package version'
working-directory: 'packages/sdk-typescript'
env:
RELEASE_VERSION: '${{ steps.version.outputs.RELEASE_VERSION }}'
run: |-
npm version "${RELEASE_VERSION}" --no-git-tag-version --allow-same-version

- name: 'Commit and Conditionally Push package version'
env:
BRANCH_NAME: '${{ steps.release_branch.outputs.BRANCH_NAME }}'
IS_DRY_RUN: '${{ steps.vars.outputs.is_dry_run }}'
RELEASE_TAG: '${{ steps.version.outputs.RELEASE_TAG }}'
run: |-
# Only persist version bumps after a successful publish.
git add packages/sdk-typescript/package.json package-lock.json
git add packages/sdk-typescript/package.json
if git diff --staged --quiet; then
echo "No version changes to commit"
else
git commit -m "chore(release): sdk-typescript ${RELEASE_TAG}"
fi
echo "Pushing release branch to remote..."
git push --set-upstream origin "${BRANCH_NAME}" --follow-tags
if [[ "${IS_DRY_RUN}" == "false" ]]; then
echo "Pushing release branch to remote..."
git push --set-upstream origin "${BRANCH_NAME}" --follow-tags
else
echo "Dry run enabled. Skipping push."
fi

- name: 'Build SDK'
working-directory: 'packages/sdk-typescript'
run: |-
npm run build

- name: 'Configure npm for publishing'
uses: 'actions/setup-node@49933ea5288caeca8642d1e84afbd3f7d6820020' # ratchet:actions/setup-node@v4
with:
node-version-file: '.nvmrc'
registry-url: 'https://registry.npmjs.org'
scope: '@qwen-code'

- name: 'Publish @qwen-code/sdk'
working-directory: 'packages/sdk-typescript'
run: |-
npm publish --access public --tag=${{ steps.version.outputs.NPM_TAG }} ${{ steps.vars.outputs.is_dry_run == 'true' && '--dry-run' || '' }}
env:
NODE_AUTH_TOKEN: '${{ secrets.NPM_TOKEN }}'

- name: 'Create GitHub Release and Tag'
if: |-

@@ -216,68 +217,12 @@ jobs:
RELEASE_BRANCH: '${{ steps.release_branch.outputs.BRANCH_NAME }}'
RELEASE_TAG: '${{ steps.version.outputs.RELEASE_TAG }}'
PREVIOUS_RELEASE_TAG: '${{ steps.version.outputs.PREVIOUS_RELEASE_TAG }}'
IS_NIGHTLY: '${{ steps.vars.outputs.is_nightly }}'
IS_PREVIEW: '${{ steps.vars.outputs.is_preview }}'
REF: '${{ github.event.inputs.ref || github.sha }}'
run: |-
# For stable releases, use the release branch; for nightly/preview, use the current ref
if [[ "${IS_NIGHTLY}" == "true" || "${IS_PREVIEW}" == "true" ]]; then
TARGET="${REF}"
PRERELEASE_FLAG="--prerelease"
else
TARGET="${RELEASE_BRANCH}"
PRERELEASE_FLAG=""
fi

gh release create "sdk-typescript-${RELEASE_TAG}" \
--target "${TARGET}" \
--target "$RELEASE_BRANCH" \
--title "SDK TypeScript Release ${RELEASE_TAG}" \
--notes-start-tag "sdk-typescript-${PREVIOUS_RELEASE_TAG}" \
--generate-notes \
${PRERELEASE_FLAG}

- name: 'Create PR to merge release branch into main'
if: |-
${{ steps.vars.outputs.is_dry_run == 'false' && steps.vars.outputs.is_nightly == 'false' && steps.vars.outputs.is_preview == 'false' }}
id: 'pr'
env:
GITHUB_TOKEN: '${{ secrets.GITHUB_TOKEN }}'
RELEASE_BRANCH: '${{ steps.release_branch.outputs.BRANCH_NAME }}'
RELEASE_TAG: '${{ steps.version.outputs.RELEASE_TAG }}'
run: |-
set -euo pipefail

pr_url="$(gh pr list --head "${RELEASE_BRANCH}" --base main --json url --jq '.[0].url')"
if [[ -z "${pr_url}" ]]; then
pr_url="$(gh pr create \
--base main \
--head "${RELEASE_BRANCH}" \
--title "chore(release): sdk-typescript ${RELEASE_TAG}" \
--body "Automated release PR for sdk-typescript ${RELEASE_TAG}.")"
fi

echo "PR_URL=${pr_url}" >> "${GITHUB_OUTPUT}"

- name: 'Wait for CI checks to complete'
if: |-
${{ steps.vars.outputs.is_dry_run == 'false' && steps.vars.outputs.is_nightly == 'false' && steps.vars.outputs.is_preview == 'false' }}
env:
GITHUB_TOKEN: '${{ secrets.GITHUB_TOKEN }}'
PR_URL: '${{ steps.pr.outputs.PR_URL }}'
run: |-
set -euo pipefail
echo "Waiting for CI checks to complete..."
gh pr checks "${PR_URL}" --watch --interval 30

- name: 'Enable auto-merge for release PR'
if: |-
${{ steps.vars.outputs.is_dry_run == 'false' && steps.vars.outputs.is_nightly == 'false' && steps.vars.outputs.is_preview == 'false' }}
env:
GITHUB_TOKEN: '${{ secrets.GITHUB_TOKEN }}'
PR_URL: '${{ steps.pr.outputs.PR_URL }}'
run: |-
set -euo pipefail
gh pr merge "${PR_URL}" --merge --auto
--generate-notes

- name: 'Create Issue on Failure'
if: |-

4

.github/workflows/release.yml

vendored


@@ -133,8 +133,8 @@ jobs:
${{ github.event.inputs.force_skip_tests != 'true' }}
run: |
npm run preflight
npm run test:integration:cli:sandbox:none
npm run test:integration:cli:sandbox:docker
npm run test:integration:sandbox:none
npm run test:integration:sandbox:docker
env:
OPENAI_API_KEY: '${{ secrets.OPENAI_API_KEY }}'
OPENAI_BASE_URL: '${{ secrets.OPENAI_BASE_URL }}'

7

.vscode/settings.json

vendored


@@ -13,10 +13,5 @@
"[javascript]": {
"editor.defaultFormatter": "esbenp.prettier-vscode"
},
"vitest.disableWorkspaceWarning": true,
"lsp": {
"enabled": true,
"allowed": ["typescript-language-server"],
"excluded": ["gopls"]
}
"vitest.disableWorkspaceWarning": true
}

110

CONTRIBUTING.md


@@ -2,6 +2,27 @@

We would love to accept your patches and contributions to this project.

## Before you begin

### Sign our Contributor License Agreement

Contributions to this project must be accompanied by a
[Contributor License Agreement](https://cla.developers.google.com/about) (CLA).
You (or your employer) retain the copyright to your contribution; this simply
gives us permission to use and redistribute your contributions as part of the
project.

If you or your current employer have already signed the Google CLA (even if it
was for a different project), you probably don't need to do it again.

Visit <https://cla.developers.google.com/> to see your current agreements or to
sign a new one.

### Review our Community Guidelines

This project follows [Google's Open Source Community
Guidelines](https://opensource.google/conduct/).

## Contribution Process

### Code Reviews

@@ -53,6 +74,12 @@ Your PR should have a clear, descriptive title and a detailed description of the

In the PR description, explain the "why" behind your changes and link to the relevant issue (e.g., `Fixes #123`).

## Forking

If you are forking the repository you will be able to run the Build, Test and Integration test workflows. However in order to make the integration tests run you'll need to add a [GitHub Repository Secret](https://docs.github.com/en/actions/security-for-github-actions/security-guides/using-secrets-in-github-actions#creating-secrets-for-a-repository) with a value of `GEMINI_API_KEY` and set that to a valid API key that you have available. Your key and secret are private to your repo; no one without access can see your key and you cannot see any secrets related to this repo.

Additionally you will need to click on the `Actions` tab and enable workflows for your repository, you'll find it's the large blue button in the center of the screen.

## Development Setup and Workflow

This section guides contributors on how to build, modify, and understand the development setup of this project.

@@ -71,8 +98,8 @@ This section guides contributors on how to build, modify, and understand the dev
To clone the repository:

```bash
git clone https://github.com/QwenLM/qwen-code.git # Or your fork's URL
cd qwen-code
git clone https://github.com/google-gemini/gemini-cli.git # Or your fork's URL
cd gemini-cli
```

To install dependencies defined in `package.json` as well as root dependencies:

@@ -91,9 +118,9 @@ This command typically compiles TypeScript to JavaScript, bundles assets, and pr

### Enabling Sandboxing

[Sandboxing](#sandboxing) is highly recommended and requires, at a minimum, setting `QWEN_SANDBOX=true` in your `~/.env` and ensuring a sandboxing provider (e.g. `macOS Seatbelt`, `docker`, or `podman`) is available. See [Sandboxing](#sandboxing) for details.
[Sandboxing](#sandboxing) is highly recommended and requires, at a minimum, setting `GEMINI_SANDBOX=true` in your `~/.env` and ensuring a sandboxing provider (e.g. `macOS Seatbelt`, `docker`, or `podman`) is available. See [Sandboxing](#sandboxing) for details.

To build both the `qwen-code` CLI utility and the sandbox container, run `build:all` from the root directory:
To build both the `gemini` CLI utility and the sandbox container, run `build:all` from the root directory:

```bash
npm run build:all

@@ -103,13 +130,13 @@ To skip building the sandbox container, you can use `npm run build` instead.

### Running

To start the Qwen Code application from the source code (after building), run the following command from the root directory:
To start the Gemini CLI from the source code (after building), run the following command from the root directory:

```bash
npm start
```

If you'd like to run the source build outside of the qwen-code folder, you can utilize `npm link path/to/qwen-code/packages/cli` (see: [docs](https://docs.npmjs.com/cli/v9/commands/npm-link)) to run with `qwen-code`
If you'd like to run the source build outside of the gemini-cli folder, you can utilize `npm link path/to/gemini-cli/packages/cli` (see: [docs](https://docs.npmjs.com/cli/v9/commands/npm-link)) or `alias gemini="node path/to/gemini-cli/packages/cli"` to run with `gemini`

### Running Tests

@@ -127,7 +154,7 @@ This will run tests located in the `packages/core` and `packages/cli` directorie

#### Integration Tests

The integration tests are designed to validate the end-to-end functionality of Qwen Code. They are not run as part of the default `npm run test` command.
The integration tests are designed to validate the end-to-end functionality of the Gemini CLI. They are not run as part of the default `npm run test` command.

To run the integration tests, use the following command:

@@ -182,61 +209,19 @@ npm run lint
### Coding Conventions

- Please adhere to the coding style, patterns, and conventions used throughout the existing codebase.
- Consult [QWEN.md](https://github.com/QwenLM/qwen-code/blob/main/QWEN.md) (typically found in the project root) for specific instructions related to AI-assisted development, including conventions for React, comments, and Git usage.
- **Imports:** Pay special attention to import paths. The project uses ESLint to enforce restrictions on relative imports between packages.

### Project Structure

- `packages/`: Contains the individual sub-packages of the project.
- `cli/`: The command-line interface.
- `core/`: The core backend logic for Qwen Code.
- `core/`: The core backend logic for the Gemini CLI.
- `docs/`: Contains all project documentation.
- `scripts/`: Utility scripts for building, testing, and development tasks.

For more detailed architecture, see `docs/architecture.md`.

## Documentation Development

This section describes how to develop and preview the documentation locally.

### Prerequisites

1. Ensure you have Node.js (version 18+) installed
2. Have npm or yarn available

### Setup Documentation Site Locally

To work on the documentation and preview changes locally:

1. Navigate to the `docs-site` directory:

```bash
cd docs-site
```

2. Install dependencies:

```bash
npm install
```

3. Link the documentation content from the main `docs` directory:

```bash
npm run link
```

This creates a symbolic link from `../docs` to `content` in the docs-site project, allowing the documentation content to be served by the Next.js site.

4. Start the development server:

```bash
npm run dev
```

5. Open [http://localhost:3000](http://localhost:3000) in your browser to see the documentation site with live updates as you make changes.

Any changes made to the documentation files in the main `docs` directory will be reflected immediately in the documentation site.
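As a rough illustration of the linking step, the sketch below creates the kind of symbolic link described in step 3. It is only an assumption about what `npm run link` does internally; the `link_docs` helper and the temporary demo directory are hypothetical and not part of the repository.

```bash
# Hypothetical helper: point <site_dir>/content at the docs directory.
# The real `npm run link` script may work differently.
link_docs() {
  docs_dir=$1   # link target, e.g. ../docs (resolved relative to site_dir)
  site_dir=$2   # docs-site checkout
  # -s: symbolic link, -f: replace an existing link, -n: do not descend into an existing link
  ln -sfn "$docs_dir" "$site_dir/content"
}

# Demo in a throwaway directory so no real checkout is touched.
demo_root=$(mktemp -d)
mkdir -p "$demo_root/docs" "$demo_root/docs-site"
echo "# Hello" > "$demo_root/docs/index.md"
link_docs "../docs" "$demo_root/docs-site"
cat "$demo_root/docs-site/content/index.md"
```

Because `content` is a link rather than a copy, edits under `docs` are visible through it immediately, which is consistent with the live-update behavior described above.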

## Debugging

### VS Code:

@@ -246,7 +231,7 @@ Any changes made to the documentation files in the main `docs` directory will be
```bash
npm run debug
```
This command runs `node --inspect-brk dist/index.js` within the `packages/cli` directory, pausing execution until a debugger attaches. You can then open `chrome://inspect` in your Chrome browser to connect to the debugger.
This command runs `node --inspect-brk dist/gemini.js` within the `packages/cli` directory, pausing execution until a debugger attaches. You can then open `chrome://inspect` in your Chrome browser to connect to the debugger.
2. In VS Code, use the "Attach" launch configuration (found in `.vscode/launch.json`).

Alternatively, you can use the "Launch Program" configuration in VS Code if you prefer to launch the currently open file directly, but 'F5' is generally recommended.

@@ -254,16 +239,16 @@ Alternatively, you can use the "Launch Program" configuration in VS Code if you
To hit a breakpoint inside the sandbox container run:

```bash
DEBUG=1 qwen-code
DEBUG=1 gemini
```

**Note:** If you have `DEBUG=true` in a project's `.env` file, it won't affect qwen-code due to automatic exclusion. Use `.qwen-code/.env` files for qwen-code specific debug settings.
**Note:** If you have `DEBUG=true` in a project's `.env` file, it won't affect gemini-cli due to automatic exclusion. Use `.gemini/.env` files for gemini-cli specific debug settings.

### React DevTools

To debug the CLI's React-based UI, you can use React DevTools. Ink, the library used for the CLI's interface, is compatible with React DevTools version 4.x.

1. **Start the Qwen Code application in development mode:**
1. **Start the Gemini CLI in development mode:**

```bash
DEV=true npm start

@@ -285,10 +270,23 @@ To debug the CLI's React-based UI, you can use React DevTools. Ink, the library
```

Your running CLI application should then connect to React DevTools.

## Sandboxing

> TBD
### macOS Seatbelt

On macOS, `qwen` uses Seatbelt (`sandbox-exec`) under a `permissive-open` profile (see `packages/cli/src/utils/sandbox-macos-permissive-open.sb`) that restricts writes to the project folder but otherwise allows all other operations and outbound network traffic ("open") by default. You can switch to a `restrictive-closed` profile (see `packages/cli/src/utils/sandbox-macos-restrictive-closed.sb`) that declines all operations and outbound network traffic ("closed") by default by setting `SEATBELT_PROFILE=restrictive-closed` in your environment or `.env` file. Available built-in profiles are `{permissive,restrictive}-{open,closed,proxied}` (see below for proxied networking). You can also switch to a custom profile `SEATBELT_PROFILE=<profile>` if you also create a file `.qwen/sandbox-macos-<profile>.sb` under your project settings directory `.qwen`.
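The profile naming rules above can be sketched in shell. This is a hypothetical helper, not the CLI's actual lookup code: it assumes every built-in profile follows the `packages/cli/src/utils/sandbox-macos-<profile>.sb` pattern shown for the two profiles named above, and that any other name maps to a custom file under `.qwen`.

```bash
# Returns 0 if $1 is one of the six built-in names:
# {permissive,restrictive}-{open,closed,proxied}
is_builtin_seatbelt_profile() {
  case "$1" in
    permissive-open | permissive-closed | permissive-proxied) return 0 ;;
    restrictive-open | restrictive-closed | restrictive-proxied) return 0 ;;
    *) return 1 ;;
  esac
}

# Prints the profile file a given SEATBELT_PROFILE value would refer to
# (path pattern for built-ins is an assumption, see the note above).
seatbelt_profile_path() {
  profile=${1:-permissive-open}
  if is_builtin_seatbelt_profile "$profile"; then
    echo "packages/cli/src/utils/sandbox-macos-$profile.sb"
  else
    echo ".qwen/sandbox-macos-$profile.sb"
  fi
}

# Falls back to the default profile when SEATBELT_PROFILE is unset.
seatbelt_profile_path "${SEATBELT_PROFILE:-permissive-open}"
```

For example, `seatbelt_profile_path my-team` would point at `.qwen/sandbox-macos-my-team.sb`, the file you would need to create for a custom profile named `my-team`.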

### Container-based Sandboxing (All Platforms)

For stronger container-based sandboxing on macOS or other platforms, you can set `GEMINI_SANDBOX=true|docker|podman|<command>` in your environment or `.env` file. The specified command (or if `true` then either `docker` or `podman`) must be installed on the host machine. Once enabled, `npm run build:all` will build a minimal container ("sandbox") image and `npm start` will launch inside a fresh instance of that container. The first build can take 20-30s (mostly due to downloading of the base image) but after that both build and start overhead should be minimal. Default builds (`npm run build`) will not rebuild the sandbox.
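A minimal sketch of the command lookup described above, assuming a value of `true` means "try `docker`, then `podman`" (the actual preference order is not stated here). `resolve_sandbox_command` is a hypothetical name, not a function in the repository.

```bash
# Prints the container command to use for a given sandbox setting,
# or returns non-zero if nothing suitable is installed.
resolve_sandbox_command() {
  value=$1
  case "$value" in
    "")
      return 1 ;;                      # sandboxing not requested
    true)
      # Assumed order: docker first, then podman.
      for candidate in docker podman; do
        if command -v "$candidate" >/dev/null 2>&1; then
          echo "$candidate"
          return 0
        fi
      done
      return 1 ;;
    *)
      # An explicit command must exist on the host.
      command -v "$value" >/dev/null 2>&1 || return 1
      echo "$value" ;;
  esac
}

resolve_sandbox_command "${GEMINI_SANDBOX:-}" || echo "no sandbox command available"
```

Checking with `command -v` up front gives a clear failure when the requested command is missing, rather than an error later at container start.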

Container-based sandboxing mounts the project directory (and system temp directory) with read-write access and is started/stopped/removed automatically as you start/stop Gemini CLI. Files created within the sandbox should be automatically mapped to your user/group on host machine. You can easily specify additional mounts, ports, or environment variables by setting `SANDBOX_{MOUNTS,PORTS,ENV}` as needed. You can also fully customize the sandbox for your projects by creating the files `.qwen/sandbox.Dockerfile` and/or `.qwen/sandbox.bashrc` under your project settings directory (`.qwen`) and running `qwen` with `BUILD_SANDBOX=1` to trigger building of your custom sandbox.

#### Proxied Networking

All sandboxing methods, including macOS Seatbelt using `*-proxied` profiles, support restricting outbound network traffic through a custom proxy server that can be specified as `GEMINI_SANDBOX_PROXY_COMMAND=<command>`, where `<command>` must start a proxy server that listens on `:::8877` for relevant requests. See `docs/examples/proxy-script.md` for a minimal proxy that only allows `HTTPS` connections to `example.com:443` (e.g. `curl https://example.com`) and declines all other requests. The proxy is started and stopped automatically alongside the sandbox.

## Manual Publish

10

Makefile


@@ -1,9 +1,9 @@
# Makefile for qwen-code
# Makefile for gemini-cli

.PHONY: help install build build-sandbox build-all test lint format preflight clean start debug release run-npx create-alias

help:
@echo "Makefile for qwen-code"
@echo "Makefile for gemini-cli"
@echo ""
@echo "Usage:"
@echo " make install - Install npm dependencies"

@@ -14,11 +14,11 @@ help:
@echo " make format - Format the code"
@echo " make preflight - Run formatting, linting, and tests"
@echo " make clean - Remove generated files"
@echo " make start - Start the Qwen Code CLI"
@echo " make debug - Start the Qwen Code CLI in debug mode"
@echo " make start - Start the Gemini CLI"
@echo " make debug - Start the Gemini CLI in debug mode"
@echo ""
@echo " make run-npx - Run the CLI using npx (for testing the published package)"
@echo " make create-alias - Create a 'qwen' alias for your shell"
@echo " make create-alias - Create a 'gemini' alias for your shell"

install:
npm install

411

README.md


@@ -1,152 +1,382 @@

|

||||

# Qwen Code

|

||||

|

||||

<div align="center">

|

||||

|

||||

|

||||

|

||||

[](https://www.npmjs.com/package/@qwen-code/qwen-code)

|

||||

[](./LICENSE)

|

||||

[](https://nodejs.org/)

|

||||

[](https://www.npmjs.com/package/@qwen-code/qwen-code)

|

||||

|

||||

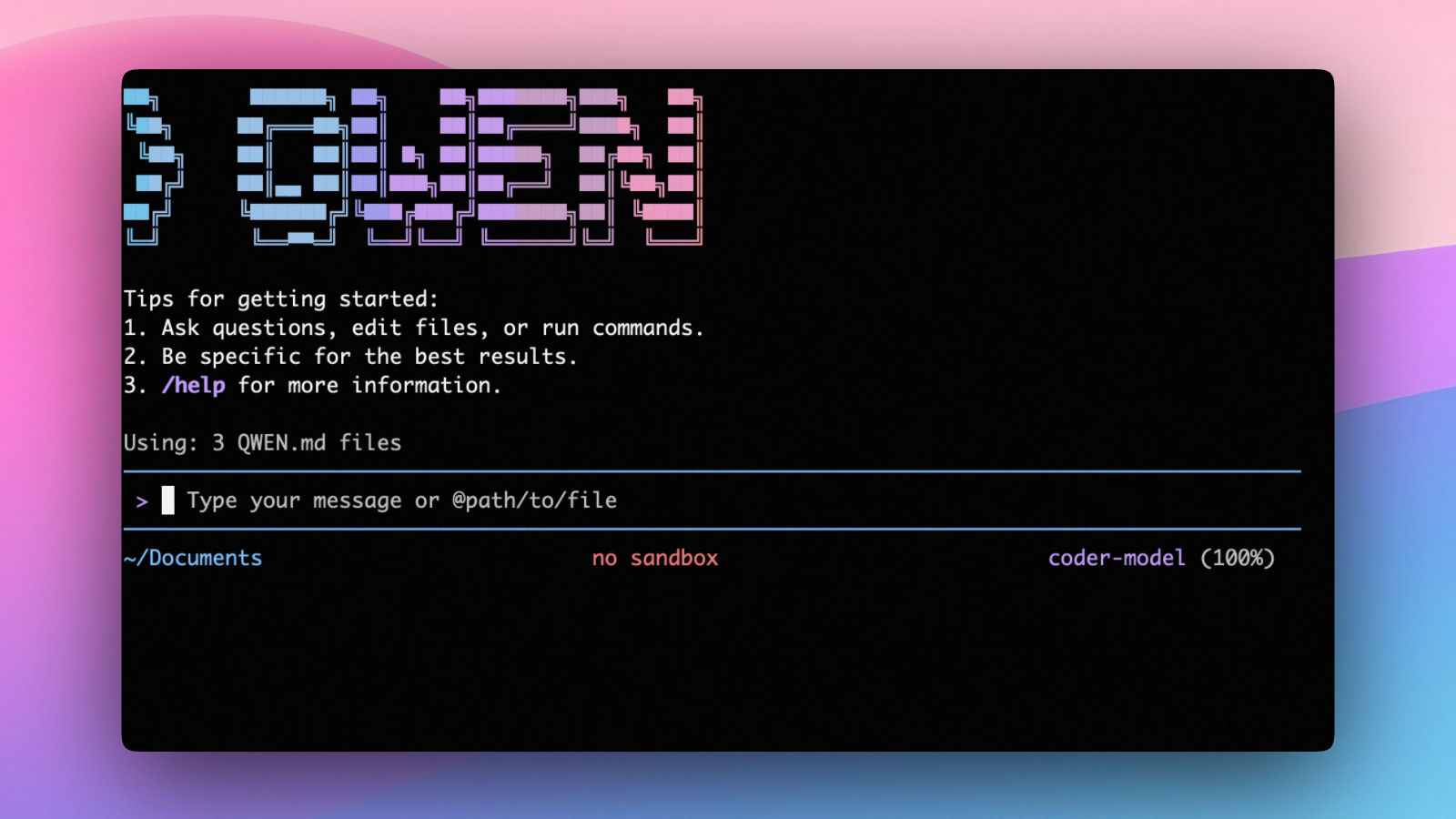

**An open-source AI agent that lives in your terminal.**

|

||||

**AI-powered command-line workflow tool for developers**

|

||||

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/zh/users/overview">中文</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/de/users/overview">Deutsch</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/fr/users/overview">français</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/ja/users/overview">日本語</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/ru/users/overview">Русский</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/pt-BR/users/overview">Português (Brasil)</a>

|

||||

[Installation](#installation) • [Quick Start](#quick-start) • [Features](#key-features) • [Documentation](./docs/) • [Contributing](./CONTRIBUTING.md)

|

||||

|

||||

</div>

|

||||

|

||||

Qwen Code is an open-source AI agent for the terminal, optimized for [Qwen3-Coder](https://github.com/QwenLM/Qwen3-Coder). It helps you understand large codebases, automate tedious work, and ship faster.

|

||||

<div align="center">

|

||||

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/de/">Deutsch</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/fr">français</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/ja/">日本語</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/ru">Русский</a> |

|

||||

<a href="https://qwenlm.github.io/qwen-code-docs/zh/">中文</a>

|

||||

|

||||

</div>

|

||||

|

||||

|

||||

Qwen Code is a powerful command-line AI workflow tool adapted from [**Gemini CLI**](https://github.com/google-gemini/gemini-cli), specifically optimized for [Qwen3-Coder](https://github.com/QwenLM/Qwen3-Coder) models. It enhances your development workflow with advanced code understanding, automated tasks, and intelligent assistance.

|

||||

|

||||

## Why Qwen Code?
## 💡 Free Options Available

- **OpenAI-compatible, OAuth free tier**: use an OpenAI-compatible API, or sign in with Qwen OAuth to get 2,000 free requests/day.
- **Open-source, co-evolving**: both the framework and the Qwen3-Coder model are open-source—and they ship and evolve together.
- **Agentic workflow, feature-rich**: rich built-in tools (Skills, SubAgents, Plan Mode) for a full agentic workflow and a Claude Code-like experience.
- **Terminal-first, IDE-friendly**: built for developers who live in the command line, with optional integration for VS Code and Zed.
Get started with Qwen Code at no cost using any of these free options:

### 🔥 Qwen OAuth (Recommended)

- **2,000 requests per day** with no token limits
- **60 requests per minute** rate limit
- Simply run `qwen` and authenticate with your qwen.ai account
- Automatic credential management and refresh
- Use `/auth` command to switch to Qwen OAuth if you have initialized with OpenAI compatible mode

### 🌏 Regional Free Tiers

- **Mainland China**: ModelScope offers **2,000 free API calls per day**
- **International**: OpenRouter provides **up to 1,000 free API calls per day** worldwide

For detailed setup instructions, see [Authorization](#authorization).

> [!WARNING]
> **Token Usage Notice**: Qwen Code may issue multiple API calls per cycle, resulting in higher token usage (similar to Claude Code). We're actively optimizing API efficiency.

## Key Features

- **Code Understanding & Editing** - Query and edit large codebases beyond traditional context window limits
- **Workflow Automation** - Automate operational tasks like handling pull requests and complex rebases
- **Enhanced Parser** - Adapted parser specifically optimized for Qwen-Coder models
- **Vision Model Support** - Automatically detect images in your input and seamlessly switch to vision-capable models for multimodal analysis

## Installation

#### Prerequisites
### Prerequisites

Ensure you have [Node.js version 20](https://nodejs.org/en/download) or higher installed.

```bash
# Node.js 20+
curl -qL https://www.npmjs.com/install.sh | sh
```

#### NPM (recommended)
### Install from npm

```bash
npm install -g @qwen-code/qwen-code@latest
qwen --version
```

#### Homebrew (macOS, Linux)
### Install from source

```bash
git clone https://github.com/QwenLM/qwen-code.git
cd qwen-code
npm install
npm install -g .
```

### Install globally with Homebrew (macOS/Linux)

```bash
brew install qwen-code
```

## VS Code Extension

In addition to the CLI tool, Qwen Code also provides a **VS Code extension** that brings AI-powered coding assistance directly into your editor with features like file system operations, native diffing, interactive chat, and more.

> 📦 The extension is currently in development. For installation, features, and development guide, see the [VS Code Extension README](./packages/vscode-ide-companion/README.md).

## Quick Start

```bash
# Start Qwen Code (interactive)
# Start Qwen Code
qwen

# Then, in the session:
/help
/auth
# Example commands
> Explain this codebase structure
> Help me refactor this function
> Generate unit tests for this module
```

On first use, you'll be prompted to sign in. You can run `/auth` anytime to switch authentication methods.
### Session Management

Example prompts:
Control your token usage with configurable session limits to optimize costs and performance.

```text
What does this project do?
Explain the codebase structure.
Help me refactor this function.
Generate unit tests for this module.
#### Configure Session Token Limit

Create or edit `.qwen/settings.json` in your home directory:

```json
{
"sessionTokenLimit": 32000
}
```

|

||||

|

||||

#### Session Commands

|

||||

|

||||

- **`/compress`** - Compress conversation history to continue within token limits

|

||||

- **`/clear`** - Clear all conversation history and start fresh

|

||||

- **`/stats`** - Check current token usage and limits

|

||||

|

||||

> 📝 **Note**: Session token limit applies to a single conversation, not cumulative API calls.

|

||||

|

||||

### Vision Model Configuration

|

||||

|

||||

Qwen Code includes intelligent vision model auto-switching that detects images in your input and can automatically switch to vision-capable models for multimodal analysis. **This feature is enabled by default** - when you include images in your queries, you'll see a dialog asking how you'd like to handle the vision model switch.

|

||||

|

||||

#### Skip the Switch Dialog (Optional)

|

||||

|

||||

If you don't want to see the interactive dialog each time, configure the default behavior in your `.qwen/settings.json`:

|

||||

|

||||

```json

|

||||

{

|

||||

"experimental": {

|

||||

"vlmSwitchMode": "once"

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

**Available modes:**

|

||||

|

||||

- **`"once"`** - Switch to vision model for this query only, then revert

|

||||

- **`"session"`** - Switch to vision model for the entire session

|

||||

- **`"persist"`** - Continue with current model (no switching)

|

||||

- **Not set** - Show interactive dialog each time (default)

|

||||

|

||||

#### Command Line Override

|

||||

|

||||

You can also set the behavior via command line:

|

||||

|

||||

```bash

|

||||

# Switch once per query

|

||||

qwen --vlm-switch-mode once

|

||||

|

||||

# Switch for entire session

|

||||

qwen --vlm-switch-mode session

|

||||

|

||||

# Never switch automatically

|

||||

qwen --vlm-switch-mode persist

|

||||

```

|

||||

|

||||

#### Disable Vision Models (Optional)

|

||||

|

||||

To completely disable vision model support, add to your `.qwen/settings.json`:

|

||||

|

||||

```json

|

||||

{

|

||||

"experimental": {

|

||||

"visionModelPreview": false

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

> 💡 **Tip**: In YOLO mode (`--yolo`), vision switching happens automatically without prompts when images are detected.

|

||||

|

||||

### Authorization

|

||||

|

||||

Choose your preferred authentication method based on your needs:

|

||||

|

||||

#### 1. Qwen OAuth (🚀 Recommended - Start in 30 seconds)

|

||||

|

||||

The easiest way to get started - completely free with generous quotas:

|

||||

|

||||

```bash

|

||||

# Just run this command and follow the browser authentication

|

||||

qwen

|

||||

```

|

||||

|

||||

**What happens:**

|

||||

|

||||

1. **Instant Setup**: CLI opens your browser automatically

|

||||

2. **One-Click Login**: Authenticate with your qwen.ai account

|

||||

3. **Automatic Management**: Credentials cached locally for future use

|

||||

4. **No Configuration**: Zero setup required - just start coding!

|

||||

|

||||

**Free Tier Benefits:**

|

||||

|

||||

- ✅ **2,000 requests/day** (no token counting needed)

|

||||

- ✅ **60 requests/minute** rate limit

|

||||

- ✅ **Automatic credential refresh**

|

||||

- ✅ **Zero cost** for individual users

|

||||

- ℹ️ **Note**: Model fallback may occur to maintain service quality

|

||||

|

||||

#### 2. OpenAI-Compatible API

|

||||

|

||||

Use API keys for OpenAI or other compatible providers:

|

||||

|

||||

**Configuration Methods:**

|

||||

|

||||

1. **Environment Variables**

|

||||

|

||||

```bash

|

||||

export OPENAI_API_KEY="your_api_key_here"

|

||||

export OPENAI_BASE_URL="your_api_endpoint"

|

||||

export OPENAI_MODEL="your_model_choice"

|

||||

```

|

||||

|

||||

2. **Project `.env` File**

|

||||

Create a `.env` file in your project root:

|

||||

```env

|

||||

OPENAI_API_KEY=your_api_key_here

|

||||

OPENAI_BASE_URL=your_api_endpoint

|

||||

OPENAI_MODEL=your_model_choice

|

||||

```

|

||||

|

||||

**API Provider Options**

|

||||

|

||||

> ⚠️ **Regional Notice:**

|

||||

>

|

||||

> - **Mainland China**: Use Alibaba Cloud Bailian or ModelScope

|

||||

> - **International**: Use Alibaba Cloud ModelStudio or OpenRouter

|

||||

|

||||

<details>

|

||||

<summary>Click to watch a demo video</summary>

|

||||

<summary><b>🇨🇳 For Users in Mainland China</b></summary>

|

||||

|

||||

<video src="https://cloud.video.taobao.com/vod/HLfyppnCHplRV9Qhz2xSqeazHeRzYtG-EYJnHAqtzkQ.mp4" controls>

|

||||

Your browser does not support the video tag.

|

||||

</video>

|

||||

**Option 1: Alibaba Cloud Bailian** ([Apply for API Key](https://bailian.console.aliyun.com/))

|

||||

|

||||

```bash

|

||||

export OPENAI_API_KEY="your_api_key_here"

|

||||

export OPENAI_BASE_URL="https://dashscope.aliyuncs.com/compatible-mode/v1"

|

||||

export OPENAI_MODEL="qwen3-coder-plus"

|

||||

```

|

||||

|

||||

**Option 2: ModelScope (Free Tier)** ([Apply for API Key](https://modelscope.cn/docs/model-service/API-Inference/intro))

|

||||

|

||||

- ✅ **2,000 free API calls per day**

|

||||

- ⚠️ Connect your Aliyun account to avoid authentication errors

|

||||

|

||||

```bash

|

||||

export OPENAI_API_KEY="your_api_key_here"

|

||||

export OPENAI_BASE_URL="https://api-inference.modelscope.cn/v1"

|

||||

export OPENAI_MODEL="Qwen/Qwen3-Coder-480B-A35B-Instruct"

|

||||

```

|

||||

|

||||

</details>

|

||||

|

||||

## Authentication

|

||||

<details>

|

||||

<summary><b>🌍 For International Users</b></summary>

|

||||

|

||||

Qwen Code supports two authentication methods:

|

||||

|

||||

- **Qwen OAuth (recommended & free)**: sign in with your `qwen.ai` account in a browser.

|

||||

- **OpenAI-compatible API**: use `OPENAI_API_KEY` (and optionally a custom base URL / model).

|

||||

|

||||

#### Qwen OAuth (recommended)

|

||||

|

||||

Start `qwen`, then run:

|

||||

**Option 1: Alibaba Cloud ModelStudio** ([Apply for API Key](https://modelstudio.console.alibabacloud.com/))

|

||||

|

||||

```bash

|

||||

/auth

|

||||

export OPENAI_API_KEY="your_api_key_here"

|

||||

export OPENAI_BASE_URL="https://dashscope-intl.aliyuncs.com/compatible-mode/v1"

|

||||

export OPENAI_MODEL="qwen3-coder-plus"

|

||||

```

|

||||

|

||||

Choose **Qwen OAuth** and complete the browser flow. Your credentials are cached locally so you usually won't need to log in again.

|

||||

|

||||

#### OpenAI-compatible API (API key)

|

||||

|

||||

Environment variables (recommended for CI / headless environments):

|

||||

**Option 2: OpenRouter (Free Tier Available)** ([Apply for API Key](https://openrouter.ai/))

|

||||

|

||||

```bash

|

||||

export OPENAI_API_KEY="your-api-key-here"

|

||||

export OPENAI_BASE_URL="https://api.openai.com/v1" # optional

|

||||

export OPENAI_MODEL="gpt-4o" # optional

|

||||

export OPENAI_API_KEY="your_api_key_here"

|

||||

export OPENAI_BASE_URL="https://openrouter.ai/api/v1"

|

||||

export OPENAI_MODEL="qwen/qwen3-coder:free"

|

||||

```

|

||||

|

||||

For details (including `.qwen/.env` loading and security notes), see the [authentication guide](https://qwenlm.github.io/qwen-code-docs/en/users/configuration/auth/).

|

||||

</details>

|

||||

|

||||

## Usage

|

||||

## Usage Examples

|

||||

|

||||

As an open-source terminal agent, you can use Qwen Code in four primary ways:

|

||||

|

||||

1. Interactive mode (terminal UI)

|

||||

2. Headless mode (scripts, CI)

|

||||

3. IDE integration (VS Code, Zed)

|

||||

4. TypeScript SDK

|

||||

|

||||

#### Interactive mode

|

||||

### 🔍 Explore Codebases

|

||||

|

||||

```bash

|

||||

cd your-project/

|

||||

qwen

|

||||

|

||||

# Architecture analysis

|

||||

> Describe the main pieces of this system's architecture

|

||||

> What are the key dependencies and how do they interact?

|

||||

> Find all API endpoints and their authentication methods

|

||||

```

|

||||

|

||||

Run `qwen` in your project folder to launch the interactive terminal UI. Use `@` to reference local files (for example `@src/main.ts`).

|

||||

|

||||

#### Headless mode

|

||||

### 💻 Code Development

|

||||

|

||||

```bash

|

||||

cd your-project/

|

||||

qwen -p "your question"

|

||||

# Refactoring

|

||||

> Refactor this function to improve readability and performance

|

||||

> Convert this class to use dependency injection

|

||||

> Split this large module into smaller, focused components

|

||||

|

||||

# Code generation

|

||||

> Create a REST API endpoint for user management

|

||||

> Generate unit tests for the authentication module

|

||||

> Add error handling to all database operations

|

||||

```

|

||||

|

||||

Use `-p` to run Qwen Code without the interactive UI—ideal for scripts, automation, and CI/CD. Learn more: [Headless mode](https://qwenlm.github.io/qwen-code-docs/en/users/features/headless).

|

||||

### 🔄 Automate Workflows

|

||||

|

||||

#### IDE integration

|

||||

```bash

|

||||

# Git automation

|

||||

> Analyze git commits from the last 7 days, grouped by feature

|

||||

> Create a changelog from recent commits

|

||||

> Find all TODO comments and create GitHub issues

|

||||

|

||||

Use Qwen Code inside your editor (VS Code and Zed):

|

||||

# File operations

|

||||

> Convert all images in this directory to PNG format

|

||||

> Rename all test files to follow the *.test.ts pattern

|

||||

> Find and remove all console.log statements

|

||||

```

|

||||

|

||||

- [Use in VS Code](https://qwenlm.github.io/qwen-code-docs/en/users/integration-vscode/)

|

||||

- [Use in Zed](https://qwenlm.github.io/qwen-code-docs/en/users/integration-zed/)

|

||||

### 🐛 Debugging & Analysis

|

||||

|

||||

#### TypeScript SDK

|

||||

```bash

|

||||

# Performance analysis

|

||||

> Identify performance bottlenecks in this React component

|

||||

> Find all N+1 query problems in the codebase

|

||||

|

||||

Build on top of Qwen Code with the TypeScript SDK:

|

||||

# Security audit

|

||||

> Check for potential SQL injection vulnerabilities

|

||||

> Find all hardcoded credentials or API keys

|

||||

```

|

||||

|

||||

- [Use the Qwen Code SDK](./packages/sdk-typescript/README.md)

|

||||

## Popular Tasks

|

||||

|

||||

### 📚 Understand New Codebases

|

||||

|

||||

```text

|

||||

> What are the core business logic components?

|

||||

> What security mechanisms are in place?

|

||||

> How does the data flow through the system?

|

||||

> What are the main design patterns used?

|

||||

> Generate a dependency graph for this module

|

||||

```

|

||||

|

||||

### 🔨 Code Refactoring & Optimization

|

||||

|

||||

```text

|

||||

> What parts of this module can be optimized?

|

||||

> Help me refactor this class to follow SOLID principles

|

||||

> Add proper error handling and logging

|

||||

> Convert callbacks to async/await pattern

|

||||

> Implement caching for expensive operations

|

||||

```

|

||||

|

||||

### 📝 Documentation & Testing

|

||||

|

||||

```text

|

||||

> Generate comprehensive JSDoc comments for all public APIs

|

||||

> Write unit tests with edge cases for this component

|

||||

> Create API documentation in OpenAPI format

|

||||

> Add inline comments explaining complex algorithms

|

||||

> Generate a README for this module

|

||||

```

|

||||

|

||||

### 🚀 Development Acceleration

|

||||

|

||||

```text

|

||||

> Set up a new Express server with authentication

|

||||

> Create a React component with TypeScript and tests

|

||||

> Implement a rate limiter middleware

|

||||

> Add database migrations for new schema

|

||||

> Configure CI/CD pipeline for this project

|

||||

```

|

||||

|

||||

## Commands & Shortcuts

|

||||

|

||||

@@ -156,7 +386,6 @@ Build on top of Qwen Code with the TypeScript SDK:

|

||||

- `/clear` - Clear conversation history

|

||||

- `/compress` - Compress history to save tokens

|

||||

- `/stats` - Show current session information

|

||||

- `/bug` - Submit a bug report

|

||||

- `/exit` or `/quit` - Exit Qwen Code

|

||||

|

||||

### Keyboard Shortcuts

|

||||

@@ -165,19 +394,6 @@ Build on top of Qwen Code with the TypeScript SDK:

|

||||

- `Ctrl+D` - Exit (on empty line)

|

||||

- `Up/Down` - Navigate command history

|

||||

|

||||

> Learn more about [Commands](https://qwenlm.github.io/qwen-code-docs/en/users/features/commands/)

|

||||

>

|

||||

> **Tip**: In YOLO mode (`--yolo`), vision switching happens automatically without prompts when images are detected. Learn more about [Approval Mode](https://qwenlm.github.io/qwen-code-docs/en/users/features/approval-mode/)

|

||||

|

||||

## Configuration

|

||||

|

||||

Qwen Code can be configured via `settings.json`, environment variables, and CLI flags.

|

||||

|

||||

- **User settings**: `~/.qwen/settings.json`

|

||||

- **Project settings**: `.qwen/settings.json`

|

||||

|

||||

See [settings](https://qwenlm.github.io/qwen-code-docs/en/users/configuration/settings/) for available options and precedence.

|

||||

|

||||

## Benchmark Results

|

||||

|

||||

### Terminal-Bench Performance

|

||||

@@ -187,19 +403,24 @@ See [settings](https://qwenlm.github.io/qwen-code-docs/en/users/configuration/se

|

||||

| Qwen Code | Qwen3-Coder-480A35 | 37.5% |

|

||||

| Qwen Code | Qwen3-Coder-30BA3B | 31.3% |

|

||||

|

||||

## Ecosystem

|

||||

## Development & Contributing

|

||||

|

||||

Looking for a graphical interface?

|

||||

See [CONTRIBUTING.md](./CONTRIBUTING.md) to learn how to contribute to the project.

|

||||

|

||||

- [**AionUi**](https://github.com/iOfficeAI/AionUi) A modern GUI for command-line AI tools including Qwen Code

|

||||

- [**Gemini CLI Desktop**](https://github.com/Piebald-AI/gemini-cli-desktop) A cross-platform desktop/web/mobile UI for Qwen Code

|

||||

For detailed authentication setup, see the [authentication guide](./docs/cli/authentication.md).

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

If you encounter issues, check the [troubleshooting guide](https://qwenlm.github.io/qwen-code-docs/en/users/support/troubleshooting/).

|

||||

|

||||

To report a bug from within the CLI, run `/bug` and include a short title and repro steps.

|

||||

If you encounter issues, check the [troubleshooting guide](docs/troubleshooting.md).

|

||||

|

||||

## Acknowledgments

|

||||

|

||||

This project is based on [Google Gemini CLI](https://github.com/google-gemini/gemini-cli). We acknowledge and appreciate the excellent work of the Gemini CLI team. Our main contribution focuses on parser-level adaptations to better support Qwen-Coder models.

|

||||

|

||||

## License

|

||||

|

||||

[LICENSE](./LICENSE)

|

||||

|

||||

## Star History

|

||||

|

||||

[Star History Chart](https://www.star-history.com/#QwenLM/qwen-code&Date)

|

||||

|

||||

@@ -1,147 +0,0 @@

|

||||

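# Analysis of the LSP Integration Implementation Plan for Qwen Code CLI

## 1. Project Overview

This plan aims to integrate LSP (Language Server Protocol) capabilities natively into the Qwen Code CLI so that the AI agent can use features such as code navigation, go-to-definition, and find-references. LSP will be implemented as a first-class extension mechanism that runs in parallel with MCP.

## 2. Comparison of Technical Approaches

### 2.1 Piebald-AI/claude-code-lsps approach

- **Architecture**: The client communicates directly with each LSP server; a `.lsp.json` configuration file declares the server command/arguments, the stdio transport, and file-extension routing
- **User configuration**: Low friction; add a `.lsp.json` file and make sure the LSP binaries are installed
- **Security**: LSP child processes run with the user's privileges; there is no built-in trust gating
- **Feature coverage**: Can expose the full LSP surface (hover, diagnostics, code actions, rename, etc.)

### 2.2 Native LSP client approach (recommended)

- **Architecture**: Qwen Code CLI acts directly as an LSP client and establishes JSON-RPC connections with language servers
- **User configuration**: Supports built-in presets plus user-defined `.lsp.json` configuration
- **Security**: Shares the same security controls as MCP (trusted workspaces, allow/deny lists, confirmation prompts)
- **Feature coverage**: Exposes full LSP functionality (streaming diagnostics, code actions, rename, semantic tokens, etc.)

### 2.3 cclsp + MCP approach (alternative)

- **Architecture**: Calls cclsp over the MCP protocol as an LSP bridge
- **User configuration**: Requires MCP configuration
- **Security**: Governed by MCP security controls
- **Feature coverage**: Depends on the MCP tools that cclsp maps

## 3. Detailed Plan for Native LSP Integration

### 3.1 Approach selection

- **Recommended approach**: Use the native LSP client as the primary path, because it provides full LSP functionality, lower latency, and a better user experience
- **Compatibility layer**: Keep cclsp+MCP as a compatibility bridge for existing MCP workflows
- **Parallel architecture**: LSP and MCP coexist as independent extension mechanisms and share security policy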

|

||||

|

||||

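### 3.2 Implementation steps

#### 3.2.1 Create the native LSP service

Create a `NativeLspService` class under `packages/cli/src/services/lsp/` that handles:

- Workspace language detection
- Automatic discovery and startup of language servers
- Synchronization with the existing document/editing model
- Exposing LSP capabilities directly to the agent

#### 3.2.2 Configuration support

- Support built-in preset configurations (common language servers)
- Support user-defined `.lsp.json` configuration files
- Coexist with MCP configuration and share trust controls

#### 3.2.3 Integrate into the startup flow

- Integrate inside the `loadCliConfig` function in `packages/cli/src/config/config.ts`
- Ensure the LSP service shares the same security control mechanism as the MCP service
- Handle the duplicate invocation caused by the sandbox preflight and the main run

#### 3.2.4 Feature flag configuration

- Add new settings in `packages/cli/src/config/settingsSchema.ts`
- Provide a global switch (e.g. `lsp.enabled=false`) that lets users disable LSP
- Respect `mcp.allowed`/`mcp.excluded` and folder trust settings

#### 3.2.5 Security controls

- Share the same security control mechanism as MCP
- Enable automatically in trusted workspaces; prompt the user in untrusted workspaces
- Implement a path allowlist and process-launch confirmation

#### 3.2.6 Error handling and user notification

- Detect missing language servers and provide installation commands
- Surface errors through the existing MCP status UI
- Implement a retry/backoff mechanism; detect sandbox environments and suppress automatic startup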
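The retry/backoff item above can be sketched as a small pure helper. This is a minimal illustration under stated assumptions, not the project's implementation: `BackoffOptions`, `backoffDelays`, and `shouldAutoStart` are hypothetical names, and exponential doubling with a cap is one common backoff policy that the plan does not itself specify.

```typescript
// Hypothetical helper types and names; nothing here is taken from the Qwen Code codebase.
interface BackoffOptions {
  baseDelayMs: number; // delay before the first retry
  maxDelayMs: number; // upper bound for any single delay
  maxAttempts: number; // number of retries to schedule
}

// Returns the delay (in ms) to wait before each retry: base * 2^attempt, capped at maxDelayMs.
function backoffDelays(opts: BackoffOptions): number[] {
  const delays: number[] = [];
  for (let attempt = 0; attempt < opts.maxAttempts; attempt++) {
    const delay = opts.baseDelayMs * Math.pow(2, attempt);
    delays.push(Math.min(delay, opts.maxDelayMs));
  }
  return delays;
}

// Assumed policy: never auto-start inside a sandbox, and stop once retries are exhausted.
function shouldAutoStart(
  inSandbox: boolean,
  attemptsSoFar: number,
  opts: BackoffOptions,
): boolean {
  return !inSandbox && attemptsSoFar < opts.maxAttempts;
}
```

Keeping the schedule as a pure function makes the policy easy to unit test separately from the code that actually spawns a language server.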

|

||||

|

||||

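### 3.3 Open questions to confirm

1. **Startup integration point**: Integrating the native LSP service in `loadCliConfig` requires coordination with the MCP service

2. **Configuration priority**: If the user already has a cclsp MCP configuration, should both coexist, or should native LSP take precedence?

3. **Feature switch design**: Switches should be global, and LSP and MCP should be independently enabled/disabled

4. **Shared security model**: How to reuse MCP's trust/security control logic in code

5. **Language server management**: How to manage the LSP server lifecycle and keep it in sync with the document editing model

6. **Dependency detection**: Detect LSP server availability and offer a fallback when detection fails

7. **Testing strategy**: LSP and MCP running in parallel, as well as the shared security controls, need to be tested

### 3.4 Security considerations

- Share the same security control model as MCP
- Enable automatic LSP features only in trusted workspaces
- Provide a user confirmation mechanism for starting new LSP servers
- Prevent path hijacking by using safe path resolution

### 3.5 Advanced LSP feature support

- **Full LSP functionality**: Support streaming diagnostics, code actions, rename, semantic highlighting, workspace edits, etc.
- **Claude configuration compatibility**: Support importing Claude Code-style `.lsp.json` configuration
- **Performance optimization**: Optimize LSP server startup time and memory usage

### 3.6 User experience

- Offer installation hints rather than installing automatically
- Show LSP and MCP server status in a unified status view
- Provide independent switches so users can control LSP and MCP separately
- Provide safe configuration handling and clear error messages for read-only/sandbox environments

## 4. Implementation Summary

### 4.1 Completed work

1. **NativeLspService class**: Created the core service class, including language detection, configuration merging, and LSP connection management
2. **LSP connection factory**: Implemented creation and management of stdio-based LSP connections
3. **Language detection**: Implemented automatic language detection based on file extensions and project configuration files
4. **Configuration system**: Implemented merging of built-in presets, user configuration, and Claude-compatible configuration
5. **Security controls**: Implemented the security control mechanism shared with MCP, including trust checks, user confirmation, and path safety validation
6. **CLI integration**: Added the LSP service initialization point in the `loadCliConfig` function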

|

||||

|

||||

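### 4.2 Key components

#### 4.2.1 LspConnectionFactory

- Uses `vscode-jsonrpc` and `vscode-languageserver-protocol` to implement LSP connections
- Supports the stdio transport and can be extended to support TCP
- Provides full lifecycle management: connection creation, initialization, and shutdown

#### 4.2.2 NativeLspService

- **Language detection**: Scans project files and configuration files to identify programming languages
- **Configuration merging**: Merges built-in presets, user configuration, and compatibility-layer configuration by priority
- **LSP server management**: Start, stop, and status management
- **Security controls**: Trust and confirmation mechanisms shared with MCP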
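The language-detection step can be illustrated with a short sketch. Everything below is an assumption made for illustration: the extension table, the marker-file table, and the `detectLanguages` name are hypothetical and do not reflect how `NativeLspService` is actually written.

```typescript
// Hypothetical extension-to-language table; the real service may use a different mapping.
const EXTENSION_LANGUAGES = new Map<string, string>([
  [".ts", "typescript"],
  [".tsx", "typescript"],
  [".js", "javascript"],
  [".py", "python"],
  [".go", "go"],
  [".rs", "rust"],
]);

// Hypothetical project marker files that hint at a language.
const MARKER_FILES = new Map<string, string>([
  ["tsconfig.json", "typescript"],
  ["pyproject.toml", "python"],
  ["go.mod", "go"],
  ["Cargo.toml", "rust"],
]);

// Takes workspace-relative paths (using "/" separators) and returns the sorted set of detected languages.
function detectLanguages(relativePaths: string[]): string[] {
  const found = new Set<string>();
  for (const p of relativePaths) {
    const name = p.slice(p.lastIndexOf("/") + 1);

    // Marker files are matched by exact file name.
    const marker = MARKER_FILES.get(name);
    if (marker !== undefined) found.add(marker);

    // Extensions are matched case-insensitively; dotfiles such as ".gitignore" are skipped.
    const dot = name.lastIndexOf(".");
    if (dot > 0) {
      const lang = EXTENSION_LANGUAGES.get(name.slice(dot).toLowerCase());
      if (lang !== undefined) found.add(lang);
    }
  }
  return Array.from(found).sort();
}
```

A caller would pass the result of a workspace file scan and use the returned language IDs to decide which preset servers to consider starting.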

|

||||

|

||||

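#### 4.2.3 Configuration architecture

- **Built-in presets**: Default LSP server configurations for common languages
- **User configuration**: Supports the `.lsp.json` file format
- **Claude compatibility**: Can import Claude Code's LSP configuration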
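A layered merge of these three configuration sources might look like the sketch below. The field names in `LspServerConfig` are illustrative and are not the real `.lsp.json` schema, and the precedence (later layers override earlier ones) is an assumption, since the document does not state the actual order.

```typescript
// Hypothetical shape of one server entry; not the actual `.lsp.json` schema.
interface LspServerConfig {
  command: string;
  args?: string[];
  extensions?: string[];
}

// Maps a server name (for example a language ID) to its configuration.
type LspConfigLayer = { [name: string]: LspServerConfig };

// Merges layers left to right; fields from later layers override earlier ones (assumed precedence).
function mergeLspConfigs(...layers: LspConfigLayer[]): LspConfigLayer {
  const merged: LspConfigLayer = {};
  for (const layer of layers) {
    for (const name of Object.keys(layer)) {
      // Shallow merge per server: unspecified fields fall through from lower layers.
      merged[name] = { ...merged[name], ...layer[name] };
    }
  }
  return merged;
}
```

For example, calling `mergeLspConfigs(presets, claudeCompat, userConfig)` would let a user entry replace only the `command` of a preset while keeping the preset's `args`.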

|

||||

|

||||

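### 4.3 Dependency management

- Uses `vscode-languageserver-protocol` for LSP protocol communication
- Uses `vscode-jsonrpc` for JSON-RPC messaging
- Uses `vscode-languageserver-textdocument` to manage document versions

### 4.4 Security features

- Workspace trust checks
- User confirmation (for untrusted workspaces)
- Command existence validation
- Path safety checks

## 5. Conclusion

A native LSP client is currently the choice that best fits the Qwen Code architecture: it provides full LSP functionality, lower latency, and a better user experience. As a first-class extension mechanism parallel to MCP, LSP will share MCP's security control policy while offering richer code intelligence. cclsp+MCP can be kept as a compatibility layer to support existing MCP workflows.

This implementation plan will give Qwen Code CLI full LSP functionality, including go-to-definition, find-references, auto-completion, and code diagnostics, providing the AI agent with richer code understanding.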

|

||||

@@ -627,12 +627,7 @@ The MCP integration tracks several states:

|

||||

|

||||

### Schema Compatibility

- **Schema compliance mode:** By default (`schemaCompliance: "auto"`), tool schemas are passed through as-is. Set `"model": { "generationConfig": { "schemaCompliance": "openapi_30" } }` in your `settings.json` to convert tool schemas to strict OpenAPI 3.0 format.
- **OpenAPI 3.0 transformations:** When `openapi_30` mode is enabled, the system handles:
  - Nullable types: `["string", "null"]` -> `type: "string", nullable: true`
  - Const values: `const: "foo"` -> `enum: ["foo"]`
  - Exclusive limits: numeric `exclusiveMinimum` -> boolean form with `minimum`
  - Keyword removal: `$schema`, `$id`, `dependencies`, `patternProperties`
- **Property stripping:** The system automatically removes certain schema properties (`$schema`, `additionalProperties`) for Qwen API compatibility
- **Name sanitization:** Tool names are automatically sanitized to meet API requirements
- **Conflict resolution:** Tool name conflicts between servers are resolved through automatic prefixing
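To make the `openapi_30` transformations listed above concrete, here is a minimal sketch of the four rewrites applied to a JSON Schema object. It is an illustration only: `toOpenApi30` and its helpers are hypothetical names, the function is not Qwen Code's actual converter, and it only recurses into `properties`.

```typescript
type JsonSchema = { [key: string]: unknown };

// Keywords the list above names as removed in `openapi_30` mode.
const REMOVED_KEYWORDS = ["$schema", "$id", "dependencies", "patternProperties"];

function isSchemaObject(value: unknown): value is JsonSchema {
  return typeof value === "object" && value !== null && !Array.isArray(value);
}

// Hypothetical converter: returns a new schema and leaves the input untouched.
function toOpenApi30(schema: JsonSchema): JsonSchema {
  const out: JsonSchema = {};
  for (const key of Object.keys(schema)) {
    if (REMOVED_KEYWORDS.indexOf(key) === -1) out[key] = schema[key];
  }

  // Nullable types: ["string", "null"] -> type: "string", nullable: true.
  // Unions with more than one non-null type are left unchanged in this sketch.
  const type = out["type"];
  if (Array.isArray(type) && type.indexOf("null") !== -1) {
    const rest = type.filter((t) => t !== "null");
    if (rest.length === 1) {
      out["type"] = rest[0];
      out["nullable"] = true;
    }
  }

  // Const values: const: "foo" -> enum: ["foo"].
  if ("const" in out) {
    out["enum"] = [out["const"]];
    delete out["const"];
  }

  // Exclusive limits: numeric exclusiveMinimum -> minimum plus boolean exclusiveMinimum.
  const exclusiveMin = out["exclusiveMinimum"];
  if (typeof exclusiveMin === "number") {
    out["minimum"] = exclusiveMin;
    out["exclusiveMinimum"] = true;
  }

  // Apply the same rewrites to nested property schemas.
  const props = out["properties"];
  if (isSchemaObject(props)) {
    const converted: JsonSchema = {};
    for (const name of Object.keys(props)) {
      const child = props[name];
      converted[name] = isSchemaObject(child) ? toOpenApi30(child) : child;
    }
    out["properties"] = converted;
  }
  return out;
}
```

A fuller converter would presumably also walk `items`, `anyOf`, and similar keywords and handle `exclusiveMaximum` the same way; those are omitted here to keep the sketch short.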

|

||||

|

||||

|

||||

@@ -14,7 +14,7 @@ Learn how to use Qwen Code as an end user. This section covers:

|

||||

- Configuration options

|

||||

- Troubleshooting

|

||||

|

||||

### [Developer Guide](./developers/architecture)

|

||||

### [Developer Guide](./developers/contributing)

|

||||

|

||||

Learn how to contribute to and develop Qwen Code. This section covers:

|

||||

|

||||

|

||||

@@ -189,8 +189,8 @@ Then select "create" and follow the prompts to define:

|

||||

> - Create project-specific subagents in `.qwen/agents/` for team sharing

|

||||

> - Use descriptive `description` fields to enable automatic delegation

|

||||

> - Limit tool access to what each subagent actually needs

|

||||

> - Learn more about [Sub Agents](./features/sub-agents)

|

||||

> - Learn more about [Approval Mode](./features/approval-mode)

|

||||

> - Learn more about [Sub Agents](/users/features/sub-agents)

|

||||

> - Learn more about [Approval Mode](/users/features/approval-mode)

|

||||

|

||||

## Work with tests

|

||||

|

||||

@@ -318,7 +318,7 @@ This provides a directory listing with file information.

|

||||

Show me the data from @github: repos/owner/repo/issues

|

||||

```

|

||||

|

||||

This fetches data from connected MCP servers using the format @server: resource. See [MCP](./features/mcp) for details.

|

||||

This fetches data from connected MCP servers using the format @server: resource. See [MCP](/users/features/mcp) for details.

|

||||

|

||||

> [!tip]

|

||||

>

|

||||

|

||||

@@ -6,7 +6,7 @@ Qwen Code includes the ability to automatically ignore files, similar to `.gitig

|

||||

|

||||

## How it works

|

||||

|

||||

When you add a path to your `.qwenignore` file, tools that respect this file will exclude matching files and directories from their operations. For example, when you use the [`read_many_files`](../../developers/tools/multi-file) command, any paths in your `.qwenignore` file will be automatically excluded.

|

||||

When you add a path to your `.qwenignore` file, tools that respect this file will exclude matching files and directories from their operations. For example, when you use the [`read_many_files`](/developers/tools/multi-file) command, any paths in your `.qwenignore` file will be automatically excluded.

|

||||

|

||||

For the most part, `.qwenignore` follows the conventions of `.gitignore` files:

|

||||

|

||||

|

||||

@@ -2,7 +2,7 @@

|

||||

|

||||

> [!tip]

|

||||

>

|

||||

> **Authentication / API keys:** Authentication (Qwen OAuth vs OpenAI-compatible API) and auth-related environment variables (like `OPENAI_API_KEY`) are documented in **[Authentication](../configuration/auth)**.

|

||||

> **Authentication / API keys:** Authentication (Qwen OAuth vs OpenAI-compatible API) and auth-related environment variables (like `OPENAI_API_KEY`) are documented in **[Authentication](/users/configuration/auth)**.

|

||||

|

||||

> [!note]

|

||||

>

|

||||

@@ -42,8 +42,7 @@ Qwen Code uses JSON settings files for persistent configuration. There are four

|

||||

|

||||

In addition to a project settings file, a project's `.qwen` directory can contain other project-specific files related to Qwen Code's operation, such as:

|

||||

|

||||

- [Custom sandbox profiles](../features/sandbox) (e.g. `.qwen/sandbox-macos-custom.sb`, `.qwen/sandbox.Dockerfile`).

|

||||

- [Agent Skills](../features/skills) (experimental) under `.qwen/skills/` (each Skill is a directory containing a `SKILL.md`).

|

||||

- [Custom sandbox profiles](/users/features/sandbox) (e.g. `.qwen/sandbox-macos-custom.sb`, `.qwen/sandbox.Dockerfile`).

|

||||

|

||||

### Available settings in `settings.json`

|

||||

|

||||

@@ -70,7 +69,7 @@ Settings are organized into categories. All settings should be placed within the

|

||||

|

||||

| Setting | Type | Description | Default |

|

||||

| ---------------------------------------- | ---------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | ----------- |

|

||||

| `ui.theme` | string | The color theme for the UI. See [Themes](../configuration/themes) for available options. | `undefined` |

|

||||

| `ui.theme` | string | The color theme for the UI. See [Themes](/users/configuration/themes) for available options. | `undefined` |

|

||||

| `ui.customThemes` | object | Custom theme definitions. | `{}` |

|

||||

| `ui.hideWindowTitle` | boolean | Hide the window title bar. | `false` |

|

||||

| `ui.hideTips` | boolean | Hide helpful tips in the UI. | `false` |

|

||||

@@ -327,7 +326,7 @@ The CLI keeps a history of shell commands you run. To avoid conflicts between di

|

||||

Environment variables are a common way to configure applications, especially for sensitive information (like tokens) or for settings that might change between environments.

|

||||

|

||||

Qwen Code can automatically load environment variables from `.env` files.

|

||||

For authentication-related variables (like `OPENAI_*`) and the recommended `.qwen/.env` approach, see **[Authentication](../configuration/auth)**.

|

||||

For authentication-related variables (like `OPENAI_*`) and the recommended `.qwen/.env` approach, see **[Authentication](/users/configuration/auth)**.

|

||||

|

||||

> [!tip]

|

||||

>

|

||||

@@ -358,40 +357,38 @@ Arguments passed directly when running the CLI can override other configurations

|

||||

|