mirror of

https://github.com/QwenLM/qwen-code.git

synced 2026-01-19 23:36:19 +00:00

Compare commits

33 Commits

fix/1454-s

...

chore/no-t

| Author | SHA1 | Date | |
|---|---|---|---|
| | c14ddab6fe | | |
| | 35c865968f | | |
| | ff5ea3c6d7 | | |
| | 0faaac8fa4 | | |
| | c2e62b9122 | | |
| | f54b62cda3 | | |
| | 9521987a09 | | |
| | d20f2a41a2 | | |
| | e3eccb5987 | | |
| | 22916457cd | | |
| | 28bc4e6467 | | |
| | 50bf65b10b | | |
| | 47c8bc5303 | | |
| | e70ecdf3a8 | | |
| | 117af05122 | | |
| | 557e6397bb | | |
| | f762a62a2e | | |
| | ca12772a28 | | |
| | cec4b831b6 | | |
| | e4dee3a2b2 | | |
| | 996b9df947 | | |
| | 64291db926 | | |
| | 85473210e5 | | |
| | c0c94bd4fc | | |
| | a8eb858f99 | | |
| | adb53a6dc6 | | |
| | b33525183f | | |
| | 2d1934bf2f | | |
| | 1b7418f91f | | |
| | 0bd17a2406 | | |
| | 59be5163fd | | |
| | 4f664d00ac | | |
| | 7fdebe8fe6 | | |

@@ -201,6 +201,11 @@ If you encounter issues, check the [troubleshooting guide](https://qwenlm.github

|

||||

|

||||

To report a bug from within the CLI, run `/bug` and include a short title and repro steps.

|

||||

|

||||

## Connect with Us

|

||||

|

||||

- Discord: https://discord.gg/ycKBjdNd

|

||||

- Dingtalk: https://qr.dingtalk.com/action/joingroup?code=v1,k1,+FX6Gf/ZDlTahTIRi8AEQhIaBlqykA0j+eBKKdhLeAE=&_dt_no_comment=1&origin=1

|

||||

|

||||

## Acknowledgments

|

||||

|

||||

This project is based on [Google Gemini CLI](https://github.com/google-gemini/gemini-cli). We acknowledge and appreciate the excellent work of the Gemini CLI team. Our main contribution focuses on parser-level adaptations to better support Qwen-Coder models.

|

||||

|

||||

@@ -202,7 +202,7 @@ This is the most critical stage where files are moved and transformed into their

|

||||

- Copies README.md and LICENSE to dist/

|

||||

- Copies locales folder for internationalization

|

||||

- Creates a clean package.json for distribution with only necessary dependencies

|

||||

- Includes runtime dependencies like tiktoken

|

||||

- Keeps distribution dependencies minimal (no bundled runtime deps)

|

||||

- Maintains optional dependencies for node-pty

|

||||

|

||||

2. The JavaScript Bundle is Created:

|

||||

|

||||

@@ -10,4 +10,5 @@ export default {

|

||||

'web-search': 'Web Search',

|

||||

memory: 'Memory',

|

||||

'mcp-server': 'MCP Servers',

|

||||

sandbox: 'Sandboxing',

|

||||

};

|

||||

|

||||

90

docs/developers/tools/sandbox.md

Normal file


@@ -0,0 +1,90 @@

|

||||

## Customizing the sandbox environment (Docker/Podman)

|

||||

|

||||

### `BUILD_SANDBOX` is currently not supported when Qwen Code is installed from the npm package

|

||||

|

||||

1. To build a custom sandbox, you need to access the build scripts (scripts/build_sandbox.js) in the source code repository.

|

||||

2. These build scripts are not included in the packages released by npm.

|

||||

3. The code contains hard-coded path checks that explicitly reject build requests from non-source code environments.

|

||||

|

||||

If you need extra tools inside the container (e.g., `git`, `python`, `rg`), create a custom Dockerfile. The steps are as follows:

|

||||

|

||||

#### 1. Clone the Qwen Code project first: https://github.com/QwenLM/qwen-code.git

|

||||

|

||||

#### 2. Run the following commands from the source code repository directory

|

||||

|

||||

```bash

|

||||

# 1. First, install the dependencies of the project

|

||||

npm install

|

||||

|

||||

# 2. Build the Qwen Code project

|

||||

npm run build

|

||||

|

||||

# 3. Verify that the dist directory has been generated

|

||||

ls -la packages/cli/dist/

|

||||

|

||||

# 4. Create a global link in the CLI package directory

|

||||

cd packages/cli

|

||||

npm link

|

||||

|

||||

# 5. Verify the link (it should now point to the source code)

|

||||

which qwen

|

||||

# Expected output: /xxx/xxx/.nvm/versions/node/v24.11.1/bin/qwen

|

||||

# Or similar paths, but it should be a symbolic link

|

||||

|

||||

# 6. Inspect the symbolic link to see which source code path it points to

|

||||

ls -la $(dirname $(which qwen))/../lib/node_modules/@qwen-code/qwen-code

|

||||

# It should show that this is a symbolic link pointing to your source code directory

|

||||

|

||||

# 7. Check the qwen version

|

||||

qwen -v

|

||||

# Note: npm link overwrites the global qwen. If the linked and published builds report the same version number and are hard to tell apart, uninstall the global CLI first

|

||||

```

|

||||

|

||||

#### 3. Create your sandbox Dockerfile in the root directory of your own project

|

||||

|

||||

- Path: `.qwen/sandbox.Dockerfile`

|

||||

|

||||

- Official image: https://github.com/QwenLM/qwen-code/pkgs/container/qwen-code

|

||||

|

||||

```dockerfile

|

||||

# Based on the official Qwen sandbox image (pinning an explicit version is recommended)

|

||||

FROM ghcr.io/qwenlm/qwen-code:sha-570ec43

|

||||

# Add your extra tools here

|

||||

RUN apt-get update && apt-get install -y \

|

||||

git \

|

||||

python3 \

|

||||

ripgrep

|

||||

```

|

||||

|

||||

#### 4. Build the first sandbox image from the root directory of your project

|

||||

|

||||

```bash

|

||||

GEMINI_SANDBOX=docker BUILD_SANDBOX=1 qwen -s

|

||||

# Check that the sandbox version reported at launch matches the version of your custom image; if they match, the sandbox started successfully

|

||||

```

|

||||

|

||||

This builds a project-specific image based on the default sandbox image.

|

||||

|

||||

#### Remove npm link

|

||||

|

||||

- To restore the official qwen CLI, remove the npm link

|

||||

|

||||

```bash

|

||||

# Method 1: Unlink globally

|

||||

npm unlink -g @qwen-code/qwen-code

|

||||

|

||||

# Method 2: Remove it in the packages/cli directory

|

||||

cd packages/cli

|

||||

npm unlink

|

||||

|

||||

# Verify that the link has been removed

|

||||

which qwen

|

||||

# It should display "qwen not found"

|

||||

|

||||

# Reinstall the global version if necessary

|

||||

npm install -g @qwen-code/qwen-code

|

||||

|

||||

# Verify the reinstalled CLI

|

||||

which qwen

|

||||

qwen --version

|

||||

```

|

||||

@@ -104,7 +104,7 @@ Settings are organized into categories. All settings should be placed within the

|

||||

| `model.name` | string | The Qwen model to use for conversations. | `undefined` |

|

||||

| `model.maxSessionTurns` | number | Maximum number of user/model/tool turns to keep in a session. -1 means unlimited. | `-1` |

|

||||

| `model.summarizeToolOutput` | object | Enables or disables the summarization of tool output. You can specify the token budget for the summarization using the `tokenBudget` setting. Note: Currently only the `run_shell_command` tool is supported. For example `{"run_shell_command": {"tokenBudget": 2000}}` | `undefined` |

|

||||

| `model.generationConfig` | object | Advanced overrides passed to the underlying content generator. Supports request controls such as `timeout`, `maxRetries`, and `disableCacheControl`, along with fine-tuning knobs under `samplingParams` (for example `temperature`, `top_p`, `max_tokens`). Leave unset to rely on provider defaults. | `undefined` |

|

||||

| `model.generationConfig` | object | Advanced overrides passed to the underlying content generator. Supports request controls such as `timeout`, `maxRetries`, `disableCacheControl`, and `customHeaders` (custom HTTP headers for API requests), along with fine-tuning knobs under `samplingParams` (for example `temperature`, `top_p`, `max_tokens`). Leave unset to rely on provider defaults. | `undefined` |

|

||||

| `model.chatCompression.contextPercentageThreshold` | number | Sets the threshold for chat history compression as a percentage of the model's total token limit. This is a value between 0 and 1 that applies to both automatic compression and the manual `/compress` command. For example, a value of `0.6` will trigger compression when the chat history exceeds 60% of the token limit. Use `0` to disable compression entirely. | `0.7` |

|

||||

| `model.skipNextSpeakerCheck` | boolean | Skip the next speaker check. | `false` |

|

||||

| `model.skipLoopDetection` | boolean | Disables loop detection checks. Loop detection prevents infinite loops in AI responses but can generate false positives that interrupt legitimate workflows. Enable this option if you experience frequent false positive loop detection interruptions. | `false` |

|

||||

@@ -114,12 +114,16 @@ Settings are organized into categories. All settings should be placed within the

|

||||

|

||||

**Example model.generationConfig:**

|

||||

|

||||

```

|

||||

```json

|

||||

{

|

||||

"model": {

|

||||

"generationConfig": {

|

||||

"timeout": 60000,

|

||||

"disableCacheControl": false,

|

||||

"customHeaders": {

|

||||

"X-Request-ID": "req-123",

|

||||

"X-User-ID": "user-456"

|

||||

},

|

||||

"samplingParams": {

|

||||

"temperature": 0.2,

|

||||

"top_p": 0.8,

|

||||

@@ -130,6 +134,8 @@ Settings are organized into categories. All settings should be placed within the

|

||||

}

|

||||

```

|

||||

|

||||

The `customHeaders` field allows you to add custom HTTP headers to all API requests. This is useful for request tracing, monitoring, API gateway routing, or when different models require different headers. If `customHeaders` is defined in `modelProviders[].generationConfig.customHeaders`, it will be used directly; otherwise, headers from `model.generationConfig.customHeaders` will be used. No merging occurs between the two levels.
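The selection rule described above can be sketched in TypeScript. This is a minimal illustration under stated assumptions, not the project's resolver: `HeaderMap`, `GenerationConfigLike`, and `resolveCustomHeaders` are hypothetical names, and "defined" is assumed to mean "not `undefined`".

```typescript
type HeaderMap = Record<string, string>;

// Hypothetical shape: only the field relevant to this sketch.
interface GenerationConfigLike {
  customHeaders?: HeaderMap;
}

// Hypothetical helper: use the provider-level headers when they are defined,
// otherwise the global ones. The two objects are never merged.
function resolveCustomHeaders(
  providerConfig: GenerationConfigLike | undefined,
  globalConfig: GenerationConfigLike | undefined,
): HeaderMap | undefined {
  return providerConfig?.customHeaders ?? globalConfig?.customHeaders;
}

const globalConfig: GenerationConfigLike = {
  customHeaders: { 'X-Request-ID': 'req-123', 'X-User-ID': 'user-456' },
};
const providerConfig: GenerationConfigLike = {
  customHeaders: { 'X-Model-Version': 'v1.0' },
};

// Provider headers win outright; the global X-Request-ID is not carried over.
const withProvider = resolveCustomHeaders(providerConfig, globalConfig);
// No provider-level headers: the global headers are used as-is.
const withoutProvider = resolveCustomHeaders({}, globalConfig);

console.log(withProvider, withoutProvider);
```

Because nothing is merged, any global header that should also apply to a provider-specific model has to be repeated in that provider's `customHeaders`.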

|

||||

|

||||

**model.openAILoggingDir examples:**

|

||||

|

||||

- `"~/qwen-logs"` - Logs to `~/qwen-logs` directory

|

||||

@@ -154,6 +160,10 @@ Use `modelProviders` to declare curated model lists per auth type that the `/mod

|

||||

"generationConfig": {

|

||||

"timeout": 60000,

|

||||

"maxRetries": 3,

|

||||

"customHeaders": {

|

||||

"X-Model-Version": "v1.0",

|

||||

"X-Request-Priority": "high"

|

||||

},

|

||||

"samplingParams": { "temperature": 0.2 }

|

||||

}

|

||||

}

|

||||

@@ -215,7 +225,7 @@ Per-field precedence for `generationConfig`:

|

||||

3. `settings.model.generationConfig`

|

||||

4. Content-generator defaults (`getDefaultGenerationConfig` for OpenAI, `getParameterValue` for Gemini, etc.)

|

||||

|

||||

`samplingParams` is treated atomically; provider values replace the entire object. Defaults from the content generator apply last so each provider retains its tuned baseline.

|

||||

`samplingParams` and `customHeaders` are both treated atomically; provider values replace the entire object. If `modelProviders[].generationConfig` defines these fields, they are used directly; otherwise, values from `model.generationConfig` are used. No merging occurs between provider and global configuration levels. Defaults from the content generator apply last so each provider retains its tuned baseline.

|

||||

|

||||

##### Selection persistence and recommendations

|

||||

|

||||

@@ -470,7 +480,7 @@ Arguments passed directly when running the CLI can override other configurations

|

||||

| `--telemetry-otlp-protocol` | | Sets the OTLP protocol for telemetry (`grpc` or `http`). | | Defaults to `grpc`. See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--telemetry-log-prompts` | | Enables logging of prompts for telemetry. | | See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--checkpointing` | | Enables [checkpointing](../features/checkpointing). | | |

|

||||

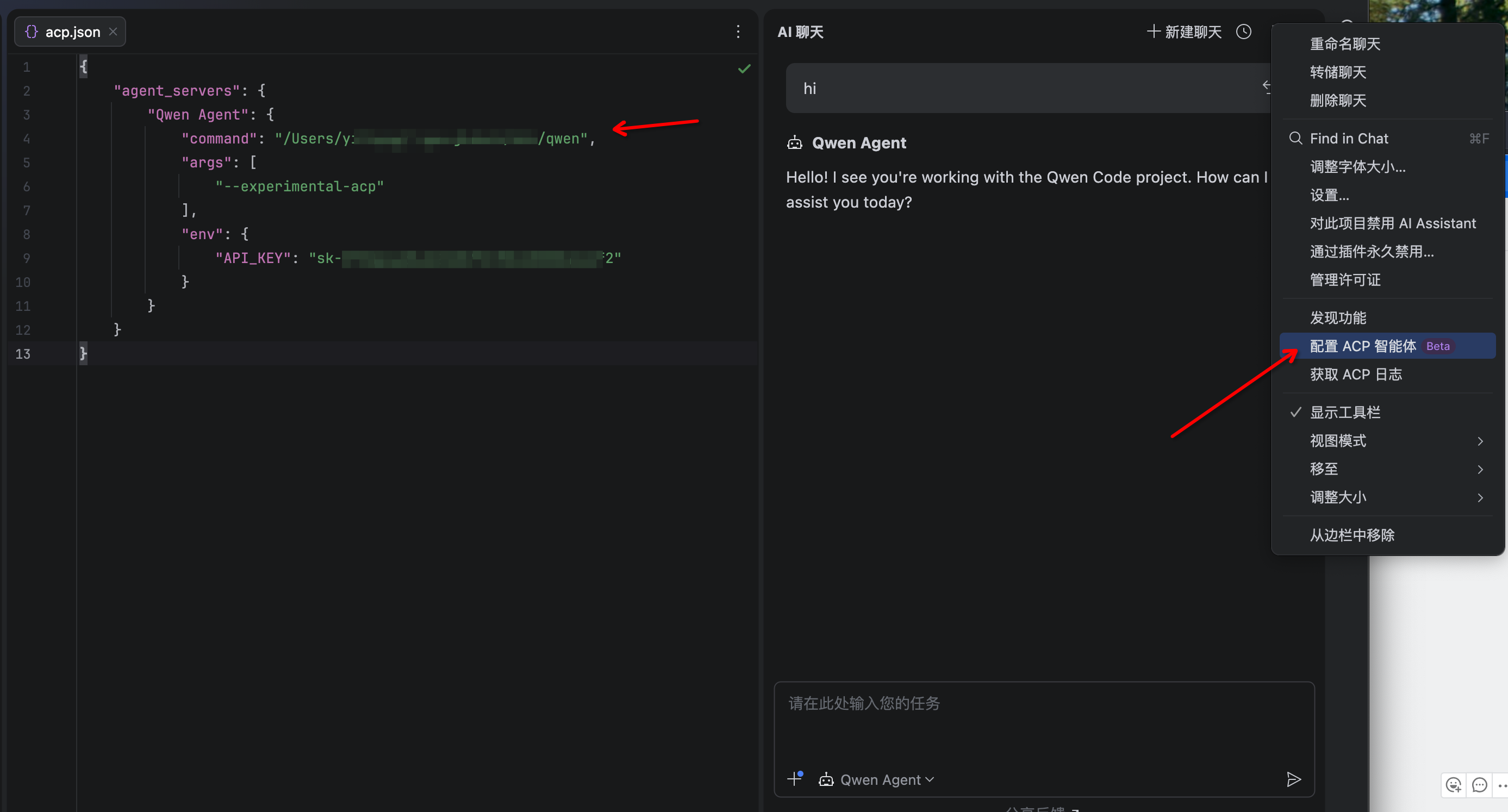

| `--acp` | | Enables ACP mode (Agent Control Protocol). Useful for IDE/editor integrations like [Zed](../integration-zed). | | Stable. Replaces the deprecated `--experimental-acp` flag. |

|

||||

| `--acp` | | Enables ACP mode (Agent Client Protocol). Useful for IDE/editor integrations like [Zed](../integration-zed). | | Stable. Replaces the deprecated `--experimental-acp` flag. |

|

||||

| `--experimental-skills` | | Enables experimental [Agent Skills](../features/skills) (registers the `skill` tool and loads Skills from `.qwen/skills/` and `~/.qwen/skills/`). | | Experimental. |

|

||||

| `--extensions` | `-e` | Specifies a list of extensions to use for the session. | Extension names | If not provided, all available extensions are used. Use the special term `qwen -e none` to disable all extensions. Example: `qwen -e my-extension -e my-other-extension` |

|

||||

| `--list-extensions` | `-l` | Lists all available extensions and exits. | | |

|

||||

|

||||

@@ -166,15 +166,6 @@ export SANDBOX_SET_UID_GID=true # Force host UID/GID

|

||||

export SANDBOX_SET_UID_GID=false # Disable UID/GID mapping

|

||||

```

|

||||

|

||||

## Customizing the sandbox environment (Docker/Podman)

|

||||

|

||||

If you need extra tools inside the container (e.g., `git`, `python`, `rg`), create a custom Dockerfile:

|

||||

|

||||

- Path: `.qwen/sandbox.Dockerfile`

|

||||

- Then run with: `BUILD_SANDBOX=1 qwen -s ...`

|

||||

|

||||

This builds a project-specific image based on the default sandbox image.

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

### Common issues

|

||||

|

||||

Binary file not shown.

|

Before Width: | Height: | Size: 36 KiB |

@@ -1,11 +1,11 @@

|

||||

# JetBrains IDEs

|

||||

|

||||

> JetBrains IDEs provide native support for AI coding assistants through the Agent Control Protocol (ACP). This integration allows you to use Qwen Code directly within your JetBrains IDE with real-time code suggestions.

|

||||

> JetBrains IDEs provide native support for AI coding assistants through the Agent Client Protocol (ACP). This integration allows you to use Qwen Code directly within your JetBrains IDE with real-time code suggestions.

|

||||

|

||||

### Features

|

||||

|

||||

- **Native agent experience**: Integrated AI assistant panel within your JetBrains IDE

|

||||

- **Agent Control Protocol**: Full support for ACP enabling advanced IDE interactions

|

||||

- **Agent Client Protocol**: Full support for ACP enabling advanced IDE interactions

|

||||

- **Symbol management**: #-mention files to add them to the conversation context

|

||||

- **Conversation history**: Access to past conversations within the IDE

|

||||

|

||||

@@ -40,7 +40,7 @@

|

||||

|

||||

4. The Qwen Code agent should now be available in the AI Assistant panel

|

||||

|

||||

|

||||

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

|

||||

@@ -22,13 +22,7 @@

|

||||

|

||||

### Installation

|

||||

|

||||

1. Install Qwen Code CLI:

|

||||

|

||||

```bash

|

||||

npm install -g qwen-code

|

||||

```

|

||||

|

||||

2. Download and install the extension from the [Visual Studio Code Extension Marketplace](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion).

|

||||

Download and install the extension from the [Visual Studio Code Extension Marketplace](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion).

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

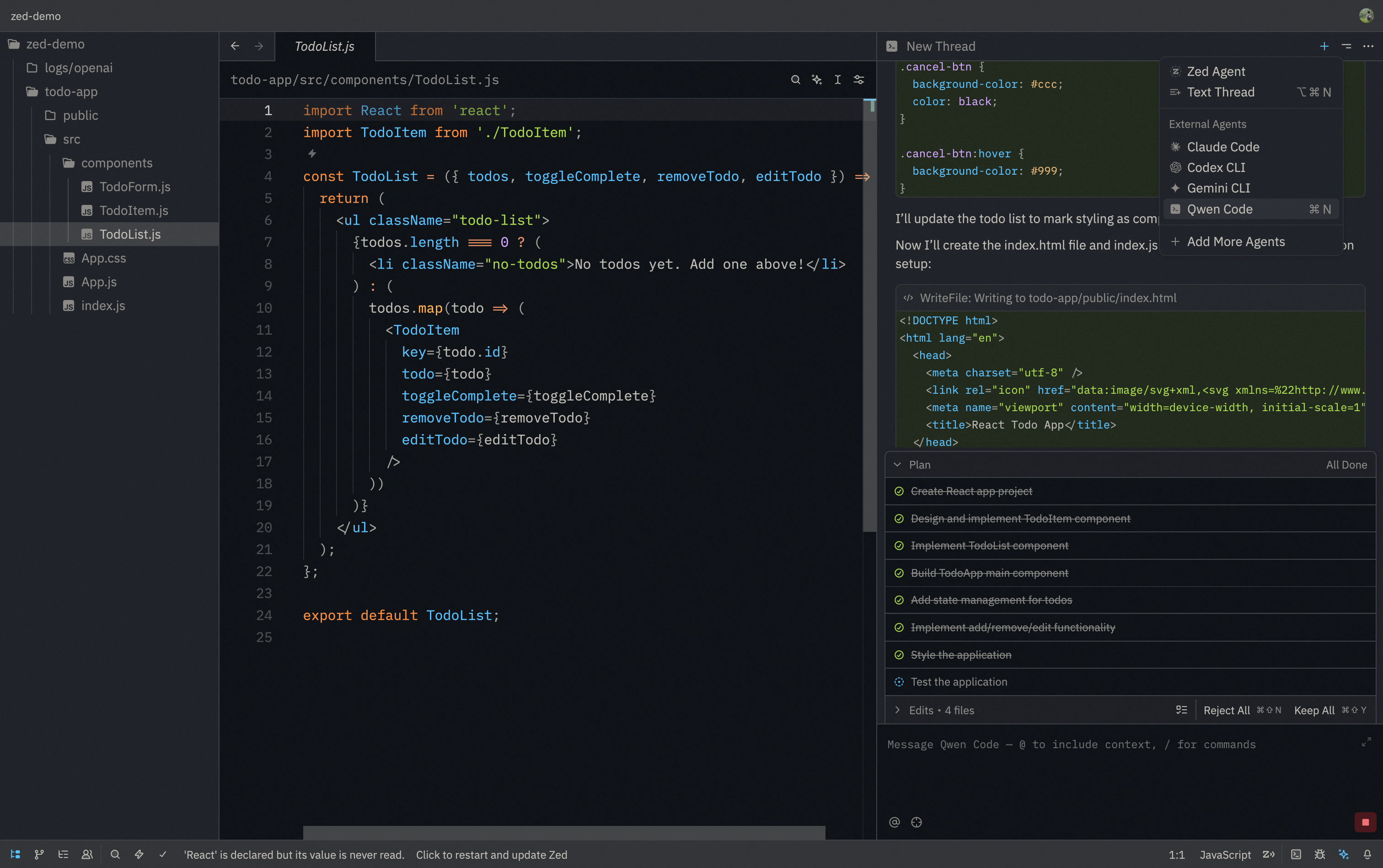

# Zed Editor

|

||||

|

||||

> Zed Editor provides native support for AI coding assistants through the Agent Control Protocol (ACP). This integration allows you to use Qwen Code directly within Zed's interface with real-time code suggestions.

|

||||

> Zed Editor provides native support for AI coding assistants through the Agent Client Protocol (ACP). This integration allows you to use Qwen Code directly within Zed's interface with real-time code suggestions.

|

||||

|

||||

|

||||

|

||||

@@ -20,9 +20,9 @@

|

||||

|

||||

1. Install Qwen Code CLI:

|

||||

|

||||

```bash

|

||||

npm install -g qwen-code

|

||||

```

|

||||

```bash

|

||||

npm install -g @qwen-code/qwen-code

|

||||

```

|

||||

|

||||

2. Download and install [Zed Editor](https://zed.dev/)

|

||||

|

||||

|

||||

@@ -159,7 +159,7 @@ Qwen Code will:

|

||||

|

||||

### Test out other common workflows

|

||||

|

||||

There are a number of ways to work with Claude:

|

||||

There are a number of ways to work with Qwen Code:

|

||||

|

||||

**Refactor code**

|

||||

|

||||

|

||||

@@ -33,7 +33,6 @@ const external = [

|

||||

'@lydell/node-pty-linux-x64',

|

||||

'@lydell/node-pty-win32-arm64',

|

||||

'@lydell/node-pty-win32-x64',

|

||||

'tiktoken',

|

||||

];

|

||||

|

||||

esbuild

|

||||

|

||||

@@ -831,7 +831,7 @@ describe('Permission Control (E2E)', () => {

|

||||

TEST_TIMEOUT,

|

||||

);

|

||||

|

||||

it(

|

||||

it.skip(

|

||||

'should execute dangerous commands without confirmation',

|

||||

async () => {

|

||||

const q = query({

|

||||

|

||||

10

package-lock.json

generated


@@ -15682,12 +15682,6 @@

|

||||

"tslib": "^2"

|

||||

}

|

||||

},

|

||||

"node_modules/tiktoken": {

|

||||

"version": "1.0.22",

|

||||

"resolved": "https://registry.npmjs.org/tiktoken/-/tiktoken-1.0.22.tgz",

|

||||

"integrity": "sha512-PKvy1rVF1RibfF3JlXBSP0Jrcw2uq3yXdgcEXtKTYn3QJ/cBRBHDnrJ5jHky+MENZ6DIPwNUGWpkVx+7joCpNA==",

|

||||

"license": "MIT"

|

||||

},

|

||||

"node_modules/tinybench": {

|

||||

"version": "2.9.0",

|

||||

"resolved": "https://registry.npmjs.org/tinybench/-/tinybench-2.9.0.tgz",

|

||||

@@ -17990,7 +17984,6 @@

|

||||

"shell-quote": "^1.8.3",

|

||||

"simple-git": "^3.28.0",

|

||||

"strip-ansi": "^7.1.0",

|

||||

"tiktoken": "^1.0.21",

|

||||

"undici": "^6.22.0",

|

||||

"uuid": "^9.0.1",

|

||||

"ws": "^8.18.0"

|

||||

@@ -18588,11 +18581,10 @@

|

||||

},

|

||||

"packages/sdk-typescript": {

|

||||

"name": "@qwen-code/sdk",

|

||||

"version": "0.1.2",

|

||||

"version": "0.1.3",

|

||||

"license": "Apache-2.0",

|

||||

"dependencies": {

|

||||

"@modelcontextprotocol/sdk": "^1.25.1",

|

||||

"tiktoken": "^1.0.21",

|

||||

"zod": "^3.25.0"

|

||||

},

|

||||

"devDependencies": {

|

||||

|

||||

@@ -38,14 +38,15 @@

|

||||

"dependencies": {

|

||||

"@google/genai": "1.30.0",

|

||||

"@iarna/toml": "^2.2.5",

|

||||

"@qwen-code/qwen-code-core": "file:../core",

|

||||

"@modelcontextprotocol/sdk": "^1.25.1",

|

||||

"@qwen-code/qwen-code-core": "file:../core",

|

||||

"@types/update-notifier": "^6.0.8",

|

||||

"ansi-regex": "^6.2.2",

|

||||

"command-exists": "^1.2.9",

|

||||

"comment-json": "^4.2.5",

|

||||

"diff": "^7.0.0",

|

||||

"dotenv": "^17.1.0",

|

||||

"extract-zip": "^2.0.1",

|

||||

"fzf": "^0.5.2",

|

||||

"glob": "^10.5.0",

|

||||

"highlight.js": "^11.11.1",

|

||||

@@ -65,7 +66,6 @@

|

||||

"strip-json-comments": "^3.1.1",

|

||||

"tar": "^7.5.2",

|

||||

"undici": "^6.22.0",

|

||||

"extract-zip": "^2.0.1",

|

||||

"update-notifier": "^7.3.1",

|

||||

"wrap-ansi": "9.0.2",

|

||||

"yargs": "^17.7.2",

|

||||

@@ -74,6 +74,7 @@

|

||||

"devDependencies": {

|

||||

"@babel/runtime": "^7.27.6",

|

||||

"@google/gemini-cli-test-utils": "file:../test-utils",

|

||||

"@qwen-code/qwen-code-test-utils": "file:../test-utils",

|

||||

"@testing-library/react": "^16.3.0",

|

||||

"@types/archiver": "^6.0.3",

|

||||

"@types/command-exists": "^1.2.3",

|

||||

@@ -92,8 +93,7 @@

|

||||

"pretty-format": "^30.0.2",

|

||||

"react-dom": "^19.1.0",

|

||||

"typescript": "^5.3.3",

|

||||

"vitest": "^3.1.1",

|

||||

"@qwen-code/qwen-code-test-utils": "file:../test-utils"

|

||||

"vitest": "^3.1.1"

|

||||

},

|

||||

"engines": {

|

||||

"node": ">=20"

|

||||

|

||||

@@ -83,12 +83,26 @@ export const useAuthCommand = (

|

||||

async (authType: AuthType, credentials?: OpenAICredentials) => {

|

||||

try {

|

||||

const authTypeScope = getPersistScopeForModelSelection(settings);

|

||||

|

||||

// Persist authType

|

||||

settings.setValue(

|

||||

authTypeScope,

|

||||

'security.auth.selectedType',

|

||||

authType,

|

||||

);

|

||||

|

||||

// Persist model from ContentGenerator config (handles fallback cases)

|

||||

// This ensures that when syncAfterAuthRefresh falls back to default model,

|

||||

// it gets persisted to settings.json

|

||||

const contentGeneratorConfig = config.getContentGeneratorConfig();

|

||||

if (contentGeneratorConfig?.model) {

|

||||

settings.setValue(

|

||||

authTypeScope,

|

||||

'model.name',

|

||||

contentGeneratorConfig.model,

|

||||

);

|

||||

}

|

||||

|

||||

// Only update credentials if not switching to QWEN_OAUTH,

|

||||

// so that OpenAI credentials are preserved when switching to QWEN_OAUTH.

|

||||

if (authType !== AuthType.QWEN_OAUTH && credentials) {

|

||||

@@ -106,9 +120,6 @@ export const useAuthCommand = (

|

||||

credentials.baseUrl,

|

||||

);

|

||||

}

|

||||

if (credentials?.model != null) {

|

||||

settings.setValue(authTypeScope, 'model.name', credentials.model);

|

||||

}

|

||||

}

|

||||

} catch (error) {

|

||||

handleAuthFailure(error);

|

||||

|

||||

@@ -117,8 +117,33 @@ describe('errors', () => {

|

||||

expect(getErrorMessage(undefined)).toBe('undefined');

|

||||

});

|

||||

|

||||

it('should handle objects', () => {

|

||||

const obj = { message: 'test' };

|

||||

it('should extract message from error-like objects', () => {

|

||||

const obj = { message: 'test error message' };

|

||||

expect(getErrorMessage(obj)).toBe('test error message');

|

||||

});

|

||||

|

||||

it('should stringify plain objects without message property', () => {

|

||||

const obj = { code: 500, details: 'internal error' };

|

||||

expect(getErrorMessage(obj)).toBe(

|

||||

'{"code":500,"details":"internal error"}',

|

||||

);

|

||||

});

|

||||

|

||||

it('should handle empty objects', () => {

|

||||

expect(getErrorMessage({})).toBe('{}');

|

||||

});

|

||||

|

||||

it('should handle objects with non-string message property', () => {

|

||||

const obj = { message: 123 };

|

||||

expect(getErrorMessage(obj)).toBe('{"message":123}');

|

||||

});

|

||||

|

||||

it('should fallback to String() when toJSON returns undefined', () => {

|

||||

const obj = {

|

||||

toJSON() {

|

||||

return undefined;

|

||||

},

|

||||

};

|

||||

expect(getErrorMessage(obj)).toBe('[object Object]');

|

||||

});

|

||||

});

|

||||

|

||||

@@ -18,6 +18,29 @@ export function getErrorMessage(error: unknown): string {

|

||||

if (error instanceof Error) {

|

||||

return error.message;

|

||||

}

|

||||

|

||||

// Handle objects with message property (error-like objects)

|

||||

if (

|

||||

error !== null &&

|

||||

typeof error === 'object' &&

|

||||

'message' in error &&

|

||||

typeof (error as { message: unknown }).message === 'string'

|

||||

) {

|

||||

return (error as { message: string }).message;

|

||||

}

|

||||

|

||||

// Handle plain objects by stringifying them

|

||||

if (error !== null && typeof error === 'object') {

|

||||

try {

|

||||

const stringified = JSON.stringify(error);

|

||||

// JSON.stringify can return undefined for objects with toJSON() returning undefined

|

||||

return stringified ?? String(error);

|

||||

} catch {

|

||||

// If JSON.stringify fails (circular reference, etc.), fall back to String

|

||||

return String(error);

|

||||

}

|

||||

}

|

||||

|

||||

return String(error);

|

||||

}
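To see how the branches behave, here is a small self-contained sketch. The function body is copied from the hunk above so the example runs on its own; the sample inputs (including the circular object) are illustrative and not part of the change.

```typescript
function getErrorMessage(error: unknown): string {
  if (error instanceof Error) {
    return error.message;
  }
  // Error-like objects: a string `message` property is returned directly.
  if (
    error !== null &&
    typeof error === 'object' &&
    'message' in error &&
    typeof (error as { message: unknown }).message === 'string'
  ) {
    return (error as { message: string }).message;
  }
  // Other objects: JSON when possible, String() as the fallback.
  if (error !== null && typeof error === 'object') {
    try {
      const stringified = JSON.stringify(error);
      return stringified ?? String(error);
    } catch {
      return String(error);
    }
  }
  return String(error);
}

// Illustrative input: JSON.stringify throws on circular references.
const circular: Record<string, unknown> = {};
circular['self'] = circular;

const samples: Array<[string, unknown]> = [
  ['Error instance', new Error('boom')],
  ['error-like object', { message: 'test error message' }],
  ['plain object', { code: 500, details: 'internal error' }],
  ['non-string message', { message: 123 }],
  ['circular object', circular],
  ['undefined', undefined],
];

for (const [label, value] of samples) {
  console.log(`${label}: ${getErrorMessage(value)}`);
}
```

The circular case exercises the `catch` branch, which the tests in this diff do not cover explicitly.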

|

||||

|

||||

|

||||

@@ -10,6 +10,7 @@ import {

|

||||

type ContentGeneratorConfigSources,

|

||||

resolveModelConfig,

|

||||

type ModelConfigSourcesInput,

|

||||

type ProviderModelConfig,

|

||||

} from '@qwen-code/qwen-code-core';

|

||||

import type { Settings } from '../config/settings.js';

|

||||

|

||||

@@ -81,6 +82,21 @@ export function resolveCliGenerationConfig(

|

||||

|

||||

const authType = selectedAuthType;

|

||||

|

||||

// Find modelProvider from settings.modelProviders based on authType and model

|

||||

let modelProvider: ProviderModelConfig | undefined;

|

||||

if (authType && settings.modelProviders) {

|

||||

const providers = settings.modelProviders[authType];

|

||||

if (providers && Array.isArray(providers)) {

|

||||

// Try to find by requested model (from CLI or settings)

|

||||

const requestedModel = argv.model || settings.model?.name;

|

||||

if (requestedModel) {

|

||||

modelProvider = providers.find((p) => p.id === requestedModel) as

|

||||

| ProviderModelConfig

|

||||

| undefined;

|

||||

}

|

||||

}

|

||||

}
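The lookup added in this hunk can be read in isolation as follows. This is a sketch under assumptions: `ProviderModelConfigLike`, `ModelProvidersLike`, and `findModelProvider` are hypothetical stand-ins for the real types and inline logic, and the auth type and model ids are made up for the example.

```typescript
// Hypothetical, trimmed-down stand-in for ProviderModelConfig.
interface ProviderModelConfigLike {
  id: string;
  generationConfig?: { timeout?: number };
}

// Hypothetical shape of settings.modelProviders: one list per auth type.
type ModelProvidersLike = Record<string, ProviderModelConfigLike[] | undefined>;

// Mirrors the hunk: the CLI argument takes priority over settings.model.name,
// and the provider entry is matched by exact id.
function findModelProvider(
  modelProviders: ModelProvidersLike | undefined,
  authType: string | undefined,
  cliModel: string | undefined,
  settingsModel: string | undefined,
): ProviderModelConfigLike | undefined {
  if (!authType || !modelProviders) return undefined;
  const providers = modelProviders[authType];
  if (!Array.isArray(providers)) return undefined;
  const requestedModel = cliModel || settingsModel;
  if (!requestedModel) return undefined;
  return providers.find((p) => p.id === requestedModel);
}

// Illustrative data only.
const modelProviders: ModelProvidersLike = {
  openai: [
    { id: 'model-a', generationConfig: { timeout: 60000 } },
    { id: 'model-b' },
  ],
};

const fromCli = findModelProvider(modelProviders, 'openai', 'model-b', 'model-a');
const fromSettings = findModelProvider(modelProviders, 'openai', undefined, 'model-a');
const unknownModel = findModelProvider(modelProviders, 'openai', 'model-z', undefined);

console.log(fromCli?.id, fromSettings?.id, unknownModel);
```

When no entry matches, the result stays `undefined` and only the remaining configuration sources apply.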

|

||||

|

||||

const configSources: ModelConfigSourcesInput = {

|

||||

authType,

|

||||

cli: {

|

||||

@@ -96,6 +112,7 @@ export function resolveCliGenerationConfig(

|

||||

| Partial<ContentGeneratorConfig>

|

||||

| undefined,

|

||||

},

|

||||

modelProvider,

|

||||

env,

|

||||

};

|

||||

|

||||

@@ -103,7 +120,7 @@ export function resolveCliGenerationConfig(

|

||||

|

||||

// Log warnings if any

|

||||

for (const warning of resolved.warnings) {

|

||||

console.warn(`[modelProviderUtils] ${warning}`);

|

||||

console.warn(warning);

|

||||

}

|

||||

|

||||

// Resolve OpenAI logging config (CLI-specific, not part of core resolver)

|

||||

|

||||

@@ -360,10 +360,10 @@ export async function start_sandbox(

|

||||

//

|

||||

// note this can only be done with binary linked from gemini-cli repo

|

||||

if (process.env['BUILD_SANDBOX']) {

|

||||

if (!gcPath.includes('gemini-cli/packages/')) {

|

||||

if (!gcPath.includes('qwen-code/packages/')) {

|

||||

throw new FatalSandboxError(

|

||||

'Cannot build sandbox using installed gemini binary; ' +

|

||||

'run `npm link ./packages/cli` under gemini-cli repo to switch to linked binary.',

|

||||

'Cannot build sandbox using installed Qwen Code binary; ' +

|

||||

'run `npm link ./packages/cli` under QwenCode-cli repo to switch to linked binary.',

|

||||

);

|

||||

} else {

|

||||

console.error('building sandbox ...');

|

||||

|

||||

@@ -63,7 +63,6 @@

|

||||

"shell-quote": "^1.8.3",

|

||||

"simple-git": "^3.28.0",

|

||||

"strip-ansi": "^7.1.0",

|

||||

"tiktoken": "^1.0.21",

|

||||

"undici": "^6.22.0",

|

||||

"uuid": "^9.0.1",

|

||||

"ws": "^8.18.0"

|

||||

|

||||

@@ -10,6 +10,7 @@ import type {

|

||||

GenerateContentParameters,

|

||||

} from '@google/genai';

|

||||

import { FinishReason, GenerateContentResponse } from '@google/genai';

|

||||

import type { ContentGeneratorConfig } from '../contentGenerator.js';

|

||||

|

||||

// Mock the request tokenizer module BEFORE importing the class that uses it.

|

||||

const mockTokenizer = {

|

||||

@@ -18,9 +19,7 @@ const mockTokenizer = {

|

||||

};

|

||||

|

||||

vi.mock('../../utils/request-tokenizer/index.js', () => ({

|

||||

getDefaultTokenizer: vi.fn(() => mockTokenizer),

|

||||

DefaultRequestTokenizer: vi.fn(() => mockTokenizer),

|

||||

disposeDefaultTokenizer: vi.fn(),

|

||||

RequestTokenEstimator: vi.fn(() => mockTokenizer),

|

||||

}));

|

||||

|

||||

type AnthropicCreateArgs = [unknown, { signal?: AbortSignal }?];

|

||||

@@ -127,6 +126,32 @@ describe('AnthropicContentGenerator', () => {

|

||||

);

|

||||

});

|

||||

|

||||

it('merges customHeaders into defaultHeaders (does not replace defaults)', async () => {

|

||||

const { AnthropicContentGenerator } = await importGenerator();

|

||||

void new AnthropicContentGenerator(

|

||||

{

|

||||

model: 'claude-test',

|

||||

apiKey: 'test-key',

|

||||

baseUrl: 'https://example.invalid',

|

||||

timeout: 10_000,

|

||||

maxRetries: 2,

|

||||

samplingParams: {},

|

||||

schemaCompliance: 'auto',

|

||||

reasoning: { effort: 'medium' },

|

||||

customHeaders: {

|

||||

'X-Custom': '1',

|

||||

},

|

||||

} as unknown as Record<string, unknown> as ContentGeneratorConfig,

|

||||

mockConfig,

|

||||

);

|

||||

|

||||

const headers = (anthropicState.constructorOptions?.['defaultHeaders'] ||

|

||||

{}) as Record<string, string>;

|

||||

expect(headers['User-Agent']).toContain('QwenCode/1.2.3');

|

||||

expect(headers['anthropic-beta']).toContain('effort-2025-11-24');

|

||||

expect(headers['X-Custom']).toBe('1');

|

||||

});

|

||||

|

||||

it('adds the effort beta header when reasoning.effort is set', async () => {

|

||||

const { AnthropicContentGenerator } = await importGenerator();

|

||||

void new AnthropicContentGenerator(

|

||||

@@ -325,9 +350,7 @@ describe('AnthropicContentGenerator', () => {

|

||||

};

|

||||

|

||||

const result = await generator.countTokens(request);

|

||||

expect(mockTokenizer.calculateTokens).toHaveBeenCalledWith(request, {

|

||||

textEncoding: 'cl100k_base',

|

||||

});

|

||||

expect(mockTokenizer.calculateTokens).toHaveBeenCalledWith(request);

|

||||

expect(result.totalTokens).toBe(50);

|

||||

});

|

||||

|

||||

|

||||

@@ -25,7 +25,7 @@ type MessageCreateParamsNonStreaming =

|

||||

Anthropic.MessageCreateParamsNonStreaming;

|

||||

type MessageCreateParamsStreaming = Anthropic.MessageCreateParamsStreaming;

|

||||

type RawMessageStreamEvent = Anthropic.RawMessageStreamEvent;

|

||||

import { getDefaultTokenizer } from '../../utils/request-tokenizer/index.js';

|

||||

import { RequestTokenEstimator } from '../../utils/request-tokenizer/index.js';

|

||||

import { safeJsonParse } from '../../utils/safeJsonParse.js';

|

||||

import { AnthropicContentConverter } from './converter.js';

|

||||

|

||||

@@ -105,10 +105,8 @@ export class AnthropicContentGenerator implements ContentGenerator {

|

||||

request: CountTokensParameters,

|

||||

): Promise<CountTokensResponse> {

|

||||

try {

|

||||

const tokenizer = getDefaultTokenizer();

|

||||

const result = await tokenizer.calculateTokens(request, {

|

||||

textEncoding: 'cl100k_base',

|

||||

});

|

||||

const estimator = new RequestTokenEstimator();

|

||||

const result = await estimator.calculateTokens(request);

|

||||

|

||||

return {

|

||||

totalTokens: result.totalTokens,

|

||||

@@ -141,6 +139,7 @@ export class AnthropicContentGenerator implements ContentGenerator {

|

||||

private buildHeaders(): Record<string, string> {

|

||||

const version = this.cliConfig.getCliVersion() || 'unknown';

|

||||

const userAgent = `QwenCode/${version} (${process.platform}; ${process.arch})`;

|

||||

const { customHeaders } = this.contentGeneratorConfig;

|

||||

|

||||

const betas: string[] = [];

|

||||

const reasoning = this.contentGeneratorConfig.reasoning;

|

||||

@@ -163,7 +162,7 @@ export class AnthropicContentGenerator implements ContentGenerator {

|

||||

headers['anthropic-beta'] = betas.join(',');

|

||||

}

|

||||

|

||||

return headers;

|

||||

return customHeaders ? { ...headers, ...customHeaders } : headers;

|

||||

}
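The new return statement relies on object-spread ordering: keys from `customHeaders` are written after the defaults, so defaults survive unless a custom header uses the same name. A minimal sketch, with `mergeHeaders` as a hypothetical free-standing version of that one line and made-up header values:

```typescript
// Hypothetical helper equivalent to the changed return statement.
function mergeHeaders(
  defaults: Record<string, string>,
  customHeaders?: Record<string, string>,
): Record<string, string> {
  return customHeaders ? { ...defaults, ...customHeaders } : defaults;
}

// Illustrative defaults, shaped like the headers asserted in the test file.
const defaults = {
  'User-Agent': 'QwenCode/1.2.3 (linux; x64)',
  'anthropic-beta': 'effort-2025-11-24',
};

// A non-colliding custom header is added alongside the defaults.
const added = mergeHeaders(defaults, { 'X-Custom': '1' });
// A colliding custom header replaces the default with the same name.
const overridden = mergeHeaders(defaults, { 'User-Agent': 'my-gateway/0.1' });

console.log(added, overridden);
```

Note that the comparison is by exact key: a custom `user-agent` in a different letter case would be a separate key in the merged object rather than an override.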

|

||||

|

||||

private async buildRequest(

|

||||

|

||||

@@ -153,6 +153,26 @@ vi.mock('../telemetry/loggers.js', () => ({

   logNextSpeakerCheck: vi.fn(),
 }));

+// Mock RequestTokenizer to use simple character-based estimation
+vi.mock('../utils/request-tokenizer/requestTokenizer.js', () => ({
+  RequestTokenizer: class {
+    async calculateTokens(request: { contents: unknown }) {
+      // Simple estimation: count characters in JSON and divide by 4
+      const totalChars = JSON.stringify(request.contents).length;
+      return {
+        totalTokens: Math.floor(totalChars / 4),
+        breakdown: {
+          textTokens: Math.floor(totalChars / 4),
+          imageTokens: 0,
+          audioTokens: 0,
+          otherTokens: 0,
+        },
+        processingTime: 0,
+      };
+    }
+  },
+}));
+
 /**
  * Array.fromAsync ponyfill, which will be available in es 2024.
  *

|

||||

@@ -417,6 +437,12 @@ describe('Gemini Client (client.ts)', () => {

       ] as Content[],
       originalTokenCount = 1000,
       summaryText = 'This is a summary.',
+      // Token counts returned in usageMetadata to simulate what the API would return
+      // Default values ensure successful compression:
+      // newTokenCount = originalTokenCount - (compressionInputTokenCount - 1000) + compressionOutputTokenCount
+      // = 1000 - (1600 - 1000) + 50 = 1000 - 600 + 50 = 450 (< 1000, success)
+      compressionInputTokenCount = 1600,
+      compressionOutputTokenCount = 50,
     } = {}) {
       const mockOriginalChat: Partial<GeminiChat> = {
         getHistory: vi.fn((_curated?: boolean) => chatHistory),

|

||||

@@ -438,6 +464,12 @@ describe('Gemini Client (client.ts)', () => {

             },
           },
         ],
+        usageMetadata: {
+          promptTokenCount: compressionInputTokenCount,
+          candidatesTokenCount: compressionOutputTokenCount,
+          totalTokenCount:
+            compressionInputTokenCount + compressionOutputTokenCount,
+        },
       } as unknown as GenerateContentResponse);

       // Calculate what the new history will be

|

||||

@@ -477,11 +509,13 @@ describe('Gemini Client (client.ts)', () => {

         .fn()
         .mockResolvedValue(mockNewChat as GeminiChat);

-      const totalChars = newCompressedHistory.reduce(
-        (total, content) => total + JSON.stringify(content).length,
+      // New token count formula: originalTokenCount - (compressionInputTokenCount - 1000) + compressionOutputTokenCount
+      const estimatedNewTokenCount = Math.max(
         0,
+        originalTokenCount -
+          (compressionInputTokenCount - 1000) +
+          compressionOutputTokenCount,
       );
-      const estimatedNewTokenCount = Math.floor(totalChars / 4);

       return {
         client,

|

||||
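The estimate used by the updated test helper can be reproduced in isolation. The following is a minimal sketch, assuming only the formula quoted in the test comments; the function name `estimateNewTokenCount` and the `fixedOffset` parameter are hypothetical and do not appear in the diff, and the meaning of the constant 1000 is not stated in this excerpt.

```typescript
// Hypothetical helper mirroring the formula from the test comments:
// newTokenCount = originalTokenCount - (compressionInputTokenCount - 1000)
//                 + compressionOutputTokenCount, clamped at zero.
function estimateNewTokenCount(
  originalTokenCount: number,
  compressionInputTokenCount: number,
  compressionOutputTokenCount: number,
  fixedOffset = 1000, // the constant 1000 used in the tests (assumed fixed)
): number {
  return Math.max(
    0,
    originalTokenCount -
      (compressionInputTokenCount - fixedOffset) +
      compressionOutputTokenCount,
  );
}

// Values taken from the test comments in this diff.
const defaultCase = estimateNewTokenCount(1000, 1600, 50); // 450, below 1000
const inflatedCase = estimateNewTokenCount(100, 1010, 200); // 290, above 100
const forcedCase = estimateNewTokenCount(100, 1100, 50); // 50, below 100
```

With these inputs the sketch yields the same numbers as the inline arithmetic in the test comments, which is why the inflated case is expected to report `COMPRESSION_FAILED_INFLATED_TOKEN_COUNT`.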

@@ -493,49 +527,58 @@ describe('Gemini Client (client.ts)', () => {

     describe('when compression inflates the token count', () => {
       it('allows compression to be forced/manual after a failure', async () => {
-        // Call 1 (Fails): Setup with a long summary to inflate tokens
+        // Call 1 (Fails): Setup with token counts that will inflate
+        // newTokenCount = originalTokenCount - (compressionInputTokenCount - 1000) + compressionOutputTokenCount
+        // = 100 - (1010 - 1000) + 200 = 100 - 10 + 200 = 290 > 100 (inflation)
         const longSummary = 'long summary '.repeat(100);
         const { client, estimatedNewTokenCount: inflatedTokenCount } = setup({
           originalTokenCount: 100,
           summaryText: longSummary,
+          compressionInputTokenCount: 1010,
+          compressionOutputTokenCount: 200,
         });
         expect(inflatedTokenCount).toBeGreaterThan(100); // Ensure setup is correct

         await client.tryCompressChat('prompt-id-4', false); // Fails

-        // Call 2 (Forced): Re-setup with a short summary
+        // Call 2 (Forced): Re-setup with token counts that will compress
+        // newTokenCount = 100 - (1100 - 1000) + 50 = 100 - 100 + 50 = 50 <= 100 (compression)
         const shortSummary = 'short';
         const { estimatedNewTokenCount: compressedTokenCount } = setup({
           originalTokenCount: 100,
           summaryText: shortSummary,
+          compressionInputTokenCount: 1100,
+          compressionOutputTokenCount: 50,
         });
         expect(compressedTokenCount).toBeLessThanOrEqual(100); // Ensure setup is correct

         const result = await client.tryCompressChat('prompt-id-4', true); // Forced

-        expect(result).toEqual({
-          compressionStatus: CompressionStatus.COMPRESSED,
-          newTokenCount: compressedTokenCount,
-          originalTokenCount: 100,
-        });
+        expect(result.compressionStatus).toBe(CompressionStatus.COMPRESSED);
+        expect(result.originalTokenCount).toBe(100);
+        // newTokenCount might be clamped to originalTokenCount due to tolerance logic
+        expect(result.newTokenCount).toBeLessThanOrEqual(100);
       });

       it('yields the result even if the compression inflated the tokens', async () => {
+        // newTokenCount = 100 - (1010 - 1000) + 200 = 100 - 10 + 200 = 290 > 100 (inflation)
         const longSummary = 'long summary '.repeat(100);
         const { client, estimatedNewTokenCount } = setup({
           originalTokenCount: 100,
           summaryText: longSummary,
+          compressionInputTokenCount: 1010,
+          compressionOutputTokenCount: 200,
         });
         expect(estimatedNewTokenCount).toBeGreaterThan(100); // Ensure setup is correct

         const result = await client.tryCompressChat('prompt-id-4', false);

-        expect(result).toEqual({
-          compressionStatus:
-            CompressionStatus.COMPRESSION_FAILED_INFLATED_TOKEN_COUNT,
-          newTokenCount: estimatedNewTokenCount,
-          originalTokenCount: 100,
-        });
+        expect(result.compressionStatus).toBe(
+          CompressionStatus.COMPRESSION_FAILED_INFLATED_TOKEN_COUNT,
+        );
+        expect(result.originalTokenCount).toBe(100);
+        // The newTokenCount should be higher than original since compression failed due to inflation
+        expect(result.newTokenCount).toBeGreaterThan(100);
         // IMPORTANT: The change in client.ts means setLastPromptTokenCount is NOT called on failure
         expect(
           uiTelemetryService.setLastPromptTokenCount,

|

||||

@@ -543,10 +586,13 @@ describe('Gemini Client (client.ts)', () => {

       });

       it('does not manipulate the source chat', async () => {
+        // newTokenCount = 100 - (1010 - 1000) + 200 = 100 - 10 + 200 = 290 > 100 (inflation)
         const longSummary = 'long summary '.repeat(100);
         const { client, mockOriginalChat, estimatedNewTokenCount } = setup({
           originalTokenCount: 100,
           summaryText: longSummary,
+          compressionInputTokenCount: 1010,
+          compressionOutputTokenCount: 200,
         });
         expect(estimatedNewTokenCount).toBeGreaterThan(100); // Ensure setup is correct

|

||||

@@ -557,10 +603,13 @@ describe('Gemini Client (client.ts)', () => {

       });

       it('will not attempt to compress context after a failure', async () => {
+        // newTokenCount = 100 - (1010 - 1000) + 200 = 100 - 10 + 200 = 290 > 100 (inflation)
         const longSummary = 'long summary '.repeat(100);
         const { client, estimatedNewTokenCount } = setup({
           originalTokenCount: 100,
           summaryText: longSummary,
+          compressionInputTokenCount: 1010,
+          compressionOutputTokenCount: 200,
         });
         expect(estimatedNewTokenCount).toBeGreaterThan(100); // Ensure setup is correct

|

||||

@@ -631,6 +680,7 @@ describe('Gemini Client (client.ts)', () => {

       );

       // Mock the summary response from the chat
+      // newTokenCount = 501 - (1400 - 1000) + 50 = 501 - 400 + 50 = 151 <= 501 (success)
       const summaryText = 'This is a summary.';
       mockGenerateContentFn.mockResolvedValue({
         candidates: [

|

||||

@@ -641,6 +691,11 @@ describe('Gemini Client (client.ts)', () => {

             },
           },
         ],
+        usageMetadata: {
+          promptTokenCount: 1400,
+          candidatesTokenCount: 50,
+          totalTokenCount: 1450,
+        },
       } as unknown as GenerateContentResponse);

       // Mock startChat to complete the compression flow

|

||||

@@ -719,13 +774,8 @@ describe('Gemini Client (client.ts)', () => {

         .fn()
         .mockResolvedValue(mockNewChat as GeminiChat);

-      const totalChars = newCompressedHistory.reduce(
-        (total, content) => total + JSON.stringify(content).length,
-        0,
-      );
-      const newTokenCount = Math.floor(totalChars / 4);
-
       // Mock the summary response from the chat
+      // newTokenCount = 501 - (1400 - 1000) + 50 = 501 - 400 + 50 = 151 <= 501 (success)
       mockGenerateContentFn.mockResolvedValue({
         candidates: [
           {

|

||||

@@ -735,6 +785,11 @@ describe('Gemini Client (client.ts)', () => {

             },
           },
         ],
+        usageMetadata: {
+          promptTokenCount: 1400,
+          candidatesTokenCount: 50,
+          totalTokenCount: 1450,
+        },
       } as unknown as GenerateContentResponse);

       const initialChat = client.getChat();

|

||||

@@ -744,12 +799,11 @@ describe('Gemini Client (client.ts)', () => {

       expect(tokenLimit).toHaveBeenCalled();
       expect(mockGenerateContentFn).toHaveBeenCalled();

-      // Assert that summarization happened and returned the correct stats
-      expect(result).toEqual({
-        compressionStatus: CompressionStatus.COMPRESSED,
-        originalTokenCount,
-        newTokenCount,
-      });
+      // Assert that summarization happened
+      expect(result.compressionStatus).toBe(CompressionStatus.COMPRESSED);
+      expect(result.originalTokenCount).toBe(originalTokenCount);
+      // newTokenCount might be clamped to originalTokenCount due to tolerance logic
+      expect(result.newTokenCount).toBeLessThanOrEqual(originalTokenCount);

       // Assert that the chat was reset
       expect(newChat).not.toBe(initialChat);

|

||||

@@ -809,13 +863,8 @@ describe('Gemini Client (client.ts)', () => {

         .fn()
         .mockResolvedValue(mockNewChat as GeminiChat);

-      const totalChars = newCompressedHistory.reduce(
-        (total, content) => total + JSON.stringify(content).length,
-        0,
-      );
-      const newTokenCount = Math.floor(totalChars / 4);
-
       // Mock the summary response from the chat
+      // newTokenCount = 700 - (1500 - 1000) + 50 = 700 - 500 + 50 = 250 <= 700 (success)
       mockGenerateContentFn.mockResolvedValue({
         candidates: [
           {

|

||||

@@ -825,6 +874,11 @@ describe('Gemini Client (client.ts)', () => {

             },
           },
         ],
+        usageMetadata: {
+          promptTokenCount: 1500,
+          candidatesTokenCount: 50,
+          totalTokenCount: 1550,
+        },
       } as unknown as GenerateContentResponse);

       const initialChat = client.getChat();

|

||||

@@ -834,12 +888,11 @@ describe('Gemini Client (client.ts)', () => {

       expect(tokenLimit).toHaveBeenCalled();
       expect(mockGenerateContentFn).toHaveBeenCalled();

-      // Assert that summarization happened and returned the correct stats
-      expect(result).toEqual({
-        compressionStatus: CompressionStatus.COMPRESSED,
-        originalTokenCount,
-        newTokenCount,
-      });
+      // Assert that summarization happened
+      expect(result.compressionStatus).toBe(CompressionStatus.COMPRESSED);
+      expect(result.originalTokenCount).toBe(originalTokenCount);
+      // newTokenCount might be clamped to originalTokenCount due to tolerance logic
+      expect(result.newTokenCount).toBeLessThanOrEqual(originalTokenCount);
       // Assert that the chat was reset
       expect(newChat).not.toBe(initialChat);

|

||||

@@ -887,13 +940,8 @@ describe('Gemini Client (client.ts)', () => {

         .fn()
         .mockResolvedValue(mockNewChat as GeminiChat);

-      const totalChars = newCompressedHistory.reduce(
-        (total, content) => total + JSON.stringify(content).length,
-        0,
-      );
-      const newTokenCount = Math.floor(totalChars / 4);
-
       // Mock the summary response from the chat
+      // newTokenCount = 100 - (1060 - 1000) + 20 = 100 - 60 + 20 = 60 <= 100 (success)
       mockGenerateContentFn.mockResolvedValue({
         candidates: [
           {

|

||||

@@ -903,6 +951,11 @@ describe('Gemini Client (client.ts)', () => {

             },
           },
         ],
+        usageMetadata: {
+          promptTokenCount: 1060,
+          candidatesTokenCount: 20,
+          totalTokenCount: 1080,
+        },
       } as unknown as GenerateContentResponse);

       const initialChat = client.getChat();

|

||||

@@ -911,11 +964,10 @@ describe('Gemini Client (client.ts)', () => {

       expect(mockGenerateContentFn).toHaveBeenCalled();

-      expect(result).toEqual({
-        compressionStatus: CompressionStatus.COMPRESSED,
-        originalTokenCount,
-        newTokenCount,
-      });
+      expect(result.compressionStatus).toBe(CompressionStatus.COMPRESSED);
+      expect(result.originalTokenCount).toBe(originalTokenCount);
+      // newTokenCount might be clamped to originalTokenCount due to tolerance logic
+      expect(result.newTokenCount).toBeLessThanOrEqual(originalTokenCount);

       // Assert that the chat was reset
       expect(newChat).not.toBe(initialChat);

|

||||

@@ -441,47 +441,19 @@ export class GeminiClient {

       yield { type: GeminiEventType.ChatCompressed, value: compressed };
     }

-    // Check session token limit after compression using accurate token counting
+    // Check session token limit after compression.
+    // `lastPromptTokenCount` is treated as authoritative for the (possibly compressed) history;
     const sessionTokenLimit = this.config.getSessionTokenLimit();
     if (sessionTokenLimit > 0) {
-      // Get all the content that would be sent in an API call
-      const currentHistory = this.getChat().getHistory(true);
-      const userMemory = this.config.getUserMemory();
-      const systemPrompt = getCoreSystemPrompt(
-        userMemory,
-        this.config.getModel(),
-      );
-      const initialHistory = await getInitialChatHistory(this.config);
-
-      // Create a mock request content to count total tokens
-      const mockRequestContent = [
-        {
-          role: 'system' as const,
-          parts: [{ text: systemPrompt }],
-        },
-        ...initialHistory,
-        ...currentHistory,
-      ];
-
-      // Use the improved countTokens method for accurate counting
-      const { totalTokens: totalRequestTokens } = await this.config
-        .getContentGenerator()
-        .countTokens({
-          model: this.config.getModel(),
-          contents: mockRequestContent,
-        });
-
-      if (
-        totalRequestTokens !== undefined &&
-        totalRequestTokens > sessionTokenLimit
-      ) {
+      const lastPromptTokenCount = uiTelemetryService.getLastPromptTokenCount();
+      if (lastPromptTokenCount > sessionTokenLimit) {
         yield {
           type: GeminiEventType.SessionTokenLimitExceeded,
           value: {
-            currentTokens: totalRequestTokens,
+            currentTokens: lastPromptTokenCount,
             limit: sessionTokenLimit,
             message:
-              `Session token limit exceeded: ${totalRequestTokens} tokens > ${sessionTokenLimit} limit. ` +
+              `Session token limit exceeded: ${lastPromptTokenCount} tokens > ${sessionTokenLimit} limit. ` +
               'Please start a new session or increase the sessionTokenLimit in your settings.json.',
           },
         };

|

||||

@@ -91,6 +91,8 @@ export type ContentGeneratorConfig = {

   userAgent?: string;
   // Schema compliance mode for tool definitions
   schemaCompliance?: 'auto' | 'openapi_30';
+  // Custom HTTP headers to be sent with requests
+  customHeaders?: Record<string, string>;
 };

 // Keep the public ContentGeneratorConfigSources API, but reuse the generic

|

||||

@@ -708,7 +708,7 @@ describe('GeminiChat', () => {

       // Verify that token counting is called when usageMetadata is present
       expect(uiTelemetryService.setLastPromptTokenCount).toHaveBeenCalledWith(
-        42,
+        57,
       );
       expect(uiTelemetryService.setLastPromptTokenCount).toHaveBeenCalledTimes(
         1,

|

||||

@@ -529,10 +529,10 @@ export class GeminiChat {

           // Collect token usage for consolidated recording
           if (chunk.usageMetadata) {
             usageMetadata = chunk.usageMetadata;
-            if (chunk.usageMetadata.promptTokenCount !== undefined) {
-              uiTelemetryService.setLastPromptTokenCount(
-                chunk.usageMetadata.promptTokenCount,
-              );
+            const lastPromptTokenCount =
+              usageMetadata.totalTokenCount ?? usageMetadata.promptTokenCount;
+            if (lastPromptTokenCount) {
+              uiTelemetryService.setLastPromptTokenCount(lastPromptTokenCount);
             }
           }

|

||||
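The selection rule introduced in the `GeminiChat` hunk above can be looked at on its own. This is a small sketch under stated assumptions: `UsageMetadataLike` and `pickLastPromptTokenCount` are hypothetical names used for illustration, and only the `??` fallback and the truthiness check come from the diff.

```typescript
// Hypothetical stand-in for the usage metadata fields referenced in the diff.
interface UsageMetadataLike {
  promptTokenCount?: number;
  totalTokenCount?: number;
}

// Prefer totalTokenCount; fall back to promptTokenCount only when the total
// is null or undefined. A falsy result (undefined or 0) means "do not record".
function pickLastPromptTokenCount(
  usage: UsageMetadataLike,
): number | undefined {
  const count = usage.totalTokenCount ?? usage.promptTokenCount;
  return count ? count : undefined;
}

const both = pickLastPromptTokenCount({
  promptTokenCount: 42,
  totalTokenCount: 57,
});
const promptOnly = pickLastPromptTokenCount({ promptTokenCount: 42 });
const zeroTotal = pickLastPromptTokenCount({
  promptTokenCount: 42,
  totalTokenCount: 0,
});
```

Note that `??` does not fall back when `totalTokenCount` is `0`, so in this sketch a zero total results in nothing being recorded rather than the prompt count being used.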

@@ -39,6 +39,41 @@ describe('GeminiContentGenerator', () => {

     mockGoogleGenAI = vi.mocked(GoogleGenAI).mock.results[0].value;
   });

+  it('should merge customHeaders into existing httpOptions.headers', async () => {
+    vi.mocked(GoogleGenAI).mockClear();
+
+    void new GeminiContentGenerator(
+      {
+        apiKey: 'test-api-key',
+        httpOptions: {
+          headers: {
+            'X-Base': 'base',
+            'X-Override': 'base',
+          },
+        },
+      },
+      {
+        customHeaders: {
+          'X-Custom': 'custom',
+          'X-Override': 'custom',
+        },
+        // eslint-disable-next-line @typescript-eslint/no-explicit-any
+      } as any,
+    );
+
+    expect(vi.mocked(GoogleGenAI)).toHaveBeenCalledTimes(1);
+    expect(vi.mocked(GoogleGenAI)).toHaveBeenCalledWith({
+      apiKey: 'test-api-key',
+      httpOptions: {
+        headers: {
+          'X-Base': 'base',
+          'X-Custom': 'custom',
+          'X-Override': 'custom',
+        },
+      },
+    });
+  });
+
   it('should call generateContent on the underlying model', async () => {
     const request = { model: 'gemini-1.5-flash', contents: [] };
     const expectedResponse = { responseId: 'test-id' };

|

||||

@@ -35,7 +35,26 @@ export class GeminiContentGenerator implements ContentGenerator {

     },
     contentGeneratorConfig?: ContentGeneratorConfig,
   ) {
-    this.googleGenAI = new GoogleGenAI(options);
+    const customHeaders = contentGeneratorConfig?.customHeaders;
+    const finalOptions = customHeaders
+      ? (() => {
+          const baseHttpOptions = options.httpOptions;
+          const baseHeaders = baseHttpOptions?.headers ?? {};
+
+          return {
+            ...options,
+            httpOptions: {
+              ...(baseHttpOptions ?? {}),
+              headers: {
+                ...baseHeaders,
+                ...customHeaders,
+              },
+            },
+          };
+        })()
+      : options;
+
+    this.googleGenAI = new GoogleGenAI(finalOptions);
     this.contentGeneratorConfig = contentGeneratorConfig;
   }

|

||||

@@ -22,17 +22,7 @@ const mockTokenizer = {

 };

 vi.mock('../../../utils/request-tokenizer/index.js', () => ({
-  getDefaultTokenizer: vi.fn(() => mockTokenizer),
-  DefaultRequestTokenizer: vi.fn(() => mockTokenizer),
-  disposeDefaultTokenizer: vi.fn(),
-}));
-
-// Mock tiktoken as well for completeness
-vi.mock('tiktoken', () => ({
-  get_encoding: vi.fn(() => ({
-    encode: vi.fn(() => new Array(50)), // Mock 50 tokens
-    free: vi.fn(),
-  })),
+  RequestTokenEstimator: vi.fn(() => mockTokenizer),
 }));

 // Now import the modules that depend on the mocked modules

|

||||

@@ -134,7 +124,7 @@ describe('OpenAIContentGenerator (Refactored)', () => {

   });

   describe('countTokens', () => {
-    it('should count tokens using tiktoken', async () => {
+    it('should count tokens using character-based estimation', async () => {
       const request: CountTokensParameters = {
         contents: [{ role: 'user', parts: [{ text: 'Hello world' }] }],
         model: 'gpt-4',

|

||||

@@ -142,26 +132,27 @@ describe('OpenAIContentGenerator (Refactored)', () => {

       const result = await generator.countTokens(request);

-      expect(result.totalTokens).toBe(50); // Mocked value
+      // 'Hello world' = 11 ASCII chars
+      // 11 / 4 = 2.75 -> ceil = 3 tokens
+      expect(result.totalTokens).toBe(3);
     });

-    it('should fall back to character approximation if tiktoken fails', async () => {
-      // Mock tiktoken to throw error
-      vi.doMock('tiktoken', () => ({
-        get_encoding: vi.fn().mockImplementation(() => {
-          throw new Error('Tiktoken failed');
-        }),
-      }));
-
+    it('should handle multimodal content', async () => {
       const request: CountTokensParameters = {
-        contents: [{ role: 'user', parts: [{ text: 'Hello world' }] }],
+        contents: [
+          {
+            role: 'user',
+            parts: [{ text: 'Hello' }, { text: ' world' }],
+          },
+        ],
         model: 'gpt-4',
       };

       const result = await generator.countTokens(request);

-      // Should use character approximation (content length / 4)
-      expect(result.totalTokens).toBeGreaterThan(0);
+      // Parts are combined for estimation:
+      // 'Hello world' = 11 ASCII chars -> 11/4 = 2.75 -> ceil = 3 tokens
+      expect(result.totalTokens).toBe(3);
     });
   });

|

||||
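The arithmetic in the updated `countTokens` tests can be checked with a few lines. This is only a sketch of the rule stated in the test comments (ASCII characters divided by 4, rounded up); `estimateTextTokens` and `estimatePartsTokens` are hypothetical names, and the real `RequestTokenEstimator` may handle non-ASCII text, images, or other part types differently.

```typescript
// Hypothetical estimator: roughly 4 ASCII characters per token, rounded up.
function estimateTextTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Text parts are concatenated before estimating, as the test comment
// "Parts are combined for estimation" suggests.
function estimatePartsTokens(parts: Array<{ text: string }>): number {
  return estimateTextTokens(parts.map((part) => part.text).join(''));
}

const singlePart = estimateTextTokens('Hello world'); // 11 chars -> 3
const combinedParts = estimatePartsTokens([
  { text: 'Hello' },
  { text: ' world' },
]); // also 11 chars -> 3
```

Combining the parts first matters in this sketch: estimating `'Hello'` and `' world'` separately would round each up and give 2 + 2 = 4 instead of 3.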

@@ -12,7 +12,7 @@ import type {

 import type { PipelineConfig } from './pipeline.js';
 import { ContentGenerationPipeline } from './pipeline.js';
 import { EnhancedErrorHandler } from './errorHandler.js';
-import { getDefaultTokenizer } from '../../utils/request-tokenizer/index.js';
+import { RequestTokenEstimator } from '../../utils/request-tokenizer/index.js';
 import type { ContentGeneratorConfig } from '../contentGenerator.js';

 export class OpenAIContentGenerator implements ContentGenerator {

|

||||

@@ -68,11 +68,9 @@ export class OpenAIContentGenerator implements ContentGenerator {

     request: CountTokensParameters,
   ): Promise<CountTokensResponse> {
     try {
-      // Use the new high-performance request tokenizer
-      const tokenizer = getDefaultTokenizer();
-      const result = await tokenizer.calculateTokens(request, {
-        textEncoding: 'cl100k_base', // Use GPT-4 encoding for consistency
-      });
+      // Use the request token estimator (character-based).
+      const estimator = new RequestTokenEstimator();
+      const result = await estimator.calculateTokens(request);

       return {
         totalTokens: result.totalTokens,

|

||||

@@ -142,6 +142,27 @@ describe('DashScopeOpenAICompatibleProvider', () => {

       });
     });

+    it('should merge custom headers with DashScope defaults', () => {
+      const providerWithCustomHeaders = new DashScopeOpenAICompatibleProvider(
+        {
+          ...mockContentGeneratorConfig,
+          customHeaders: {
+            'X-Custom': '1',
+            'X-DashScope-CacheControl': 'disable',
+          },
+        } as ContentGeneratorConfig,
+        mockCliConfig,
+      );
+
+      const headers = providerWithCustomHeaders.buildHeaders();
+
+      expect(headers['User-Agent']).toContain('QwenCode/1.0.0');
+      expect(headers['X-DashScope-UserAgent']).toContain('QwenCode/1.0.0');
+      expect(headers['X-DashScope-AuthType']).toBe(AuthType.QWEN_OAUTH);
+      expect(headers['X-Custom']).toBe('1');
+      expect(headers['X-DashScope-CacheControl']).toBe('disable');
+    });
+
     it('should handle unknown CLI version', () => {
       (
         mockCliConfig.getCliVersion as MockedFunction<

|

||||

@@ -47,13 +47,17 @@ export class DashScopeOpenAICompatibleProvider

   buildHeaders(): Record<string, string | undefined> {
     const version = this.cliConfig.getCliVersion() || 'unknown';
     const userAgent = `QwenCode/${version} (${process.platform}; ${process.arch})`;
-    const { authType } = this.contentGeneratorConfig;
-    return {
+    const { authType, customHeaders } = this.contentGeneratorConfig;
+    const defaultHeaders = {
       'User-Agent': userAgent,
       'X-DashScope-CacheControl': 'enable',
       'X-DashScope-UserAgent': userAgent,
       'X-DashScope-AuthType': authType,
     };
+
+    return customHeaders
+      ? { ...defaultHeaders, ...customHeaders }
+      : defaultHeaders;
   }

   buildClient(): OpenAI {

|

||||
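The header handling in the provider hunks above relies on object spread order: keys from the later spread replace keys from the earlier one. A minimal standalone sketch follows, assuming nothing beyond that language rule; `HeaderMap` and `mergeHeaders` are hypothetical names, not identifiers from the repository.

```typescript
// Hypothetical helper illustrating the merge pattern from the diff:
// custom headers are spread last, so they override defaults with the same key.
type HeaderMap = Record<string, string | undefined>;

function mergeHeaders(
  defaultHeaders: HeaderMap,
  customHeaders?: Record<string, string>,
): HeaderMap {
  return customHeaders
    ? { ...defaultHeaders, ...customHeaders }
    : defaultHeaders;
}

const baseHeaders: HeaderMap = {
  'User-Agent': 'QwenCode/1.0.0',
  'X-DashScope-CacheControl': 'enable',
};

const mergedHeaders = mergeHeaders(baseHeaders, {
  'X-Custom': '1',
  'X-DashScope-CacheControl': 'disable',
});
```

In this sketch `mergedHeaders` keeps the default `User-Agent`, gains `X-Custom`, and carries `disable` for `X-DashScope-CacheControl`, which is the behavior the DashScope test in this diff asserts.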

@@ -73,6 +73,26 @@ describe('DefaultOpenAICompatibleProvider', () => {

|

||||

});

|

||||

});

|

||||

|

||||

it('should merge customHeaders with defaults (and allow overrides)', () => {

|

||||

const providerWithCustomHeaders = new DefaultOpenAICompatibleProvider(

|

||||

{

|

||||

...mockContentGeneratorConfig,

|

||||

customHeaders: {

|

||||

'X-Custom': '1',

|

||||

'User-Agent': 'custom-agent',

|