Mirror of https://github.com/QwenLM/qwen-code.git
Synced 2026-01-20 15:56:19 +00:00

Compare commits: fix/nonint...v0.7.2-pre

149 commits

| SHA1 |
|---|
| 1041becda3 |
| 55a5df46ba |
| eb7dc53d2e |
| de47c4e98b |
| eed46447da |
| 8de81b6299 |
| b13c5bf090 |
| 0a64fa78f5 |
| f99295462d |
| 1145045a5a |
| 95c551c1b4 |
| ec2aa6d86d |
| 66ad936c31 |
| 8b5f198e3c |
| e8356c5f9e |
| dc067697dc |
| 79cce84280 |
| b9207c5884 |
| baf848a4d9 |
| d0104dc487 |
| 531062aeaf |
| ced1b1db80 |
| cf140b1b9d |
| 1f1e78aa3b |
| 511269446f |
| 0901b228a7 |
| 0681c71894 |
| 155c4b9728 |
| 57ca2823b3 |
| 620341eeae |
| da8c49cb9d |
| 2852f48a4a |
| d7d3371ddf |
| c6c33233c5 |
| 4213d06ab9 |
| 106b69e5c0 |
| 6afe0f8c29 |
| 0b3be1a82c |
| 8af43e3ac3 |
| 04a11aa111 |
| 45236b6ec5 |
| 9e8724a749 |
| d91e372c72 |
| 9325721811 |
| 56391b11ad |
| e748532e6d |
| d095a8b3f1 |
| f7585153b7 |
| d5ad3aebe4 |
| 98c680642f |
| e4efd3a15d |
| 886f914fb3 |
| 90365af2f8 |
| cbef5ffd89 |
| 63406b4ba4 |
| 52db3a766d |
| 5e80e80387 |
| 985f65f8fa |
| 9b9c5fadd5 |
| 372c67cad4 |
| af3864b5de |
| 1e3791f30a |
| 9bf626d051 |
| 6f33d92b2c |
| a35af6550f |
| d6607e134e |
| 9024a41723 |
| bde056b62e |
| ff5ea3c6d7 |
| 0faaac8fa4 |
| b923acd278 |
| c2e62b9122 |
| f54b62cda3 |
| 9521987a09 |
| d20f2a41a2 |
| e3eccb5987 |
| 22916457cd |
| 28bc4e6467 |
| 50bf65b10b |
| 47c8bc5303 |
| e70ecdf3a8 |
| 117af05122 |
| 557e6397bb |
| f762a62a2e |
| ca12772a28 |
| cec4b831b6 |
| 74bf72877d |
| b60ae42d10 |
| 54fd4c22a9 |
| f3b7c63cd1 |
| e4dee3a2b2 |
| 996b9df947 |
| 64291db926 |
| a8e3b9ebe7 |
| 5cfc9f4686 |
| 97497457a8 |
| 85473210e5 |
| c0c94bd4fc |
| 8111511a89 |
| a8eb858f99 |
| 52d6d1ff13 |
| c845049d26 |
| 299b7de030 |
| b93bb8bff6 |
| adb53a6dc6 |
| 09196c6e19 |
| 4bd01d592b |
| 6917031128 |
| b33525183f |
| 1aed5ce858 |
| bad5b0485d |
| 5a6e5bb452 |
| 5f8e1ebc94 |
| 9670456a56 |
| 4c186e7c92 |
| 2f6b0b233a |
| 9a8ce605c5 |
| afc693a4ab |
| 7173cba844 |
| ec8cccafd7 |
| 8c56b612fb |
| 7d40e1470c |
| b0e561ca73 |
| 563d68ad5b |
| 82c524f87d |
| df75aa06b6 |
| 8ea9871d23 |
| 097482910e |
| 9b78c17638 |
| 2d1934bf2f |
| 7f15256eba |
| 587fc82fbc |
| 1b7418f91f |
| b7828ac765 |
| 8705f734d0 |
| 0bd17a2406 |
| 59be5163fd |
| 95efe89ac0 |
| d86903ced5 |
| a47bdc0b06 |
| 0e769e100b |
| b5bcc07223 |
| 9653dc90d5 |
| 052337861b |
| f8aecb2631 |
| 361492247e |
| 824ca056a4 |
| 4f664d00ac |
| 7fdebe8fe6 |

File: .github/CODEOWNERS (3 lines changed; vendored; Normal file)

@@ -0,0 +1,3 @@
+* @tanzhenxin @DennisYu07 @gwinthis @LaZzyMan @pomelo-nwu @Mingholy
+# SDK TypeScript package changes require review from Mingholy
+packages/sdk-typescript/** @Mingholy
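
GitHub evaluates CODEOWNERS top to bottom and gives the last matching pattern precedence, so in this file the `packages/sdk-typescript/**` rule replaces, rather than adds to, the owner list from the `*` line. The sketch below models that rule with hypothetical helpers (`parseCodeowners`, `matches`, `ownersFor`). It understands only the two pattern shapes used here; real CODEOWNERS patterns follow richer gitignore-style syntax.

```typescript
// Hypothetical helpers illustrating CODEOWNERS "last matching pattern wins".
// Only "*" and "some/dir/**" patterns are supported in this sketch.

interface OwnerRule {
  pattern: string;
  owners: string[];
}

// Parse non-empty, non-comment lines into (pattern, owners) rules, in file order.
function parseCodeowners(text: string): OwnerRule[] {
  const rules: OwnerRule[] = [];
  for (const raw of text.split("\n")) {
    const line = raw.trim();
    if (line === "" || line.startsWith("#")) continue;
    const [pattern, ...owners] = line.split(/\s+/);
    rules.push({ pattern, owners });
  }
  return rules;
}

function matches(pattern: string, path: string): boolean {
  if (pattern === "*") return true;
  if (pattern.endsWith("/**")) {
    // "packages/sdk-typescript/**" -> prefix "packages/sdk-typescript/"
    return path.startsWith(pattern.slice(0, -2));
  }
  return pattern === path;
}

// Walk every rule; each later match replaces the owners chosen so far.
function ownersFor(rules: OwnerRule[], path: string): string[] {
  let owners: string[] = [];
  for (const rule of rules) {
    if (matches(rule.pattern, path)) owners = rule.owners;
  }
  return owners;
}

const codeowners = [
  "* @tanzhenxin @DennisYu07 @gwinthis @LaZzyMan @pomelo-nwu @Mingholy",
  "# SDK TypeScript package changes require review from Mingholy",
  "packages/sdk-typescript/** @Mingholy",
].join("\n");

const rules = parseCodeowners(codeowners);
console.log(ownersFor(rules, "packages/sdk-typescript/src/index.ts").join(" ")); // @Mingholy
console.log(ownersFor(rules, "README.md").length); // 6
```

With these rules, a change under `packages/sdk-typescript/` is owned by @Mingholy alone, while every other path falls back to the six owners on the `*` line.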

File: .github/workflows/release-sdk.yml (17 lines changed; vendored)

@@ -241,7 +241,7 @@ jobs:
 ${{ steps.vars.outputs.is_dry_run == 'false' && steps.vars.outputs.is_nightly == 'false' && steps.vars.outputs.is_preview == 'false' }}
 id: 'pr'
 env:
-GITHUB_TOKEN: '${{ secrets.GITHUB_TOKEN }}'
+GITHUB_TOKEN: '${{ secrets.CI_BOT_PAT }}'
 RELEASE_BRANCH: '${{ steps.release_branch.outputs.BRANCH_NAME }}'
 RELEASE_TAG: '${{ steps.version.outputs.RELEASE_TAG }}'
 run: |-

@@ -258,26 +258,15 @@ jobs:
 
 echo "PR_URL=${pr_url}" >> "${GITHUB_OUTPUT}"
 
-- name: 'Wait for CI checks to complete'
-if: |-
-${{ steps.vars.outputs.is_dry_run == 'false' && steps.vars.outputs.is_nightly == 'false' && steps.vars.outputs.is_preview == 'false' }}
-env:
-GITHUB_TOKEN: '${{ secrets.GITHUB_TOKEN }}'
-PR_URL: '${{ steps.pr.outputs.PR_URL }}'
-run: |-
-set -euo pipefail
-echo "Waiting for CI checks to complete..."
-gh pr checks "${PR_URL}" --watch --interval 30
-
 - name: 'Enable auto-merge for release PR'
 if: |-
 ${{ steps.vars.outputs.is_dry_run == 'false' && steps.vars.outputs.is_nightly == 'false' && steps.vars.outputs.is_preview == 'false' }}
 env:
-GITHUB_TOKEN: '${{ secrets.GITHUB_TOKEN }}'
+GITHUB_TOKEN: '${{ secrets.CI_BOT_PAT }}'
 PR_URL: '${{ steps.pr.outputs.PR_URL }}'
 run: |-
 set -euo pipefail
-gh pr merge "${PR_URL}" --merge --auto
+gh pr merge "${PR_URL}" --merge --auto --delete-branch
 
 - name: 'Create Issue on Failure'
 if: |-

File: README.md (10 lines changed)

@@ -25,7 +25,7 @@ Qwen Code is an open-source AI agent for the terminal, optimized for [Qwen3-Code
 - **OpenAI-compatible, OAuth free tier**: use an OpenAI-compatible API, or sign in with Qwen OAuth to get 2,000 free requests/day.
 - **Open-source, co-evolving**: both the framework and the Qwen3-Coder model are open-source—and they ship and evolve together.
 - **Agentic workflow, feature-rich**: rich built-in tools (Skills, SubAgents, Plan Mode) for a full agentic workflow and a Claude Code-like experience.
-- **Terminal-first, IDE-friendly**: built for developers who live in the command line, with optional integration for VS Code and Zed.
+- **Terminal-first, IDE-friendly**: built for developers who live in the command line, with optional integration for VS Code, Zed, and JetBrains IDEs.
 
 ## Installation
 

@@ -137,10 +137,11 @@ Use `-p` to run Qwen Code without the interactive UI—ideal for scripts, automa
 
 #### IDE integration
 
-Use Qwen Code inside your editor (VS Code and Zed):
+Use Qwen Code inside your editor (VS Code, Zed, and JetBrains IDEs):
 
 - [Use in VS Code](https://qwenlm.github.io/qwen-code-docs/en/users/integration-vscode/)
 - [Use in Zed](https://qwenlm.github.io/qwen-code-docs/en/users/integration-zed/)
+- [Use in JetBrains IDEs](https://qwenlm.github.io/qwen-code-docs/en/users/integration-jetbrains/)
 
 #### TypeScript SDK
 

@@ -200,6 +201,11 @@ If you encounter issues, check the [troubleshooting guide](https://qwenlm.github
 
 To report a bug from within the CLI, run `/bug` and include a short title and repro steps.
 
+## Connect with Us
+
+- Discord: https://discord.gg/ycKBjdNd
+- Dingtalk: https://qr.dingtalk.com/action/joingroup?code=v1,k1,+FX6Gf/ZDlTahTIRi8AEQhIaBlqykA0j+eBKKdhLeAE=&_dt_no_comment=1&origin=1
+
 ## Acknowledgments
 
 This project is based on [Google Gemini CLI](https://github.com/google-gemini/gemini-cli). We acknowledge and appreciate the excellent work of the Gemini CLI team. Our main contribution focuses on parser-level adaptations to better support Qwen-Coder models.

@@ -10,4 +10,5 @@ export default {
 'web-search': 'Web Search',
 memory: 'Memory',
 'mcp-server': 'MCP Servers',
+sandbox: 'Sandboxing',
 };

File: docs/developers/tools/sandbox.md (90 lines changed; Normal file)

@@ -0,0 +1,90 @@
+## Customizing the sandbox environment (Docker/Podman)
+
+### BUILD_SANDBOX is currently not supported when Qwen Code is installed from npm
+
+1. Building a custom sandbox requires the build scripts (`scripts/build_sandbox.js`) in the source code repository.
+2. These build scripts are not included in the package published to npm.
+3. The code contains hard-coded path checks that explicitly reject build requests from anything other than a source checkout.
+
+If you need extra tools inside the container (e.g., `git`, `python`, `rg`), create a custom Dockerfile as follows:
+
+#### 1. Clone the Qwen Code project first: https://github.com/QwenLM/qwen-code.git
+
+#### 2. Run the following commands in the source code repository directory
+
+```bash
+# 1. Install the project dependencies
+npm install
+
+# 2. Build the Qwen Code project
+npm run build
+
+# 3. Verify that the dist directory has been generated
+ls -la packages/cli/dist/
+
+# 4. Create a global link in the CLI package directory
+cd packages/cli
+npm link
+
+# 5. Verify the link (it should now point to the source code)
+which qwen
+# Expected output: /xxx/xxx/.nvm/versions/node/v24.11.1/bin/qwen
+# or a similar path; either way, it should be a symbolic link
+
+# 6. Inspect the symbolic link to see which source code path it points to
+ls -la $(dirname $(which qwen))/../lib/node_modules/@qwen-code/qwen-code
+# It should show that this is a symbolic link pointing to your source code directory
+
+# 7. Check the qwen version
+qwen -v
+# npm link overwrites the global qwen. Because both installs can report the same version number, consider uninstalling the global CLI first
+```
+
+#### 3. Create your sandbox Dockerfile in the root directory of your own project
+
+- Path: `.qwen/sandbox.Dockerfile`
+
+- Official image: https://github.com/QwenLM/qwen-code/pkgs/container/qwen-code
+
+```dockerfile
+# Based on the official Qwen sandbox image (pinning an explicit version is recommended)
+FROM ghcr.io/qwenlm/qwen-code:sha-570ec43
+# Add your extra tools here
+RUN apt-get update && apt-get install -y \
+git \
+python3 \
+ripgrep
+```
+
+#### 4. Build the sandbox image for the first time from the root directory of your project
+
+```bash
+GEMINI_SANDBOX=docker BUILD_SANDBOX=1 qwen -s
+# Check that the sandbox version reported at startup matches your custom image; if it does, the custom sandbox started successfully
+```
+
+This builds a project-specific image based on the default sandbox image.
+
+#### Remove npm link
+
+- To restore the official qwen CLI, remove the npm link:
+
+```bash
+# Method 1: Unlink globally
+npm unlink -g @qwen-code/qwen-code
+
+# Method 2: Unlink from the packages/cli directory
+cd packages/cli
+npm unlink
+
+# Verify that the link has been removed
+which qwen
+# It should display "qwen not found"
+
+# Reinstall the global version if necessary
+npm install -g @qwen-code/qwen-code
+
+# Verify the restored installation
+which qwen
+qwen --version
+```

@@ -12,6 +12,7 @@ export default {
 },
 'integration-vscode': 'Visual Studio Code',
 'integration-zed': 'Zed IDE',
+'integration-jetbrains': 'JetBrains IDEs',
 'integration-github-action': 'Github Actions',
 'Code with Qwen Code': {
 type: 'separator',

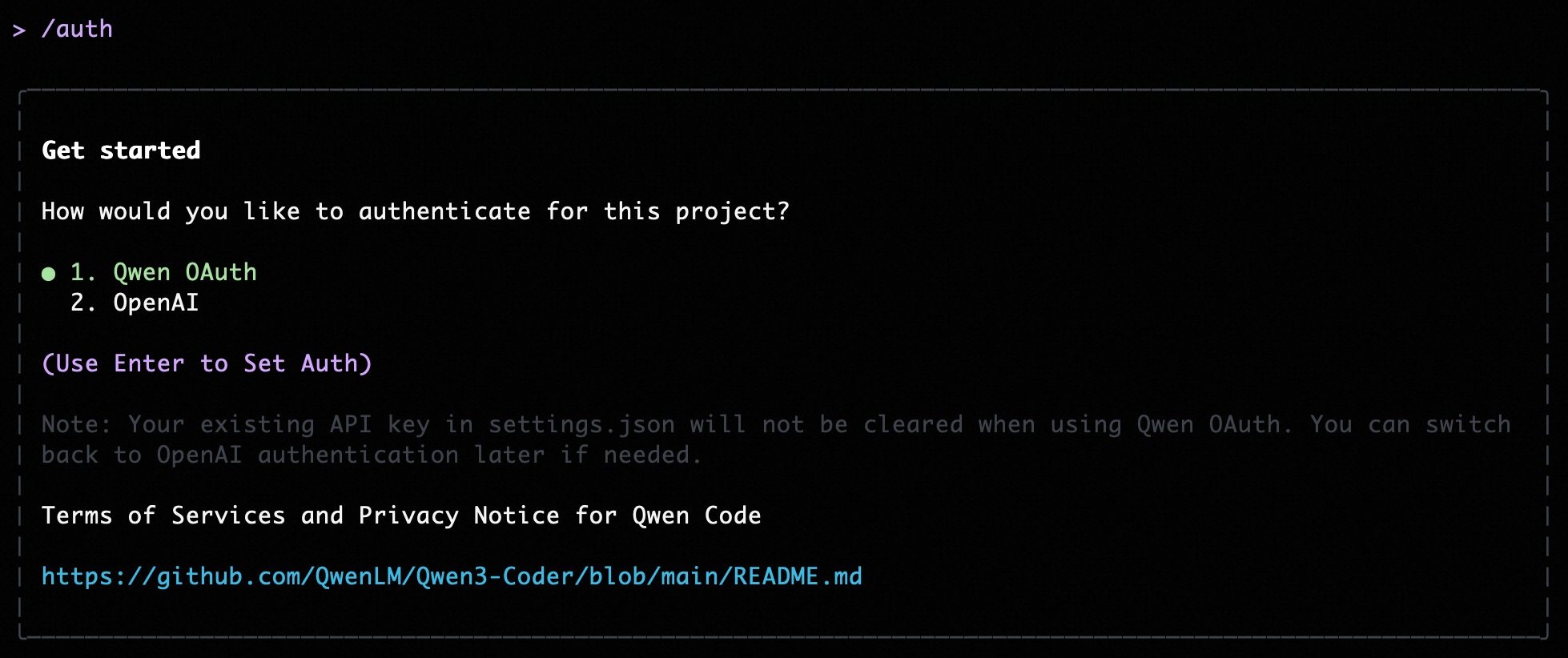

@@ -5,11 +5,13 @@ Qwen Code supports two authentication methods. Pick the one that matches how you
 - **Qwen OAuth (recommended)**: sign in with your `qwen.ai` account in a browser.
 - **OpenAI-compatible API**: use an API key (OpenAI or any OpenAI-compatible provider / endpoint).
 
+
+
 ## Option 1: Qwen OAuth (recommended & free) 👍
 
-Use this if you want the simplest setup and you’re using Qwen models.
+Use this if you want the simplest setup and you're using Qwen models.
 
-- **How it works**: on first start, Qwen Code opens a browser login page. After you finish, credentials are cached locally so you usually won’t need to log in again.
+- **How it works**: on first start, Qwen Code opens a browser login page. After you finish, credentials are cached locally so you usually won't need to log in again.
 - **Requirements**: a `qwen.ai` account + internet access (at least for the first login).
 - **Benefits**: no API key management, automatic credential refresh.
 - **Cost & quota**: free, with a quota of **60 requests/minute** and **2,000 requests/day**.
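
A script that calls the model in a loop can stay under the free-tier quota above with a client-side sliding-window counter. This is a minimal sketch, assuming the caller wants to self-throttle; `SlidingWindowLimiter` and `createOAuthFreeTierLimiter` are hypothetical names, and the quota itself is enforced by the service, not by this code.

```typescript
// Hypothetical client-side throttle for the documented Qwen OAuth free-tier
// quota (60 requests/minute, 2,000 requests/day). The service enforces the
// real quota; this only helps a caller avoid running into it.

interface RateWindow {
  limit: number; // maximum accepted requests inside the window
  durationMs: number; // window length in milliseconds
}

class SlidingWindowLimiter {
  private readonly windows: RateWindow[];
  private readonly longestMs: number;
  private timestamps: number[] = []; // accepted request times, oldest first

  constructor(windows: RateWindow[]) {
    this.windows = windows;
    this.longestMs = Math.max(...windows.map((w) => w.durationMs));
  }

  // Records the request and returns true only if every window still has room.
  tryAcquire(now: number): boolean {
    // Forget requests that have left even the longest window.
    while (this.timestamps.length > 0 && this.timestamps[0] <= now - this.longestMs) {
      this.timestamps.shift();
    }
    for (const w of this.windows) {
      const recent = this.timestamps.filter((t) => t > now - w.durationMs).length;
      if (recent >= w.limit) return false;
    }
    this.timestamps.push(now);
    return true;
  }
}

const MINUTE_MS = 60_000;
const DAY_MS = 24 * 60 * MINUTE_MS;

function createOAuthFreeTierLimiter(): SlidingWindowLimiter {
  return new SlidingWindowLimiter([
    { limit: 60, durationMs: MINUTE_MS },
    { limit: 2_000, durationMs: DAY_MS },
  ]);
}
```

Call `tryAcquire(Date.now())` before each request and wait or back off when it returns `false`. Rescanning the stored timestamps on every call keeps the sketch short; a per-window counter would be cheaper if that ever mattered.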

@@ -24,15 +26,54 @@ qwen
 
 Use this if you want to use OpenAI models or any provider that exposes an OpenAI-compatible API (e.g. OpenAI, Azure OpenAI, OpenRouter, ModelScope, Alibaba Cloud Bailian, or a self-hosted compatible endpoint).
 
-### Quick start (interactive, recommended for local use)
+### Recommended: Coding Plan (subscription-based) 🚀
 
-When you choose the OpenAI-compatible option in the CLI, it will prompt you for:
+Use this if you want predictable costs with higher usage quotas for the qwen3-coder-plus model.
 
-- **API key**
-- **Base URL** (default: `https://api.openai.com/v1`)
-- **Model** (default: `gpt-4o`)
+> [!IMPORTANT]
+>
+> Coding Plan is only available for users in China mainland (Beijing region).
 
-> **Note:** the CLI may display the key in plain text for verification. Make sure your terminal is not being recorded or shared.
+- **How it works**: subscribe to the Coding Plan with a fixed monthly fee, then configure Qwen Code to use the dedicated endpoint and your subscription API key.
+- **Requirements**: an active Coding Plan subscription from [Alibaba Cloud Bailian](https://bailian.console.aliyun.com/cn-beijing/?tab=globalset#/efm/coding_plan).
+- **Benefits**: higher usage quotas, predictable monthly costs, access to latest qwen3-coder-plus model.
+- **Cost & quota**: varies by plan (see table below).
+
+#### Coding Plan Pricing & Quotas
+
+| Feature | Lite Basic Plan | Pro Advanced Plan |
+| :------------------ | :-------------------- | :-------------------- |
+| **Price** | ¥40/month | ¥200/month |
+| **5-Hour Limit** | Up to 1,200 requests | Up to 6,000 requests |
+| **Weekly Limit** | Up to 9,000 requests | Up to 45,000 requests |
+| **Monthly Limit** | Up to 18,000 requests | Up to 90,000 requests |
+| **Supported Model** | qwen3-coder-plus | qwen3-coder-plus |
+
+#### Quick Setup for Coding Plan
+
+When you select the OpenAI-compatible option in the CLI, enter these values:
+
+- **API key**: `sk-sp-xxxxx`
+- **Base URL**: `https://coding.dashscope.aliyuncs.com/v1`
+- **Model**: `qwen3-coder-plus`
+
+> **Note**: Coding Plan API keys have the format `sk-sp-xxxxx`, which is different from standard Alibaba Cloud API keys.
+
+#### Configure via Environment Variables
+
+Set these environment variables to use Coding Plan:
+
+```bash
+export OPENAI_API_KEY="your-coding-plan-api-key" # Format: sk-sp-xxxxx
+export OPENAI_BASE_URL="https://coding.dashscope.aliyuncs.com/v1"
+export OPENAI_MODEL="qwen3-coder-plus"
+```
+
+For more details about Coding Plan, including subscription options and troubleshooting, see the [full Coding Plan documentation](https://bailian.console.aliyun.com/cn-beijing/?tab=doc#/doc/?type=model&url=3005961).
+
+### Other OpenAI-compatible Providers
+
+If you are using other providers (OpenAI, Azure, local LLMs, etc.), use the following configuration methods.
 
 ### Configure via command-line arguments
 
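
Before launching Qwen Code with the Coding Plan environment variables, a script could sanity-check them. The sketch below is hypothetical (it is not a Qwen Code feature) and only compares values with the ones this section lists: the `sk-sp-` key prefix, the dedicated base URL, and the `qwen3-coder-plus` model.

```typescript
// Hypothetical pre-flight check for the three environment variables the
// Coding Plan instructions set. It only compares values with what the docs list.

type Env = Record<string, string | undefined>;

const CODING_PLAN_BASE_URL = "https://coding.dashscope.aliyuncs.com/v1";
const CODING_PLAN_MODEL = "qwen3-coder-plus";

// Returns human-readable problems; an empty list means the values match the docs.
function checkCodingPlanEnv(env: Env): string[] {
  const problems: string[] = [];
  const key = env["OPENAI_API_KEY"] ?? "";
  if (!key.startsWith("sk-sp-")) {
    // Coding Plan keys use the sk-sp- prefix; standard Alibaba Cloud keys do not.
    problems.push("OPENAI_API_KEY should be a Coding Plan key (format sk-sp-xxxxx)");
  }
  if (env["OPENAI_BASE_URL"] !== CODING_PLAN_BASE_URL) {
    problems.push(`OPENAI_BASE_URL should be ${CODING_PLAN_BASE_URL}`);
  }
  if (env["OPENAI_MODEL"] !== CODING_PLAN_MODEL) {
    problems.push(`OPENAI_MODEL should be ${CODING_PLAN_MODEL}`);
  }
  return problems;
}
```

In Node.js you would pass `process.env`; an empty result means the three values match this section.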

@@ -104,7 +104,7 @@ Settings are organized into categories. All settings should be placed within the
 | `model.name` | string | The Qwen model to use for conversations. | `undefined` |
 | `model.maxSessionTurns` | number | Maximum number of user/model/tool turns to keep in a session. -1 means unlimited. | `-1` |
 | `model.summarizeToolOutput` | object | Enables or disables the summarization of tool output. You can specify the token budget for the summarization using the `tokenBudget` setting. Note: Currently only the `run_shell_command` tool is supported. For example `{"run_shell_command": {"tokenBudget": 2000}}` | `undefined` |
-| `model.generationConfig` | object | Advanced overrides passed to the underlying content generator. Supports request controls such as `timeout`, `maxRetries`, and `disableCacheControl`, along with fine-tuning knobs under `samplingParams` (for example `temperature`, `top_p`, `max_tokens`). Leave unset to rely on provider defaults. | `undefined` |
+| `model.generationConfig` | object | Advanced overrides passed to the underlying content generator. Supports request controls such as `timeout`, `maxRetries`, `disableCacheControl`, and `customHeaders` (custom HTTP headers for API requests), along with fine-tuning knobs under `samplingParams` (for example `temperature`, `top_p`, `max_tokens`). Leave unset to rely on provider defaults. | `undefined` |
 | `model.chatCompression.contextPercentageThreshold` | number | Sets the threshold for chat history compression as a percentage of the model's total token limit. This is a value between 0 and 1 that applies to both automatic compression and the manual `/compress` command. For example, a value of `0.6` will trigger compression when the chat history exceeds 60% of the token limit. Use `0` to disable compression entirely. | `0.7` |
 | `model.skipNextSpeakerCheck` | boolean | Skip the next speaker check. | `false` |
 | `model.skipLoopDetection` | boolean | Disables loop detection checks. Loop detection prevents infinite loops in AI responses but can generate false positives that interrupt legitimate workflows. Enable this option if you experience frequent false positive loop detection interruptions. | `false` |
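
The `contextPercentageThreshold` rule in the table reduces to a single comparison. A minimal sketch, assuming the history's token count and the model's token limit are already known (how Qwen Code counts tokens is not shown here); `shouldCompress` is a hypothetical name, and its `0.7` default mirrors the documented one.

```typescript
// Sketch of the documented trigger for chat history compression.

function shouldCompress(historyTokens: number, tokenLimit: number, threshold = 0.7): boolean {
  if (threshold < 0 || threshold > 1) {
    throw new RangeError("contextPercentageThreshold must be between 0 and 1");
  }
  if (threshold === 0) return false; // 0 disables compression entirely
  // Compress once the history exceeds the given fraction of the token limit.
  return historyTokens > threshold * tokenLimit;
}
```

With the table's `0.6` example and a 100,000-token limit, compression triggers once the history goes above 60,000 tokens.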

@@ -114,12 +114,16 @@ Settings are organized into categories. All settings should be placed within the
 
 **Example model.generationConfig:**
 
-```
+```json
 {
 "model": {
 "generationConfig": {
 "timeout": 60000,
 "disableCacheControl": false,
+"customHeaders": {
+"X-Request-ID": "req-123",
+"X-User-ID": "user-456"
+},
 "samplingParams": {
 "temperature": 0.2,
 "top_p": 0.8,

@@ -130,6 +134,8 @@ Settings are organized into categories. All settings should be placed within the
 }
 ```
 
+The `customHeaders` field allows you to add custom HTTP headers to all API requests. This is useful for request tracing, monitoring, API gateway routing, or when different models require different headers. If `customHeaders` is defined in `modelProviders[].generationConfig.customHeaders`, it will be used directly; otherwise, headers from `model.generationConfig.customHeaders` will be used. No merging occurs between the two levels.
+
 **model.openAILoggingDir examples:**
 
 - `"~/qwen-logs"` - Logs to `~/qwen-logs` directory

@@ -154,6 +160,10 @@ Use `modelProviders` to declare curated model lists per auth type that the `/mod
 "generationConfig": {
 "timeout": 60000,
 "maxRetries": 3,
+"customHeaders": {
+"X-Model-Version": "v1.0",
+"X-Request-Priority": "high"
+},
 "samplingParams": { "temperature": 0.2 }
 }
 }

@@ -215,7 +225,7 @@ Per-field precedence for `generationConfig`:
 3. `settings.model.generationConfig`
 4. Content-generator defaults (`getDefaultGenerationConfig` for OpenAI, `getParameterValue` for Gemini, etc.)
 
-`samplingParams` is treated atomically; provider values replace the entire object. Defaults from the content generator apply last so each provider retains its tuned baseline.
+`samplingParams` and `customHeaders` are both treated atomically; provider values replace the entire object. If `modelProviders[].generationConfig` defines these fields, they are used directly; otherwise, values from `model.generationConfig` are used. No merging occurs between provider and global configuration levels. Defaults from the content generator apply last so each provider retains its tuned baseline.
 
 ##### Selection persistence and recommendations
 
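
The precedence rule above (the first layer that defines a field wins, and `samplingParams` and `customHeaders` are copied whole rather than merged) can be sketched as follows. Layers are passed highest priority first: provider-level `modelProviders[].generationConfig` ahead of `settings.model.generationConfig`, with content-generator defaults last. The `GenerationConfig` shape, the helper names, and the example layer values are simplified assumptions for illustration.

```typescript
// Sketch of per-field precedence with atomic object-valued fields.

interface GenerationConfig {
  timeout?: number;
  maxRetries?: number;
  disableCacheControl?: boolean;
  customHeaders?: Record<string, string>;
  samplingParams?: Record<string, number>;
}

const FIELDS: (keyof GenerationConfig)[] = [
  "timeout",
  "maxRetries",
  "disableCacheControl",
  "customHeaders",
  "samplingParams",
];

// Copy one field from the first (highest-priority) layer that defines it.
function takeFirst<K extends keyof GenerationConfig>(
  key: K,
  layers: GenerationConfig[],
  out: GenerationConfig,
): void {
  const winner = layers.find((layer) => layer[key] !== undefined);
  if (winner !== undefined) out[key] = winner[key]; // whole value, never merged
}

function resolveGenerationConfig(layers: GenerationConfig[]): GenerationConfig {
  const out: GenerationConfig = {};
  for (const key of FIELDS) takeFirst(key, layers, out);
  return out;
}

const resolved = resolveGenerationConfig([
  { customHeaders: { "X-Model-Version": "v1.0" } }, // modelProviders[].generationConfig
  { timeout: 60000, customHeaders: { "X-Request-ID": "req-123" } }, // settings.model.generationConfig
  { timeout: 120000, maxRetries: 3 }, // made-up stand-in for content-generator defaults
]);
// resolved.customHeaders holds only X-Model-Version; timeout is 60000; maxRetries is 3.
```

Because the provider layer defines `customHeaders`, the global `X-Request-ID` header is dropped entirely, which is what "no merging occurs" means in practice.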

@@ -231,7 +241,6 @@ Per-field precedence for `generationConfig`:

|

||||

| ------------------------------------------------- | -------------------------- | --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | ----------- |

|

||||

| `context.fileName` | string or array of strings | The name of the context file(s). | `undefined` |

|

||||

| `context.importFormat` | string | The format to use when importing memory. | `undefined` |

|

||||

| `context.discoveryMaxDirs` | number | Maximum number of directories to search for memory. | `200` |

|

||||

| `context.includeDirectories` | array | Additional directories to include in the workspace context. Specifies an array of additional absolute or relative paths to include in the workspace context. Missing directories will be skipped with a warning by default. Paths can use `~` to refer to the user's home directory. This setting can be combined with the `--include-directories` command-line flag. | `[]` |

|

||||

| `context.loadFromIncludeDirectories` | boolean | Controls the behavior of the `/memory refresh` command. If set to `true`, `QWEN.md` files should be loaded from all directories that are added. If set to `false`, `QWEN.md` should only be loaded from the current directory. | `false` |

|

||||

| `context.fileFiltering.respectGitIgnore` | boolean | Respect .gitignore files when searching. | `true` |

|

||||
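
The `context.includeDirectories` row describes a small normalization step: `~` means the home directory, absolute paths are kept, relative paths are resolved, and missing directories are skipped with a warning. This sketch assumes POSIX-style paths and does no `..` normalization; it resolves relative entries against an injected base directory (which directory Qwen Code actually uses is not stated in the table) and takes the existence check as a parameter so it runs without filesystem access. All names are hypothetical.

```typescript
// Sketch of normalizing context.includeDirectories entries.

interface IncludeOptions {
  homeDir: string; // value used for "~"
  baseDir: string; // assumed base for relative entries
  exists: (dir: string) => boolean; // injected directory-existence check
}

function expandEntry(entry: string, opts: IncludeOptions): string {
  if (entry === "~") return opts.homeDir;
  if (entry.startsWith("~/")) return `${opts.homeDir}/${entry.slice(2)}`;
  if (entry.startsWith("/")) return entry; // already absolute
  return `${opts.baseDir}/${entry}`;
}

function resolveIncludeDirectories(
  entries: string[],
  opts: IncludeOptions,
): { dirs: string[]; warnings: string[] } {
  const dirs: string[] = [];
  const warnings: string[] = [];
  for (const entry of entries) {
    const dir = expandEntry(entry, opts);
    if (opts.exists(dir)) {
      dirs.push(dir);
    } else {
      warnings.push(`Skipping missing include directory: ${dir}`);
    }
  }
  return { dirs, warnings };
}
```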

@@ -301,6 +310,12 @@ If you are experiencing performance issues with file searching (e.g., with `@` c
 >
 > **Note about advanced.tavilyApiKey:** This is a legacy configuration format. For Qwen OAuth users, DashScope provider is automatically available without any configuration. For other authentication types, configure Tavily or Google providers using the new `webSearch` configuration format.
 
+#### experimental
+
+| Setting | Type | Description | Default |
+| --------------------- | ------- | -------------------------------- | ------- |
+| `experimental.skills` | boolean | Enable experimental Agent Skills | `false` |
+
 #### mcpServers
 
 Configures connections to one or more Model-Context Protocol (MCP) servers for discovering and using custom tools. Qwen Code attempts to connect to each configured MCP server to discover available tools. If multiple MCP servers expose a tool with the same name, the tool names will be prefixed with the server alias you defined in the configuration (e.g., `serverAlias__actualToolName`) to avoid conflicts. Note that the system might strip certain schema properties from MCP tool definitions for compatibility. At least one of `command`, `url`, or `httpUrl` must be provided. If multiple are specified, the order of precedence is `httpUrl`, then `url`, then `command`.
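
The two mechanical rules in the `mcpServers` description, transport precedence and alias prefixing for clashing tool names, are sketched below. Type and function names are illustrative rather than Qwen Code's own. The sketch prefixes every copy of a clashing tool name; that is an assumption, since the description does not say whether one server keeps the unprefixed name.

```typescript
// Sketch of MCP transport precedence and tool-name disambiguation.

interface McpServerConfig {
  command?: string;
  url?: string;
  httpUrl?: string;
}

interface Transport {
  kind: "httpUrl" | "url" | "command";
  target: string;
}

// Precedence when several are set: httpUrl, then url, then command.
function selectTransport(alias: string, cfg: McpServerConfig): Transport {
  if (cfg.httpUrl) return { kind: "httpUrl", target: cfg.httpUrl };
  if (cfg.url) return { kind: "url", target: cfg.url };
  if (cfg.command) return { kind: "command", target: cfg.command };
  throw new Error(`MCP server "${alias}" needs one of command, url, or httpUrl`);
}

// Prefix tool names that appear on more than one server: serverAlias__toolName.
function qualifyToolNames(toolsByServer: Record<string, string[]>): string[] {
  const counts = new Map<string, number>();
  for (const tools of Object.values(toolsByServer)) {
    for (const tool of tools) counts.set(tool, (counts.get(tool) ?? 0) + 1);
  }
  const names: string[] = [];
  for (const [alias, tools] of Object.entries(toolsByServer)) {
    for (const tool of tools) {
      names.push((counts.get(tool) ?? 0) > 1 ? `${alias}__${tool}` : tool);
    }
  }
  return names;
}
```

For example, with hypothetical servers `github` (tools `search`, `create_issue`) and `docs` (tool `search`), the resulting names are `github__search`, `create_issue`, and `docs__search`.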

@@ -470,7 +485,7 @@ Arguments passed directly when running the CLI can override other configurations
 | `--telemetry-otlp-protocol` | | Sets the OTLP protocol for telemetry (`grpc` or `http`). | | Defaults to `grpc`. See [telemetry](../../developers/development/telemetry) for more information. |
 | `--telemetry-log-prompts` | | Enables logging of prompts for telemetry. | | See [telemetry](../../developers/development/telemetry) for more information. |
 | `--checkpointing` | | Enables [checkpointing](../features/checkpointing). | | |
-| `--acp` | | Enables ACP mode (Agent Control Protocol). Useful for IDE/editor integrations like [Zed](../integration-zed). | | Stable. Replaces the deprecated `--experimental-acp` flag. |
+| `--acp` | | Enables ACP mode (Agent Client Protocol). Useful for IDE/editor integrations like [Zed](../integration-zed). | | Stable. Replaces the deprecated `--experimental-acp` flag. |
 | `--experimental-skills` | | Enables experimental [Agent Skills](../features/skills) (registers the `skill` tool and loads Skills from `.qwen/skills/` and `~/.qwen/skills/`). | | Experimental. |
 | `--extensions` | `-e` | Specifies a list of extensions to use for the session. | Extension names | If not provided, all available extensions are used. Use the special term `qwen -e none` to disable all extensions. Example: `qwen -e my-extension -e my-other-extension` |
 | `--list-extensions` | `-l` | Lists all available extensions and exits. | | |

@@ -519,16 +534,13 @@ Here's a conceptual example of what a context file at the root of a TypeScript p
 
 This example demonstrates how you can provide general project context, specific coding conventions, and even notes about particular files or components. The more relevant and precise your context files are, the better the AI can assist you. Project-specific context files are highly encouraged to establish conventions and context.
 
-- **Hierarchical Loading and Precedence:** The CLI implements a sophisticated hierarchical memory system by loading context files (e.g., `QWEN.md`) from several locations. Content from files lower in this list (more specific) typically overrides or supplements content from files higher up (more general). The exact concatenation order and final context can be inspected using the `/memory show` command. The typical loading order is:
+- **Hierarchical Loading and Precedence:** The CLI implements a hierarchical memory system by loading context files (e.g., `QWEN.md`) from several locations. Content from files lower in this list (more specific) typically overrides or supplements content from files higher up (more general). The exact concatenation order and final context can be inspected using the `/memory show` command. The typical loading order is:
 1. **Global Context File:**
 - Location: `~/.qwen/<configured-context-filename>` (e.g., `~/.qwen/QWEN.md` in your user home directory).
 - Scope: Provides default instructions for all your projects.
 2. **Project Root & Ancestors Context Files:**
 - Location: The CLI searches for the configured context file in the current working directory and then in each parent directory up to either the project root (identified by a `.git` folder) or your home directory.
 - Scope: Provides context relevant to the entire project or a significant portion of it.
-3. **Sub-directory Context Files (Contextual/Local):**
-- Location: The CLI also scans for the configured context file in subdirectories _below_ the current working directory (respecting common ignore patterns like `node_modules`, `.git`, etc.). The breadth of this search is limited to 200 directories by default, but can be configured with the `context.discoveryMaxDirs` setting in your `settings.json` file.
-- Scope: Allows for highly specific instructions relevant to a particular component, module, or subsection of your project.
 - **Concatenation & UI Indication:** The contents of all found context files are concatenated (with separators indicating their origin and path) and provided as part of the system prompt. The CLI footer displays the count of loaded context files, giving you a quick visual cue about the active instructional context.
 - **Importing Content:** You can modularize your context files by importing other Markdown files using the `@path/to/file.md` syntax. For more details, see the [Memory Import Processor documentation](../configuration/memory).
 - **Commands for Memory Management:**
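
The lookup described in items 1 and 2 of the loading order can be expressed as a short path walk. This sketch rests on a few assumptions: ancestors are listed outermost first to match the general-to-specific ordering, only POSIX paths are handled, and `hasGitDir` is an injected stand-in for checking whether a directory contains `.git`. The function names are hypothetical.

```typescript
// Sketch of the global + project-root/ancestor context-file search.

function parentDir(dir: string): string {
  const cut = dir.lastIndexOf("/");
  return cut <= 0 ? "/" : dir.slice(0, cut);
}

function contextFileCandidates(
  cwd: string,
  homeDir: string,
  fileName: string,
  hasGitDir: (dir: string) => boolean,
): string[] {
  const chain: string[] = [];
  let dir = cwd;
  // Walk upward from cwd, stopping at the project root (.git), home, or "/".
  for (;;) {
    chain.push(dir);
    if (hasGitDir(dir) || dir === homeDir || dir === "/") break;
    dir = parentDir(dir);
  }
  const join = (d: string) => (d === "/" ? `/${fileName}` : `${d}/${fileName}`);
  // Global file first, then ancestors from outermost to the current directory.
  return [`${homeDir}/.qwen/${fileName}`, ...chain.reverse().map(join)];
}
```

For a working directory `/home/u/proj/src` inside a repository rooted at `/home/u/proj`, the candidates are `/home/u/.qwen/QWEN.md`, `/home/u/proj/QWEN.md`, and `/home/u/proj/src/QWEN.md`.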

@@ -59,6 +59,7 @@ Commands for managing AI tools and models.
 | ---------------- | --------------------------------------------- | --------------------------------------------- |
 | `/mcp` | List configured MCP servers and tools | `/mcp`, `/mcp desc` |
 | `/tools` | Display currently available tool list | `/tools`, `/tools desc` |
+| `/skills` | List and run available skills (experimental) | `/skills`, `/skills <name>` |
 | `/approval-mode` | Change approval mode for tool usage | `/approval-mode <mode (auto-edit)> --project` |
 | →`plan` | Analysis only, no execution | Secure review |
 | →`default` | Require approval for edits | Daily use |

@@ -49,6 +49,8 @@ Cross-platform sandboxing with complete process isolation.
 
 By default, Qwen Code uses a published sandbox image (configured in the CLI package) and will pull it as needed.
 
+The container sandbox mounts your workspace and your `~/.qwen` directory into the container so auth and settings persist between runs.
+
 **Best for**: Strong isolation on any OS, consistent tooling inside a known image.
 
 ### Choosing a method

@@ -157,22 +159,13 @@ For a working allowlist-style proxy example, see: [Example Proxy Script](/develo
 
 ## Linux UID/GID handling
 
-The sandbox automatically handles user permissions on Linux. Override these permissions with:
+On Linux, Qwen Code defaults to enabling UID/GID mapping so the sandbox runs as your user (and reuses the mounted `~/.qwen`). Override with:
 
 ```bash
 export SANDBOX_SET_UID_GID=true # Force host UID/GID
 export SANDBOX_SET_UID_GID=false # Disable UID/GID mapping
 ```
 
-## Customizing the sandbox environment (Docker/Podman)
-
-If you need extra tools inside the container (e.g., `git`, `python`, `rg`), create a custom Dockerfile:
-
-- Path: `.qwen/sandbox.Dockerfile`
-- Then run with: `BUILD_SANDBOX=1 qwen -s ...`
-
-This builds a project-specific image based on the default sandbox image.
-
 ## Troubleshooting
 
 ### Common issues

@@ -11,12 +11,29 @@ This guide shows you how to create, use, and manage Agent Skills in **Qwen Code*
## Prerequisites

- Qwen Code (recent version)
- Run with the experimental flag enabled:

## How to enable

### Via CLI flag

```bash
qwen --experimental-skills
```

### Via settings.json

Add to your `~/.qwen/settings.json` or project's `.qwen/settings.json`:

```json
{
  "tools": {
    "experimental": {
      "skills": true
    }
  }
}
```
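The two switches can be expressed as a small check. This TypeScript sketch assumes that either source on its own turns the feature on (the text presents them as alternatives) and that the user and project `settings.json` files have already been merged into one object. `isSkillsEnabled` and `SkillsSettings` are hypothetical names for illustration, not part of Qwen Code's API.

```typescript
// Hypothetical shape: only the settings path documented above is modeled.
interface SkillsSettings {
  tools?: {
    experimental?: {
      skills?: boolean;
    };
  };
}

// Hypothetical check; assumes the CLI flag and the setting are OR-ed.
function isSkillsEnabled(
  argv: readonly string[],
  settings: SkillsSettings,
): boolean {
  // Passing --experimental-skills enables Skills for this run.
  if (argv.some((arg) => arg === '--experimental-skills')) return true;
  // Otherwise fall back to tools.experimental.skills from merged settings.
  return settings.tools?.experimental?.skills === true;
}
```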

- Basic familiarity with Qwen Code ([Quickstart](../quickstart.md))

## What are Agent Skills?

@@ -27,6 +44,14 @@ Agent Skills package expertise into discoverable capabilities. Each Skill consis

Skills are **model-invoked** — the model autonomously decides when to use them based on your request and the Skill’s description. This is different from slash commands, which are **user-invoked** (you explicitly type `/command`).

If you want to invoke a Skill explicitly, use the `/skills` slash command:

```bash
/skills <skill-name>
```

The `/skills` command is only available when you run with `--experimental-skills`. Use autocomplete to browse available Skills and descriptions.

### Benefits

- Extend Qwen Code for your workflows

57

docs/users/integration-jetbrains.md

Normal file

57

docs/users/integration-jetbrains.md

Normal file

@@ -0,0 +1,57 @@

|

||||

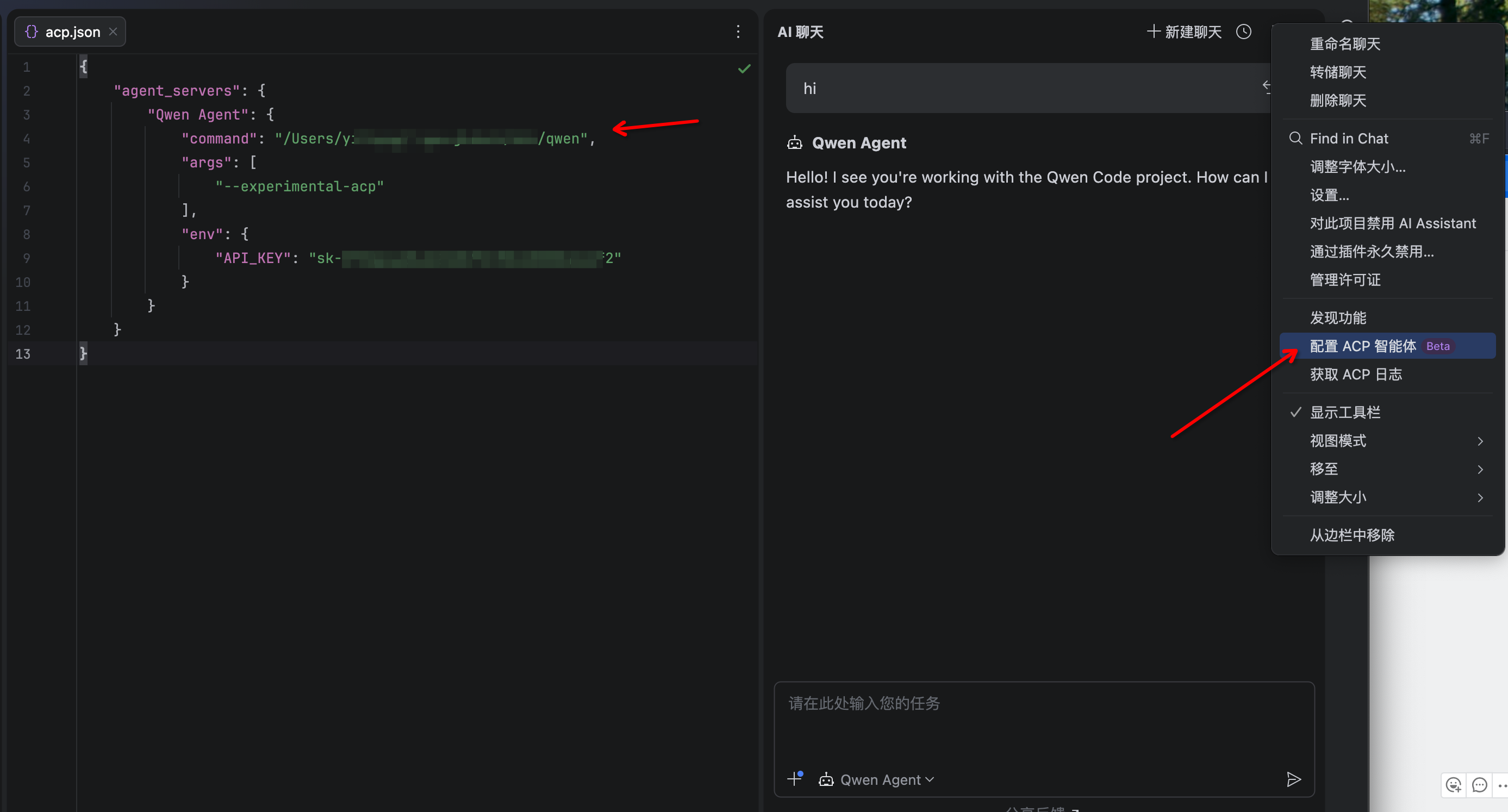

# JetBrains IDEs

> JetBrains IDEs provide native support for AI coding assistants through the Agent Client Protocol (ACP). This integration allows you to use Qwen Code directly within your JetBrains IDE with real-time code suggestions.

### Features

- **Native agent experience**: Integrated AI assistant panel within your JetBrains IDE
- **Agent Client Protocol**: Full support for ACP, enabling advanced IDE interactions
- **Symbol management**: #-mention files to add them to the conversation context
- **Conversation history**: Access to past conversations within the IDE

### Requirements

- JetBrains IDE with ACP support (IntelliJ IDEA, WebStorm, PyCharm, etc.)
- Qwen Code CLI installed

### Installation

1. Install Qwen Code CLI:

   ```bash
   npm install -g @qwen-code/qwen-code
   ```

2. Open your JetBrains IDE and navigate to the AI Chat tool window.

3. Click the 3-dot menu in the upper-right corner, select **Configure ACP Agent**, and configure Qwen Code with the following settings (replace `/path/to/qwen` with the path to your `qwen` executable):

   ```json
   {
     "agent_servers": {
       "qwen": {
         "command": "/path/to/qwen",
         "args": ["--acp"],
         "env": {}
       }
     }
   }
   ```

4. The Qwen Code agent should now be available in the AI Assistant panel.

## Troubleshooting

### Agent not appearing

- Run `qwen --version` in a terminal to verify the installation
- Ensure your JetBrains IDE version supports ACP
- Restart your JetBrains IDE

### Qwen Code not responding

- Check your internet connection
- Verify that the CLI works by running `qwen` in a terminal
- [File an issue on GitHub](https://github.com/qwenlm/qwen-code/issues) if the problem persists

@@ -18,23 +18,17 @@

### Requirements

- VS Code 1.98.0 or higher
- VS Code 1.85.0 or higher

### Installation

1. Install Qwen Code CLI:

```bash
npm install -g qwen-code
```

2. Download and install the extension from the [Visual Studio Code Extension Marketplace](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion).

Download and install the extension from the [Visual Studio Code Extension Marketplace](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion).

## Troubleshooting

### Extension not installing

- Ensure you have VS Code 1.98.0 or higher
- Ensure you have VS Code 1.85.0 or higher
- Check that VS Code has permission to install extensions
- Try installing directly from the Marketplace website

@@ -1,6 +1,6 @@

|

||||

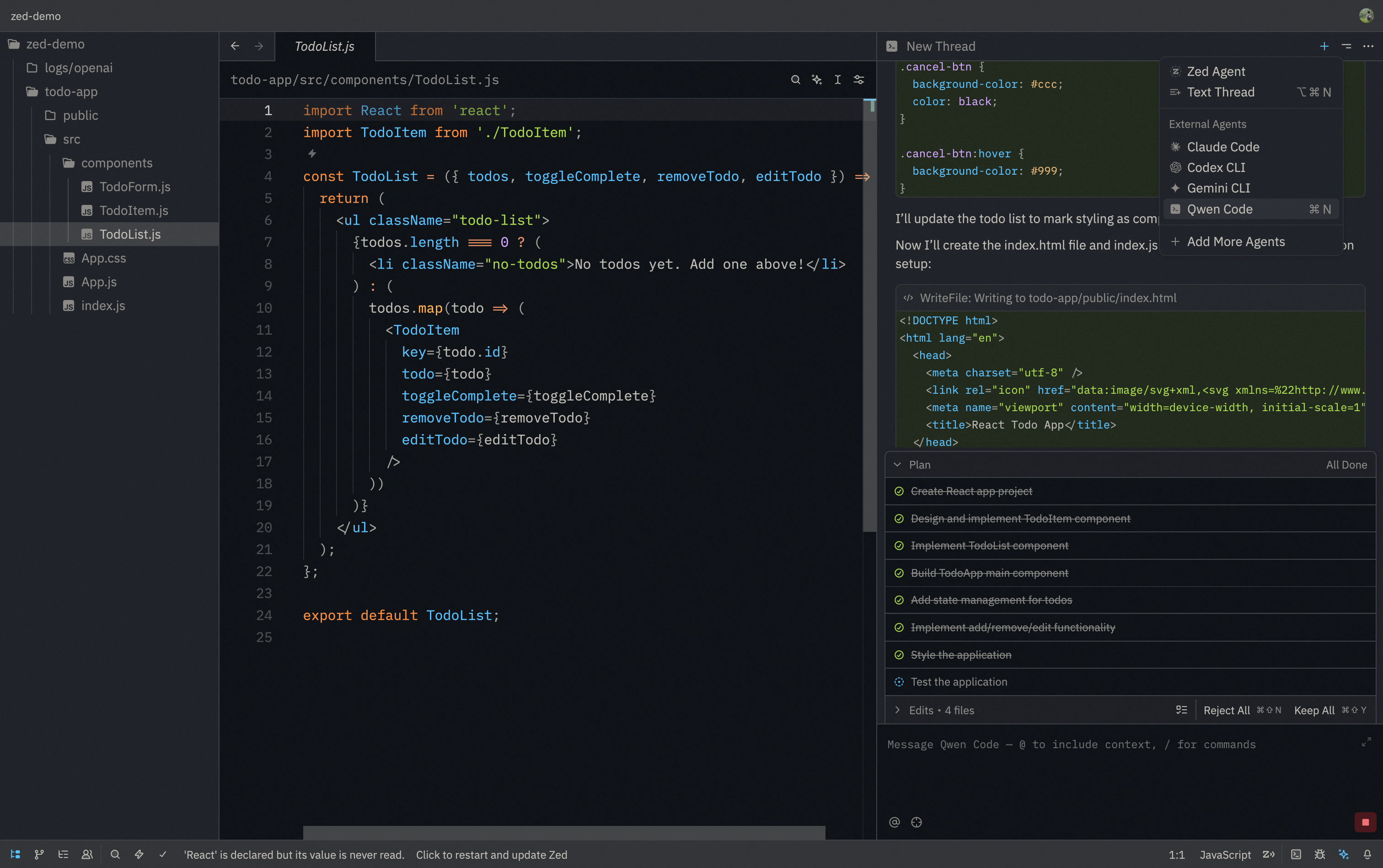

# Zed Editor

> Zed Editor provides native support for AI coding assistants through the Agent Control Protocol (ACP). This integration allows you to use Qwen Code directly within Zed's interface with real-time code suggestions.

> Zed Editor provides native support for AI coding assistants through the Agent Client Protocol (ACP). This integration allows you to use Qwen Code directly within Zed's interface with real-time code suggestions.

@@ -20,9 +20,9 @@

1. Install Qwen Code CLI:

```bash
npm install -g qwen-code
```
```bash
npm install -g @qwen-code/qwen-code
```

2. Download and install [Zed Editor](https://zed.dev/)

@@ -159,7 +159,7 @@ Qwen Code will:

### Test out other common workflows

There are a number of ways to work with Claude:

There are a number of ways to work with Qwen Code:

**Refactor code**

@@ -9,11 +9,18 @@ This guide provides solutions to common issues and debugging tips, including top

## Authentication or login errors

- **Error: `UNABLE_TO_GET_ISSUER_CERT_LOCALLY` or `unable to get local issuer certificate`**
- **Error: `UNABLE_TO_GET_ISSUER_CERT_LOCALLY`, `UNABLE_TO_VERIFY_LEAF_SIGNATURE`, or `unable to get local issuer certificate`**
  - **Cause:** You may be on a corporate network with a firewall that intercepts and inspects SSL/TLS traffic. This often requires a custom root CA certificate to be trusted by Node.js.
  - **Solution:** Set the `NODE_EXTRA_CA_CERTS` environment variable to the absolute path of your corporate root CA certificate file.
    - Example: `export NODE_EXTRA_CA_CERTS=/path/to/your/corporate-ca.crt`

- **Error: `Device authorization flow failed: fetch failed`**
  - **Cause:** Node.js could not reach Qwen OAuth endpoints (often a proxy or SSL/TLS trust issue). When available, Qwen Code will also print the underlying error cause (for example: `UNABLE_TO_VERIFY_LEAF_SIGNATURE`).
  - **Solution:**
    - Confirm you can access `https://chat.qwen.ai` from the same machine/network.
    - If you are behind a proxy, set it via `qwen --proxy <url>` (or the `proxy` setting in `settings.json`).
    - If your network uses a corporate TLS inspection CA, set `NODE_EXTRA_CA_CERTS` as described above.

- **Issue: Unable to display UI after authentication failure**
  - **Cause:** If authentication fails after selecting an authentication type, the `security.auth.selectedType` setting may be persisted in `settings.json`. On restart, the CLI may get stuck trying to authenticate with the failed auth type and fail to display the UI.
  - **Solution:** Clear the `security.auth.selectedType` configuration item in your `settings.json` file:
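If you prefer to script this fix, the TypeScript sketch below removes the key from an already-parsed settings object. It assumes the dotted name `security.auth.selectedType` maps to nested objects in the JSON; `clearSelectedAuthType` and the `Settings` type are hypothetical, and reading and writing `settings.json` is left out. Editing the file by hand works just as well.

```typescript
// Hypothetical settings shape: only the keys involved here are modeled.
type Settings = {
  security?: {
    auth?: { selectedType?: string; [key: string]: unknown };
    [key: string]: unknown;
  };
  [key: string]: unknown;
};

// Returns a copy of `settings` without security.auth.selectedType.
// Every other key is kept, and the input object is not modified.
function clearSelectedAuthType(settings: Settings): Settings {
  const security = settings.security;
  const auth = security?.auth;
  // Nothing to clear: return the input unchanged.
  if (!security || !auth || auth.selectedType === undefined) return settings;
  const restAuth = { ...auth };
  delete restAuth.selectedType;
  return { ...settings, security: { ...security, auth: restAuth } };
}
```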

@@ -311,9 +311,9 @@ function setupAcpTest(
}
});

it('returns modes on initialize and allows setting approval mode', async () => {
it('returns modes on initialize and allows setting mode and model', async () => {
const rig = new TestRig();
rig.setup('acp approval mode');
rig.setup('acp mode and model');

const { sendRequest, cleanup, stderr } = setupAcpTest(rig);

@@ -366,8 +366,14 @@ function setupAcpTest(
const newSession = (await sendRequest('session/new', {
cwd: rig.testDir!,
mcpServers: [],
})) as { sessionId: string };
})) as {
sessionId: string;
models: {
availableModels: Array<{ modelId: string }>;
};
};
expect(newSession.sessionId).toBeTruthy();
expect(newSession.models.availableModels.length).toBeGreaterThan(0);

// Test 4: Set approval mode to 'yolo'
const setModeResult = (await sendRequest('session/set_mode', {

@@ -392,6 +398,15 @@ function setupAcpTest(
})) as { modeId: string };
expect(setModeResult3).toBeDefined();
expect(setModeResult3.modeId).toBe('default');

// Test 7: Set model using first available model
const firstModel = newSession.models.availableModels[0];
const setModelResult = (await sendRequest('session/set_model', {
sessionId: newSession.sessionId,
modelId: firstModel.modelId,
})) as { modelId: string };
expect(setModelResult).toBeDefined();
expect(setModelResult.modelId).toBeTruthy();
} catch (e) {
if (stderr.length) {
console.error('Agent stderr:', stderr.join(''));

@@ -831,7 +831,7 @@ describe('Permission Control (E2E)', () => {
TEST_TIMEOUT,
);

it(
it.skip(
'should execute dangerous commands without confirmation',
async () => {
const q = query({

21

package-lock.json

generated

@@ -1,12 +1,12 @@
{
"name": "@qwen-code/qwen-code",
"version": "0.7.0",
"version": "0.7.2-preview.1",
"lockfileVersion": 3,
"requires": true,
"packages": {
"": {
"name": "@qwen-code/qwen-code",
"version": "0.7.0",
"version": "0.7.2-preview.1",
"workspaces": [
"packages/*"
],

@@ -6216,10 +6216,7 @@
"version": "4.0.3",
"resolved": "https://registry.npmjs.org/chokidar/-/chokidar-4.0.3.tgz",
"integrity": "sha512-Qgzu8kfBvo+cA4962jnP1KkS6Dop5NS6g7R5LFYJr4b8Ub94PPQXUksCw9PvXoeXPRRddRNC5C1JQUR2SMGtnA==",
"dev": true,
"license": "MIT",
"optional": true,
"peer": true,
"dependencies": {
"readdirp": "^4.0.1"
},

@@ -13882,10 +13879,7 @@
"version": "4.1.2",
"resolved": "https://registry.npmjs.org/readdirp/-/readdirp-4.1.2.tgz",
"integrity": "sha512-GDhwkLfywWL2s6vEjyhri+eXmfH6j1L7JE27WhqLeYzoh/A3DBaYGEj2H/HFZCn/kMfim73FXxEJTw06WtxQwg==",
"dev": true,
"license": "MIT",
"optional": true,
"peer": true,
"engines": {
"node": ">= 14.18.0"
},

@@ -17316,7 +17310,7 @@
},
"packages/cli": {
"name": "@qwen-code/qwen-code",
"version": "0.7.0",
"version": "0.7.2-preview.1",
"dependencies": {
"@google/genai": "1.30.0",
"@iarna/toml": "^2.2.5",

@@ -17953,7 +17947,7 @@
},
"packages/core": {
"name": "@qwen-code/qwen-code-core",
"version": "0.7.0",
"version": "0.7.2-preview.1",
"hasInstallScript": true,
"dependencies": {
"@anthropic-ai/sdk": "^0.36.1",

@@ -17974,6 +17968,7 @@
"ajv-formats": "^3.0.0",
"async-mutex": "^0.5.0",
"chardet": "^2.1.0",
"chokidar": "^4.0.3",
"diff": "^7.0.0",
"dotenv": "^17.1.0",
"fast-levenshtein": "^2.0.6",

@@ -18593,7 +18588,7 @@
},
"packages/sdk-typescript": {
"name": "@qwen-code/sdk",
"version": "0.1.0",
"version": "0.1.3",
"license": "Apache-2.0",
"dependencies": {
"@modelcontextprotocol/sdk": "^1.25.1",

@@ -21413,7 +21408,7 @@
},
"packages/test-utils": {
"name": "@qwen-code/qwen-code-test-utils",
"version": "0.7.0",
"version": "0.7.2-preview.1",
"dev": true,
"license": "Apache-2.0",
"devDependencies": {

@@ -21425,7 +21420,7 @@
},
"packages/vscode-ide-companion": {
"name": "qwen-code-vscode-ide-companion",
"version": "0.7.0",
"version": "0.7.2-preview.1",
"license": "LICENSE",
"dependencies": {
"@modelcontextprotocol/sdk": "^1.25.1",

@@ -1,6 +1,6 @@
{
"name": "@qwen-code/qwen-code",
"version": "0.7.0",
"version": "0.7.2-preview.1",
"engines": {
"node": ">=20.0.0"
},

@@ -13,7 +13,7 @@
"url": "git+https://github.com/QwenLM/qwen-code.git"
},
"config": {
"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.0"
"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.2-preview.1"
},
"scripts": {
"start": "cross-env node scripts/start.js",

@@ -1,6 +1,6 @@
{
"name": "@qwen-code/qwen-code",
"version": "0.7.0",
"version": "0.7.2-preview.1",
"description": "Qwen Code",
"repository": {
"type": "git",

@@ -33,7 +33,7 @@
"dist"
],
"config": {
"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.0"
"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.2-preview.1"
},
"dependencies": {
"@google/genai": "1.30.0",

@@ -70,6 +70,13 @@ export class AgentSideConnection implements Client {
const validatedParams = schema.setModeRequestSchema.parse(params);
return agent.setMode(validatedParams);
}
case schema.AGENT_METHODS.session_set_model: {
if (!agent.setModel) {
throw RequestError.methodNotFound();
}
const validatedParams = schema.setModelRequestSchema.parse(params);
return agent.setModel(validatedParams);
}
default:
throw RequestError.methodNotFound(method);
}
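The new `session/set_model` case follows the same pattern as `session/set_mode`: the optional agent method is checked first, a missing implementation is reported as "method not found", and only then are the params validated and forwarded. The sketch below reproduces that pattern in isolation. `MiniAgent`, `methodNotFound`, `parseSetModelRequest` and `dispatch` are hypothetical stand-ins for the real `Agent` interface, `RequestError.methodNotFound()` and the zod schema; the hand-written check replaces `setModelRequestSchema.parse`.

```typescript
// Hypothetical stand-ins for the ACP request/response types.
interface SetModelRequest {
  sessionId: string;
  modelId: string;
}
interface SetModelResponse {
  modelId: string;
}
interface MiniAgent {
  // Optional, like `setModel?` on the real Agent interface.
  setModel?(params: SetModelRequest): Promise<SetModelResponse>;
}

function methodNotFound(method: string): Error {
  return new Error(`Method not found: ${method}`);
}

// Minimal param check standing in for setModelRequestSchema.parse().
function parseSetModelRequest(params: unknown): SetModelRequest {
  const p = params as Partial<Record<keyof SetModelRequest, unknown>> | null;
  if (!p || typeof p.sessionId !== 'string' || typeof p.modelId !== 'string') {
    throw new Error('Invalid params');
  }
  return { sessionId: p.sessionId, modelId: p.modelId };
}

async function dispatch(
  agent: MiniAgent,
  method: string,
  params: unknown,
): Promise<unknown> {
  switch (method) {
    case 'session/set_model': {
      // Reject before validating params if the agent lacks the method.
      if (!agent.setModel) throw methodNotFound(method);
      return agent.setModel(parseSetModelRequest(params));
    }
    default:
      throw methodNotFound(method);
  }
}
```

Keeping `setModel` optional on the interface lets agents that do not support model switching still satisfy the contract; clients then get a protocol-level error instead of a crash.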

@@ -408,4 +415,5 @@ export interface Agent {
prompt(params: schema.PromptRequest): Promise<schema.PromptResponse>;
cancel(params: schema.CancelNotification): Promise<void>;
setMode?(params: schema.SetModeRequest): Promise<schema.SetModeResponse>;
setModel?(params: schema.SetModelRequest): Promise<schema.SetModelResponse>;
}

@@ -165,30 +165,11 @@ class GeminiAgent {
this.setupFileSystem(config);

const session = await this.createAndStoreSession(config);
const configuredModel = (
config.getModel() ||
this.config.getModel() ||
''
).trim();
const modelId = configuredModel || 'default';
const modelName = configuredModel || modelId;
const availableModels = this.buildAvailableModels(config);

return {
sessionId: session.getId(),
models: {
currentModelId: modelId,
availableModels: [
{
modelId,
name: modelName,
description: null,
_meta: {
contextLimit: tokenLimit(modelId),
},
},
],
_meta: null,
},
models: availableModels,
};
}

@@ -305,15 +286,29 @@ class GeminiAgent {
async setMode(params: acp.SetModeRequest): Promise<acp.SetModeResponse> {
const session = this.sessions.get(params.sessionId);
if (!session) {
throw new Error(`Session not found: ${params.sessionId}`);
throw acp.RequestError.invalidParams(
`Session not found for id: ${params.sessionId}`,
);
}
return session.setMode(params);
}

async setModel(params: acp.SetModelRequest): Promise<acp.SetModelResponse> {
const session = this.sessions.get(params.sessionId);
if (!session) {
throw acp.RequestError.invalidParams(
`Session not found for id: ${params.sessionId}`,
);
}
return session.setModel(params);
}

private async ensureAuthenticated(config: Config): Promise<void> {
const selectedType = this.settings.merged.security?.auth?.selectedType;
if (!selectedType) {
throw acp.RequestError.authRequired('No Selected Type');
throw acp.RequestError.authRequired(
'Use Qwen Code CLI to authenticate first.',
);
}

try {

@@ -382,4 +377,43 @@ class GeminiAgent {

return session;
}

private buildAvailableModels(
config: Config,
): acp.NewSessionResponse['models'] {
const currentModelId = (
config.getModel() ||
this.config.getModel() ||
''
).trim();
const availableModels = config.getAvailableModels();

const mappedAvailableModels = availableModels.map((model) => ({
modelId: model.id,
name: model.label,
description: model.description ?? null,
_meta: {
contextLimit: tokenLimit(model.id),
},
}));

if (
currentModelId &&
!mappedAvailableModels.some((model) => model.modelId === currentModelId)
) {
mappedAvailableModels.unshift({
modelId: currentModelId,
name: currentModelId,
description: null,
_meta: {
contextLimit: tokenLimit(currentModelId),
},
});
}

return {
currentModelId,
availableModels: mappedAvailableModels,
};
}
}
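The core of `buildAvailableModels` is a small rule: map the configured models to ACP model entries, and if the session's current model is not among them, prepend it so the client can still display it as selected. The sketch below isolates that rule with simplified types. `withCurrentModel`, `AvailableModel` and `ModelInfo` are hypothetical names; the real method also attaches `_meta.contextLimit` via `tokenLimit()` and reads the current ID from two configs, both of which this sketch leaves out.

```typescript
// Simplified input and output shapes (hypothetical).
interface AvailableModel {
  id: string;
  label: string;
  description?: string;
}
interface ModelInfo {
  modelId: string;
  name: string;
  description: string | null;
}

// Mirrors the prepend-if-missing rule from buildAvailableModels.
function withCurrentModel(
  currentModelId: string,
  available: readonly AvailableModel[],
): { currentModelId: string; availableModels: ModelInfo[] } {
  const current = currentModelId.trim();
  const mapped: ModelInfo[] = available.map((m) => ({
    modelId: m.id,
    name: m.label,
    description: m.description ?? null,
  }));
  // A blank current ID is never added, and a listed one is not duplicated.
  if (current && !mapped.some((m) => m.modelId === current)) {
    mapped.unshift({ modelId: current, name: current, description: null });
  }
  return { currentModelId: current, availableModels: mapped };
}
```

Prepending rather than appending keeps an out-of-list model (for example, one set directly in settings) at the top of the picker, next to the models the config knows about.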

@@ -15,6 +15,7 @@ export const AGENT_METHODS = {
session_prompt: 'session/prompt',
session_list: 'session/list',
session_set_mode: 'session/set_mode',
session_set_model: 'session/set_model',
};

export const CLIENT_METHODS = {

@@ -266,6 +267,18 @@ export const modelInfoSchema = z.object({
name: z.string(),
});

export const setModelRequestSchema = z.object({
sessionId: z.string(),
modelId: z.string(),
});

export const setModelResponseSchema = z.object({
modelId: z.string(),
});

export type SetModelRequest = z.infer<typeof setModelRequestSchema>;
export type SetModelResponse = z.infer<typeof setModelResponseSchema>;

export const sessionModelStateSchema = z.object({
_meta: acpMetaSchema,
availableModels: z.array(modelInfoSchema),

@@ -592,6 +605,7 @@ export const agentResponseSchema = z.union([
promptResponseSchema,
listSessionsResponseSchema,
setModeResponseSchema,
setModelResponseSchema,
]);

export const requestPermissionRequestSchema = z.object({

@@ -624,6 +638,7 @@ export const agentRequestSchema = z.union([
promptRequestSchema,
listSessionsRequestSchema,
setModeRequestSchema,
setModelRequestSchema,
]);

export const agentNotificationSchema = sessionNotificationSchema;
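ACP messages are JSON-RPC 2.0, so a client using the new method would send a `session/set_model` request carrying the two fields required by `setModelRequestSchema`, and would expect a result shaped like `setModelResponseSchema`. The sketch below builds such a request and reads the reply. The helper names, the numeric `id`, and the sample values are assumptions for illustration, and the stdio transport is left out.

```typescript
interface JsonRpcRequest<P> {
  jsonrpc: '2.0';
  id: number;
  method: string;
  params: P;
}

// Fields required by setModelRequestSchema.
interface SetModelParams {
  sessionId: string;
  modelId: string;
}

// Hypothetical helper: build a session/set_model request.
function buildSetModelRequest(
  id: number,
  params: SetModelParams,
): JsonRpcRequest<SetModelParams> {
  return { jsonrpc: '2.0', id, method: 'session/set_model', params };
}

// Hypothetical helper: extract modelId from a reply matching
// setModelResponseSchema ({ modelId: string }), or throw on an error reply.
function readSetModelResult(raw: string): string {
  const msg = JSON.parse(raw) as {
    result?: { modelId?: unknown };
    error?: { code: number; message: string };
  };
  if (msg.error) throw new Error(`${msg.error.code}: ${msg.error.message}`);
  const modelId = msg.result?.modelId;
  if (typeof modelId !== 'string') {
    throw new Error('Response does not match { modelId: string }');
  }
  return modelId;
}
```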

174

packages/cli/src/acp-integration/session/Session.test.ts

Normal file

@@ -0,0 +1,174 @@
/**
 * @license
 * Copyright 2025 Qwen
 * SPDX-License-Identifier: Apache-2.0
 */

import { describe, it, expect, vi, beforeEach } from 'vitest';
import { Session } from './Session.js';
import type { Config, GeminiChat } from '@qwen-code/qwen-code-core';
import { ApprovalMode } from '@qwen-code/qwen-code-core';
import type * as acp from '../acp.js';
import type { LoadedSettings } from '../../config/settings.js';
import * as nonInteractiveCliCommands from '../../nonInteractiveCliCommands.js';

vi.mock('../../nonInteractiveCliCommands.js', () => ({
  getAvailableCommands: vi.fn(),
  handleSlashCommand: vi.fn(),
}));

describe('Session', () => {
  let mockChat: GeminiChat;
  let mockConfig: Config;
  let mockClient: acp.Client;
  let mockSettings: LoadedSettings;
  let session: Session;
  let currentModel: string;
  let setModelSpy: ReturnType<typeof vi.fn>;
  let getAvailableCommandsSpy: ReturnType<typeof vi.fn>;

  beforeEach(() => {
    currentModel = 'qwen3-code-plus';
    setModelSpy = vi.fn().mockImplementation(async (modelId: string) => {
      currentModel = modelId;
    });

    mockChat = {
      sendMessageStream: vi.fn(),
      addHistory: vi.fn(),
    } as unknown as GeminiChat;

    mockConfig = {
      setApprovalMode: vi.fn(),
      setModel: setModelSpy,
      getModel: vi.fn().mockImplementation(() => currentModel),
    } as unknown as Config;

    mockClient = {
      sessionUpdate: vi.fn().mockResolvedValue(undefined),
      requestPermission: vi.fn().mockResolvedValue({
        outcome: { outcome: 'selected', optionId: 'proceed_once' },
      }),
      sendCustomNotification: vi.fn().mockResolvedValue(undefined),
    } as unknown as acp.Client;

    mockSettings = {
      merged: {},
    } as LoadedSettings;

    getAvailableCommandsSpy = vi.mocked(nonInteractiveCliCommands)
      .getAvailableCommands as unknown as ReturnType<typeof vi.fn>;
    getAvailableCommandsSpy.mockResolvedValue([]);

    session = new Session(
      'test-session-id',
      mockChat,
      mockConfig,
      mockClient,
      mockSettings,
    );
  });

  describe('setMode', () => {
    it.each([
      ['plan', ApprovalMode.PLAN],
      ['default', ApprovalMode.DEFAULT],
      ['auto-edit', ApprovalMode.AUTO_EDIT],
      ['yolo', ApprovalMode.YOLO],
    ] as const)('maps %s mode', async (modeId, expected) => {
      const result = await session.setMode({
        sessionId: 'test-session-id',
        modeId,
      });

      expect(mockConfig.setApprovalMode).toHaveBeenCalledWith(expected);
      expect(result).toEqual({ modeId });
    });
  });

  describe('setModel', () => {
    it('sets model via config and returns current model', async () => {
      const result = await session.setModel({
        sessionId: 'test-session-id',
        modelId: ' qwen3-coder-plus ',
      });

      expect(mockConfig.setModel).toHaveBeenCalledWith('qwen3-coder-plus', {
        reason: 'user_request_acp',
        context: 'session/set_model',
      });
      expect(mockConfig.getModel).toHaveBeenCalled();
      expect(result).toEqual({ modelId: 'qwen3-coder-plus' });
    });

    it('rejects empty/whitespace model IDs', async () => {
      await expect(
        session.setModel({
          sessionId: 'test-session-id',
          modelId: ' ',
        }),
      ).rejects.toThrow('Invalid params');

      expect(mockConfig.setModel).not.toHaveBeenCalled();
    });

    it('propagates errors from config.setModel', async () => {
      const configError = new Error('Invalid model');
      setModelSpy.mockRejectedValueOnce(configError);

      await expect(
        session.setModel({
          sessionId: 'test-session-id',
          modelId: 'invalid-model',
        }),
      ).rejects.toThrow('Invalid model');
    });
  });

  describe('sendAvailableCommandsUpdate', () => {
    it('sends available_commands_update from getAvailableCommands()', async () => {
      getAvailableCommandsSpy.mockResolvedValueOnce([
        {
          name: 'init',
          description: 'Initialize project context',
        },
      ]);

      await session.sendAvailableCommandsUpdate();

      expect(getAvailableCommandsSpy).toHaveBeenCalledWith(
        mockConfig,
        expect.any(AbortSignal),
      );
      expect(mockClient.sessionUpdate).toHaveBeenCalledWith({
        sessionId: 'test-session-id',
        update: {
          sessionUpdate: 'available_commands_update',
          availableCommands: [
            {
              name: 'init',
              description: 'Initialize project context',
              input: null,
            },
          ],
        },
      });
    });

    it('swallows errors and does not throw', async () => {
      const consoleErrorSpy = vi
        .spyOn(console, 'error')
        .mockImplementation(() => undefined);
      getAvailableCommandsSpy.mockRejectedValueOnce(
        new Error('Command discovery failed'),
      );

      await expect(
        session.sendAvailableCommandsUpdate(),
      ).resolves.toBeUndefined();
      expect(mockClient.sessionUpdate).not.toHaveBeenCalled();
      expect(consoleErrorSpy).toHaveBeenCalled();
      consoleErrorSpy.mockRestore();
    });
  });
});
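Read together, the `setModel` tests pin down the expected contract of `Session.setModel`. The incoming `modelId` is trimmed, and a blank ID is rejected with an "Invalid params" error before the config is touched. Otherwise the config is updated with `{ reason: 'user_request_acp', context: 'session/set_model' }`, any error from it propagates, and the response reports whatever `getModel()` returns afterwards. The sketch below implements only that contract against a minimal config interface. `ModelConfig` and `setSessionModel` are hypothetical, and the sketch throws a plain `Error` where the real code may throw an ACP `RequestError`.

```typescript
// Hypothetical minimal view of the config methods the tests touch.
interface ModelConfig {
  setModel(
    modelId: string,
    metadata: { reason: string; context: string },
  ): Promise<void>;
  getModel(): string;
}

async function setSessionModel(
  config: ModelConfig,
  params: { sessionId: string; modelId: string },
): Promise<{ modelId: string }> {
  const modelId = params.modelId.trim();
  if (!modelId) {
    // Reject before calling into the config, as the tests expect.
    throw new Error('Invalid params: modelId must not be empty');
  }
  // Errors from config.setModel propagate unchanged to the caller.
  await config.setModel(modelId, {
    reason: 'user_request_acp',
    context: 'session/set_model',
  });
  // Report the model the config actually ended up with.
  return { modelId: config.getModel() };
}
```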

@@ -52,6 +52,8 @@ import type {
AvailableCommandsUpdate,