mirror of

https://github.com/QwenLM/qwen-code.git

synced 2026-01-18 23:06:19 +00:00

Compare commits

112 Commits

mingholy/f

...

feat/suppo

| Author | SHA1 | Date | |
|---|---|---|---|
| | 7475ffcbeb | | |
| | ccd51a6a00 | | |
| | a7e14255c3 | | |
| | 886f914fb3 | | |
| | 90365af2f8 | | |
| | cbef5ffd89 | | |
| | 5e80e80387 | | |
| | 985f65f8fa | | |
| | 9b9c5fadd5 | | |
| | 372c67cad4 | | |
| | af3864b5de | | |
| | 1e3791f30a | | |
| | 9bf626d051 | | |
| | a35af6550f | | |
| | d6607e134e | | |
| | 9024a41723 | | |
| | bde056b62e | | |
| | ff5ea3c6d7 | | |
| | 0faaac8fa4 | | |
| | c2e62b9122 | | |
| | f54b62cda3 | | |
| | 9521987a09 | | |
| | d20f2a41a2 | | |
| | e3eccb5987 | | |
| | 22916457cd | | |
| | 28bc4e6467 | | |
| | 50bf65b10b | | |
| | 47c8bc5303 | | |
| | e70ecdf3a8 | | |
| | 117af05122 | | |
| | 557e6397bb | | |
| | f762a62a2e | | |
| | ca12772a28 | | |
| | cec4b831b6 | | |
| | 74bf72877d | | |
| | b60ae42d10 | | |
| | 54fd4c22a9 | | |
| | f3b7c63cd1 | | |
| | e4dee3a2b2 | | |
| | 996b9df947 | | |
| | 64291db926 | | |
| | a8e3b9ebe7 | | |
| | 5cfc9f4686 | | |
| | 97497457a8 | | |
| | 85473210e5 | | |
| | c0c94bd4fc | | |
| | 8111511a89 | | |
| | a8eb858f99 | | |
| | 52d6d1ff13 | | |
| | c845049d26 | | |
| | 299b7de030 | | |
| | b93bb8bff6 | | |
| | adb53a6dc6 | | |
| | 09196c6e19 | | |
| | 4bd01d592b | | |
| | 6917031128 | | |
| | b33525183f | | |
| | 1aed5ce858 | | |
| | bad5b0485d | | |
| | 5a6e5bb452 | | |
| | 5f8e1ebc94 | | |
| | 9670456a56 | | |
| | 4c186e7c92 | | |
| | 2f6b0b233a | | |
| | 9a8ce605c5 | | |
| | afc693a4ab | | |
| | 7173cba844 | | |
| | ec8cccafd7 | | |
| | 8c56b612fb | | |
| | 7d40e1470c | | |
| | b0e561ca73 | | |
| | 563d68ad5b | | |
| | 82c524f87d | | |
| | df75aa06b6 | | |
| | 8ea9871d23 | | |
| | 097482910e | | |
| | 9b78c17638 | | |
| | bde31d1261 | | |
| | 2d1934bf2f | | |
| | 7f15256eba | | |
| | 587fc82fbc | | |
| | cba9c424eb | | |
| | 1b7418f91f | | |
| | b7828ac765 | | |
| | 8705f734d0 | | |
| | 0bd17a2406 | | |
| | 59be5163fd | | |
| | 95efe89ac0 | | |
| | 6714f9ce3c | | |
| | 155d1f9518 | | |
| | f776075aa8 | | |
| | d86903ced5 | | |
| | a47bdc0b06 | | |
| | 0e769e100b | | |
| | b5bcc07223 | | |
| | 9653dc90d5 | | |
| | 052337861b | | |
| | 0a0ab64da0 | | |
| | 8a15017593 | | |
| | f8aecb2631 | | |
| | 3d059b71de | | |
| | 361492247e | | |
| | 87dc618a21 | | |
| | 94a5d828bd | | |
| | 824ca056a4 | | |
| | 4f664d00ac | | |
| | 7fdebe8fe6 | | |
| | fd41309ed2 | | |
| | 48bc0f35d7 | | |
| | e30c2dbe23 | | |
| | e9204ecba9 | | |
| | f24bda3d7b | | |

3

.github/CODEOWNERS

vendored

Normal file


@@ -0,0 +1,3 @@

|

||||

* @tanzhenxin @DennisYu07 @gwinthis @LaZzyMan @pomelo-nwu @Mingholy

|

||||

# SDK TypeScript package changes require review from Mingholy

|

||||

packages/sdk-typescript/** @Mingholy

|

||||

17

.github/workflows/release-sdk.yml

vendored


@@ -241,7 +241,7 @@ jobs:

|

||||

${{ steps.vars.outputs.is_dry_run == 'false' && steps.vars.outputs.is_nightly == 'false' && steps.vars.outputs.is_preview == 'false' }}

|

||||

id: 'pr'

|

||||

env:

|

||||

GITHUB_TOKEN: '${{ secrets.GITHUB_TOKEN }}'

|

||||

GITHUB_TOKEN: '${{ secrets.CI_BOT_PAT }}'

|

||||

RELEASE_BRANCH: '${{ steps.release_branch.outputs.BRANCH_NAME }}'

|

||||

RELEASE_TAG: '${{ steps.version.outputs.RELEASE_TAG }}'

|

||||

run: |-

|

||||

@@ -258,26 +258,15 @@ jobs:

|

||||

|

||||

echo "PR_URL=${pr_url}" >> "${GITHUB_OUTPUT}"

|

||||

|

||||

- name: 'Wait for CI checks to complete'

|

||||

if: |-

|

||||

${{ steps.vars.outputs.is_dry_run == 'false' && steps.vars.outputs.is_nightly == 'false' && steps.vars.outputs.is_preview == 'false' }}

|

||||

env:

|

||||

GITHUB_TOKEN: '${{ secrets.GITHUB_TOKEN }}'

|

||||

PR_URL: '${{ steps.pr.outputs.PR_URL }}'

|

||||

run: |-

|

||||

set -euo pipefail

|

||||

echo "Waiting for CI checks to complete..."

|

||||

gh pr checks "${PR_URL}" --watch --interval 30

|

||||

|

||||

- name: 'Enable auto-merge for release PR'

|

||||

if: |-

|

||||

${{ steps.vars.outputs.is_dry_run == 'false' && steps.vars.outputs.is_nightly == 'false' && steps.vars.outputs.is_preview == 'false' }}

|

||||

env:

|

||||

GITHUB_TOKEN: '${{ secrets.GITHUB_TOKEN }}'

|

||||

GITHUB_TOKEN: '${{ secrets.CI_BOT_PAT }}'

|

||||

PR_URL: '${{ steps.pr.outputs.PR_URL }}'

|

||||

run: |-

|

||||

set -euo pipefail

|

||||

gh pr merge "${PR_URL}" --merge --auto

|

||||

gh pr merge "${PR_URL}" --merge --auto --delete-branch

|

||||

|

||||

- name: 'Create Issue on Failure'

|

||||

if: |-

|

||||

|

||||

10

README.md


@@ -25,7 +25,7 @@ Qwen Code is an open-source AI agent for the terminal, optimized for [Qwen3-Code

|

||||

- **OpenAI-compatible, OAuth free tier**: use an OpenAI-compatible API, or sign in with Qwen OAuth to get 2,000 free requests/day.

|

||||

- **Open-source, co-evolving**: both the framework and the Qwen3-Coder model are open-source—and they ship and evolve together.

|

||||

- **Agentic workflow, feature-rich**: rich built-in tools (Skills, SubAgents, Plan Mode) for a full agentic workflow and a Claude Code-like experience.

|

||||

- **Terminal-first, IDE-friendly**: built for developers who live in the command line, with optional integration for VS Code and Zed.

|

||||

- **Terminal-first, IDE-friendly**: built for developers who live in the command line, with optional integration for VS Code, Zed, and JetBrains IDEs.

|

||||

|

||||

## Installation

|

||||

|

||||

@@ -137,10 +137,11 @@ Use `-p` to run Qwen Code without the interactive UI—ideal for scripts, automa

|

||||

|

||||

#### IDE integration

|

||||

|

||||

Use Qwen Code inside your editor (VS Code and Zed):

|

||||

Use Qwen Code inside your editor (VS Code, Zed, and JetBrains IDEs):

|

||||

|

||||

- [Use in VS Code](https://qwenlm.github.io/qwen-code-docs/en/users/integration-vscode/)

|

||||

- [Use in Zed](https://qwenlm.github.io/qwen-code-docs/en/users/integration-zed/)

|

||||

- [Use in JetBrains IDEs](https://qwenlm.github.io/qwen-code-docs/en/users/integration-jetbrains/)

|

||||

|

||||

#### TypeScript SDK

|

||||

|

||||

@@ -200,6 +201,11 @@ If you encounter issues, check the [troubleshooting guide](https://qwenlm.github

|

||||

|

||||

To report a bug from within the CLI, run `/bug` and include a short title and repro steps.

|

||||

|

||||

## Connect with Us

|

||||

|

||||

- Discord: https://discord.gg/ycKBjdNd

|

||||

- Dingtalk: https://qr.dingtalk.com/action/joingroup?code=v1,k1,+FX6Gf/ZDlTahTIRi8AEQhIaBlqykA0j+eBKKdhLeAE=&_dt_no_comment=1&origin=1

|

||||

|

||||

## Acknowledgments

|

||||

|

||||

This project is based on [Google Gemini CLI](https://github.com/google-gemini/gemini-cli). We acknowledge and appreciate the excellent work of the Gemini CLI team. Our main contribution focuses on parser-level adaptations to better support Qwen-Coder models.

|

||||

|

||||

@@ -10,4 +10,5 @@ export default {

|

||||

'web-search': 'Web Search',

|

||||

memory: 'Memory',

|

||||

'mcp-server': 'MCP Servers',

|

||||

sandbox: 'Sandboxing',

|

||||

};

|

||||

|

||||

90

docs/developers/tools/sandbox.md

Normal file


@@ -0,0 +1,90 @@

|

||||

## Customizing the sandbox environment (Docker/Podman)

|

||||

|

||||

### `BUILD_SANDBOX` is currently not supported when Qwen Code is installed from the npm package

|

||||

|

||||

1. To build a custom sandbox, you need to access the build scripts (scripts/build_sandbox.js) in the source code repository.

|

||||

2. These build scripts are not included in the packages released by npm.

|

||||

3. The code contains hard-coded path checks that explicitly reject build requests from non-source code environments.

|

||||

|

||||

If you need extra tools inside the container (e.g., `git`, `python`, `rg`), create a custom Dockerfile. The steps are as follows:

|

||||

|

||||

#### 1. Clone the Qwen Code repository: https://github.com/QwenLM/qwen-code.git

|

||||

|

||||

#### 2. Run the following commands from the root of the source repository

|

||||

|

||||

```bash

|

||||

# 1. First, install the dependencies of the project

|

||||

npm install

|

||||

|

||||

# 2. Build the Qwen Code project

|

||||

npm run build

|

||||

|

||||

# 3. Verify that the dist directory has been generated

|

||||

ls -la packages/cli/dist/

|

||||

|

||||

# 4. Create a global link in the CLI package directory

|

||||

cd packages/cli

|

||||

npm link

|

||||

|

||||

# 5. Verify the link (it should now point to the source code)

|

||||

which qwen

|

||||

# Expected output: /xxx/xxx/.nvm/versions/node/v24.11.1/bin/qwen

|

||||

# Or similar paths, but it should be a symbolic link

|

||||

|

||||

# 6. Inspect the symbolic link to see which source code path it points to

|

||||

ls -la $(dirname $(which qwen))/../lib/node_modules/@qwen-code/qwen-code

|

||||

# It should show that this is a symbolic link pointing to your source code directory

|

||||

|

||||

# 7. Check the qwen version

|

||||

qwen -v

|

||||

# npm link overwrites the global qwen. Because both may report the same version number, consider uninstalling the global CLI first so you can tell them apart

|

||||

```

|

||||

|

||||

#### 3. Create your sandbox Dockerfile in the root directory of your own project

|

||||

|

||||

- Path: `.qwen/sandbox.Dockerfile`

|

||||

|

||||

- Official image registry: https://github.com/QwenLM/qwen-code/pkgs/container/qwen-code

|

||||

|

||||

```dockerfile

|

||||

# Based on the official Qwen sandbox image (pinning an explicit version is recommended)

|

||||

FROM ghcr.io/qwenlm/qwen-code:sha-570ec43

|

||||

# Add your extra tools here

|

||||

RUN apt-get update && apt-get install -y \

|

||||

git \

|

||||

python3 \

|

||||

ripgrep

|

||||

```

|

||||

|

||||

#### 4. Build the sandbox image for the first time from the root directory of your project

|

||||

|

||||

```bash

|

||||

GEMINI_SANDBOX=docker BUILD_SANDBOX=1 qwen -s

|

||||

# Check that the sandbox version reported at launch matches the version of your custom image; if they match, the startup succeeded

|

||||

```

|

||||

|

||||

This builds a project-specific image based on the default sandbox image.

|

||||

|

||||

#### Remove npm link

|

||||

|

||||

- To restore the official qwen CLI, remove the npm link:

|

||||

|

||||

```bash

|

||||

# Method 1: Unlink globally

|

||||

npm unlink -g @qwen-code/qwen-code

|

||||

|

||||

# Method 2: Remove it in the packages/cli directory

|

||||

cd packages/cli

|

||||

npm unlink

|

||||

|

||||

# Verify that the link has been removed

|

||||

which qwen

|

||||

# It should display "qwen not found"

|

||||

|

||||

# Reinstall the global version if necessary

|

||||

npm install -g @qwen-code/qwen-code

|

||||

|

||||

# Verify that the official CLI has been restored

|

||||

which qwen

|

||||

qwen --version

|

||||

```

|

||||

@@ -12,6 +12,7 @@ export default {

|

||||

},

|

||||

'integration-vscode': 'Visual Studio Code',

|

||||

'integration-zed': 'Zed IDE',

|

||||

'integration-jetbrains': 'JetBrains IDEs',

|

||||

'integration-github-action': 'Github Actions',

|

||||

'Code with Qwen Code': {

|

||||

type: 'separator',

|

||||

|

||||

@@ -104,7 +104,7 @@ Settings are organized into categories. All settings should be placed within the

|

||||

| `model.name` | string | The Qwen model to use for conversations. | `undefined` |

|

||||

| `model.maxSessionTurns` | number | Maximum number of user/model/tool turns to keep in a session. -1 means unlimited. | `-1` |

|

||||

| `model.summarizeToolOutput` | object | Enables or disables the summarization of tool output. You can specify the token budget for the summarization using the `tokenBudget` setting. Note: Currently only the `run_shell_command` tool is supported. For example `{"run_shell_command": {"tokenBudget": 2000}}` | `undefined` |

|

||||

| `model.generationConfig` | object | Advanced overrides passed to the underlying content generator. Supports request controls such as `timeout`, `maxRetries`, and `disableCacheControl`, along with fine-tuning knobs under `samplingParams` (for example `temperature`, `top_p`, `max_tokens`). Leave unset to rely on provider defaults. | `undefined` |

|

||||

| `model.generationConfig` | object | Advanced overrides passed to the underlying content generator. Supports request controls such as `timeout`, `maxRetries`, `disableCacheControl`, and `customHeaders` (custom HTTP headers for API requests), along with fine-tuning knobs under `samplingParams` (for example `temperature`, `top_p`, `max_tokens`). Leave unset to rely on provider defaults. | `undefined` |

|

||||

| `model.chatCompression.contextPercentageThreshold` | number | Sets the threshold for chat history compression as a percentage of the model's total token limit. This is a value between 0 and 1 that applies to both automatic compression and the manual `/compress` command. For example, a value of `0.6` will trigger compression when the chat history exceeds 60% of the token limit. Use `0` to disable compression entirely. | `0.7` |

|

||||

| `model.skipNextSpeakerCheck` | boolean | Skip the next speaker check. | `false` |

|

||||

| `model.skipLoopDetection` | boolean | Disables loop detection checks. Loop detection prevents infinite loops in AI responses but can generate false positives that interrupt legitimate workflows. Enable this option if you experience frequent false positive loop detection interruptions. | `false` |
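The trigger rule described for `model.chatCompression.contextPercentageThreshold` can be illustrated with a minimal TypeScript sketch. The function name `shouldCompress` and its parameters are hypothetical and chosen for illustration only; the actual check in Qwen Code may be structured differently.

```typescript
// Hypothetical helper (not from the Qwen Code source): decides whether the
// chat history is large enough to trigger compression.
// `threshold` is a fraction between 0 and 1; 0 disables compression.
function shouldCompress(
  historyTokens: number,
  modelTokenLimit: number,
  threshold: number = 0.7, // documented default
): boolean {
  if (threshold <= 0) {
    return false; // a threshold of 0 disables compression entirely
  }
  return historyTokens > threshold * modelTokenLimit;
}

// With a threshold of 0.6 and a 100,000-token limit, 61,000 tokens triggers
// compression while 50,000 tokens does not.
const overLimit = shouldCompress(61_000, 100_000, 0.6);
const underLimit = shouldCompress(50_000, 100_000, 0.6);
```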

|

||||

@@ -114,12 +114,16 @@ Settings are organized into categories. All settings should be placed within the

|

||||

|

||||

**Example model.generationConfig:**

|

||||

|

||||

```

|

||||

```json

|

||||

{

|

||||

"model": {

|

||||

"generationConfig": {

|

||||

"timeout": 60000,

|

||||

"disableCacheControl": false,

|

||||

"customHeaders": {

|

||||

"X-Request-ID": "req-123",

|

||||

"X-User-ID": "user-456"

|

||||

},

|

||||

"samplingParams": {

|

||||

"temperature": 0.2,

|

||||

"top_p": 0.8,

|

||||

@@ -130,6 +134,8 @@ Settings are organized into categories. All settings should be placed within the

|

||||

}

|

||||

```

|

||||

|

||||

The `customHeaders` field allows you to add custom HTTP headers to all API requests. This is useful for request tracing, monitoring, API gateway routing, or when different models require different headers. If `customHeaders` is defined in `modelProviders[].generationConfig.customHeaders`, it will be used directly; otherwise, headers from `model.generationConfig.customHeaders` will be used. No merging occurs between the two levels.
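As a rough illustration of the "no merging" rule, the following TypeScript sketch selects headers from one level only. The names `HeaderMap` and `selectCustomHeaders` are hypothetical; this is a sketch of the documented behavior, not the project's implementation.

```typescript
type HeaderMap = Record<string, string>;

// Hypothetical helper: if the provider level defines customHeaders, they are
// used as-is; otherwise the global model.generationConfig headers are used.
// The two levels are never merged.
function selectCustomHeaders(
  providerHeaders: HeaderMap | undefined,
  globalHeaders: HeaderMap | undefined,
): HeaderMap {
  return { ...(providerHeaders ?? globalHeaders ?? {}) };
}

const globalHeaders: HeaderMap = {
  "X-Request-ID": "req-123",
  "X-User-ID": "user-456",
};
const providerHeaders: HeaderMap = { "X-Model-Version": "v1.0" };

// Provider defines customHeaders: only the provider headers are sent.
const withProvider = selectCustomHeaders(providerHeaders, globalHeaders);
// Provider omits customHeaders: the global headers are sent.
const withoutProvider = selectCustomHeaders(undefined, globalHeaders);
```

Note that under this reading a provider that sets `customHeaders` to an empty object still counts as "defined", so no global headers would be sent for it.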

|

||||

|

||||

**model.openAILoggingDir examples:**

|

||||

|

||||

- `"~/qwen-logs"` - Logs to `~/qwen-logs` directory

|

||||

@@ -154,6 +160,10 @@ Use `modelProviders` to declare curated model lists per auth type that the `/mod

|

||||

"generationConfig": {

|

||||

"timeout": 60000,

|

||||

"maxRetries": 3,

|

||||

"customHeaders": {

|

||||

"X-Model-Version": "v1.0",

|

||||

"X-Request-Priority": "high"

|

||||

},

|

||||

"samplingParams": { "temperature": 0.2 }

|

||||

}

|

||||

}

|

||||

@@ -215,7 +225,7 @@ Per-field precedence for `generationConfig`:

|

||||

3. `settings.model.generationConfig`

|

||||

4. Content-generator defaults (`getDefaultGenerationConfig` for OpenAI, `getParameterValue` for Gemini, etc.)

|

||||

|

||||

`samplingParams` is treated atomically; provider values replace the entire object. Defaults from the content generator apply last so each provider retains its tuned baseline.

|

||||

`samplingParams` and `customHeaders` are both treated atomically; provider values replace the entire object. If `modelProviders[].generationConfig` defines these fields, they are used directly; otherwise, values from `model.generationConfig` are used. No merging occurs between provider and global configuration levels. Defaults from the content generator apply last so each provider retains its tuned baseline.
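The per-field precedence can be sketched in TypeScript as follows. This is a simplified model under stated assumptions: `GenerationConfig`, `firstDefined`, and `resolveGenerationConfig` are hypothetical names, only four fields are modelled, and the layers are simply passed in from highest to lowest precedence.

```typescript
interface GenerationConfig {
  timeout?: number;
  maxRetries?: number;
  customHeaders?: Record<string, string>;
  samplingParams?: Record<string, number>;
}

// Returns the first value that is not undefined, or undefined if none is set.
function firstDefined<T>(values: Array<T | undefined>): T | undefined {
  for (const value of values) {
    if (value !== undefined) {
      return value;
    }
  }
  return undefined;
}

// Hypothetical resolver: `layers` is ordered from highest to lowest precedence.
// Each field is taken whole from the first layer that defines it, so object
// fields such as samplingParams are replaced, never merged key by key.
function resolveGenerationConfig(layers: GenerationConfig[]): GenerationConfig {
  return {
    timeout: firstDefined(layers.map((layer) => layer.timeout)),
    maxRetries: firstDefined(layers.map((layer) => layer.maxRetries)),
    customHeaders: firstDefined(layers.map((layer) => layer.customHeaders)),
    samplingParams: firstDefined(layers.map((layer) => layer.samplingParams)),
  };
}

const providerConfig: GenerationConfig = {
  samplingParams: { temperature: 0.2 },
};
const globalConfig: GenerationConfig = {
  timeout: 60000,
  samplingParams: { temperature: 0.7, top_p: 0.8 },
};

const resolved = resolveGenerationConfig([providerConfig, globalConfig]);
```

In this example `timeout` falls through to the global layer, while `samplingParams` comes entirely from the provider layer, so the global `top_p` is not inherited.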

|

||||

|

||||

##### Selection persistence and recommendations

|

||||

|

||||

@@ -470,7 +480,7 @@ Arguments passed directly when running the CLI can override other configurations

|

||||

| `--telemetry-otlp-protocol` | | Sets the OTLP protocol for telemetry (`grpc` or `http`). | | Defaults to `grpc`. See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--telemetry-log-prompts` | | Enables logging of prompts for telemetry. | | See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--checkpointing` | | Enables [checkpointing](../features/checkpointing). | | |

|

||||

| `--acp` | | Enables ACP mode (Agent Control Protocol). Useful for IDE/editor integrations like [Zed](../integration-zed). | | Stable. Replaces the deprecated `--experimental-acp` flag. |

|

||||

| `--acp` | | Enables ACP mode (Agent Client Protocol). Useful for IDE/editor integrations like [Zed](../integration-zed). | | Stable. Replaces the deprecated `--experimental-acp` flag. |

|

||||

| `--experimental-skills` | | Enables experimental [Agent Skills](../features/skills) (registers the `skill` tool and loads Skills from `.qwen/skills/` and `~/.qwen/skills/`). | | Experimental. |

|

||||

| `--extensions` | `-e` | Specifies a list of extensions to use for the session. | Extension names | If not provided, all available extensions are used. Use the special term `qwen -e none` to disable all extensions. Example: `qwen -e my-extension -e my-other-extension` |

|

||||

| `--list-extensions` | `-l` | Lists all available extensions and exits. | | |

|

||||

|

||||

@@ -59,6 +59,7 @@ Commands for managing AI tools and models.

|

||||

| ---------------- | --------------------------------------------- | --------------------------------------------- |

|

||||

| `/mcp` | List configured MCP servers and tools | `/mcp`, `/mcp desc` |

|

||||

| `/tools` | Display currently available tool list | `/tools`, `/tools desc` |

|

||||

| `/skills` | List and run available skills (experimental) | `/skills`, `/skills <name>` |

|

||||

| `/approval-mode` | Change approval mode for tool usage | `/approval-mode <mode (auto-edit)> --project` |

|

||||

| →`plan` | Analysis only, no execution | Secure review |

|

||||

| →`default` | Require approval for edits | Daily use |

|

||||

|

||||

@@ -49,6 +49,8 @@ Cross-platform sandboxing with complete process isolation.

|

||||

|

||||

By default, Qwen Code uses a published sandbox image (configured in the CLI package) and will pull it as needed.

|

||||

|

||||

The container sandbox mounts your workspace and your `~/.qwen` directory into the container so auth and settings persist between runs.

|

||||

|

||||

**Best for**: Strong isolation on any OS, consistent tooling inside a known image.

|

||||

|

||||

### Choosing a method

|

||||

@@ -157,22 +159,13 @@ For a working allowlist-style proxy example, see: [Example Proxy Script](/develo

|

||||

|

||||

## Linux UID/GID handling

|

||||

|

||||

The sandbox automatically handles user permissions on Linux. Override these permissions with:

|

||||

On Linux, Qwen Code defaults to enabling UID/GID mapping so the sandbox runs as your user (and reuses the mounted `~/.qwen`). Override with:

|

||||

|

||||

```bash

|

||||

export SANDBOX_SET_UID_GID=true # Force host UID/GID

|

||||

export SANDBOX_SET_UID_GID=false # Disable UID/GID mapping

|

||||

```
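The override logic can be pictured with a small TypeScript sketch. The function name `shouldMapHostUidGid` is hypothetical, and two details are assumptions not stated above: that values other than `true` or `false` are ignored, and that mapping is off by default on non-Linux platforms.

```typescript
// Hypothetical helper (not from the Qwen Code source): decides whether the
// sandbox should run with the host user's UID/GID.
// `env` and `platform` are parameters so the logic is easy to test.
function shouldMapHostUidGid(
  env: Record<string, string | undefined>,
  platform: string,
): boolean {
  const override = env["SANDBOX_SET_UID_GID"]?.trim().toLowerCase();
  if (override === "true") {
    return true; // force host UID/GID
  }
  if (override === "false") {
    return false; // disable UID/GID mapping
  }
  // Assumption: unset or unrecognized values fall back to the platform default.
  return platform === "linux";
}
```

In a Node.js process this could be called as `shouldMapHostUidGid(process.env, process.platform)`.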

|

||||

|

||||

## Customizing the sandbox environment (Docker/Podman)

|

||||

|

||||

If you need extra tools inside the container (e.g., `git`, `python`, `rg`), create a custom Dockerfile:

|

||||

|

||||

- Path: `.qwen/sandbox.Dockerfile`

|

||||

- Then run with: `BUILD_SANDBOX=1 qwen -s ...`

|

||||

|

||||

This builds a project-specific image based on the default sandbox image.

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

### Common issues

|

||||

|

||||

@@ -27,6 +27,14 @@ Agent Skills package expertise into discoverable capabilities. Each Skill consis

|

||||

|

||||

Skills are **model-invoked** — the model autonomously decides when to use them based on your request and the Skill’s description. This is different from slash commands, which are **user-invoked** (you explicitly type `/command`).

|

||||

|

||||

If you want to invoke a Skill explicitly, use the `/skills` slash command:

|

||||

|

||||

```bash

|

||||

/skills <skill-name>

|

||||

```

|

||||

|

||||

The `/skills` command is only available when you run with `--experimental-skills`. Use autocomplete to browse available Skills and descriptions.

|

||||

|

||||

### Benefits

|

||||

|

||||

- Extend Qwen Code for your workflows

|

||||

|

||||

57

docs/users/integration-jetbrains.md

Normal file


@@ -0,0 +1,57 @@

|

||||

# JetBrains IDEs

|

||||

|

||||

> JetBrains IDEs provide native support for AI coding assistants through the Agent Client Protocol (ACP). This integration allows you to use Qwen Code directly within your JetBrains IDE with real-time code suggestions.

|

||||

|

||||

### Features

|

||||

|

||||

- **Native agent experience**: Integrated AI assistant panel within your JetBrains IDE

|

||||

- **Agent Client Protocol**: Full support for ACP enabling advanced IDE interactions

|

||||

- **Symbol management**: #-mention files to add them to the conversation context

|

||||

- **Conversation history**: Access to past conversations within the IDE

|

||||

|

||||

### Requirements

|

||||

|

||||

- JetBrains IDE with ACP support (IntelliJ IDEA, WebStorm, PyCharm, etc.)

|

||||

- Qwen Code CLI installed

|

||||

|

||||

### Installation

|

||||

|

||||

1. Install Qwen Code CLI:

|

||||

|

||||

```bash

|

||||

npm install -g @qwen-code/qwen-code

|

||||

```

|

||||

|

||||

2. Open your JetBrains IDE and navigate to the AI Chat tool window.

|

||||

|

||||

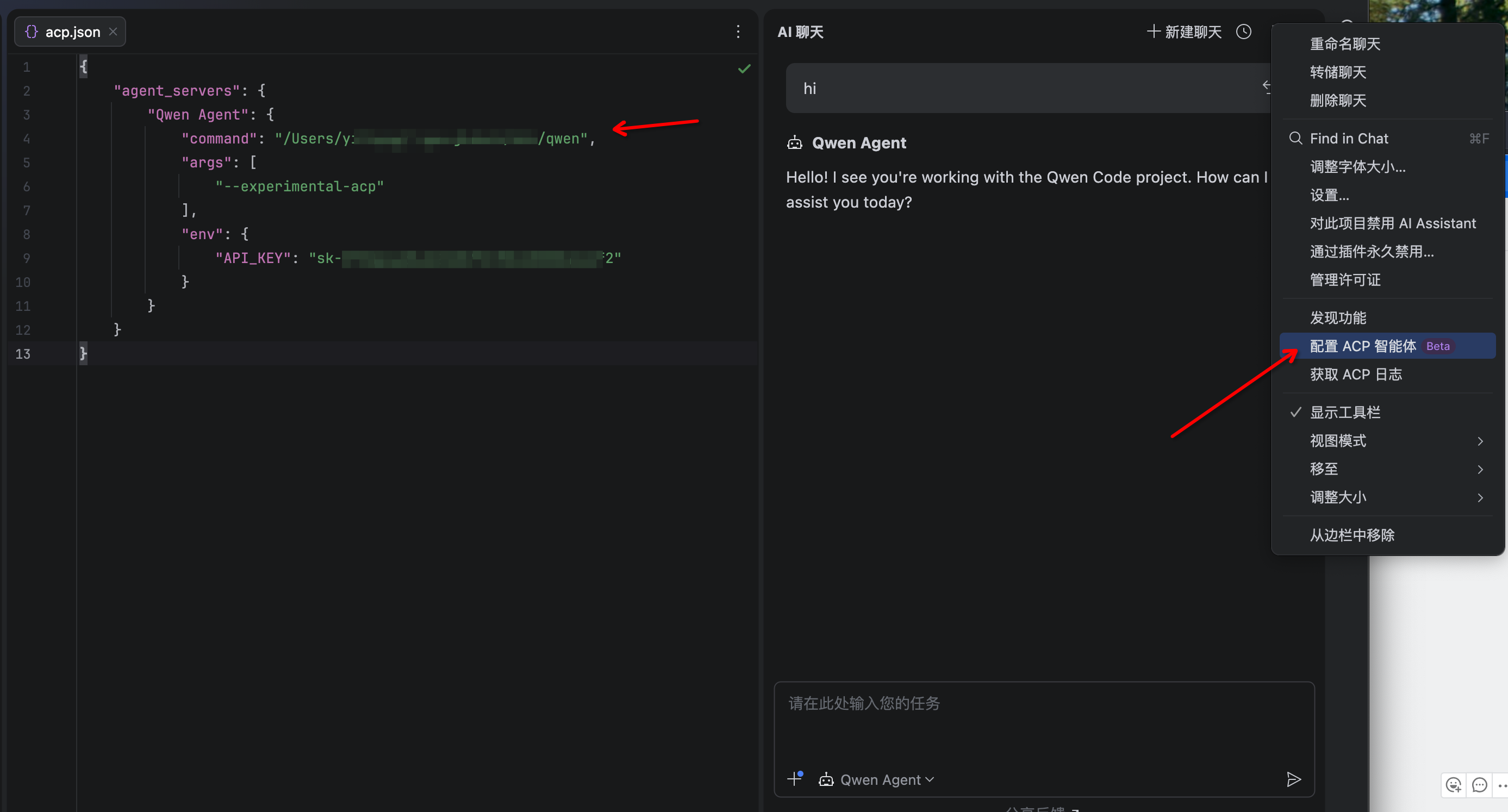

3. Click the three-dot menu in the upper-right corner, select **Configure ACP Agent**, and configure Qwen Code with the following settings:

|

||||

|

||||

```json

|

||||

{

|

||||

"agent_servers": {

|

||||

"qwen": {

|

||||

"command": "/path/to/qwen",

|

||||

"args": ["--acp"],

|

||||

"env": {}

|

||||

}

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

4. The Qwen Code agent should now be available in the AI Assistant panel.

|

||||

|

||||

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

### Agent not appearing

|

||||

|

||||

- Run `qwen --version` in terminal to verify installation

|

||||

- Ensure your JetBrains IDE version supports ACP

|

||||

- Restart your JetBrains IDE

|

||||

|

||||

### Qwen Code not responding

|

||||

|

||||

- Check your internet connection

|

||||

- Verify CLI works by running `qwen` in terminal

|

||||

- [File an issue on GitHub](https://github.com/qwenlm/qwen-code/issues) if the problem persists

|

||||

@@ -18,23 +18,17 @@

|

||||

|

||||

### Requirements

|

||||

|

||||

- VS Code 1.98.0 or higher

|

||||

- VS Code 1.85.0 or higher

|

||||

|

||||

### Installation

|

||||

|

||||

1. Install Qwen Code CLI:

|

||||

|

||||

```bash

|

||||

npm install -g qwen-code

|

||||

```

|

||||

|

||||

2. Download and install the extension from the [Visual Studio Code Extension Marketplace](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion).

|

||||

Download and install the extension from the [Visual Studio Code Extension Marketplace](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion).

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

### Extension not installing

|

||||

|

||||

- Ensure you have VS Code 1.98.0 or higher

|

||||

- Ensure you have VS Code 1.85.0 or higher

|

||||

- Check that VS Code has permission to install extensions

|

||||

- Try installing directly from the Marketplace website

|

||||

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

# Zed Editor

|

||||

|

||||

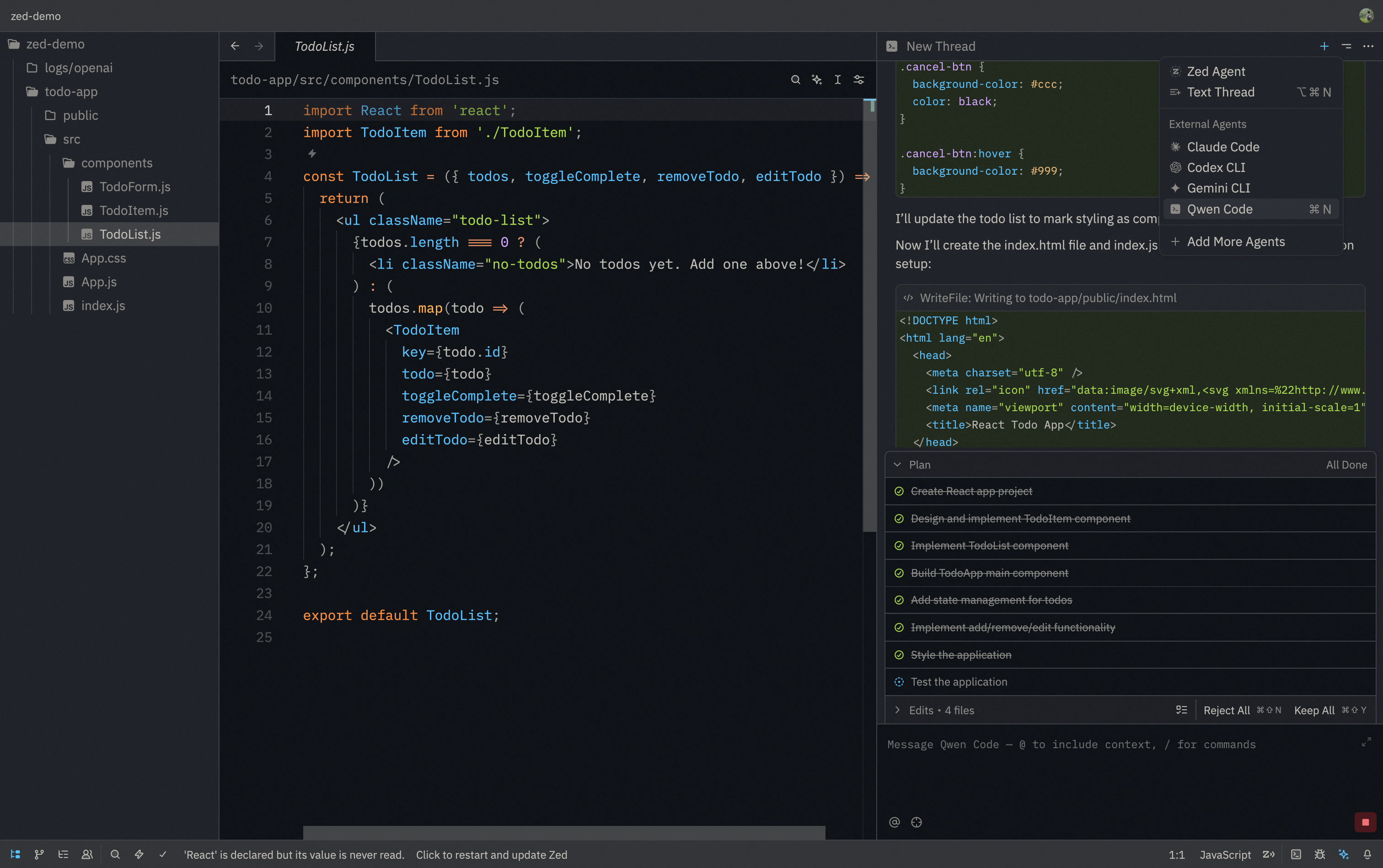

> Zed Editor provides native support for AI coding assistants through the Agent Control Protocol (ACP). This integration allows you to use Qwen Code directly within Zed's interface with real-time code suggestions.

|

||||

> Zed Editor provides native support for AI coding assistants through the Agent Client Protocol (ACP). This integration allows you to use Qwen Code directly within Zed's interface with real-time code suggestions.

|

||||

|

||||

|

||||

|

||||

@@ -20,9 +20,9 @@

|

||||

|

||||

1. Install Qwen Code CLI:

|

||||

|

||||

```bash

|

||||

npm install -g qwen-code

|

||||

```

|

||||

```bash

|

||||

npm install -g @qwen-code/qwen-code

|

||||

```

|

||||

|

||||

2. Download and install [Zed Editor](https://zed.dev/)

|

||||

|

||||

|

||||

@@ -159,7 +159,7 @@ Qwen Code will:

|

||||

|

||||

### Test out other common workflows

|

||||

|

||||

There are a number of ways to work with Claude:

|

||||

There are a number of ways to work with Qwen Code:

|

||||

|

||||

**Refactor code**

|

||||

|

||||

|

||||

@@ -9,11 +9,18 @@ This guide provides solutions to common issues and debugging tips, including top

|

||||

|

||||

## Authentication or login errors

|

||||

|

||||

- **Error: `UNABLE_TO_GET_ISSUER_CERT_LOCALLY` or `unable to get local issuer certificate`**

|

||||

- **Error: `UNABLE_TO_GET_ISSUER_CERT_LOCALLY`, `UNABLE_TO_VERIFY_LEAF_SIGNATURE`, or `unable to get local issuer certificate`**

|

||||

- **Cause:** You may be on a corporate network with a firewall that intercepts and inspects SSL/TLS traffic. This often requires a custom root CA certificate to be trusted by Node.js.

|

||||

- **Solution:** Set the `NODE_EXTRA_CA_CERTS` environment variable to the absolute path of your corporate root CA certificate file.

|

||||

- Example: `export NODE_EXTRA_CA_CERTS=/path/to/your/corporate-ca.crt`

|

||||

|

||||

- **Error: `Device authorization flow failed: fetch failed`**

|

||||

- **Cause:** Node.js could not reach Qwen OAuth endpoints (often a proxy or SSL/TLS trust issue). When available, Qwen Code will also print the underlying error cause (for example: `UNABLE_TO_VERIFY_LEAF_SIGNATURE`).

|

||||

- **Solution:**

|

||||

- Confirm you can access `https://chat.qwen.ai` from the same machine/network.

|

||||

- If you are behind a proxy, set it via `qwen --proxy <url>` (or the `proxy` setting in `settings.json`).

|

||||

- If your network uses a corporate TLS inspection CA, set `NODE_EXTRA_CA_CERTS` as described above.

|

||||

|

||||

- **Issue: Unable to display UI after authentication failure**

|

||||

- **Cause:** If authentication fails after selecting an authentication type, the `security.auth.selectedType` setting may be persisted in `settings.json`. On restart, the CLI may get stuck trying to authenticate with the failed auth type and fail to display the UI.

|

||||

- **Solution:** Clear the `security.auth.selectedType` configuration item in your `settings.json` file:

|

||||

|

||||

@@ -26,6 +26,7 @@ export default tseslint.config(

|

||||

'dist/**',

|

||||

'docs-site/.next/**',

|

||||

'docs-site/out/**',

|

||||

'packages/cli/src/services/insight-page/**',

|

||||

],

|

||||

},

|

||||

eslint.configs.recommended,

|

||||

|

||||

@@ -831,7 +831,7 @@ describe('Permission Control (E2E)', () => {

|

||||

TEST_TIMEOUT,

|

||||

);

|

||||

|

||||

it(

|

||||

it.skip(

|

||||

'should execute dangerous commands without confirmation',

|

||||

async () => {

|

||||

const q = query({

|

||||

|

||||

21

package-lock.json

generated


@@ -1,12 +1,12 @@

|

||||

{

|

||||

"name": "@qwen-code/qwen-code",

|

||||

"version": "0.7.0",

|

||||

"version": "0.7.1",

|

||||

"lockfileVersion": 3,

|

||||

"requires": true,

|

||||

"packages": {

|

||||

"": {

|

||||

"name": "@qwen-code/qwen-code",

|

||||

"version": "0.7.0",

|

||||

"version": "0.7.1",

|

||||

"workspaces": [

|

||||

"packages/*"

|

||||

],

|

||||

@@ -6216,10 +6216,7 @@

|

||||

"version": "4.0.3",

|

||||

"resolved": "https://registry.npmjs.org/chokidar/-/chokidar-4.0.3.tgz",

|

||||

"integrity": "sha512-Qgzu8kfBvo+cA4962jnP1KkS6Dop5NS6g7R5LFYJr4b8Ub94PPQXUksCw9PvXoeXPRRddRNC5C1JQUR2SMGtnA==",

|

||||

"dev": true,

|

||||

"license": "MIT",

|

||||

"optional": true,

|

||||

"peer": true,

|

||||

"dependencies": {

|

||||

"readdirp": "^4.0.1"

|

||||

},

|

||||

@@ -13882,10 +13879,7 @@

|

||||

"version": "4.1.2",

|

||||

"resolved": "https://registry.npmjs.org/readdirp/-/readdirp-4.1.2.tgz",

|

||||

"integrity": "sha512-GDhwkLfywWL2s6vEjyhri+eXmfH6j1L7JE27WhqLeYzoh/A3DBaYGEj2H/HFZCn/kMfim73FXxEJTw06WtxQwg==",

|

||||

"dev": true,

|

||||

"license": "MIT",

|

||||

"optional": true,

|

||||

"peer": true,

|

||||

"engines": {

|

||||

"node": ">= 14.18.0"

|

||||

},

|

||||

@@ -17316,7 +17310,7 @@

|

||||

},

|

||||

"packages/cli": {

|

||||

"name": "@qwen-code/qwen-code",

|

||||

"version": "0.7.0",

|

||||

"version": "0.7.1",

|

||||

"dependencies": {

|

||||

"@google/genai": "1.30.0",

|

||||

"@iarna/toml": "^2.2.5",

|

||||

@@ -17953,7 +17947,7 @@

|

||||

},

|

||||

"packages/core": {

|

||||

"name": "@qwen-code/qwen-code-core",

|

||||

"version": "0.7.0",

|

||||

"version": "0.7.1",

|

||||

"hasInstallScript": true,

|

||||

"dependencies": {

|

||||

"@anthropic-ai/sdk": "^0.36.1",

|

||||

@@ -17974,6 +17968,7 @@

|

||||

"ajv-formats": "^3.0.0",

|

||||

"async-mutex": "^0.5.0",

|

||||

"chardet": "^2.1.0",

|

||||

"chokidar": "^4.0.3",

|

||||

"diff": "^7.0.0",

|

||||

"dotenv": "^17.1.0",

|

||||

"fast-levenshtein": "^2.0.6",

|

||||

@@ -18593,7 +18588,7 @@

|

||||

},

|

||||

"packages/sdk-typescript": {

|

||||

"name": "@qwen-code/sdk",

|

||||

"version": "0.1.0",

|

||||

"version": "0.1.3",

|

||||

"license": "Apache-2.0",

|

||||

"dependencies": {

|

||||

"@modelcontextprotocol/sdk": "^1.25.1",

|

||||

@@ -21413,7 +21408,7 @@

|

||||

},

|

||||

"packages/test-utils": {

|

||||

"name": "@qwen-code/qwen-code-test-utils",

|

||||

"version": "0.7.0",

|

||||

"version": "0.7.1",

|

||||

"dev": true,

|

||||

"license": "Apache-2.0",

|

||||

"devDependencies": {

|

||||

@@ -21425,7 +21420,7 @@

|

||||

},

|

||||

"packages/vscode-ide-companion": {

|

||||

"name": "qwen-code-vscode-ide-companion",

|

||||

"version": "0.7.0",

|

||||

"version": "0.7.1",

|

||||

"license": "LICENSE",

|

||||

"dependencies": {

|

||||

"@modelcontextprotocol/sdk": "^1.25.1",

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

{

|

||||

"name": "@qwen-code/qwen-code",

|

||||

"version": "0.7.0",

|

||||

"version": "0.7.1",

|

||||

"engines": {

|

||||

"node": ">=20.0.0"

|

||||

},

|

||||

@@ -13,7 +13,7 @@

|

||||

"url": "git+https://github.com/QwenLM/qwen-code.git"

|

||||

},

|

||||

"config": {

|

||||

"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.0"

|

||||

"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.1"

|

||||

},

|

||||

"scripts": {

|

||||

"start": "cross-env node scripts/start.js",

|

||||

@@ -125,7 +125,7 @@

|

||||

"lint-staged": {

|

||||

"*.{js,jsx,ts,tsx}": [

|

||||

"prettier --write",

|

||||

"eslint --fix --max-warnings 0"

|

||||

"eslint --fix --max-warnings 0 --no-warn-ignored"

|

||||

],

|

||||

"*.{json,md}": [

|

||||

"prettier --write"

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

{

|

||||

"name": "@qwen-code/qwen-code",

|

||||

"version": "0.7.0",

|

||||

"version": "0.7.1",

|

||||

"description": "Qwen Code",

|

||||

"repository": {

|

||||

"type": "git",

|

||||

@@ -33,7 +33,7 @@

|

||||

"dist"

|

||||

],

|

||||

"config": {

|

||||

"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.0"

|

||||

"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.1"

|

||||

},

|

||||

"dependencies": {

|

||||

"@google/genai": "1.30.0",

|

||||

|

||||

@@ -170,7 +170,17 @@ function normalizeOutputFormat(

|

||||

}

|

||||

|

||||

export async function parseArguments(settings: Settings): Promise<CliArgs> {

|

||||

const rawArgv = hideBin(process.argv);

|

||||

let rawArgv = hideBin(process.argv);

|

||||

|

||||

// hack: if the first argument is the CLI entry point, remove it

|

||||

if (

|

||||

rawArgv.length > 0 &&

|

||||

(rawArgv[0].endsWith('/dist/qwen-cli/cli.js') ||

|

||||

rawArgv[0].endsWith('/dist/cli.js'))

|

||||

) {

|

||||

rawArgv = rawArgv.slice(1);

|

||||

}

|

||||

|

||||

const yargsInstance = yargs(rawArgv)

|

||||

.locale('en')

|

||||

.scriptName('qwen')

|

||||
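The entry-point check added in this hunk can be read in isolation as the sketch below. It is a minimal illustration, not the project's code: `stripCliEntryPoint` and `ENTRY_POINT_SUFFIXES` are hypothetical names, and only the two path suffixes are taken from the diff.

```typescript
// Hypothetical helper mirroring the "hack" in parseArguments: if the first
// argument is the CLI entry point itself, drop it before handing argv to yargs.
// Only the two suffixes below come from the diff; everything else is assumed.
const ENTRY_POINT_SUFFIXES = ['/dist/qwen-cli/cli.js', '/dist/cli.js'];

function stripCliEntryPoint(argv: readonly string[]): string[] {
  const [first, ...rest] = argv;
  const isEntryPoint =
    first !== undefined &&
    ENTRY_POINT_SUFFIXES.some((suffix) => first.endsWith(suffix));
  // Always return a fresh array so callers can mutate it safely.
  return isEntryPoint ? rest : [...argv];
}
```

Because the suffixes use forward slashes, a path written with backslashes would not match `endsWith` and would be passed through unchanged.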

@@ -864,11 +874,10 @@ export async function loadCliConfig(

|

||||

}

|

||||

};

|

||||

|

||||

if (

|

||||

!interactive &&

|

||||

!argv.experimentalAcp &&

|

||||

inputFormat !== InputFormat.STREAM_JSON

|

||||

) {

|

||||

// ACP mode check: must include both --acp (current) and --experimental-acp (deprecated).

|

||||

// Without this check, edit, write_file, run_shell_command would be excluded in ACP mode.

|

||||

const isAcpMode = argv.acp || argv.experimentalAcp;

|

||||

if (!interactive && !isAcpMode && inputFormat !== InputFormat.STREAM_JSON) {

|

||||

switch (approvalMode) {

|

||||

case ApprovalMode.PLAN:

|

||||

case ApprovalMode.DEFAULT:

|

||||

|

||||
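The condition change in this hunk amounts to treating either ACP flag as "ACP mode" before deciding whether to restrict tools in non-interactive runs. The sketch below restates that logic as pure functions; `AcpFlags`, `isAcpMode`, and `shouldRestrictTools` are hypothetical names, and the boolean `streamJsonInput` stands in for the `inputFormat !== InputFormat.STREAM_JSON` comparison.

```typescript
// Hypothetical shapes; only the flag names `acp` and `experimentalAcp`
// come from the diff.
interface AcpFlags {
  acp?: boolean;
  experimentalAcp?: boolean;
}

// Either the current flag or the deprecated one enables ACP mode.
function isAcpMode(flags: AcpFlags): boolean {
  return Boolean(flags.acp || flags.experimentalAcp);
}

// Tools are restricted only for non-interactive, non-ACP, non-stream-JSON runs.
function shouldRestrictTools(options: {
  interactive: boolean;
  flags: AcpFlags;
  streamJsonInput: boolean;
}): boolean {
  return (
    !options.interactive &&
    !isAcpMode(options.flags) &&
    !options.streamJsonInput
  );
}
```

Under this reading, the old condition checked only `experimentalAcp`, so a run started with `--acp` alone would have fallen into the restricted branch.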

@@ -55,6 +55,7 @@ import { disableExtension } from './extension.js';

|

||||

|

||||

// These imports will get the versions from the vi.mock('./settings.js', ...) factory.

|

||||

import {

|

||||

getSettingsWarnings,

|

||||

loadSettings,

|

||||

USER_SETTINGS_PATH, // This IS the mocked path.

|

||||

getSystemSettingsPath,

|

||||

@@ -418,6 +419,86 @@ describe('Settings Loading and Merging', () => {

|

||||

});

|

||||

});

|

||||

|

||||

it('should warn about ignored legacy keys in a v2 settings file', () => {

|

||||

(mockFsExistsSync as Mock).mockImplementation(

|

||||

(p: fs.PathLike) => p === USER_SETTINGS_PATH,

|

||||

);

|

||||

const userSettingsContent = {

|

||||

[SETTINGS_VERSION_KEY]: SETTINGS_VERSION,

|

||||

usageStatisticsEnabled: false,

|

||||

};

|

||||

(fs.readFileSync as Mock).mockImplementation(

|

||||

(p: fs.PathOrFileDescriptor) => {

|

||||

if (p === USER_SETTINGS_PATH)

|

||||

return JSON.stringify(userSettingsContent);

|

||||

return '{}';

|

||||

},

|

||||

);

|

||||

|

||||

const settings = loadSettings(MOCK_WORKSPACE_DIR);

|

||||

|

||||

expect(getSettingsWarnings(settings)).toEqual(

|

||||

expect.arrayContaining([

|

||||

expect.stringContaining(

|

||||

"Legacy setting 'usageStatisticsEnabled' will be ignored",

|

||||

),

|

||||

]),

|

||||

);

|

||||

expect(getSettingsWarnings(settings)).toEqual(

|

||||

expect.arrayContaining([

|

||||

expect.stringContaining("'privacy.usageStatisticsEnabled'"),

|

||||

]),

|

||||

);

|

||||

});

|

||||

|

||||

it('should warn about unknown top-level keys in a v2 settings file', () => {

|

||||

(mockFsExistsSync as Mock).mockImplementation(

|

||||

(p: fs.PathLike) => p === USER_SETTINGS_PATH,

|

||||

);

|

||||

const userSettingsContent = {

|

||||

[SETTINGS_VERSION_KEY]: SETTINGS_VERSION,

|

||||

someUnknownKey: 'value',

|

||||

};

|

||||

(fs.readFileSync as Mock).mockImplementation(

|

||||

(p: fs.PathOrFileDescriptor) => {

|

||||

if (p === USER_SETTINGS_PATH)

|

||||

return JSON.stringify(userSettingsContent);

|

||||

return '{}';

|

||||

},

|

||||

);

|

||||

|

||||

const settings = loadSettings(MOCK_WORKSPACE_DIR);

|

||||

|

||||

expect(getSettingsWarnings(settings)).toEqual(

|

||||

expect.arrayContaining([

|

||||

expect.stringContaining(

|

||||

"Unknown setting 'someUnknownKey' will be ignored",

|

||||

),

|

||||

]),

|

||||

);

|

||||

});

|

||||

|

||||

it('should not warn for valid v2 container keys', () => {

|

||||

(mockFsExistsSync as Mock).mockImplementation(

|

||||

(p: fs.PathLike) => p === USER_SETTINGS_PATH,

|

||||

);

|

||||

const userSettingsContent = {

|

||||

[SETTINGS_VERSION_KEY]: SETTINGS_VERSION,

|

||||

model: { name: 'qwen-coder' },

|

||||

};

|

||||

(fs.readFileSync as Mock).mockImplementation(

|

||||

(p: fs.PathOrFileDescriptor) => {

|

||||

if (p === USER_SETTINGS_PATH)

|

||||

return JSON.stringify(userSettingsContent);

|

||||

return '{}';

|

||||

},

|

||||

);

|

||||

|

||||

const settings = loadSettings(MOCK_WORKSPACE_DIR);

|

||||

|

||||

expect(getSettingsWarnings(settings)).toEqual([]);

|

||||

});

|

||||

|

||||

it('should rewrite allowedTools to tools.allowed during migration', () => {

|

||||

(mockFsExistsSync as Mock).mockImplementation(

|

||||

(p: fs.PathLike) => p === USER_SETTINGS_PATH,

|

||||

|

||||

@@ -344,6 +344,97 @@ const KNOWN_V2_CONTAINERS = new Set(

|

||||

Object.values(MIGRATION_MAP).map((path) => path.split('.')[0]),

|

||||

);

|

||||

|

||||

function getSettingsFileKeyWarnings(

|

||||

settings: Record<string, unknown>,

|

||||

settingsFilePath: string,

|

||||

): string[] {

|

||||

const version = settings[SETTINGS_VERSION_KEY];

|

||||

if (typeof version !== 'number' || version < SETTINGS_VERSION) {

|

||||

return [];

|

||||

}

|

||||

|

||||

const warnings: string[] = [];

|

||||

const ignoredLegacyKeys = new Set<string>();

|

||||

|

||||

// Ignored legacy keys (V1 top-level keys that moved to a nested V2 path).

|

||||

for (const [oldKey, newPath] of Object.entries(MIGRATION_MAP)) {

|

||||

if (oldKey === newPath) {

|

||||

continue;

|

||||

}

|

||||

if (!(oldKey in settings)) {

|

||||

continue;

|

||||

}

|

||||

|

||||

const oldValue = settings[oldKey];

|

||||

|

||||

// If this key is a V2 container (like 'model') and it's already an object,

|

||||

// it's likely already in V2 format. Don't warn.

|

||||

if (

|

||||

KNOWN_V2_CONTAINERS.has(oldKey) &&

|

||||

typeof oldValue === 'object' &&

|

||||

oldValue !== null &&

|

||||

!Array.isArray(oldValue)

|

||||

) {

|

||||

continue;

|

||||

}

|

||||

|

||||

ignoredLegacyKeys.add(oldKey);

|

||||

warnings.push(

|

||||

`⚠️ Legacy setting '${oldKey}' will be ignored in ${settingsFilePath}. Please use '${newPath}' instead.`,

|

||||

);

|

||||

}

|

||||

|

||||

// Unknown top-level keys.

|

||||

const schemaKeys = new Set(Object.keys(getSettingsSchema()));

|

||||

for (const key of Object.keys(settings)) {

|

||||

if (key === SETTINGS_VERSION_KEY) {

|

||||

continue;

|

||||

}

|

||||

if (ignoredLegacyKeys.has(key)) {

|

||||

continue;

|

||||

}

|

||||

if (schemaKeys.has(key)) {

|

||||

continue;

|

||||

}

|

||||

|

||||

warnings.push(

|

||||

`⚠️ Unknown setting '${key}' will be ignored in ${settingsFilePath}.`,

|

||||

);

|

||||

}

|

||||

|

||||

return warnings;

|

||||

}

|

||||

|

||||

/**

|

||||

* Collects warnings for ignored legacy and unknown settings keys.

|

||||

*

|

||||

* For `$version: 2` settings files, we do not apply implicit migrations.

|

||||

* Instead, we surface actionable, de-duplicated warnings in the terminal UI.

|

||||

*/

|

||||

export function getSettingsWarnings(loadedSettings: LoadedSettings): string[] {

|

||||

const warningSet = new Set<string>();

|

||||

|

||||

for (const scope of [SettingScope.User, SettingScope.Workspace]) {

|

||||

const settingsFile = loadedSettings.forScope(scope);

|

||||

if (settingsFile.rawJson === undefined) {

|

||||

continue; // File not present / not loaded.

|

||||

}

|

||||

const settingsObject = settingsFile.originalSettings as unknown as Record<

|

||||

string,

|

||||

unknown

|

||||

>;

|

||||

|

||||

for (const warning of getSettingsFileKeyWarnings(

|

||||

settingsObject,

|

||||

settingsFile.path,

|

||||

)) {

|

||||

warningSet.add(warning);

|

||||

}

|

||||

}

|

||||

|

||||

return [...warningSet];

|

||||

}

|

||||

|

||||

export function migrateSettingsToV1(

|

||||

v2Settings: Record<string, unknown>,

|

||||

): Record<string, unknown> {

|

||||

|

||||
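The two passes in `getSettingsFileKeyWarnings` (legacy keys first, then unknown top-level keys) can be tried out with the self-contained sketch below. The migration map, schema keys, version key, and version number here are assumptions chosen for illustration; the real `MIGRATION_MAP`, `getSettingsSchema()`, `SETTINGS_VERSION_KEY`, and `SETTINGS_VERSION` are not shown in this diff.

```typescript
type SettingsObject = Record<string, unknown>;

// Assumed stand-ins for the real constants, which this diff does not show.
const SAMPLE_VERSION_KEY = '$version';
const SAMPLE_VERSION = 2;
const SAMPLE_MIGRATION_MAP: Record<string, string> = {
  usageStatisticsEnabled: 'privacy.usageStatisticsEnabled',
  model: 'model.name',
};
const SAMPLE_SCHEMA_KEYS = new Set(['privacy', 'model', 'tools']);

function isPlainObject(value: unknown): value is Record<string, unknown> {
  return typeof value === 'object' && value !== null && !Array.isArray(value);
}

function collectKeyWarnings(settings: SettingsObject, filePath: string): string[] {
  const version = settings[SAMPLE_VERSION_KEY];
  // Files below the current version are migrated elsewhere, so no warnings.
  if (typeof version !== 'number' || version < SAMPLE_VERSION) return [];

  const containers = new Set(
    Object.values(SAMPLE_MIGRATION_MAP).map((path) => path.split('.')[0]),
  );
  const warnings: string[] = [];
  const legacyKeys = new Set<string>();

  // Pass 1: legacy top-level keys that moved to a nested path.
  for (const [oldKey, newPath] of Object.entries(SAMPLE_MIGRATION_MAP)) {
    if (oldKey === newPath || !(oldKey in settings)) continue;
    // A container key that already holds an object is treated as V2 format.
    if (containers.has(oldKey) && isPlainObject(settings[oldKey])) continue;
    legacyKeys.add(oldKey);
    warnings.push(
      `Legacy setting '${oldKey}' will be ignored in ${filePath}. Please use '${newPath}' instead.`,
    );
  }

  // Pass 2: top-level keys that are neither legacy nor in the schema.
  for (const key of Object.keys(settings)) {
    if (key === SAMPLE_VERSION_KEY || legacyKeys.has(key)) continue;
    if (SAMPLE_SCHEMA_KEYS.has(key)) continue;
    warnings.push(`Unknown setting '${key}' will be ignored in ${filePath}.`);
  }
  return warnings;
}
```

Tracking `legacyKeys` separately keeps a legacy key from being reported a second time as unknown, which appears to be the reason the original function carries `ignoredLegacyKeys` between the two loops.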

@@ -17,7 +17,11 @@ import * as cliConfig from './config/config.js';

|

||||

import { loadCliConfig, parseArguments } from './config/config.js';

|

||||

import { ExtensionStorage, loadExtensions } from './config/extension.js';

|

||||

import type { DnsResolutionOrder, LoadedSettings } from './config/settings.js';

|

||||

import { loadSettings, migrateDeprecatedSettings } from './config/settings.js';

|

||||

import {

|

||||

getSettingsWarnings,

|

||||

loadSettings,

|

||||

migrateDeprecatedSettings,

|

||||

} from './config/settings.js';

|

||||

import {

|

||||

initializeApp,

|

||||

type InitializationResult,

|

||||

@@ -342,6 +346,7 @@ export async function main() {

|

||||

extensionEnablementManager,

|

||||

argv,

|

||||

);

|

||||

registerCleanup(() => config.shutdown());

|

||||

|

||||

if (config.getListExtensions()) {

|

||||

console.log('Installed extensions:');

|

||||

@@ -400,12 +405,15 @@ export async function main() {

|

||||

|

||||

let input = config.getQuestion();

|

||||

const startupWarnings = [

|

||||

...(await getStartupWarnings()),

|

||||

...(await getUserStartupWarnings({

|

||||

workspaceRoot: process.cwd(),

|

||||

useRipgrep: settings.merged.tools?.useRipgrep ?? true,

|

||||

useBuiltinRipgrep: settings.merged.tools?.useBuiltinRipgrep ?? true,

|

||||

})),

|

||||

...new Set([

|

||||

...(await getStartupWarnings()),

|

||||

...(await getUserStartupWarnings({

|

||||

workspaceRoot: process.cwd(),

|

||||

useRipgrep: settings.merged.tools?.useRipgrep ?? true,

|

||||

useBuiltinRipgrep: settings.merged.tools?.useBuiltinRipgrep ?? true,

|

||||

})),

|

||||

...getSettingsWarnings(settings),

|

||||

]),

|

||||

];

|

||||

|

||||

// Render UI, passing necessary config values. Check that there is no command line question.

|

||||

|

||||
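The change to `startupWarnings` wraps the combined sources in a `Set` so that identical messages are shown once. A minimal sketch of that de-duplication step, with a hypothetical helper name `mergeWarnings`, might look like this:

```typescript
// Hypothetical helper: merge several warning lists, keeping the first
// occurrence of each message and preserving insertion order.
function mergeWarnings(...sources: string[][]): string[] {
  const combined = ([] as string[]).concat(...sources);
  // A Set iterates in insertion order, so first occurrences stay in place.
  return Array.from(new Set(combined));
}
```

For example, `mergeWarnings(startup, userStartup, settingsWarnings)` would yield one flat list in source order. Note that de-duplication here is by exact string equality, so two warnings that differ only in a file path remain distinct.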

@@ -31,6 +31,7 @@ import { quitCommand } from '../ui/commands/quitCommand.js';

|

||||

import { restoreCommand } from '../ui/commands/restoreCommand.js';

|

||||

import { resumeCommand } from '../ui/commands/resumeCommand.js';

|

||||

import { settingsCommand } from '../ui/commands/settingsCommand.js';

|

||||

import { skillsCommand } from '../ui/commands/skillsCommand.js';

|

||||

import { statsCommand } from '../ui/commands/statsCommand.js';

|

||||

import { summaryCommand } from '../ui/commands/summaryCommand.js';

|

||||

import { terminalSetupCommand } from '../ui/commands/terminalSetupCommand.js';

|

||||

@@ -38,6 +39,7 @@ import { themeCommand } from '../ui/commands/themeCommand.js';

|

||||

import { toolsCommand } from '../ui/commands/toolsCommand.js';

|

||||

import { vimCommand } from '../ui/commands/vimCommand.js';

|

||||

import { setupGithubCommand } from '../ui/commands/setupGithubCommand.js';

|

||||

import { insightCommand } from '../ui/commands/insightCommand.js';

|

||||

|

||||

/**

|

||||

* Loads the core, hard-coded slash commands that are an integral part

|

||||

@@ -78,6 +80,7 @@ export class BuiltinCommandLoader implements ICommandLoader {

|

||||

quitCommand,

|

||||

restoreCommand(this.config),

|

||||

resumeCommand,

|

||||

...(this.config?.getExperimentalSkills?.() ? [skillsCommand] : []),

|

||||

statsCommand,

|

||||

summaryCommand,

|

||||

themeCommand,

|

||||

@@ -86,6 +89,7 @@ export class BuiltinCommandLoader implements ICommandLoader {

|

||||

vimCommand,

|

||||

setupGithubCommand,

|

||||

terminalSetupCommand,

|

||||

insightCommand,

|

||||

];

|

||||

|

||||

return allDefinitions.filter((cmd): cmd is SlashCommand => cmd !== null);

|

||||

|

||||
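The loader registers `skillsCommand` through a conditional spread and then filters out `null` entries with a type predicate. The sketch below shows the same pattern on a toy command list; the `SlashCommandSketch` type and the command names used here are illustrative assumptions, not the project's definitions.

```typescript
// Toy command type; the real SlashCommand interface is not shown in this diff.
interface SlashCommandSketch {
  name: string;
}

function buildCommandList(experimentalSkills: boolean): SlashCommandSketch[] {
  const allDefinitions: Array<SlashCommandSketch | null> = [
    { name: 'resume' },
    // Conditional spread: contributes one element or none.
    ...(experimentalSkills ? [{ name: 'skills' }] : []),
    { name: 'stats' },
    null, // stands in for a command factory that returned null
    { name: 'insight' },
  ];
  // The type predicate narrows the element type from `T | null` to `T`.
  return allDefinitions.filter(
    (cmd): cmd is SlashCommandSketch => cmd !== null,
  );
}
```

Spreading an empty array keeps the list literal flat and avoids a separate `push` behind an `if`, at the cost of slightly denser syntax.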

120

packages/cli/src/services/insight-page/README.md

Normal file

@@ -0,0 +1,120 @@
# Qwen Code Insights Page

A React-based visualization dashboard for displaying coding activity insights and statistics.

## Development

This application consists of two parts:

1. **Backend (Express Server)**: Serves API endpoints and processes chat history data
2. **Frontend (Vite + React)**: Development server with HMR

### Running in Development Mode

You need to run both the backend and frontend servers:

**Terminal 1 - Backend Server (Port 3001):**

```bash
pnpm dev:server
```

**Terminal 2 - Frontend Dev Server (Port 3000):**

```bash
pnpm dev
```

Then open <http://localhost:3000> in your browser.

The Vite dev server will proxy `/api` requests to the backend server at port 3001.

### Building for Production

```bash
pnpm build
```

This compiles TypeScript and builds the React application. The output will be in the `dist/` directory.

In production, the Express server serves both the static files and API endpoints from a single port.

## Architecture

- **Frontend**: React + TypeScript + Vite + Chart.js
- **Backend**: Express + Node.js
- **Data Source**: JSONL chat history files from `~/.qwen/projects/*/chats/`

## Original Vite Template Info

This template provides a minimal setup to get React working in Vite with HMR and some ESLint rules.

Currently, two official plugins are available:

- [@vitejs/plugin-react](https://github.com/vitejs/vite-plugin-react/blob/main/packages/plugin-react) uses [Babel](https://babeljs.io/) (or [oxc](https://oxc.rs) when used in [rolldown-vite](https://vite.dev/guide/rolldown)) for Fast Refresh
- [@vitejs/plugin-react-swc](https://github.com/vitejs/vite-plugin-react/blob/main/packages/plugin-react-swc) uses [SWC](https://swc.rs/) for Fast Refresh

## React Compiler

The React Compiler is not enabled on this template because of its impact on dev & build performances. To add it, see [this documentation](https://react.dev/learn/react-compiler/installation).

## Expanding the ESLint configuration

If you are developing a production application, we recommend updating the configuration to enable type-aware lint rules:

```js
export default defineConfig([
  globalIgnores(['dist']),
  {
    files: ['**/*.{ts,tsx}'],
    extends: [
      // Other configs...

      // Remove tseslint.configs.recommended and replace with this
      tseslint.configs.recommendedTypeChecked,
      // Alternatively, use this for stricter rules
      tseslint.configs.strictTypeChecked,
      // Optionally, add this for stylistic rules
      tseslint.configs.stylisticTypeChecked,

      // Other configs...
    ],
    languageOptions: {
      parserOptions: {
        project: ['./tsconfig.node.json', './tsconfig.app.json'],
        tsconfigRootDir: import.meta.dirname,
      },
      // other options...
    },
  },
]);
```

You can also install [eslint-plugin-react-x](https://github.com/Rel1cx/eslint-react/tree/main/packages/plugins/eslint-plugin-react-x) and [eslint-plugin-react-dom](https://github.com/Rel1cx/eslint-react/tree/main/packages/plugins/eslint-plugin-react-dom) for React-specific lint rules:

```js
// eslint.config.js
import reactX from 'eslint-plugin-react-x';
import reactDom from 'eslint-plugin-react-dom';

export default defineConfig([
  globalIgnores(['dist']),
  {
    files: ['**/*.{ts,tsx}'],
    extends: [
      // Other configs...
      // Enable lint rules for React
      reactX.configs['recommended-typescript'],
      // Enable lint rules for React DOM
      reactDom.configs.recommended,
    ],
    languageOptions: {
      parserOptions: {
        project: ['./tsconfig.node.json', './tsconfig.app.json'],
        tsconfigRootDir: import.meta.dirname,
      },
      // other options...
    },
  },
]);
```

13

packages/cli/src/services/insight-page/index.html

Normal file

@@ -0,0 +1,13 @@
<!doctype html>
<html lang="en">
  <head>
    <meta charset="UTF-8" />
    <link rel="icon" type="image/svg+xml" href="/qwen.png" />
    <meta name="viewport" content="width=device-width, initial-scale=1.0" />
    <title>Qwen Code Insight</title>
  </head>
  <body>
    <div id="root"></div>
    <script type="module" src="/src/main.tsx"></script>
  </body>
</html>

42

packages/cli/src/services/insight-page/package.json

Normal file

@@ -0,0 +1,42 @@
{
  "name": "insight-page",
  "private": true,
  "version": "0.0.0",
  "type": "module",
  "scripts": {
    "dev": "vite",
    "dev:server": "BASE_DIR=$HOME/.qwen/projects PORT=3001 tsx ../insightServer.ts",
    "build": "tsc -b && vite build",
    "lint": "eslint .",
    "preview": "vite preview"
  },
  "dependencies": {
    "@uiw/react-heat-map": "^2.3.3",
    "chart.js": "^4.5.1",
    "html2canvas": "^1.4.1",
    "react": "^19.2.0",
    "react-dom": "^19.2.0"
  },
  "devDependencies": {
    "@eslint/js": "^9.39.1",
    "@types/node": "^24.10.1",
    "@types/react": "^19.2.5",
    "@types/react-dom": "^19.2.3",
    "@vitejs/plugin-react": "^5.1.1",
    "autoprefixer": "^10.4.20",
    "eslint": "^9.39.1",
    "eslint-plugin-react-hooks": "^7.0.1",
    "eslint-plugin-react-refresh": "^0.4.24",
    "globals": "^16.5.0",
    "postcss": "^8.4.49",
    "tailwindcss": "^3.4.17",
    "typescript": "~5.9.3",
    "typescript-eslint": "^8.46.4",
    "vite": "npm:rolldown-vite@7.2.5"
  },
  "pnpm": {
    "overrides": {
      "vite": "npm:rolldown-vite@7.2.5"
    }
  }
}

1968

packages/cli/src/services/insight-page/pnpm-lock.yaml

generated

Normal file

File diff suppressed because it is too large

6

packages/cli/src/services/insight-page/postcss.config.js

Normal file

@@ -0,0 +1,6 @@
export default {
  plugins: {
    tailwindcss: {},
    autoprefixer: {},
  },
};

BIN

packages/cli/src/services/insight-page/public/qwen.png

Normal file

Binary file not shown.

|

After Width: | Height: | Size: 79 KiB |

395

packages/cli/src/services/insight-page/src/App.tsx

Normal file

@@ -0,0 +1,395 @@

|

||||

import { useEffect, useRef, useState, type CSSProperties } from 'react';

|

||||

import {

|

||||

Chart,

|

||||

LineController,

|

||||

LineElement,

|

||||

BarController,

|

||||

BarElement,

|

||||

CategoryScale,

|

||||

LinearScale,

|

||||

PointElement,

|

||||

Legend,

|

||||

Title,

|

||||

Tooltip,

|

||||

} from 'chart.js';

|

||||

import type { ChartConfiguration } from 'chart.js';

|

||||

import HeatMap from '@uiw/react-heat-map';

|

||||

import html2canvas from 'html2canvas';

|

||||

|

||||

// Register Chart.js components

|

||||

Chart.register(

|

||||

LineController,

|

||||

LineElement,

|

||||

BarController,

|

||||

BarElement,

|

||||

CategoryScale,

|

||||

LinearScale,

|

||||

PointElement,

|

||||

Legend,

|

||||

Title,

|

||||

Tooltip,

|

||||

);

|

||||

|

||||

interface UsageMetadata {

|

||||

input: number;

|

||||

output: number;

|

||||

total: number;

|

||||

}

|

||||

|

||||

interface InsightData {

|

||||

heatmap: { [date: string]: number };

|

||||

tokenUsage: { [date: string]: UsageMetadata };

|

||||

currentStreak: number;

|

||||

longestStreak: number;

|

||||

longestWorkDate: string | null;

|

||||

longestWorkDuration: number;

|

||||

activeHours: { [hour: number]: number };

|

||||

latestActiveTime: string | null;

|

||||

achievements: Array<{

|

||||

id: string;

|

||||

name: string;

|

||||

description: string;

|

||||

}>;

|

||||

}

|

||||

|

||||

function App() {

|

||||

const [insights, setInsights] = useState<InsightData | null>(null);

|

||||

const [loading, setLoading] = useState(true);

|

||||

const [error, setError] = useState<string | null>(null);

|

||||

const hourChartRef = useRef<HTMLCanvasElement>(null);

|

||||

const hourChartInstance = useRef<Chart | null>(null);

|

||||

const containerRef = useRef<HTMLDivElement>(null);

|

||||

|

||||

// Load insights data

|

||||

useEffect(() => {

|

||||

const loadInsights = async () => {

|

||||

try {

|

||||

setLoading(true);

|

||||

const response = await fetch('/api/insights');

|

||||

if (!response.ok) {

|

||||

throw new Error('Failed to fetch insights');

|

||||

}

|

||||

const data: InsightData = await response.json();

|

||||

setInsights(data);

|

||||

setError(null);

|

||||

} catch (err) {

|

||||

setError((err as Error).message);

|

||||

setInsights(null);

|

||||

} finally {

|

||||

setLoading(false);

|

||||

}

|

||||

};

|

||||

|

||||

loadInsights();

|

||||

}, []);

|

||||

|

||||

// Create hour chart when insights change

|

||||

useEffect(() => {

|

||||

if (!insights || !hourChartRef.current) return;

|

||||

|

||||

// Destroy existing chart if it exists

|

||||

if (hourChartInstance.current) {

|

||||

hourChartInstance.current.destroy();

|

||||

}

|

||||

|

||||

const labels = Array.from({ length: 24 }, (_, i) => `${i}:00`);

|

||||

const data = labels.map((_, i) => insights.activeHours[i] || 0);

|

||||

|

||||

const ctx = hourChartRef.current.getContext('2d');

|

||||

if (!ctx) return;

|

||||

|

||||

hourChartInstance.current = new Chart(ctx, {

|

||||

type: 'bar',

|

||||

data: {

|

||||

labels,

|

||||

datasets: [

|

||||

{

|

||||

label: 'Activity per Hour',

|

||||

data,

|

||||

backgroundColor: 'rgba(52, 152, 219, 0.7)',

|

||||

borderColor: 'rgba(52, 152, 219, 1)',

|

||||

borderWidth: 1,

|

||||

},

|

||||

],

|

||||

},

|

||||

options: {

|

||||

indexAxis: 'y',

|

||||

responsive: true,

|

||||

maintainAspectRatio: false,

|

||||

scales: {

|

||||

x: {

|

||||

beginAtZero: true,

|

||||

},

|

||||

},

|

||||

plugins: {

|

||||

legend: {

|

||||

display: false,

|

||||

},

|

||||

},

|

||||

} as ChartConfiguration['options'],

|

||||

});

|

||||

}, [insights]);

|

||||

|

||||

const handleExport = async () => {

|

||||

if (!containerRef.current) return;

|

||||

|

||||

try {

|

||||

const button = document.getElementById('export-btn') as HTMLButtonElement;

|

||||

button.style.display = 'none';

|

||||

|

||||

const canvas = await html2canvas(containerRef.current, {

|

||||

scale: 2,

|

||||

useCORS: true,

|

||||

logging: false,

|

||||

});

|

||||

|

||||

const imgData = canvas.toDataURL('image/png');

|

||||

const link = document.createElement('a');

|

||||

link.href = imgData;

|

||||

link.download = `qwen-insights-${new Date().toISOString().slice(0, 10)}.png`;

|

||||

link.click();

|

||||

|

||||

button.style.display = 'block';

|

||||

} catch (err) {

|

||||

console.error('Error capturing image:', err);

|

||||

alert('Failed to export image. Please try again.');

|

||||

}

|