Mirror of https://github.com/QwenLM/qwen-code.git (synced 2026-01-16 13:59:14 +00:00)

Compare commits: docs/code- ... mingholy/f (3 commits)

| SHA1 |
|---|
| da8c49cb9d |
| d7d3371ddf |
| 4213d06ab9 |

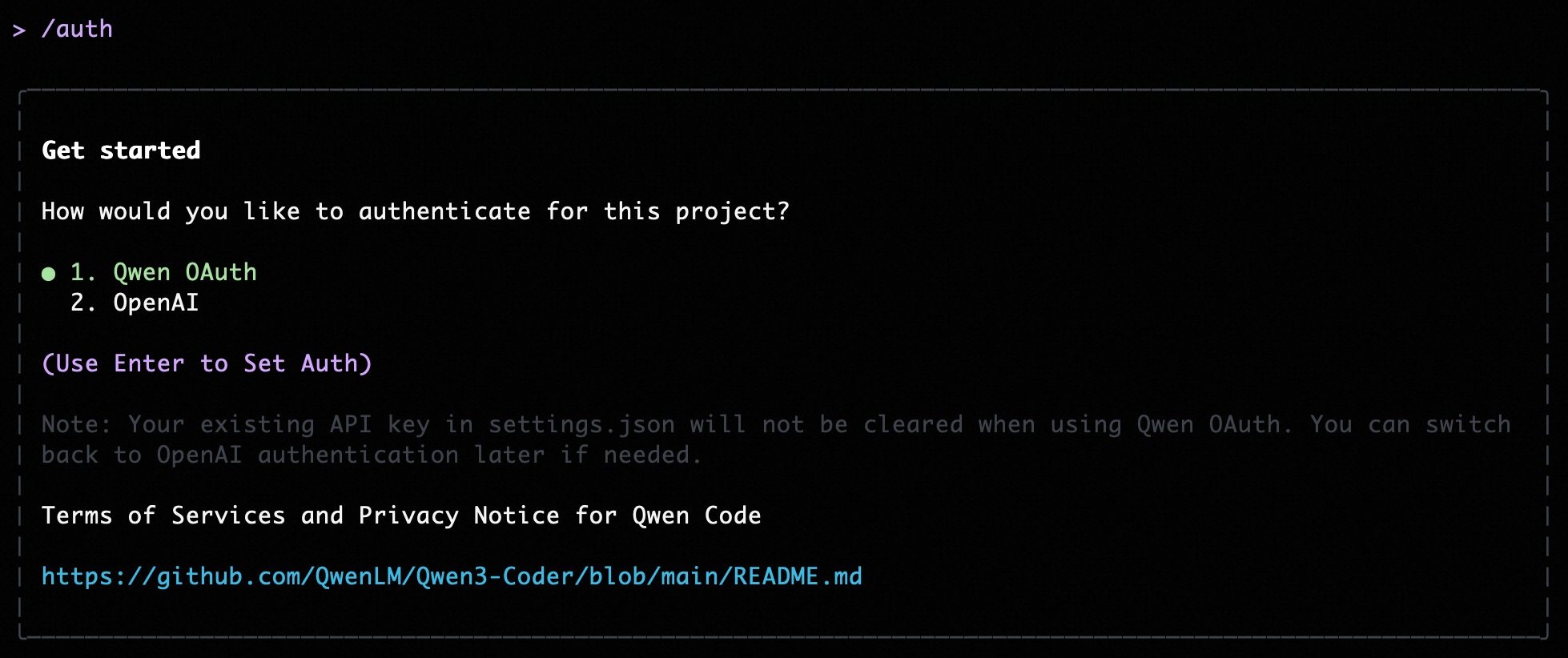

````diff
@@ -5,13 +5,11 @@ Qwen Code supports two authentication methods. Pick the one that matches how you
 - **Qwen OAuth (recommended)**: sign in with your `qwen.ai` account in a browser.
 - **OpenAI-compatible API**: use an API key (OpenAI or any OpenAI-compatible provider / endpoint).
 
-
-
 ## Option 1: Qwen OAuth (recommended & free) 👍
 
-Use this if you want the simplest setup and you're using Qwen models.
+Use this if you want the simplest setup and you’re using Qwen models.
 
-- **How it works**: on first start, Qwen Code opens a browser login page. After you finish, credentials are cached locally so you usually won't need to log in again.
+- **How it works**: on first start, Qwen Code opens a browser login page. After you finish, credentials are cached locally so you usually won’t need to log in again.
 - **Requirements**: a `qwen.ai` account + internet access (at least for the first login).
 - **Benefits**: no API key management, automatic credential refresh.
 - **Cost & quota**: free, with a quota of **60 requests/minute** and **2,000 requests/day**.
@@ -26,54 +24,15 @@ qwen
 
 Use this if you want to use OpenAI models or any provider that exposes an OpenAI-compatible API (e.g. OpenAI, Azure OpenAI, OpenRouter, ModelScope, Alibaba Cloud Bailian, or a self-hosted compatible endpoint).
 
-### Recommended: Coding Plan (subscription-based) 🚀
+### Quick start (interactive, recommended for local use)
 
-Use this if you want predictable costs with higher usage quotas for the qwen3-coder-plus model.
+When you choose the OpenAI-compatible option in the CLI, it will prompt you for:
 
-> [!IMPORTANT]
->
-> Coding Plan is only available for users in China mainland (Beijing region).
+- **API key**
+- **Base URL** (default: `https://api.openai.com/v1`)
+- **Model** (default: `gpt-4o`)
 
-- **How it works**: subscribe to the Coding Plan with a fixed monthly fee, then configure Qwen Code to use the dedicated endpoint and your subscription API key.
-- **Requirements**: an active Coding Plan subscription from [Alibaba Cloud Bailian](https://bailian.console.aliyun.com/cn-beijing/?tab=globalset#/efm/coding_plan).
-- **Benefits**: higher usage quotas, predictable monthly costs, access to latest qwen3-coder-plus model.
-- **Cost & quota**: varies by plan (see table below).
-
-#### Coding Plan Pricing & Quotas
-
-| Feature | Lite Basic Plan | Pro Advanced Plan |
-| :------------------ | :-------------------- | :-------------------- |
-| **Price** | ¥40/month | ¥200/month |
-| **5-Hour Limit** | Up to 1,200 requests | Up to 6,000 requests |
-| **Weekly Limit** | Up to 9,000 requests | Up to 45,000 requests |
-| **Monthly Limit** | Up to 18,000 requests | Up to 90,000 requests |
-| **Supported Model** | qwen3-coder-plus | qwen3-coder-plus |
-
-#### Quick Setup for Coding Plan
-
-When you select the OpenAI-compatible option in the CLI, enter these values:
-
-- **API key**: `sk-sp-xxxxx`
-- **Base URL**: `https://coding.dashscope.aliyuncs.com/v1`
-- **Model**: `qwen3-coder-plus`
-
-> **Note**: Coding Plan API keys have the format `sk-sp-xxxxx`, which is different from standard Alibaba Cloud API keys.
-
-#### Configure via Environment Variables
-
-Set these environment variables to use Coding Plan:
-
-```bash
-export OPENAI_API_KEY="your-coding-plan-api-key" # Format: sk-sp-xxxxx
-export OPENAI_BASE_URL="https://coding.dashscope.aliyuncs.com/v1"
-export OPENAI_MODEL="qwen3-coder-plus"
-```
-
-For more details about Coding Plan, including subscription options and troubleshooting, see the [full Coding Plan documentation](https://bailian.console.aliyun.com/cn-beijing/?tab=doc#/doc/?type=model&url=3005961).
-
-### Other OpenAI-compatible Providers
-
-If you are using other providers (OpenAI, Azure, local LLMs, etc.), use the following configuration methods.
+> **Note:** the CLI may display the key in plain text for verification. Make sure your terminal is not being recorded or shared.
 
 ### Configure via command-line arguments
 
````
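The Coding Plan notes tie a distinct key format (`sk-sp-...`) to a dedicated base URL. That makes a mismatched pair of `OPENAI_*` variables an easy mistake with the environment-variable route. The sketch below is a hypothetical pre-flight helper, not part of Qwen Code. It takes an env map and reports three kinds of problem:

- a missing variable;
- a Coding Plan endpoint paired with a key lacking the `sk-sp-` prefix;
- an `sk-sp-` key pointed at some other URL.

The prefix and URL come from the docs; the function name and messages are assumptions.

```typescript
// Hypothetical pre-flight check, not part of Qwen Code. The endpoint and key prefix
// are the Coding Plan values quoted in the docs.
type Env = Record<string, string | undefined>;

const CODING_PLAN_BASE_URL = "https://coding.dashscope.aliyuncs.com/v1";
const CODING_PLAN_KEY_PREFIX = "sk-sp-";
const REQUIRED_VARS = ["OPENAI_API_KEY", "OPENAI_BASE_URL", "OPENAI_MODEL"] as const;

/** Returns human-readable problems; an empty array means the variables look consistent. */
function checkCodingPlanEnv(env: Env): string[] {
  const problems: string[] = [];
  for (const name of REQUIRED_VARS) {
    if (!env[name]) problems.push(`${name} is not set`);
  }

  const key = env["OPENAI_API_KEY"] ?? "";
  // Ignore trailing slashes so ".../v1/" and ".../v1" compare equal.
  const baseUrl = (env["OPENAI_BASE_URL"] ?? "").replace(/\/+$/, "");
  const usesCodingEndpoint = baseUrl === CODING_PLAN_BASE_URL;
  const looksLikeCodingKey = key.startsWith(CODING_PLAN_KEY_PREFIX);

  if (usesCodingEndpoint && key !== "" && !looksLikeCodingKey) {
    problems.push(`OPENAI_BASE_URL is the Coding Plan endpoint but the key does not start with "${CODING_PLAN_KEY_PREFIX}"`);
  }
  if (looksLikeCodingKey && baseUrl !== "" && !usesCodingEndpoint) {
    problems.push(`the key looks like a Coding Plan key but OPENAI_BASE_URL is not ${CODING_PLAN_BASE_URL}`);
  }
  return problems;
}

// Example: the values from the Coding Plan quick setup.
console.log(
  checkCodingPlanEnv({
    OPENAI_API_KEY: "sk-sp-xxxxx",
    OPENAI_BASE_URL: "https://coding.dashscope.aliyuncs.com/v1",
    OPENAI_MODEL: "qwen3-coder-plus",
  }),
);
```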

`package-lock.json` (generated file, 12 changed lines)


```diff
@@ -1,12 +1,12 @@
 {
   "name": "@qwen-code/qwen-code",
-  "version": "0.7.1",
+  "version": "0.7.0",
   "lockfileVersion": 3,
   "requires": true,
   "packages": {
     "": {
       "name": "@qwen-code/qwen-code",
-      "version": "0.7.1",
+      "version": "0.7.0",
       "workspaces": [
         "packages/*"
       ],
@@ -17310,7 +17310,7 @@
     },
     "packages/cli": {
       "name": "@qwen-code/qwen-code",
-      "version": "0.7.1",
+      "version": "0.7.0",
       "dependencies": {
         "@google/genai": "1.30.0",
         "@iarna/toml": "^2.2.5",
@@ -17947,7 +17947,7 @@
     },
     "packages/core": {
       "name": "@qwen-code/qwen-code-core",
-      "version": "0.7.1",
+      "version": "0.7.0",
       "hasInstallScript": true,
       "dependencies": {
         "@anthropic-ai/sdk": "^0.36.1",
@@ -21408,7 +21408,7 @@
     },
     "packages/test-utils": {
       "name": "@qwen-code/qwen-code-test-utils",
-      "version": "0.7.1",
+      "version": "0.7.0",
       "dev": true,
       "license": "Apache-2.0",
       "devDependencies": {
@@ -21420,7 +21420,7 @@
     },
     "packages/vscode-ide-companion": {
       "name": "qwen-code-vscode-ide-companion",
-      "version": "0.7.1",
+      "version": "0.7.0",
       "license": "LICENSE",
       "dependencies": {
         "@modelcontextprotocol/sdk": "^1.25.1",
```

```diff
@@ -1,6 +1,6 @@
 {
   "name": "@qwen-code/qwen-code",
-  "version": "0.7.1",
+  "version": "0.7.0",
   "engines": {
     "node": ">=20.0.0"
   },
@@ -13,7 +13,7 @@
     "url": "git+https://github.com/QwenLM/qwen-code.git"
   },
   "config": {
-    "sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.1"
+    "sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.0"
   },
   "scripts": {
     "start": "cross-env node scripts/start.js",
```

```diff
@@ -1,6 +1,6 @@
 {
   "name": "@qwen-code/qwen-code",
-  "version": "0.7.1",
+  "version": "0.7.0",
   "description": "Qwen Code",
   "repository": {
     "type": "git",
@@ -33,7 +33,7 @@
     "dist"
   ],
   "config": {
-    "sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.1"
+    "sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.0"
   },
   "dependencies": {
     "@google/genai": "1.30.0",
```

```diff
@@ -874,10 +874,11 @@ export async function loadCliConfig(
     }
   };
 
-  // ACP mode check: must include both --acp (current) and --experimental-acp (deprecated).
-  // Without this check, edit, write_file, run_shell_command would be excluded in ACP mode.
-  const isAcpMode = argv.acp || argv.experimentalAcp;
-  if (!interactive && !isAcpMode && inputFormat !== InputFormat.STREAM_JSON) {
+  if (
+    !interactive &&
+    !argv.experimentalAcp &&
+    inputFormat !== InputFormat.STREAM_JSON
+  ) {
     switch (approvalMode) {
       case ApprovalMode.PLAN:
       case ApprovalMode.DEFAULT:
```
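The comment on the removed side of this hunk explains what `isAcpMode` guards against. If only `--experimental-acp` is checked, a session started with the current `--acp` flag falls into the non-interactive branch. The comment says edit, write_file and run_shell_command would then be excluded. A minimal sketch of the guard predicate follows. `CliArgs`, the string union for input formats and the function name are hypothetical; the real code compares against `InputFormat.STREAM_JSON` and then branches on `approvalMode`.

```typescript
// Hypothetical, trimmed-down argv shape; only the two ACP flags matter here.
interface CliArgs {
  acp?: boolean; // current flag: --acp
  experimentalAcp?: boolean; // deprecated flag: --experimental-acp
}
// Assumed input formats; the real code uses the InputFormat enum.
type InputFormat = "text" | "stream-json";

/**
 * True when the non-interactive approval-mode handling (the `switch (approvalMode)` block)
 * would run. ACP sessions are exempt whichever of the two flags started them.
 */
function entersNonInteractiveGuard(
  args: CliArgs,
  interactive: boolean,
  inputFormat: InputFormat,
): boolean {
  const isAcpMode = Boolean(args.acp || args.experimentalAcp);
  return !interactive && !isAcpMode && inputFormat !== "stream-json";
}

console.log(entersNonInteractiveGuard({ acp: true }, false, "text")); // false
console.log(entersNonInteractiveGuard({}, false, "text")); // true
```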

```diff
@@ -4,7 +4,11 @@
  * SPDX-License-Identifier: Apache-2.0
  */
 
-import type { Config, ModelProvidersConfig } from '@qwen-code/qwen-code-core';
+import type {
+  Config,
+  ContentGeneratorConfig,
+  ModelProvidersConfig,
+} from '@qwen-code/qwen-code-core';
 import {
   AuthEvent,
   AuthType,
@@ -214,11 +218,19 @@ export const useAuthCommand = (
 
       if (authType === AuthType.USE_OPENAI) {
         if (credentials) {
-          config.updateCredentials({
-            apiKey: credentials.apiKey,
-            baseUrl: credentials.baseUrl,
-            model: credentials.model,
-          });
+          // Pass settings.model.generationConfig to updateCredentials so it can be merged
+          // after clearing provider-sourced config. This ensures settings.json generationConfig
+          // fields (e.g., samplingParams, timeout) are preserved.
+          const settingsGenerationConfig = settings.merged.model
+            ?.generationConfig as Partial<ContentGeneratorConfig> | undefined;
+          config.updateCredentials(
+            {
+              apiKey: credentials.apiKey,
+              baseUrl: credentials.baseUrl,
+              model: credentials.model,
+            },
+            settingsGenerationConfig,
+          );
           await performAuth(authType, credentials);
         }
         return;
@@ -226,7 +238,13 @@ export const useAuthCommand = (
 
       await performAuth(authType);
     },
-    [config, performAuth, isProviderManagedModel, onAuthError],
+    [
+      config,
+      performAuth,
+      isProviderManagedModel,
+      onAuthError,
+      settings.merged.model?.generationConfig,
+    ],
   );
 
   const openAuthDialog = useCallback(() => {
```

```diff
@@ -275,7 +275,7 @@ export function ModelDialog({ onClose }: ModelDialogProps): React.JSX.Element {
       persistModelSelection(settings, effectiveModelId);
       persistAuthTypeSelection(settings, effectiveAuthType);
 
-      const baseUrl = after?.baseUrl ?? '(default)';
+      const baseUrl = after?.baseUrl ?? t('(default)');
       const maskedKey = maskApiKey(after?.apiKey);
       uiState?.historyManager.addItem(
         {
@@ -322,7 +322,7 @@ export function ModelDialog({ onClose }: ModelDialogProps): React.JSX.Element {
         <>
           <ConfigRow
             label="Base URL"
-            value={effectiveConfig?.baseUrl ?? ''}
+            value={effectiveConfig?.baseUrl ?? t('(default)')}
             badge={formatSourceBadge(sources['baseUrl'])}
           />
           <ConfigRow
```

```diff
@@ -44,20 +44,24 @@ export interface ResolvedCliGenerationConfig {
 }
 
 export function getAuthTypeFromEnv(): AuthType | undefined {
-  if (process.env['OPENAI_API_KEY']) {
+  if (process.env['OPENAI_API_KEY'] && process.env['OPENAI_MODEL']) {
     return AuthType.USE_OPENAI;
   }
   if (process.env['QWEN_OAUTH']) {
     return AuthType.QWEN_OAUTH;
   }
 
-  if (process.env['GEMINI_API_KEY']) {
+  if (process.env['GEMINI_API_KEY'] && process.env['GEMINI_MODEL']) {
     return AuthType.USE_GEMINI;
   }
-  if (process.env['GOOGLE_API_KEY']) {
+  if (process.env['GOOGLE_API_KEY'] && process.env['GOOGLE_MODEL']) {
     return AuthType.USE_VERTEX_AI;
   }
-  if (process.env['ANTHROPIC_API_KEY']) {
+  if (
+    process.env['ANTHROPIC_API_KEY'] &&
+    process.env['ANTHROPIC_MODEL'] &&
+    process.env['ANTHROPIC_BASE_URL']
+  ) {
     return AuthType.USE_ANTHROPIC;
   }
 
```
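On the added side of this hunk, an API key alone no longer selects a provider. The matching model variable must also be set, and for Anthropic the base URL too. A practical consequence: an `OPENAI_API_KEY` exported for some other tool no longer forces the OpenAI path. The sketch below mirrors that rule on a plain env map, in the same check order as the hunk. The string labels stand in for `AuthType` enum members and are illustrative only.

```typescript
// Illustrative labels; the real function returns AuthType enum members.
type DetectedAuth = "openai" | "qwen-oauth" | "gemini" | "vertex-ai" | "anthropic";
type Env = Record<string, string | undefined>;

/**
 * Sketch of the added-side rule: a provider is picked only when its key AND model are set
 * (Anthropic additionally needs a base URL). Checks run in the order shown in the diff.
 */
function detectAuthType(env: Env): DetectedAuth | undefined {
  if (env["OPENAI_API_KEY"] && env["OPENAI_MODEL"]) return "openai";
  if (env["QWEN_OAUTH"]) return "qwen-oauth";
  if (env["GEMINI_API_KEY"] && env["GEMINI_MODEL"]) return "gemini";
  if (env["GOOGLE_API_KEY"] && env["GOOGLE_MODEL"]) return "vertex-ai";
  if (env["ANTHROPIC_API_KEY"] && env["ANTHROPIC_MODEL"] && env["ANTHROPIC_BASE_URL"]) {
    return "anthropic";
  }
  return undefined;
}

// The removed side would have returned "openai" here because OPENAI_API_KEY is set.
console.log(detectAuthType({ OPENAI_API_KEY: "sk-test", QWEN_OAUTH: "1" })); // qwen-oauth
```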

```diff
@@ -1,6 +1,6 @@
 {
   "name": "@qwen-code/qwen-code-core",
-  "version": "0.7.1",
+  "version": "0.7.0",
   "description": "Qwen Code Core",
   "repository": {
     "type": "git",
```

```diff
@@ -404,7 +404,7 @@ export class Config {
   private toolRegistry!: ToolRegistry;
   private promptRegistry!: PromptRegistry;
   private subagentManager!: SubagentManager;
-  private skillManager: SkillManager | null = null;
+  private skillManager!: SkillManager;
   private fileSystemService: FileSystemService;
   private contentGeneratorConfig!: ContentGeneratorConfig;
   private contentGeneratorConfigSources: ContentGeneratorConfigSources = {};
@@ -672,10 +672,8 @@ export class Config {
     }
     this.promptRegistry = new PromptRegistry();
     this.subagentManager = new SubagentManager(this);
-    if (this.getExperimentalSkills()) {
-      this.skillManager = new SkillManager(this);
-      await this.skillManager.startWatching();
-    }
+    this.skillManager = new SkillManager(this);
+    await this.skillManager.startWatching();
 
     // Load session subagents if they were provided before initialization
     if (this.sessionSubagents.length > 0) {
@@ -708,12 +706,15 @@ export class Config {
    * Exclusive for `OpenAIKeyPrompt` to update credentials via `/auth`
    * Delegates to ModelsConfig.
    */
-  updateCredentials(credentials: {
-    apiKey?: string;
-    baseUrl?: string;
-    model?: string;
-  }): void {
-    this._modelsConfig.updateCredentials(credentials);
+  updateCredentials(
+    credentials: {
+      apiKey?: string;
+      baseUrl?: string;
+      model?: string;
+    },
+    settingsGenerationConfig?: Partial<ContentGeneratorConfig>,
+  ): void {
+    this._modelsConfig.updateCredentials(credentials, settingsGenerationConfig);
   }
 
   /**
@@ -1441,7 +1442,7 @@ export class Config {
     return this.subagentManager;
   }
 
-  getSkillManager(): SkillManager | null {
+  getSkillManager(): SkillManager {
     return this.skillManager;
   }
 
```

```diff
@@ -270,28 +270,28 @@ export function createContentGeneratorConfig(
 }
 
 export async function createContentGenerator(
-  generatorConfig: ContentGeneratorConfig,
-  config: Config,
+  config: ContentGeneratorConfig,
+  gcConfig: Config,
   isInitialAuth?: boolean,
 ): Promise<ContentGenerator> {
-  const validation = validateModelConfig(generatorConfig, false);
+  const validation = validateModelConfig(config, false);
   if (!validation.valid) {
     throw new Error(validation.errors.map((e) => e.message).join('\n'));
   }
 
-  const authType = generatorConfig.authType;
-  if (!authType) {
-    throw new Error('ContentGeneratorConfig must have an authType');
-  }
-
-  let baseGenerator: ContentGenerator;
-
-  if (authType === AuthType.USE_OPENAI) {
+  if (config.authType === AuthType.USE_OPENAI) {
     // Import OpenAIContentGenerator dynamically to avoid circular dependencies
     const { createOpenAIContentGenerator } = await import(
       './openaiContentGenerator/index.js'
     );
-    baseGenerator = createOpenAIContentGenerator(generatorConfig, config);
-  } else if (authType === AuthType.QWEN_OAUTH) {
+
+    // Always use OpenAIContentGenerator, logging is controlled by enableOpenAILogging flag
+    const generator = createOpenAIContentGenerator(config, gcConfig);
+    return new LoggingContentGenerator(generator, gcConfig);
+  }
+
+  if (config.authType === AuthType.QWEN_OAUTH) {
     // Import required classes dynamically
     const { getQwenOAuthClient: getQwenOauthClient } = await import(
       '../qwen/qwenOAuth2.js'
     );
@@ -300,38 +300,44 @@ export async function createContentGenerator(
     );
 
     try {
       // Get the Qwen OAuth client (now includes integrated token management)
       // If this is initial auth, require cached credentials to detect missing credentials
       const qwenClient = await getQwenOauthClient(
-        config,
+        gcConfig,
         isInitialAuth ? { requireCachedCredentials: true } : undefined,
       );
-      baseGenerator = new QwenContentGenerator(
-        qwenClient,
-        generatorConfig,
-        config,
-      );
+
+      // Create the content generator with dynamic token management
+      const generator = new QwenContentGenerator(qwenClient, config, gcConfig);
+      return new LoggingContentGenerator(generator, gcConfig);
     } catch (error) {
       throw new Error(
         `${error instanceof Error ? error.message : String(error)}`,
       );
     }
-  } else if (authType === AuthType.USE_ANTHROPIC) {
+  }
+
+  if (config.authType === AuthType.USE_ANTHROPIC) {
     const { createAnthropicContentGenerator } = await import(
       './anthropicContentGenerator/index.js'
     );
-    baseGenerator = createAnthropicContentGenerator(generatorConfig, config);
-  } else if (
-    authType === AuthType.USE_GEMINI ||
-    authType === AuthType.USE_VERTEX_AI
+
+    const generator = createAnthropicContentGenerator(config, gcConfig);
+    return new LoggingContentGenerator(generator, gcConfig);
+  }
+
+  if (
+    config.authType === AuthType.USE_GEMINI ||
+    config.authType === AuthType.USE_VERTEX_AI
   ) {
     const { createGeminiContentGenerator } = await import(
       './geminiContentGenerator/index.js'
     );
-    baseGenerator = createGeminiContentGenerator(generatorConfig, config);
-  } else {
-    throw new Error(
-      `Error creating contentGenerator: Unsupported authType: ${authType}`,
-    );
+    const generator = createGeminiContentGenerator(config, gcConfig);
+    return new LoggingContentGenerator(generator, gcConfig);
   }
 
-  return new LoggingContentGenerator(baseGenerator, config, generatorConfig);
+  throw new Error(
+    `Error creating contentGenerator: Unsupported authType: ${config.authType}`,
+  );
 }
```
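Both sides of this hunk dispatch on the auth type and wrap the chosen generator in `LoggingContentGenerator`. They differ in where the wrapping happens:

- The removed side collects a `baseGenerator` and wraps it exactly once at the end.
- The added side returns a wrapped generator from inside every branch.

The sketch below shows the single-wrap shape with stand-in types. `StubGenerator`, `LoggingWrapper` and the `Map` of factories are hypothetical, not Qwen Code classes. The real code uses a dynamic `import()` per branch and a richer `ContentGenerator` interface.

```typescript
// Stand-in interface; the real ContentGenerator has generateContent, streaming, etc.
interface ContentGenerator {
  describe(): string;
}

class StubGenerator implements ContentGenerator {
  private readonly provider: string;
  constructor(provider: string) {
    this.provider = provider;
  }
  describe(): string {
    return this.provider;
  }
}

// Decorator in the spirit of LoggingContentGenerator: same interface, extra behavior around `inner`.
class LoggingWrapper implements ContentGenerator {
  private readonly inner: ContentGenerator;
  constructor(inner: ContentGenerator) {
    this.inner = inner;
  }
  describe(): string {
    return `logging(${this.inner.describe()})`;
  }
}

// Hypothetical factory table keyed by auth type.
const factories = new Map<string, () => ContentGenerator>([
  ["openai", () => new StubGenerator("openai")],
  ["qwen-oauth", () => new StubGenerator("qwen")],
  ["anthropic", () => new StubGenerator("anthropic")],
  ["gemini", () => new StubGenerator("gemini")],
  ["vertex-ai", () => new StubGenerator("gemini")],
]);

function createGenerator(authType: string | undefined): ContentGenerator {
  if (!authType) {
    throw new Error("ContentGeneratorConfig must have an authType");
  }
  const factory = factories.get(authType);
  if (!factory) {
    throw new Error(`Error creating contentGenerator: Unsupported authType: ${authType}`);
  }
  // Single wrapping point: every provider gets the same decorator exactly once.
  return new LoggingWrapper(factory());
}

console.log(createGenerator("vertex-ai").describe()); // logging(gemini)
```

The two shapes trade off differently:

- With one wrapping point, a newly added provider cannot forget the decorator.
- Per-branch returns keep each branch self-contained and leave the final `throw` as the only fall-through. The cost is that the `LoggingContentGenerator` arguments are repeated in every branch.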

```diff
@@ -12,7 +12,6 @@ import type {
 import { GenerateContentResponse } from '@google/genai';
 import type { Config } from '../../config/config.js';
 import type { ContentGenerator } from '../contentGenerator.js';
-import { AuthType } from '../contentGenerator.js';
 import { LoggingContentGenerator } from './index.js';
 import { OpenAIContentConverter } from '../openaiContentGenerator/converter.js';
 import {
@@ -51,17 +50,14 @@ const convertGeminiResponseToOpenAISpy = vi
     choices: [],
   } as OpenAI.Chat.ChatCompletion);
 
-const createConfig = (overrides: Record<string, unknown> = {}): Config => {
-  const configContent = {
-    authType: 'openai',
-    enableOpenAILogging: false,
-    ...overrides,
-  };
-  return {
-    getContentGeneratorConfig: () => configContent,
-    getAuthType: () => configContent.authType as AuthType | undefined,
-  } as Config;
-};
+const createConfig = (overrides: Record<string, unknown> = {}): Config =>
+  ({
+    getContentGeneratorConfig: () => ({
+      authType: 'openai',
+      enableOpenAILogging: false,
+      ...overrides,
+    }),
+  }) as Config;
 
 const createWrappedGenerator = (
   generateContent: ContentGenerator['generateContent'],
@@ -128,17 +124,13 @@ describe('LoggingContentGenerator', () => {
       ),
       vi.fn(),
     );
-    const generatorConfig = {
-      model: 'test-model',
-      authType: AuthType.USE_OPENAI,
-      enableOpenAILogging: true,
-      openAILoggingDir: 'logs',
-      schemaCompliance: 'openapi_30' as const,
-    };
     const generator = new LoggingContentGenerator(
       wrapped,
-      createConfig(),
-      generatorConfig,
+      createConfig({
+        enableOpenAILogging: true,
+        openAILoggingDir: 'logs',
+        schemaCompliance: 'openapi_30',
+      }),
     );
 
     const request = {
@@ -233,15 +225,9 @@ describe('LoggingContentGenerator', () => {
       vi.fn().mockRejectedValue(error),
       vi.fn(),
     );
-    const generatorConfig = {
-      model: 'test-model',
-      authType: AuthType.USE_OPENAI,
-      enableOpenAILogging: true,
-    };
     const generator = new LoggingContentGenerator(
       wrapped,
-      createConfig(),
-      generatorConfig,
+      createConfig({ enableOpenAILogging: true }),
     );
 
     const request = {
@@ -307,15 +293,9 @@ describe('LoggingContentGenerator', () => {
         })(),
       ),
     );
-    const generatorConfig = {
-      model: 'test-model',
-      authType: AuthType.USE_OPENAI,
-      enableOpenAILogging: true,
-    };
     const generator = new LoggingContentGenerator(
       wrapped,
-      createConfig(),
-      generatorConfig,
+      createConfig({ enableOpenAILogging: true }),
     );
 
     const request = {
@@ -365,15 +345,9 @@ describe('LoggingContentGenerator', () => {
         })(),
       ),
     );
-    const generatorConfig = {
-      model: 'test-model',
-      authType: AuthType.USE_OPENAI,
-      enableOpenAILogging: true,
-    };
     const generator = new LoggingContentGenerator(
       wrapped,
-      createConfig(),
-      generatorConfig,
+      createConfig({ enableOpenAILogging: true }),
     );
 
     const request = {
```

```diff
@@ -31,10 +31,7 @@ import {
   logApiRequest,
   logApiResponse,
 } from '../../telemetry/loggers.js';
-import type {
-  ContentGenerator,
-  ContentGeneratorConfig,
-} from '../contentGenerator.js';
+import type { ContentGenerator } from '../contentGenerator.js';
 import { isStructuredError } from '../../utils/quotaErrorDetection.js';
 import { OpenAIContentConverter } from '../openaiContentGenerator/converter.js';
 import { OpenAILogger } from '../../utils/openaiLogger.js';
@@ -53,11 +50,9 @@ export class LoggingContentGenerator implements ContentGenerator {
   constructor(
     private readonly wrapped: ContentGenerator,
     private readonly config: Config,
-    generatorConfig: ContentGeneratorConfig,
   ) {
-    // Extract fields needed for initialization from passed config
-    // (config.getContentGeneratorConfig() may not be available yet during refreshAuth)
-    if (generatorConfig.enableOpenAILogging) {
+    const generatorConfig = this.config.getContentGeneratorConfig();
+    if (generatorConfig?.enableOpenAILogging) {
       this.openaiLogger = new OpenAILogger(generatorConfig.openAILoggingDir);
       this.schemaCompliance = generatorConfig.schemaCompliance;
     }
@@ -94,7 +89,7 @@ export class LoggingContentGenerator implements ContentGenerator {
         model,
         durationMs,
         prompt_id,
-        this.config.getAuthType(),
+        this.config.getContentGeneratorConfig()?.authType,
         usageMetadata,
         responseText,
       ),
@@ -131,7 +126,7 @@ export class LoggingContentGenerator implements ContentGenerator {
         errorMessage,
         durationMs,
         prompt_id,
-        this.config.getAuthType(),
+        this.config.getContentGeneratorConfig()?.authType,
         errorType,
         errorStatus,
       ),
```
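The removed constructor comment explains why the generator config was passed in explicitly: `config.getContentGeneratorConfig()` may not be available yet during `refreshAuth`. The sketch below reproduces that timing issue with hypothetical, trimmed-down types (`FakeConfig`, `LoggerFromGetter` and `LoggerFromArgument` are illustrations, not Qwen Code classes). A constructor that reads the shared config mid-refresh sees nothing; one that receives the pending config as an argument sees the new settings.

```typescript
// Hypothetical, trimmed-down shapes for illustration; not the real Config/ContentGeneratorConfig.
interface GeneratorConfig {
  authType?: string;
  enableOpenAILogging?: boolean;
  openAILoggingDir?: string;
}

class FakeConfig {
  // Stored only once the auth refresh completes.
  private current: GeneratorConfig | undefined = undefined;
  getContentGeneratorConfig(): GeneratorConfig | undefined {
    return this.current;
  }
  finishRefresh(cfg: GeneratorConfig): void {
    this.current = cfg;
  }
}

// Added side of the diff: read the config back from the shared Config object.
class LoggerFromGetter {
  readonly loggingEnabled: boolean;
  constructor(config: FakeConfig) {
    this.loggingEnabled = config.getContentGeneratorConfig()?.enableOpenAILogging ?? false;
  }
}

// Removed side: the caller hands over the generator config it is building right now.
class LoggerFromArgument {
  readonly loggingEnabled: boolean;
  constructor(_config: FakeConfig, generatorConfig: GeneratorConfig) {
    this.loggingEnabled = generatorConfig.enableOpenAILogging ?? false;
  }
}

const config = new FakeConfig();
const pending: GeneratorConfig = { authType: "openai", enableOpenAILogging: true };
// Mid-refresh: the new config exists but has not been stored on `config` yet.
console.log(new LoggerFromGetter(config).loggingEnabled); // false
console.log(new LoggerFromArgument(config, pending).loggingEnabled); // true
config.finishRefresh(pending);
console.log(new LoggerFromGetter(config).loggingEnabled); // true
```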

```diff
@@ -191,7 +191,7 @@ describe('ModelsConfig', () => {
     expect(gc.apiKeyEnvKey).toBe('API_KEY_SHARED');
   });
 
-  it('should preserve settings generationConfig when model is updated via updateCredentials even if it matches modelProviders', () => {
+  it('should use provider config when modelId exists in registry even after updateCredentials', () => {
     const modelProvidersConfig: ModelProvidersConfig = {
       openai: [
         {
@@ -213,7 +213,7 @@ describe('ModelsConfig', () => {
       initialAuthType: AuthType.USE_OPENAI,
       modelProvidersConfig,
       generationConfig: {
-        model: 'model-a',
+        model: 'custom-model',
         samplingParams: { temperature: 0.9, max_tokens: 999 },
         timeout: 9999,
         maxRetries: 9,
@@ -235,30 +235,30 @@ describe('ModelsConfig', () => {
       },
     });
 
-    // User manually updates the model via updateCredentials (e.g. key prompt flow).
-    // Even if the model ID matches a modelProviders entry, we must not apply provider defaults
-    // that would overwrite settings.model.generationConfig.
-    modelsConfig.updateCredentials({ model: 'model-a' });
+    // User manually updates credentials via updateCredentials.
+    // Note: In practice, handleAuthSelect prevents using a modelId that matches a provider model,
+    // but if syncAfterAuthRefresh is called with a modelId that exists in registry,
+    // we should use provider config.
+    modelsConfig.updateCredentials({ apiKey: 'manual-key' });
 
-    modelsConfig.syncAfterAuthRefresh(
-      AuthType.USE_OPENAI,
-      modelsConfig.getModel(),
-    );
+    // syncAfterAuthRefresh with a modelId that exists in registry should use provider config
+    modelsConfig.syncAfterAuthRefresh(AuthType.USE_OPENAI, 'model-a');
 
     const gc = currentGenerationConfig(modelsConfig);
     expect(gc.model).toBe('model-a');
-    expect(gc.samplingParams?.temperature).toBe(0.9);
-    expect(gc.samplingParams?.max_tokens).toBe(999);
-    expect(gc.timeout).toBe(9999);
-    expect(gc.maxRetries).toBe(9);
+    // Provider config should be applied
+    expect(gc.samplingParams?.temperature).toBe(0.1);
+    expect(gc.samplingParams?.max_tokens).toBe(123);
+    expect(gc.timeout).toBe(111);
+    expect(gc.maxRetries).toBe(1);
   });
 
-  it('should preserve settings generationConfig across multiple auth refreshes after updateCredentials', () => {
+  it('should preserve settings generationConfig when modelId does not exist in registry', () => {
     const modelProvidersConfig: ModelProvidersConfig = {
       openai: [
         {
-          id: 'model-a',
-          name: 'Model A',
+          id: 'provider-model',
+          name: 'Provider Model',
           baseUrl: 'https://api.example.com/v1',
           envKey: 'API_KEY_A',
           generationConfig: {
@@ -270,11 +270,12 @@ describe('ModelsConfig', () => {
       ],
     };
 
+    // Simulate settings with a custom model (not in registry)
     const modelsConfig = new ModelsConfig({
       initialAuthType: AuthType.USE_OPENAI,
       modelProvidersConfig,
       generationConfig: {
-        model: 'model-a',
+        model: 'custom-model',
         samplingParams: { temperature: 0.9, max_tokens: 999 },
         timeout: 9999,
         maxRetries: 9,
@@ -296,25 +297,21 @@ describe('ModelsConfig', () => {
       },
     });
 
+    // User manually sets credentials for a custom model (not in registry)
     modelsConfig.updateCredentials({
       apiKey: 'manual-key',
       baseUrl: 'https://manual.example.com/v1',
-      model: 'model-a',
+      model: 'custom-model',
     });
 
-    // First auth refresh
-    modelsConfig.syncAfterAuthRefresh(
-      AuthType.USE_OPENAI,
-      modelsConfig.getModel(),
-    );
+    // First auth refresh - modelId doesn't exist in registry, so credentials should be preserved
+    modelsConfig.syncAfterAuthRefresh(AuthType.USE_OPENAI, 'custom-model');
     // Second auth refresh should still preserve settings generationConfig
-    modelsConfig.syncAfterAuthRefresh(
-      AuthType.USE_OPENAI,
-      modelsConfig.getModel(),
-    );
+    modelsConfig.syncAfterAuthRefresh(AuthType.USE_OPENAI, 'custom-model');
 
     const gc = currentGenerationConfig(modelsConfig);
-    expect(gc.model).toBe('model-a');
+    expect(gc.model).toBe('custom-model');
+    // Settings-sourced generation config should be preserved since modelId doesn't exist in registry
     expect(gc.samplingParams?.temperature).toBe(0.9);
     expect(gc.samplingParams?.max_tokens).toBe(999);
     expect(gc.timeout).toBe(9999);
```
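The two rewritten tests pin down the fallback order that the new `syncAfterAuthRefresh` docstring (later in this diff) spells out:

- A model ID found in the provider registry gets the provider's values (temperature 0.1, timeout 111).
- An unregistered `custom-model` keeps the settings-sourced values (0.9, 9999) across repeated refreshes.

A minimal sketch of that four-step order follows. The flattened `ModelState`, the `credentialsFromProvider` flag and the two `Map` arguments are hypothetical simplifications, not the real `ModelsConfig` API. The real class tracks a source for each field and more settings.

```typescript
// Hypothetical, flattened shapes; the real ModelsConfig keeps per-field sources and more fields.
interface GenerationConfig {
  model?: string;
  apiKey?: string;
  baseUrl?: string;
  temperature?: number;
  timeout?: number;
}

interface ModelState {
  authType: string;
  config: GenerationConfig;
  // True when the credentials came from a modelProviders entry (not settings, env or CLI).
  credentialsFromProvider: boolean;
}

function syncAfterAuthRefresh(
  state: ModelState,
  authType: string,
  modelId: string | undefined,
  registry: Map<string, GenerationConfig>,
  defaultModels: Map<string, string>,
): ModelState {
  // 1. modelId is a registered provider model: apply that entry as a whole.
  const entry = modelId !== undefined ? registry.get(modelId) : undefined;
  if (entry !== undefined) {
    return { authType, config: { ...entry, model: modelId }, credentialsFromProvider: true };
  }
  // 2. Credentials already present from another source: keep them, update authType/model only.
  if (state.config.apiKey !== undefined && !state.credentialsFromProvider) {
    return { ...state, authType, config: { ...state.config, model: modelId ?? state.config.model } };
  }
  // 3. Fall back to the default model for this auth type, if one exists.
  const fallback = defaultModels.get(authType);
  if (fallback !== undefined) {
    return { ...state, authType, config: { ...state.config, model: fallback } };
  }
  // 4. Leave the config incomplete; later validation is expected to report what is missing.
  return { ...state, authType };
}

// Mirrors the two tests: 'model-a' is registered, 'custom-model' is not.
const registry = new Map<string, GenerationConfig>([
  ["model-a", { baseUrl: "https://api.example.com/v1", temperature: 0.1, timeout: 111 }],
]);
const afterUpdate: ModelState = {
  authType: "openai",
  config: { model: "custom-model", apiKey: "manual-key", temperature: 0.9, timeout: 9999 },
  credentialsFromProvider: false,
};
console.log(syncAfterAuthRefresh(afterUpdate, "openai", "model-a", registry, new Map()).config.temperature); // 0.1
console.log(syncAfterAuthRefresh(afterUpdate, "openai", "custom-model", registry, new Map()).config.temperature); // 0.9
```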

```diff
@@ -307,6 +307,33 @@ export class ModelsConfig {
     return this.generationConfigSources;
   }
 
+  /**
+   * Merge settings generation config, preserving existing values.
+   * Used when provider-sourced config is cleared but settings should still apply.
+   */
+  mergeSettingsGenerationConfig(
+    settingsGenerationConfig?: Partial<ContentGeneratorConfig>,
+  ): void {
+    if (!settingsGenerationConfig) {
+      return;
+    }
+
+    for (const field of MODEL_GENERATION_CONFIG_FIELDS) {
+      if (
+        !(field in this._generationConfig) &&
+        field in settingsGenerationConfig
+      ) {
+        // eslint-disable-next-line @typescript-eslint/no-explicit-any
+        (this._generationConfig as any)[field] =
+          settingsGenerationConfig[field];
+        this.generationConfigSources[field] = {
+          kind: 'settings',
+          detail: `model.generationConfig.${field}`,
+        };
+      }
+    }
+  }
+
   /**
    * Update credentials in generation config.
    * Sets a flag to prevent syncAfterAuthRefresh from overriding these credentials.
@@ -314,12 +341,20 @@ export class ModelsConfig {
    * When credentials are manually set, we clear all provider-sourced configuration
    * to maintain provider atomicity (either fully applied or not at all).
    * Other layers (CLI, env, settings, defaults) will participate in resolve.
+   *
+   * @param settingsGenerationConfig Optional generation config from settings.json
+   * to merge after clearing provider-sourced config.
+   * This ensures settings.model.generationConfig fields
+   * (e.g., samplingParams, timeout) are preserved.
    */
-  updateCredentials(credentials: {
-    apiKey?: string;
-    baseUrl?: string;
-    model?: string;
-  }): void {
+  updateCredentials(
+    credentials: {
+      apiKey?: string;
+      baseUrl?: string;
+      model?: string;
+    },
+    settingsGenerationConfig?: Partial<ContentGeneratorConfig>,
+  ): void {
     /**
      * If any fields are updated here, we treat the resulting config as manually overridden
      * and avoid applying modelProvider defaults during the next auth refresh.
@@ -359,6 +394,14 @@ export class ModelsConfig {
     this.strictModelProviderSelection = false;
     // Clear apiKeyEnvKey to prevent validation from requiring environment variable
     this._generationConfig.apiKeyEnvKey = undefined;
+
+    // After clearing provider-sourced config, merge settings.model.generationConfig
+    // to ensure fields like samplingParams, timeout, etc. are preserved.
+    // This follows the resolution strategy where settings.model.generationConfig
+    // has lower priority than programmatic overrides but should still be applied.
+    if (settingsGenerationConfig) {
+      this.mergeSettingsGenerationConfig(settingsGenerationConfig);
+    }
   }
 
   /**
```

@@ -587,50 +630,88 @@ export class ModelsConfig {

|

||||

}

|

||||

|

||||

/**

|

||||

* Called by Config.refreshAuth to sync state after auth refresh.

|

||||

*

|

||||

* IMPORTANT: If credentials were manually set via updateCredentials(),

|

||||

* we should NOT override them with modelProvider defaults.

|

||||

* This handles the case where user inputs credentials via OpenAIKeyPrompt

|

||||

* after removing environment variables for a previously selected model.

|

||||

* Sync state after auth refresh with fallback strategy:

|

||||

* 1. If modelId can be found in modelRegistry, use the config from modelRegistry.

|

||||

* 2. Otherwise, if existing credentials exist in resolved generationConfig from other sources

|

||||

* (not modelProviders), preserve them and update authType/modelId only.

|

||||

* 3. Otherwise, fall back to default model for the authType.

|

||||

* 4. If no default is available, leave the generationConfig incomplete and let

|

||||

* resolveContentGeneratorConfigWithSources throw exceptions as expected.

|

||||

*/

|

||||

syncAfterAuthRefresh(authType: AuthType, modelId?: string): void {

|

||||

// Check if we have manually set credentials that should be preserved

|

||||

const preserveManualCredentials = this.hasManualCredentials;

|

||||

this.strictModelProviderSelection = false;

|

||||

const previousAuthType = this.currentAuthType;

|

||||

this.currentAuthType = authType;

|

||||

|

||||

// If credentials were manually set, don't apply modelProvider defaults

|

||||

// Just update the authType and preserve the manually set credentials

|

||||

if (preserveManualCredentials && authType === AuthType.USE_OPENAI) {

|

||||

this.strictModelProviderSelection = false;

|

||||

this.currentAuthType = authType;

|

||||

// Step 1: If modelId exists in registry, always use config from modelRegistry

|

||||

// Manual credentials won't have a modelId that matches a provider model (handleAuthSelect prevents it),

|

||||

// so if modelId exists in registry, we should always use provider config.

|

||||

// This handles provider switching even within the same authType.

|

||||

if (modelId && this.modelRegistry.hasModel(authType, modelId)) {

|

||||

const resolved = this.modelRegistry.getModel(authType, modelId);

|

||||

if (resolved) {

|

||||

this.applyResolvedModelDefaults(resolved);

|

||||

this.strictModelProviderSelection = true;

|

||||

return;

|

||||

}

|

||||

}

|

||||

|

||||

// Step 2: Check if there are existing credentials from other sources (not modelProviders)

|

||||

const apiKeySource = this.generationConfigSources['apiKey'];

|

||||

const baseUrlSource = this.generationConfigSources['baseUrl'];

|

||||

const hasExistingCredentials =

|

||||

(this._generationConfig.apiKey &&

|

||||

apiKeySource?.kind !== 'modelProviders') ||

|

||||

(this._generationConfig.baseUrl &&

|

||||

baseUrlSource?.kind !== 'modelProviders');

|

||||

|

||||

// Only preserve credentials if:

|

||||

// 1. AuthType hasn't changed (credentials are authType-specific), AND

|

||||

// 2. The modelId doesn't exist in the registry (if it did, we would have used provider config in Step 1), AND

|

||||

// 3. Either:

|

||||

// a. We have manual credentials (set via updateCredentials), OR

|

||||

// b. We have existing credentials

|

||||

// Note: Even if authType hasn't changed, switching to a different provider model (that exists in registry)

|

||||

// will use provider config (Step 1), not preserve old credentials. This ensures credentials change when

|

||||

// switching providers, independent of authType changes.

|

||||

const isAuthTypeChange = previousAuthType !== authType;

|

||||

const shouldPreserveCredentials =

|

||||

!isAuthTypeChange &&

|

||||

(modelId === undefined ||

|

||||

!this.modelRegistry.hasModel(authType, modelId)) &&

|

||||

(this.hasManualCredentials || hasExistingCredentials);

|

||||

|

||||

if (shouldPreserveCredentials) {

|

||||

// Preserve existing credentials, just update authType and modelId if provided

|

||||

if (modelId) {

|

||||

this._generationConfig.model = modelId;

|

||||

if (!this.generationConfigSources['model']) {

|

||||

this.generationConfigSources['model'] = {

|

||||

kind: 'programmatic',

|

||||

detail: 'auth refresh (preserved credentials)',

|

||||

};

|

||||

}

|

||||

}

|

||||

return;

|

||||

}

|

||||

|

||||

this.strictModelProviderSelection = false;

|

||||

// Step 3: Fall back to default model for the authType

|

||||

const defaultModel =

|

||||

this.modelRegistry.getDefaultModelForAuthType(authType);

|

||||

if (defaultModel) {

|

||||

this.applyResolvedModelDefaults(defaultModel);

|

||||

return;

|

||||

}

|

||||

|

||||

if (modelId && this.modelRegistry.hasModel(authType, modelId)) {

|

||||

const resolved = this.modelRegistry.getModel(authType, modelId);

|

||||

if (resolved) {

|

||||

// Ensure applyResolvedModelDefaults can correctly apply authType-specific

|

||||

// behavior (e.g., Qwen OAuth placeholder token) by setting currentAuthType

|

||||

// before applying defaults.

|

||||

this.currentAuthType = authType;

|

||||

this.applyResolvedModelDefaults(resolved);

|

||||

}

|

||||

} else {

|

||||

// If the provided modelId doesn't exist in the registry for the new authType,

|

||||

// use the default model for that authType instead of keeping the old model.

|

||||

// This handles the case where switching from one authType (e.g., OPENAI with

|

||||

// env vars) to another (e.g., qwen-oauth) - we should use the default model

|

||||

// for the new authType, not the old model.

|

||||

this.currentAuthType = authType;

|

||||

const defaultModel =

|

||||

this.modelRegistry.getDefaultModelForAuthType(authType);

|

||||

if (defaultModel) {

|

||||

this.applyResolvedModelDefaults(defaultModel);

|

||||

// Step 4: No default available - leave generationConfig incomplete

|

||||

// resolveContentGeneratorConfigWithSources will throw exceptions as expected

|

||||

if (modelId) {

|

||||

this._generationConfig.model = modelId;

|

||||

if (!this.generationConfigSources['model']) {

|

||||

this.generationConfigSources['model'] = {

|

||||

kind: 'programmatic',

|

||||

detail: 'auth refresh (no default model)',

|

||||

};

|

||||

}

|

||||

}

|

||||

}

|

||||

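A minimal sketch of the "fill only missing fields" pattern used by `mergeSettingsGenerationConfig`: a settings value is copied only when the field is absent from the current generation config, and that field's source is then recorded as `settings`. The types `GenConfig` and `SourceInfo`, the `FIELDS` list, and the helpers `setField` and `mergeMissingFromSettings` are hypothetical simplifications. They are not the project's `ContentGeneratorConfig` or `MODEL_GENERATION_CONFIG_FIELDS`.

```typescript
// Hypothetical, simplified stand-in for ContentGeneratorConfig.
interface GenConfig {
  model?: string;
  timeout?: number;
  samplingParams?: { temperature?: number };
}

// Hypothetical record of which configuration layer supplied a field.
interface SourceInfo {
  kind: 'settings' | 'modelProviders' | 'programmatic';
  detail: string;
}

// Hypothetical list of fields that settings are allowed to contribute.
const FIELDS = ['timeout', 'samplingParams'] as const;

// Typed assignment helper so a union-typed key can be written without `any`.
function setField<K extends keyof GenConfig>(
  target: GenConfig,
  key: K,
  value: GenConfig[K],
): void {
  target[key] = value;
}

function mergeMissingFromSettings(
  target: GenConfig,
  sources: Record<string, SourceInfo>,
  settings?: Partial<GenConfig>,
): void {
  if (!settings) {
    return;
  }
  for (const field of FIELDS) {
    // Fill a field only if no other layer has set it and settings provide it,
    // so values from higher-priority layers are never overwritten.
    if (!(field in target) && field in settings) {
      setField(target, field, settings[field]);
      sources[field] = {
        kind: 'settings',
        detail: `model.generationConfig.${field}`,
      };
    }
  }
}
```

Because the check is `field in target` (key presence) rather than a comparison with `undefined`, a key that another layer explicitly set to `undefined` still blocks the settings value. The sketch mirrors the hunk in that respect.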

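The fallback order documented for `syncAfterAuthRefresh` can be read as a pure decision function. The sketch below assumes a hypothetical `Registry` interface and `Decision` type. It collapses the manual-credential and existing-credential checks into one `hasNonProviderCredentials` flag and leaves out the source bookkeeping and `strictModelProviderSelection` handling of the real method.

```typescript
// Hypothetical, simplified view of the model registry consulted on auth refresh.
interface Registry {
  hasModel(authType: string, modelId: string): boolean;
  getDefaultModelForAuthType(authType: string): string | undefined;
}

// Hypothetical outcome type: one variant per documented fallback step.
type Decision =
  | { step: 1; useRegistryModel: string }
  | { step: 2; preserveCredentials: true; model?: string }
  | { step: 3; useDefaultModel: string }
  | { step: 4; leaveIncomplete: true; model?: string };

function decideAfterAuthRefresh(
  registry: Registry,
  authType: string,
  previousAuthType: string | undefined,
  hasNonProviderCredentials: boolean,
  modelId?: string,
): Decision {
  // Step 1: a model known to the registry always wins.
  if (modelId && registry.hasModel(authType, modelId)) {
    return { step: 1, useRegistryModel: modelId };
  }
  // Step 2: keep credentials from non-provider layers, but only when the
  // authType is unchanged (credentials are authType-specific).
  if (previousAuthType === authType && hasNonProviderCredentials) {
    return { step: 2, preserveCredentials: true, model: modelId };
  }
  // Step 3: fall back to the default model for this authType.
  const fallback = registry.getDefaultModelForAuthType(authType);
  if (fallback) {
    return { step: 3, useDefaultModel: fallback };
  }
  // Step 4: nothing to apply; later config resolution is expected to fail loudly.
  return { step: 4, leaveIncomplete: true, model: modelId };
}
```

Returning a decision instead of mutating state keeps each step testable on its own; in the hunk, the equivalent branches call `applyResolvedModelDefaults` or write `_generationConfig.model` directly.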
@@ -751,6 +751,7 @@ describe('getQwenOAuthClient', () => {
beforeEach(() => {
mockConfig = {
isBrowserLaunchSuppressed: vi.fn().mockReturnValue(false),
isInteractive: vi.fn().mockReturnValue(true),
} as unknown as Config;

originalFetch = global.fetch;
@@ -839,9 +840,7 @@ describe('getQwenOAuthClient', () => {
requireCachedCredentials: true,
}),
),
).rejects.toThrow(
'No cached Qwen-OAuth credentials found. Please re-authenticate.',
);
).rejects.toThrow('Please use /auth to re-authenticate.');

expect(global.fetch).not.toHaveBeenCalled();

@@ -1007,6 +1006,7 @@ describe('getQwenOAuthClient - Enhanced Error Scenarios', () => {
beforeEach(() => {
mockConfig = {
isBrowserLaunchSuppressed: vi.fn().mockReturnValue(false),
isInteractive: vi.fn().mockReturnValue(true),
} as unknown as Config;

originalFetch = global.fetch;
@@ -1202,6 +1202,7 @@ describe('authWithQwenDeviceFlow - Comprehensive Testing', () => {
beforeEach(() => {
mockConfig = {
isBrowserLaunchSuppressed: vi.fn().mockReturnValue(false),
isInteractive: vi.fn().mockReturnValue(true),
} as unknown as Config;

originalFetch = global.fetch;
@@ -1405,6 +1406,7 @@ describe('Browser Launch and Error Handling', () => {
beforeEach(() => {
mockConfig = {
isBrowserLaunchSuppressed: vi.fn().mockReturnValue(false),
isInteractive: vi.fn().mockReturnValue(true),
} as unknown as Config;

originalFetch = global.fetch;
@@ -2043,6 +2045,7 @@ describe('SharedTokenManager Integration in QwenOAuth2Client', () => {
it('should handle TokenManagerError types correctly in getQwenOAuthClient', async () => {
const mockConfig = {
isBrowserLaunchSuppressed: vi.fn().mockReturnValue(true),
isInteractive: vi.fn().mockReturnValue(true),
} as unknown as Config;

// Test different TokenManagerError types

@@ -516,9 +516,7 @@ export async function getQwenOAuthClient(
}

if (options?.requireCachedCredentials) {
throw new Error(
'No cached Qwen-OAuth credentials found. Please re-authenticate.',
);
throw new Error('Please use /auth to re-authenticate.');
}

// If we couldn't obtain valid credentials via SharedTokenManager, fall back to
@@ -740,11 +738,9 @@ async function authWithQwenDeviceFlow(
// Emit device authorization event for UI integration immediately
qwenOAuth2Events.emit(QwenOAuth2Event.AuthUri, deviceAuth);

// Always show the fallback message in non-interactive environments to ensure
// users can see the authorization URL even if browser launching is attempted.
// This is critical for headless/remote environments where browser launching
// may silently fail without throwing an error.
showFallbackMessage(deviceAuth.verification_uri_complete);
if (config.isBrowserLaunchSuppressed() || !config.isInteractive()) {
showFallbackMessage(deviceAuth.verification_uri_complete);
}

// Try to open browser if not suppressed
if (!config.isBrowserLaunchSuppressed()) {

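One side of the `authWithQwenDeviceFlow` hunk prints the verification URL unconditionally; the other prints it only when browser launching is suppressed or the session is not interactive, and in both cases still tries to open a browser whenever launching is not suppressed. A minimal sketch of the conditional variant, assuming a hypothetical `AuthUiConfig` that exposes only the two `Config` predicates used in the diff, and returning the planned actions instead of performing them:

```typescript
// Hypothetical subset of Config: only the two predicates the device flow consults.
interface AuthUiConfig {
  isBrowserLaunchSuppressed(): boolean;
  isInteractive(): boolean;
}

type UiAction = 'showFallbackMessage' | 'openBrowser';

// Returns, in order, the UI actions the conditional variant would take.
function planDeviceAuthUi(config: AuthUiConfig): UiAction[] {
  const actions: UiAction[] = [];
  // Print the verification URL when no browser will be launched,
  // or when the session is not interactive.
  if (config.isBrowserLaunchSuppressed() || !config.isInteractive()) {
    actions.push('showFallbackMessage');
  }
  // Attempt a browser launch whenever it is not suppressed.
  if (!config.isBrowserLaunchSuppressed()) {
    actions.push('openBrowser');
  }
  return actions;
}
```

With this gating, a non-interactive session that is allowed to launch a browser gets both the printed URL and a launch attempt, which covers a launch that fails silently.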
@@ -235,7 +235,6 @@ export class SkillManager {
}

this.watchStarted = true;
await this.ensureUserSkillsDir();
await this.refreshCache();
this.updateWatchersFromCache();
}
@@ -487,14 +486,29 @@ export class SkillManager {
}

private updateWatchersFromCache(): void {
const watchTargets = new Set<string>(
(['project', 'user'] as const)
.map((level) => this.getSkillsBaseDir(level))
.filter((baseDir) => fsSync.existsSync(baseDir)),
);
const desiredPaths = new Set<string>();

for (const level of ['project', 'user'] as const) {
const baseDir = this.getSkillsBaseDir(level);
const parentDir = path.dirname(baseDir);
if (fsSync.existsSync(parentDir)) {
desiredPaths.add(parentDir);
}
if (fsSync.existsSync(baseDir)) {
desiredPaths.add(baseDir);
}

const levelSkills = this.skillsCache?.get(level) || [];
for (const skill of levelSkills) {
const skillDir = path.dirname(skill.filePath);
if (fsSync.existsSync(skillDir)) {
desiredPaths.add(skillDir);
}
}
}

for (const existingPath of this.watchers.keys()) {
if (!watchTargets.has(existingPath)) {
if (!desiredPaths.has(existingPath)) {
void this.watchers
.get(existingPath)
?.close()
@@ -508,7 +522,7 @@ export class SkillManager {
}
}

for (const watchPath of watchTargets) {
for (const watchPath of desiredPaths) {
if (this.watchers.has(watchPath)) {
continue;
}
@@ -543,16 +557,4 @@ export class SkillManager {
void this.refreshCache().then(() => this.updateWatchersFromCache());
}, 150);
}

private async ensureUserSkillsDir(): Promise<void> {
const baseDir = this.getSkillsBaseDir('user');
try {
await fs.mkdir(baseDir, { recursive: true });
} catch (error) {
console.warn(
`Failed to create user skills directory at ${baseDir}:`,
error,
);
}
}
}

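`updateWatchersFromCache` collects the directories worth watching (each level's parent and skills directory, plus the directory of every cached skill, when they exist) and then reconciles the open watchers against that set. The sketch below splits those two steps into pure functions. `collectDesiredPaths`, `planWatcherUpdate`, and the injected `exists` predicate are hypothetical, skills are flattened across levels, and no real watchers are created.

```typescript
import * as path from 'node:path';

interface WatcherPlan {
  toClose: string[];
  toOpen: string[];
}

// Gather every existing directory that should be watched.
function collectDesiredPaths(
  baseDirs: string[],
  skillFiles: string[],
  exists: (p: string) => boolean,
): Set<string> {
  const desired = new Set<string>();
  for (const baseDir of baseDirs) {
    // Watch both the parent directory and the skills directory itself.
    for (const candidate of [path.dirname(baseDir), baseDir]) {
      if (exists(candidate)) desired.add(candidate);
    }
  }
  for (const file of skillFiles) {
    const dir = path.dirname(file);
    if (exists(dir)) desired.add(dir);
  }
  return desired;
}

// Compare currently watched paths with the desired set.
function planWatcherUpdate(
  existing: Iterable<string>,
  desired: Iterable<string>,
): WatcherPlan {
  const existingSet = new Set(existing);
  const desiredSet = new Set(desired);
  // Watchers on paths that are no longer desired should be closed...
  const toClose = [...existingSet].filter((p) => !desiredSet.has(p));
  // ...and desired paths without a watcher should get one.
  const toOpen = [...desiredSet].filter((p) => !existingSet.has(p));
  return { toClose, toOpen };
}
```

Injecting `exists` instead of calling `fsSync.existsSync` keeps the sketch free of filesystem access. Watching the parent directory as well is presumably what lets a skills directory that is created later be noticed, though the hunk does not say so.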
@@ -53,7 +53,7 @@ export class SkillTool extends BaseDeclarativeTool<SkillParams, ToolResult> {
false, // canUpdateOutput
);

this.skillManager = config.getSkillManager()!;
this.skillManager = config.getSkillManager();
this.skillManager.addChangeListener(() => {
void this.refreshSkills();
});

@@ -1,6 +1,6 @@
{
"name": "@qwen-code/qwen-code-test-utils",
"version": "0.7.1",
"version": "0.7.0",
"private": true,
"main": "src/index.ts",
"license": "Apache-2.0",

@@ -1,11 +1,6 @@
# Qwen Code Companion

[](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion)
[](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion)
[](https://open-vsx.org/extension/qwenlm/qwen-code-vscode-ide-companion)
[](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion)

Seamlessly integrate [Qwen Code](https://github.com/QwenLM/qwen-code) into Visual Studio Code with native IDE features and an intuitive chat interface. This extension bundles everything you need — no additional installation required.
Seamlessly integrate [Qwen Code](https://github.com/QwenLM/qwen-code) into Visual Studio Code with native IDE features and an intuitive interface. This extension bundles everything you need to get started immediately.

## Demo

@@ -16,7 +11,7 @@ Seamlessly integrate [Qwen Code](https://github.com/QwenLM/qwen-code) into Visua

## Features

- **Native IDE experience**: Dedicated Qwen Code Chat panel accessed via the Qwen icon in the editor title bar
- **Native IDE experience**: Dedicated Qwen Code sidebar panel accessed via the Qwen icon
- **Native diffing**: Review, edit, and accept changes in VS Code's diff view
- **Auto-accept edits mode**: Automatically apply Qwen's changes as they're made
- **File management**: @-mention files or attach files and images using the system file picker
@@ -25,46 +20,73 @@ Seamlessly integrate [Qwen Code](https://github.com/QwenLM/qwen-code) into Visua

## Requirements

- Visual Studio Code 1.85.0 or newer (also works with Cursor, Windsurf, and other VS Code-based editors)
- Visual Studio Code 1.85.0 or newer

## Quick Start
## Installation

1. **Install** from the [VS Code Marketplace](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion) or [Open VSX Registry](https://open-vsx.org/extension/qwenlm/qwen-code-vscode-ide-companion)
1. Install from the VS Code Marketplace: https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion

2. **Open the Chat panel** using one of these methods:
- Click the **Qwen icon** in the top-right corner of the editor
- Run `Qwen Code: Open` from the Command Palette (`Cmd+Shift+P` / `Ctrl+Shift+P`)
2. Two ways to use
- Chat panel: Click the Qwen icon in the Activity Bar, or run `Qwen Code: Open` from the Command Palette (`Cmd+Shift+P` / `Ctrl+Shift+P`).
- Terminal session (classic): Run `Qwen Code: Run` to launch a session in the integrated terminal (bundled CLI).

3. **Start chatting** — Ask Qwen to help with coding tasks, explain code, fix bugs, or write new features
## Development and Debugging

## Commands
To debug and develop this extension locally:

| Command | Description |
| -------------------------------- | ------------------------------------------------------ |
| `Qwen Code: Open` | Open the Qwen Code Chat panel |
| `Qwen Code: Run` | Launch a classic terminal session with the bundled CLI |
| `Qwen Code: Accept Current Diff` | Accept the currently displayed diff |
| `Qwen Code: Close Diff Editor` | Close/reject the current diff |
1. **Clone the repository**

## Feedback & Issues
```bash
git clone https://github.com/QwenLM/qwen-code.git
cd qwen-code
```

- 🐛 [Report bugs](https://github.com/QwenLM/qwen-code/issues/new?template=bug_report.yml&labels=bug,vscode-ide-companion)
- 💡 [Request features](https://github.com/QwenLM/qwen-code/issues/new?template=feature_request.yml&labels=enhancement,vscode-ide-companion)
- 📖 [Documentation](https://qwenlm.github.io/qwen-code-docs/)
- 📋 [Changelog](https://github.com/QwenLM/qwen-code/releases)
2. **Install dependencies**

## Contributing
```bash
npm install
# or if using pnpm
pnpm install
```

We welcome contributions! See our [Contributing Guide](https://github.com/QwenLM/qwen-code/blob/main/CONTRIBUTING.md) for details on:
3. **Start debugging**

- Setting up the development environment
- Building and debugging the extension locally
- Submitting pull requests
```bash
code . # Open the project root in VS Code
```
- Open the `packages/vscode-ide-companion/src/extension.ts` file
- Open Debug panel (`Ctrl+Shift+D` or `Cmd+Shift+D`)
- Select **"Launch Companion VS Code Extension"** from the debug dropdown
- Press `F5` to launch Extension Development Host

4. **Make changes and reload**
- Edit the source code in the original VS Code window
- To see your changes, reload the Extension Development Host window by:
- Pressing `Ctrl+R` (Windows/Linux) or `Cmd+R` (macOS)
- Or clicking the "Reload" button in the debug toolbar

5. **View logs and debug output**
- Open the Debug Console in the original VS Code window to see extension logs
- In the Extension Development Host window, open Developer Tools with `Help > Toggle Developer Tools` to see webview logs

## Build for Production

To build the extension for distribution:

```bash
npm run compile
# or
pnpm run compile
```

To package the extension as a VSIX file:

```bash
npx vsce package
# or
pnpm vsce package
```

## Terms of Service and Privacy Notice

By installing this extension, you agree to the [Terms of Service](https://github.com/QwenLM/qwen-code/blob/main/docs/tos-privacy.md).

## License

[Apache-2.0](https://github.com/QwenLM/qwen-code/blob/main/LICENSE)

@@ -2,7 +2,7 @@
"name": "qwen-code-vscode-ide-companion",
"displayName": "Qwen Code Companion",
"description": "Enable Qwen Code with direct access to your VS Code workspace.",
"version": "0.7.1",
"version": "0.7.0",
"publisher": "qwenlm",
"icon": "assets/icon.png",
"repository": {

@@ -314,32 +314,34 @@ export async function activate(context: vscode.ExtensionContext) {
'cli.js',
).fsPath;
const execPath = process.execPath;
const lowerExecPath = execPath.toLowerCase();
const needsElectronRunAsNode =
lowerExecPath.includes('code') ||
lowerExecPath.includes('electron');

let qwenCmd: string;
const terminalOptions: vscode.TerminalOptions = {
name: `Qwen Code (${selectedFolder.name})`,
cwd: selectedFolder.uri.fsPath,
location,
};

let qwenCmd: string;

if (isWindows) {
// On Windows, try multiple strategies to find a Node.js runtime:
// 1. Check if VSCode ships a standalone node.exe alongside Code.exe
// 2. Check VSCode's internal Node.js in resources directory
// 3. Fall back to using Code.exe with ELECTRON_RUN_AS_NODE=1
// Use system Node via cmd.exe; avoid PowerShell parsing issues
const quoteCmd = (s: string) => `"${s.replace(/"/g, '""')}"`;
const cliQuoted = quoteCmd(cliEntry);
// TODO: @yiliang114, temporarily run through node, and later hope to decouple from the local node
qwenCmd = `node ${cliQuoted}`;
terminalOptions.shellPath = process.env.ComSpec;
} else {
// macOS/Linux: All VSCode-like IDEs (VSCode, Cursor, Windsurf, etc.)
// are Electron-based, so we always need ELECTRON_RUN_AS_NODE=1
// to run Node.js scripts using the IDE's bundled runtime.
const quotePosix = (s: string) => `"${s.replace(/"/g, '\\"')}"`;
const baseCmd = `${quotePosix(execPath)} ${quotePosix(cliEntry)}`;
qwenCmd = `ELECTRON_RUN_AS_NODE=1 ${baseCmd}`;
if (needsElectronRunAsNode) {
// macOS Electron helper needs ELECTRON_RUN_AS_NODE=1;
qwenCmd = `ELECTRON_RUN_AS_NODE=1 ${baseCmd}`;
} else {
qwenCmd = baseCmd;
}
}

const terminal = vscode.window.createTerminal(terminalOptions);

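The POSIX branch above wraps paths in double quotes and escapes only the `"` character. Inside double quotes a POSIX-compatible shell still treats `$`, backquotes, and `\` specially, so a path containing them would not reach the CLI intact. A common alternative is single-quote quoting, where the only special case is the single quote itself. This is a hedged sketch with hypothetical helper names (`quotePosixSingle`, `buildPosixCommand`), not the extension's code:

```typescript
// Quote a string for a POSIX-compatible shell using single quotes.
// Inside single quotes nothing is special, so an embedded ' is emitted as
// '\'' : close the quote, add an escaped quote, then reopen the quote.
function quotePosixSingle(s: string): string {
  return `'${s.replace(/'/g, `'\\''`)}'`;
}

// Hypothetical helper mirroring the shape of the command built in the hunk.
function buildPosixCommand(
  execPath: string,
  cliEntry: string,
  runAsNode: boolean,
): string {
  const base = `${quotePosixSingle(execPath)} ${quotePosixSingle(cliEntry)}`;
  // ELECTRON_RUN_AS_NODE=1 makes an Electron binary start as a plain Node.js process.
  return runAsNode ? `ELECTRON_RUN_AS_NODE=1 ${base}` : base;
}
```

For example, a path such as `/opt/$APP/cli.js` stays literal under single quotes, whereas in the double-quoted form the shell would try to expand `$APP`.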