Mirror of https://github.com/QwenLM/qwen-code.git, synced 2026-01-16 05:49:13 +00:00

Compare commits: feat/suppo ... feat/exten (10 commits)

| SHA1 |
|---|
| f00f76456c |
| 4c7605d900 |
| b37ede07e8 |
| 0a88dd7861 |
| 70991e474f |
| 551e546974 |
| 74013bd8b2 |
| 18713ef2b0 |
| 50dac93c80 |
| 22504b0a5b |

@@ -201,11 +201,6 @@ If you encounter issues, check the [troubleshooting guide](https://qwenlm.github
To report a bug from within the CLI, run `/bug` and include a short title and repro steps.
## Connect with Us
- Discord: https://discord.gg/ycKBjdNd
- Dingtalk: https://qr.dingtalk.com/action/joingroup?code=v1,k1,+FX6Gf/ZDlTahTIRi8AEQhIaBlqykA0j+eBKKdhLeAE=&_dt_no_comment=1&origin=1
## Acknowledgments
This project is based on [Google Gemini CLI](https://github.com/google-gemini/gemini-cli). We acknowledge and appreciate the excellent work of the Gemini CLI team. Our main contribution focuses on parser-level adaptations to better support Qwen-Coder models.

@@ -480,7 +480,7 @@ Arguments passed directly when running the CLI can override other configurations

|

||||

| `--telemetry-otlp-protocol` | | Sets the OTLP protocol for telemetry (`grpc` or `http`). | | Defaults to `grpc`. See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--telemetry-log-prompts` | | Enables logging of prompts for telemetry. | | See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--checkpointing` | | Enables [checkpointing](../features/checkpointing). | | |

|

||||

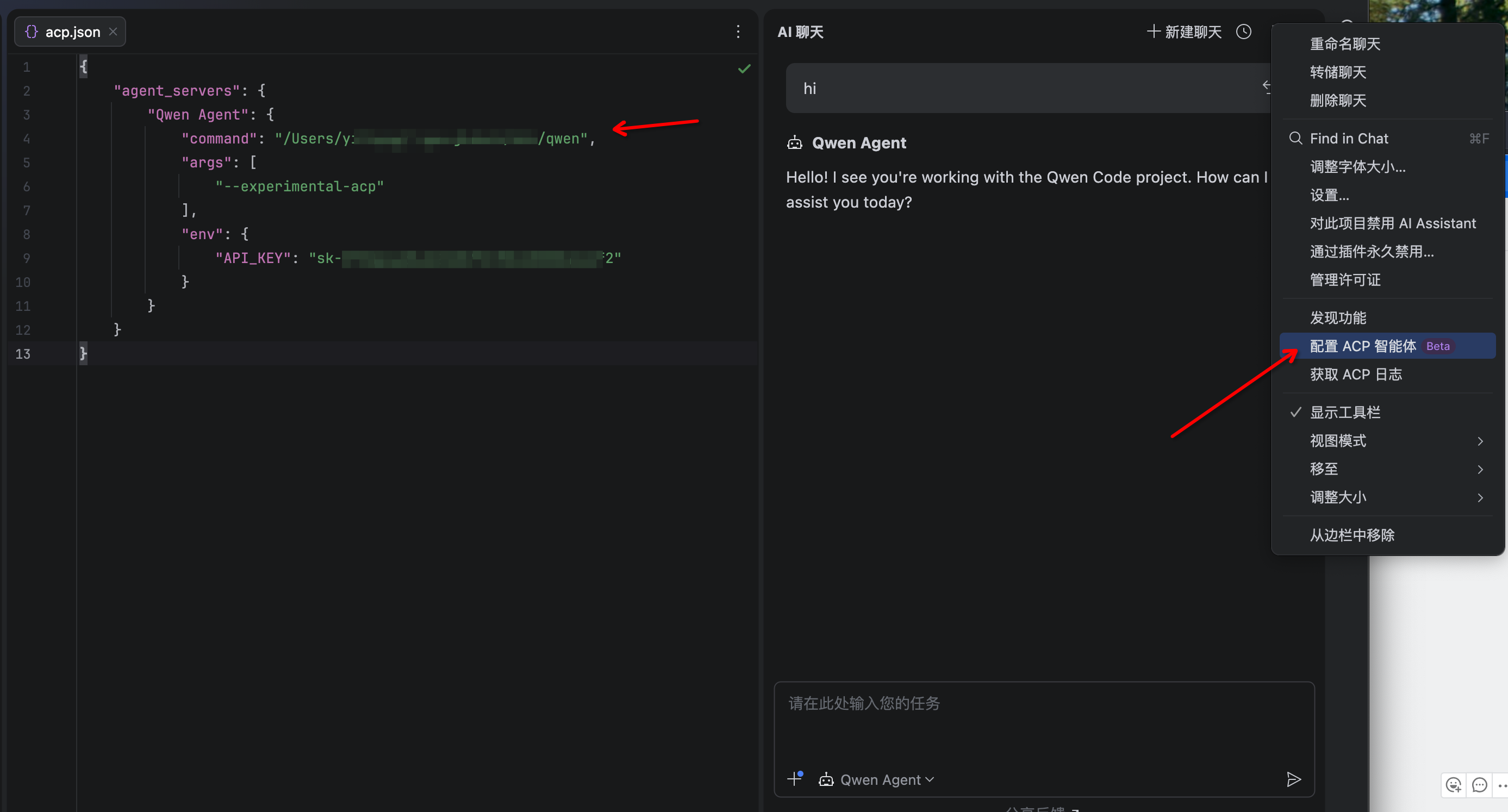

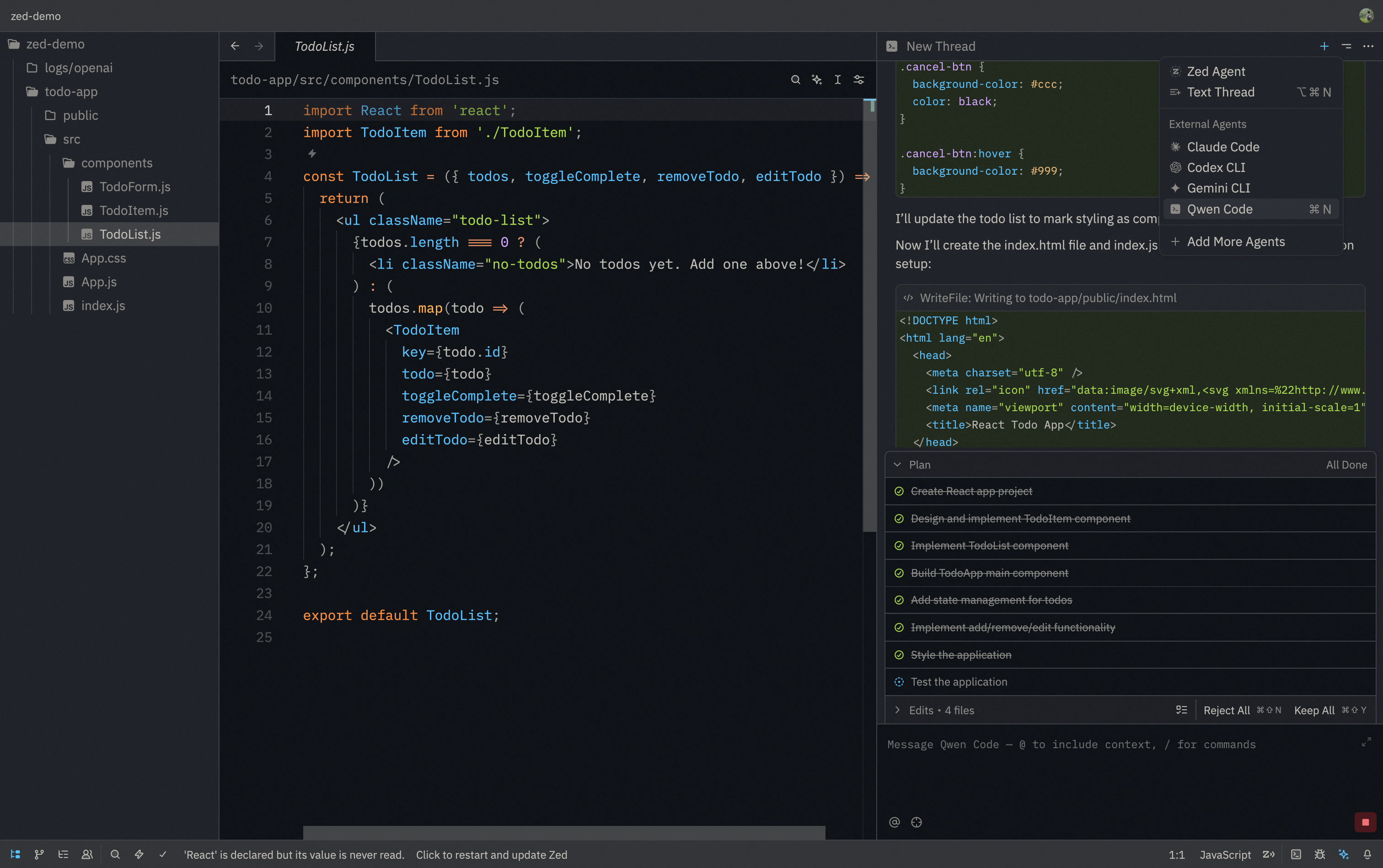

| `--acp` | | Enables ACP mode (Agent Client Protocol). Useful for IDE/editor integrations like [Zed](../integration-zed). | | Stable. Replaces the deprecated `--experimental-acp` flag. |

|

||||

| `--acp` | | Enables ACP mode (Agent Control Protocol). Useful for IDE/editor integrations like [Zed](../integration-zed). | | Stable. Replaces the deprecated `--experimental-acp` flag. |

|

||||

| `--experimental-skills` | | Enables experimental [Agent Skills](../features/skills) (registers the `skill` tool and loads Skills from `.qwen/skills/` and `~/.qwen/skills/`). | | Experimental. |

|

||||

| `--extensions` | `-e` | Specifies a list of extensions to use for the session. | Extension names | If not provided, all available extensions are used. Use the special term `qwen -e none` to disable all extensions. Example: `qwen -e my-extension -e my-other-extension` |

|

||||

| `--list-extensions` | `-l` | Lists all available extensions and exits. | | |

|

||||

|

||||
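
The `--extensions` row above documents three cases: no flag means every available extension is used, `-e none` disables them all, and repeated `-e` flags select specific extensions. A minimal sketch of that selection rule follows. `selectExtensions` is a hypothetical helper, not part of Qwen Code, and the handling of letter case and unknown names is an assumption the docs do not specify.

```typescript
/**
 * Hypothetical helper (not part of Qwen Code) modelling the documented
 * `--extensions` / `-e` rules:
 *   flag omitted -> every available extension is used
 *   `-e none`    -> all extensions are disabled
 *   otherwise    -> only the named extensions are used
 */
function selectExtensions(
  available: readonly string[],
  requested?: readonly string[],
): string[] {
  // No -e flag at all: keep everything that is installed.
  if (requested === undefined || requested.length === 0) {
    return [...available];
  }
  // The special term "none" switches every extension off.
  if (requested.some((name) => name.toLowerCase() === 'none')) {
    return [];
  }
  // Assumption: names match case-insensitively and unknown names are ignored.
  const wanted = new Set(requested.map((name) => name.toLowerCase()));
  return available.filter((name) => wanted.has(name.toLowerCase()));
}

const installed = ['my-extension', 'my-other-extension', 'third-extension'];
// Roughly what `qwen -e my-extension -e my-other-extension` asks for.
console.log(selectExtensions(installed, ['my-extension', 'my-other-extension']));
// Roughly what `qwen -e none` asks for.
console.log(selectExtensions(installed, ['none']));
```

The result keeps the order of the installed list rather than the order of the flags, which is one reasonable choice among several.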

BIN docs/users/images/jetbrains-acp.png (Normal file)
Binary file not shown (after: 36 KiB).

@@ -1,11 +1,11 @@
# JetBrains IDEs
> JetBrains IDEs provide native support for AI coding assistants through the Agent Client Protocol (ACP). This integration allows you to use Qwen Code directly within your JetBrains IDE with real-time code suggestions.
> JetBrains IDEs provide native support for AI coding assistants through the Agent Control Protocol (ACP). This integration allows you to use Qwen Code directly within your JetBrains IDE with real-time code suggestions.
### Features
- **Native agent experience**: Integrated AI assistant panel within your JetBrains IDE
- **Agent Client Protocol**: Full support for ACP enabling advanced IDE interactions
- **Agent Control Protocol**: Full support for ACP enabling advanced IDE interactions
- **Symbol management**: #-mention files to add them to the conversation context
- **Conversation history**: Access to past conversations within the IDE

@@ -40,7 +40,7 @@
4. The Qwen Code agent should now be available in the AI Assistant panel
## Troubleshooting

@@ -22,7 +22,13 @@
### Installation
Download and install the extension from the [Visual Studio Code Extension Marketplace](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion).
1. Install Qwen Code CLI:
```bash
npm install -g qwen-code
```
2. Download and install the extension from the [Visual Studio Code Extension Marketplace](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion).
## Troubleshooting

@@ -1,6 +1,6 @@
# Zed Editor
> Zed Editor provides native support for AI coding assistants through the Agent Client Protocol (ACP). This integration allows you to use Qwen Code directly within Zed's interface with real-time code suggestions.
> Zed Editor provides native support for AI coding assistants through the Agent Control Protocol (ACP). This integration allows you to use Qwen Code directly within Zed's interface with real-time code suggestions.

@@ -20,9 +20,9 @@
1. Install Qwen Code CLI:
```bash
npm install -g @qwen-code/qwen-code
```
```bash
npm install -g qwen-code
```
2. Download and install [Zed Editor](https://zed.dev/)

@@ -831,7 +831,7 @@ describe('Permission Control (E2E)', () => {
TEST_TIMEOUT,
);
it.skip(
it(
'should execute dangerous commands without confirmation',
async () => {
const q = query({

55 package-lock.json generated

@@ -1,12 +1,12 @@
{
"name": "@qwen-code/qwen-code",
"version": "0.7.1",
"version": "0.7.0",
"lockfileVersion": 3,
"requires": true,
"packages": {
"": {
"name": "@qwen-code/qwen-code",
"version": "0.7.1",
"version": "0.7.0",
"workspaces": [
"packages/*"
],

@@ -3875,6 +3875,17 @@
"dev": true,
"license": "MIT"
},
"node_modules/@types/prompts": {
"version": "2.4.9",
"resolved": "https://registry.npmjs.org/@types/prompts/-/prompts-2.4.9.tgz",
"integrity": "sha512-qTxFi6Buiu8+50/+3DGIWLHM6QuWsEKugJnnP6iv2Mc4ncxE4A/OJkjuVOA+5X0X1S/nq5VJRa8Lu+nwcvbrKA==",
"dev": true,
"license": "MIT",
"dependencies": {
"@types/node": "*",
"kleur": "^3.0.3"
}
},
"node_modules/@types/prop-types": {
"version": "15.7.15",
"resolved": "https://registry.npmjs.org/@types/prop-types/-/prop-types-15.7.15.tgz",

@@ -10981,6 +10992,15 @@
"json-buffer": "3.0.1"
}
},
"node_modules/kleur": {
"version": "3.0.3",
"resolved": "https://registry.npmjs.org/kleur/-/kleur-3.0.3.tgz",
"integrity": "sha512-eTIzlVOSUR+JxdDFepEYcBMtZ9Qqdef+rnzWdRZuMbOywu5tO2w2N7rqjoANZ5k9vywhL6Br1VRjUIgTQx4E8w==",
"license": "MIT",
"engines": {
"node": ">=6"
}
},
"node_modules/ky": {
"version": "1.8.1",
"resolved": "https://registry.npmjs.org/ky/-/ky-1.8.1.tgz",

@@ -13390,6 +13410,19 @@
"dev": true,
"license": "MIT"
},
"node_modules/prompts": {
"version": "2.4.2",
"resolved": "https://registry.npmjs.org/prompts/-/prompts-2.4.2.tgz",
"integrity": "sha512-NxNv/kLguCA7p3jE8oL2aEBsrJWgAakBpgmgK6lpPWV+WuOmY6r2/zbAVnP+T8bQlA0nzHXSJSJW0Hq7ylaD2Q==",
"license": "MIT",
"dependencies": {
"kleur": "^3.0.3",
"sisteransi": "^1.0.5"
},
"engines": {
"node": ">= 6"
}
},
"node_modules/prop-types": {
"version": "15.8.1",
"resolved": "https://registry.npmjs.org/prop-types/-/prop-types-15.8.1.tgz",

@@ -14747,6 +14780,12 @@
"url": "https://github.com/steveukx/git-js?sponsor=1"
}
},
"node_modules/sisteransi": {
"version": "1.0.5",
"resolved": "https://registry.npmjs.org/sisteransi/-/sisteransi-1.0.5.tgz",
"integrity": "sha512-bLGGlR1QxBcynn2d5YmDX4MGjlZvy2MRBDRNHLJ8VI6l6+9FUiyTFNJ0IveOSP0bcXgVDPRcfGqA0pjaqUpfVg==",
"license": "MIT"
},
"node_modules/slash": {
"version": "5.1.0",
"resolved": "https://registry.npmjs.org/slash/-/slash-5.1.0.tgz",

@@ -17310,7 +17349,7 @@
},
"packages/cli": {
"name": "@qwen-code/qwen-code",
"version": "0.7.1",
"version": "0.7.0",
"dependencies": {
"@google/genai": "1.30.0",
"@iarna/toml": "^2.2.5",

@@ -17332,6 +17371,7 @@
"ink-spinner": "^5.0.0",
"lowlight": "^3.3.0",
"open": "^10.1.2",
"prompts": "^2.4.2",
"qrcode-terminal": "^0.12.0",
"react": "^19.1.0",
"read-package-up": "^11.0.0",

@@ -17360,6 +17400,7 @@
"@types/diff": "^7.0.2",
"@types/dotenv": "^6.1.1",
"@types/node": "^20.11.24",
"@types/prompts": "^2.4.9",
"@types/react": "^19.1.8",
"@types/react-dom": "^19.1.6",
"@types/semver": "^7.7.0",

@@ -17947,7 +17988,7 @@
},
"packages/core": {
"name": "@qwen-code/qwen-code-core",
"version": "0.7.1",
"version": "0.7.0",
"hasInstallScript": true,
"dependencies": {
"@anthropic-ai/sdk": "^0.36.1",

@@ -18588,7 +18629,7 @@
},
"packages/sdk-typescript": {
"name": "@qwen-code/sdk",
"version": "0.1.3",
"version": "0.1.2",
"license": "Apache-2.0",
"dependencies": {
"@modelcontextprotocol/sdk": "^1.25.1",

@@ -21408,7 +21449,7 @@
},
"packages/test-utils": {
"name": "@qwen-code/qwen-code-test-utils",
"version": "0.7.1",
"version": "0.7.0",
"dev": true,
"license": "Apache-2.0",
"devDependencies": {

@@ -21420,7 +21461,7 @@
},
"packages/vscode-ide-companion": {
"name": "qwen-code-vscode-ide-companion",
"version": "0.7.1",
"version": "0.7.0",
"license": "LICENSE",
"dependencies": {
"@modelcontextprotocol/sdk": "^1.25.1",

@@ -1,6 +1,6 @@
{
"name": "@qwen-code/qwen-code",
"version": "0.7.1",
"version": "0.7.0",
"engines": {
"node": ">=20.0.0"
},

@@ -13,7 +13,7 @@
"url": "git+https://github.com/QwenLM/qwen-code.git"
},
"config": {
"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.1"
"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.0"
},
"scripts": {
"start": "cross-env node scripts/start.js",

@@ -1,6 +1,6 @@
{
"name": "@qwen-code/qwen-code",
"version": "0.7.1",
"version": "0.7.0",
"description": "Qwen Code",
"repository": {
"type": "git",

@@ -33,7 +33,7 @@
"dist"
],
"config": {
"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.1"
"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.0"
},
"dependencies": {
"@google/genai": "1.30.0",

@@ -46,6 +46,7 @@
"comment-json": "^4.2.5",
"diff": "^7.0.0",
"dotenv": "^17.1.0",
"prompts": "^2.4.2",
"fzf": "^0.5.2",
"glob": "^10.5.0",
"highlight.js": "^11.11.1",

@@ -79,6 +80,7 @@
"@types/command-exists": "^1.2.3",
"@types/diff": "^7.0.2",
"@types/dotenv": "^6.1.1",
"@types/prompts": "^2.4.9",
"@types/node": "^20.11.24",
"@types/react": "^19.1.8",
"@types/react-dom": "^19.1.6",

@@ -27,10 +27,8 @@ import { Readable, Writable } from 'node:stream';
import type { LoadedSettings } from '../config/settings.js';
import { SettingScope } from '../config/settings.js';
import { z } from 'zod';
import { ExtensionStorage, type Extension } from '../config/extension.js';
import type { CliArgs } from '../config/config.js';
import { loadCliConfig } from '../config/config.js';
import { ExtensionEnablementManager } from '../config/extensions/extensionEnablement.js';
// Import the modular Session class
import { Session } from './session/Session.js';

@@ -38,7 +36,6 @@ import { Session } from './session/Session.js';
export async function runAcpAgent(
config: Config,
settings: LoadedSettings,
extensions: Extension[],
argv: CliArgs,
) {
const stdout = Writable.toWeb(process.stdout) as WritableStream;

@@ -51,8 +48,7 @@ export async function runAcpAgent(
console.debug = console.error;
new acp.AgentSideConnection(
(client: acp.Client) =>
new GeminiAgent(config, settings, extensions, argv, client),
(client: acp.Client) => new GeminiAgent(config, settings, argv, client),
stdout,
stdin,
);
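
In ACP mode the agent talks to the editor over stdio: `runAcpAgent` wraps `process.stdout` with `Writable.toWeb` before handing it to `acp.AgentSideConnection`, and points `console.debug` at `console.error` so diagnostics land on stderr instead of the protocol stream. The sketch below shows only that conversion step from `node:stream`, writing into an in-memory `Writable` instead of stdout so the result can be checked. The newline-delimited JSON payload is an arbitrary example, not a claim about ACP's wire format. `Writable.toWeb` needs a recent Node.js, which the package's `"node": ">=20.0.0"` engine requirement already implies.

```typescript
import { Writable } from 'node:stream';

// In-memory sink standing in for process.stdout so the output can be inspected.
const chunks: Buffer[] = [];
const sink = new Writable({
  write(chunk: Buffer, _encoding: BufferEncoding, callback: (error?: Error | null) => void) {
    chunks.push(chunk);
    callback();
  },
});

// The same Node-to-web conversion runAcpAgent applies to process.stdout.
const webStdout = Writable.toWeb(sink);

async function sendLine(message: object): Promise<string> {
  const writer = webStdout.getWriter();
  // Illustrative framing only: one JSON value per line.
  await writer.write(new TextEncoder().encode(JSON.stringify(message) + '\n'));
  writer.releaseLock();
  return Buffer.concat(chunks).toString('utf8');
}

// Diagnostics go to stderr so they cannot interleave with protocol output.
console.debug = console.error;
console.debug('debug output goes to stderr');

const written = sendLine({ example: true });
written.then((text) => console.log(text.trim()));
```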

@@ -65,7 +61,6 @@ class GeminiAgent {
constructor(
private config: Config,
private settings: LoadedSettings,
private extensions: Extension[],
private argv: CliArgs,
private client: acp.Client,
) {}

@@ -215,16 +210,7 @@ class GeminiAgent {
continue: false,
};
const config = await loadCliConfig(
settings,
this.extensions,
new ExtensionEnablementManager(
ExtensionStorage.getUserExtensionsDir(),
this.argv.extensions,
),
argvForSession,
cwd,
);
const config = await loadCliConfig(settings, argvForSession, cwd);
await config.initialize();
return config;

87 packages/cli/src/commands/extensions/consent.ts (Normal file)

@@ -0,0 +1,87 @@
import type { ConfirmationRequest } from '../../ui/types.js';

/**
* Requests consent from the user to perform an action, by reading a Y/n
* character from stdin.
*
* This should not be called from interactive mode as it will break the CLI.
*
* @param consentDescription The description of the thing they will be consenting to.
* @returns boolean, whether they consented or not.
*/
export async function requestConsentNonInteractive(
consentDescription: string,
): Promise<boolean> {
console.info(consentDescription);
const result = await promptForConsentNonInteractive(
'Do you want to continue? [Y/n]: ',
);
return result;
}

/**
* Requests consent from the user to perform an action, in interactive mode.
*
* This should not be called from non-interactive mode as it will not work.
*
* @param consentDescription The description of the thing they will be consenting to.
* @param addExtensionUpdateConfirmationRequest A function to actually add a prompt to the UI.
* @returns boolean, whether they consented or not.
*/
export async function requestConsentInteractive(
consentDescription: string,
addExtensionUpdateConfirmationRequest: (value: ConfirmationRequest) => void,
): Promise<boolean> {
return promptForConsentInteractive(
consentDescription + '\n\nDo you want to continue?',
addExtensionUpdateConfirmationRequest,
);
}

/**
* Asks users a prompt and awaits for a y/n response on stdin.
*
* This should not be called from interactive mode as it will break the CLI.
*
* @param prompt A yes/no prompt to ask the user
* @returns Whether or not the user answers 'y' (yes). Defaults to 'yes' on enter.
*/
async function promptForConsentNonInteractive(
prompt: string,
): Promise<boolean> {
const readline = await import('node:readline');
const rl = readline.createInterface({
input: process.stdin,
output: process.stdout,
});

return new Promise((resolve) => {
rl.question(prompt, (answer) => {
rl.close();
resolve(['y', ''].includes(answer.trim().toLowerCase()));
});
});
}

/**
* Asks users an interactive yes/no prompt.
*
* This should not be called from non-interactive mode as it will break the CLI.
*
* @param prompt A markdown prompt to ask the user
* @param addExtensionUpdateConfirmationRequest Function to update the UI state with the confirmation request.
* @returns Whether or not the user answers yes.
*/
async function promptForConsentInteractive(
prompt: string,
addExtensionUpdateConfirmationRequest: (value: ConfirmationRequest) => void,
): Promise<boolean> {
return new Promise<boolean>((resolve) => {
addExtensionUpdateConfirmationRequest({
prompt,
onConfirm: (resolvedConfirmed) => {
resolve(resolvedConfirmed);
},
});
});
}
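
The decision rule inside `promptForConsentNonInteractive` is easy to miss: an empty answer (just pressing Enter) counts as consent, matching the capital `Y` in `[Y/n]`. Pulling that check into a pure function makes it testable without a TTY. A minimal sketch; `isConsentAnswer` is a hypothetical name, not an export of consent.ts.

```typescript
/**
 * Hypothetical pure helper with the same rule as promptForConsentNonInteractive:
 * ['y', ''].includes(answer.trim().toLowerCase()).
 * An empty answer accepts the default "Y" in "[Y/n]".
 */
function isConsentAnswer(answer: string): boolean {
  const normalized = answer.trim().toLowerCase();
  return normalized === 'y' || normalized === '';
}

for (const answer of ['y', ' Y ', '', 'yes', 'n']) {
  console.log(JSON.stringify(answer), '=>', isConsentAnswer(answer));
}
```

Under this rule only `y` (any case, surrounding whitespace ignored) or an empty line counts as consent; a typed `yes` is treated as a refusal.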

@@ -5,21 +5,22 @@
*/
import { type CommandModule } from 'yargs';
import { disableExtension } from '../../config/extension.js';
import { SettingScope } from '../../config/settings.js';
import { getErrorMessage } from '../../utils/errors.js';
import { getExtensionManager } from './utils.js';
interface DisableArgs {
name: string;
scope?: string;
}
export function handleDisable(args: DisableArgs) {
export async function handleDisable(args: DisableArgs) {
const extensionManager = await getExtensionManager();
try {
if (args.scope?.toLowerCase() === 'workspace') {
disableExtension(args.name, SettingScope.Workspace);
extensionManager.disableExtension(args.name, SettingScope.Workspace);
} else {
disableExtension(args.name, SettingScope.User);
extensionManager.disableExtension(args.name, SettingScope.User);
}
console.log(
`Extension "${args.name}" successfully disabled for scope "${args.scope}".`,

@@ -61,8 +62,8 @@ export const disableCommand: CommandModule = {
}
return true;
}),
handler: (argv) => {
handleDisable({
handler: async (argv) => {
await handleDisable({
name: argv['name'] as string,
scope: argv['scope'] as string,
});

@@ -6,20 +6,22 @@
import { type CommandModule } from 'yargs';
import { FatalConfigError, getErrorMessage } from '@qwen-code/qwen-code-core';
import { enableExtension } from '../../config/extension.js';
import { SettingScope } from '../../config/settings.js';
import { getExtensionManager } from './utils.js';
interface EnableArgs {
name: string;
scope?: string;
}
export function handleEnable(args: EnableArgs) {
export async function handleEnable(args: EnableArgs) {
const extensionManager = await getExtensionManager();
try {
if (args.scope?.toLowerCase() === 'workspace') {
enableExtension(args.name, SettingScope.Workspace);
extensionManager.enableExtension(args.name, SettingScope.Workspace);
} else {
enableExtension(args.name, SettingScope.User);
extensionManager.enableExtension(args.name, SettingScope.User);
}
if (args.scope) {
console.log(

@@ -66,8 +68,8 @@ export const enableCommand: CommandModule = {
}
return true;
}),
handler: (argv) => {
handleEnable({
handler: async (argv) => {
await handleEnable({
name: argv['name'] as string,
scope: argv['scope'] as string,
});
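
`handleDisable` and `handleEnable` pick the settings scope the same way: the value `workspace` (case-insensitive) selects `SettingScope.Workspace`, and anything else, including an omitted `--scope`, falls back to `SettingScope.User`. A self-contained sketch of that rule; the local `SettingScope` enum is a stand-in for the one imported from `../../config/settings.js`, and its string values are assumptions.

```typescript
// Stand-in for SettingScope from ../../config/settings.js (values assumed).
enum SettingScope {
  User = 'User',
  Workspace = 'Workspace',
}

/** Mirrors the branch shared by handleDisable and handleEnable. */
function resolveScope(scope?: string): SettingScope {
  return scope?.toLowerCase() === 'workspace'
    ? SettingScope.Workspace
    : SettingScope.User;
}

console.log(resolveScope('Workspace')); // Workspace
console.log(resolveScope(undefined)); // User
console.log(resolveScope('user')); // User
```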

@@ -5,58 +5,64 @@
*/
import type { CommandModule } from 'yargs';
import {
installExtension,
requestConsentNonInteractive,
} from '../../config/extension.js';
import type { ExtensionInstallMetadata } from '@qwen-code/qwen-code-core';
ExtensionManager,
parseInstallSource,
} from '@qwen-code/qwen-code-core';
import { getErrorMessage } from '../../utils/errors.js';
import { stat } from 'node:fs/promises';
import { isWorkspaceTrusted } from '../../config/trustedFolders.js';
import { loadSettings } from '../../config/settings.js';
import { requestConsentNonInteractive } from './consent.js';
interface InstallArgs {
source: string;
ref?: string;
autoUpdate?: boolean;
allowPreRelease?: boolean;
consent?: boolean;
}
export async function handleInstall(args: InstallArgs) {
try {
let installMetadata: ExtensionInstallMetadata;
const { source } = args;
const installMetadata = await parseInstallSource(args.source);
if (
source.startsWith('http://') ||
source.startsWith('https://') ||
source.startsWith('git@') ||
source.startsWith('sso://')
installMetadata.type !== 'git' &&
installMetadata.type !== 'github-release'
) {
installMetadata = {
source,
type: 'git',
ref: args.ref,
autoUpdate: args.autoUpdate,
};
} else {
if (args.ref || args.autoUpdate) {
throw new Error(
'--ref and --auto-update are not applicable for local extensions.',
'--ref and --auto-update are not applicable for marketplace extensions.',
);
}
try {
await stat(source);
installMetadata = {
source,
type: 'local',
};
} catch {
throw new Error('Install source not found.');
}
}
const name = await installExtension(
installMetadata,
requestConsentNonInteractive,
const requestConsent = args.consent
? () => Promise.resolve(true)
: requestConsentNonInteractive;
const workspaceDir = process.cwd();
const extensionManager = new ExtensionManager({
workspaceDir,
isWorkspaceTrusted: !!isWorkspaceTrusted(
loadSettings(workspaceDir).merged,
),
requestConsent,
});
await extensionManager.refreshCache();
const extension = await extensionManager.installExtension(
{
...installMetadata,
ref: args.ref,
autoUpdate: args.autoUpdate,
allowPreRelease: args.allowPreRelease,
},
requestConsent,
);
console.log(
`Extension "${extension.name}" installed successfully and enabled.`,
);
console.log(`Extension "${name}" installed successfully and enabled.`);
} catch (error) {
console.error(getErrorMessage(error));
process.exit(1);
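
One side of this hunk classifies the install source purely by prefix (`http://`, `https://`, `git@`, `sso://` mean git, anything else is treated as a local path) and rejects `--ref`/`--auto-update` for non-git sources; the other side delegates to `parseInstallSource` from `@qwen-code/qwen-code-core`, whose rules are not shown here. A sketch of the prefix rule only, using hypothetical `classifySource` and `validateOptions` helpers and leaving out the `stat` check on local paths.

```typescript
type SourceKind = 'git' | 'local';

// Prefixes taken from the startsWith checks in this hunk.
const GIT_PREFIXES = ['http://', 'https://', 'git@', 'sso://'] as const;

/** Hypothetical helper reproducing the prefix-based classification. */
function classifySource(source: string): SourceKind {
  return GIT_PREFIXES.some((prefix) => source.startsWith(prefix))
    ? 'git'
    : 'local';
}

/** --ref and --auto-update only make sense for git sources under this rule. */
function validateOptions(source: string, ref?: string, autoUpdate?: boolean): void {
  if (classifySource(source) !== 'git' && (ref || autoUpdate)) {
    throw new Error(
      '--ref and --auto-update are not applicable for local extensions.',
    );
  }
}

console.log(classifySource('https://github.com/example/ext.git')); // git
console.log(classifySource('./my-extension')); // local
```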

@@ -65,11 +71,13 @@ export async function handleInstall(args: InstallArgs) {
export const installCommand: CommandModule = {
command: 'install <source>',
describe: 'Installs an extension from a git repository URL or a local path.',
describe:
'Installs an extension from a git repository URL, local path, or claude marketplace (marketplace-url:plugin-name).',
builder: (yargs) =>
yargs
.positional('source', {
describe: 'The github URL or local path of the extension to install.',
describe:
'The github URL, local path, or marketplace source (marketplace-url:plugin-name) of the extension to install.',
type: 'string',
demandOption: true,
})

@@ -81,6 +89,16 @@ export const installCommand: CommandModule = {
describe: 'Enable auto-update for this extension.',
type: 'boolean',
})
.option('pre-release', {
describe: 'Enable pre-release versions for this extension.',
type: 'boolean',
})
.option('consent', {
describe:
'Acknowledge the security risks of installing an extension and skip the confirmation prompt.',
type: 'boolean',
default: false,
})
.check((argv) => {
if (!argv.source) {
throw new Error('The source argument must be provided.');

@@ -92,6 +110,8 @@ export const installCommand: CommandModule = {
source: argv['source'] as string,
ref: argv['ref'] as string | undefined,
autoUpdate: argv['auto-update'] as boolean | undefined,
allowPreRelease: argv['pre-release'] as boolean | undefined,
consent: argv['consent'] as boolean | undefined,
});
},
};
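
The new `--consent` option (default `false`) bypasses the confirmation step: `handleInstall` swaps `requestConsentNonInteractive` for a function that resolves to `true` immediately. A sketch of that selection with the prompt passed in as a parameter so it runs without a TTY; `pickConsentHandler`, `ConsentFn` and `alwaysDecline` are hypothetical names.

```typescript
type ConsentFn = (description: string) => Promise<boolean>;

/** Mirrors: args.consent ? () => Promise.resolve(true) : requestConsentNonInteractive */
function pickConsentHandler(
  consentFlag: boolean | undefined,
  askUser: ConsentFn,
): ConsentFn {
  return consentFlag ? () => Promise.resolve(true) : askUser;
}

// Stand-in for requestConsentNonInteractive that always declines.
const alwaysDecline: ConsentFn = async () => false;

async function main(): Promise<void> {
  const skipped = pickConsentHandler(true, alwaysDecline);
  const prompted = pickConsentHandler(false, alwaysDecline);
  console.log(await skipped('Install "demo"?')); // true
  console.log(await prompted('Install "demo"?')); // false
}

void main();
```

Passing the prompt function in, rather than importing it directly, is what lets the same install path run both from a terminal and from automation that has already accepted the risk.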

@@ -5,13 +5,10 @@
*/
import type { CommandModule } from 'yargs';
import {
installExtension,
requestConsentNonInteractive,
} from '../../config/extension.js';
import type { ExtensionInstallMetadata } from '@qwen-code/qwen-code-core';
import { type ExtensionInstallMetadata } from '@qwen-code/qwen-code-core';
import { getErrorMessage } from '../../utils/errors.js';
import { requestConsentNonInteractive } from './consent.js';
import { getExtensionManager } from './utils.js';
interface InstallArgs {
path: string;

@@ -23,12 +20,14 @@ export async function handleLink(args: InstallArgs) {
source: args.path,
type: 'link',
};
const extensionName = await installExtension(
const extensionManager = await getExtensionManager();
const extension = await extensionManager.installExtension(
installMetadata,
requestConsentNonInteractive,
);
console.log(
`Extension "${extensionName}" linked successfully and enabled.`,
`Extension "${extension.name}" linked successfully and enabled.`,
);
} catch (error) {
console.error(getErrorMessage(error));

@@ -5,19 +5,23 @@
*/
import type { CommandModule } from 'yargs';
import { loadUserExtensions, toOutputString } from '../../config/extension.js';
import { getErrorMessage } from '../../utils/errors.js';
import { getExtensionManager } from './utils.js';
export async function handleList() {
try {
const extensions = loadUserExtensions();
const extensionManager = await getExtensionManager();
const extensions = extensionManager.getLoadedExtensions();
if (extensions.length === 0) {
console.log('No extensions installed.');
return;
}
console.log(
extensions
.map((extension, _): string => toOutputString(extension, process.cwd()))
.map((extension, _): string =>
extensionManager.toOutputString(extension, process.cwd()),
)
.join('\n\n'),
);
} catch (error) {

@@ -5,8 +5,11 @@
*/
import type { CommandModule } from 'yargs';
import { uninstallExtension } from '../../config/extension.js';
import { getErrorMessage } from '../../utils/errors.js';
import { ExtensionManager } from '@qwen-code/qwen-code-core';
import { requestConsentNonInteractive } from './consent.js';
import { isWorkspaceTrusted } from '../../config/trustedFolders.js';
import { loadSettings } from '../../config/settings.js';
interface UninstallArgs {
name: string; // can be extension name or source URL.

@@ -14,7 +17,16 @@ interface UninstallArgs {
export async function handleUninstall(args: UninstallArgs) {
try {
await uninstallExtension(args.name);
const workspaceDir = process.cwd();
const extensionManager = new ExtensionManager({
workspaceDir,
requestConsent: requestConsentNonInteractive,
isWorkspaceTrusted: !!isWorkspaceTrusted(
loadSettings(workspaceDir).merged,
),
});
await extensionManager.refreshCache();
await extensionManager.uninstallExtension(args.name, false);
console.log(`Extension "${args.name}" successfully uninstalled.`);
} catch (error) {
console.error(getErrorMessage(error));
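
`UninstallArgs.name` is commented as accepting either an extension name or its source URL. How `ExtensionManager.uninstallExtension` resolves that value is not part of this diff; the sketch below shows one plausible lookup over a hypothetical `InstalledExtension` shape whose field names are assumptions, not the real `Extension` type.

```typescript
// Hypothetical shape; the real Extension type in qwen-code may differ.
interface InstalledExtension {
  name: string;
  installMetadata?: { source: string };
}

/** Matches either the extension name or the source it was installed from. */
function findExtension(
  extensions: readonly InstalledExtension[],
  nameOrSource: string,
): InstalledExtension | undefined {
  return extensions.find(
    (ext) =>
      ext.name === nameOrSource ||
      ext.installMetadata?.source === nameOrSource,
  );
}

const installedExtensions: InstalledExtension[] = [
  { name: 'my-extension', installMetadata: { source: 'https://github.com/example/my-extension' } },
  { name: 'local-tools', installMetadata: { source: '/home/me/local-tools' } },
];

console.log(findExtension(installedExtensions, 'https://github.com/example/my-extension')?.name);
console.log(findExtension(installedExtensions, 'missing'));
```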

@@ -5,22 +5,13 @@
*/
import type { CommandModule } from 'yargs';
import {
loadExtensions,
annotateActiveExtensions,
ExtensionStorage,
requestConsentNonInteractive,
} from '../../config/extension.js';
import {
updateAllUpdatableExtensions,
type ExtensionUpdateInfo,
checkForAllExtensionUpdates,
updateExtension,
} from '../../config/extensions/update.js';
import { checkForExtensionUpdate } from '../../config/extensions/github.js';
import { getErrorMessage } from '../../utils/errors.js';
import { ExtensionUpdateState } from '../../ui/state/extensions.js';
import { ExtensionEnablementManager } from '../../config/extensions/extensionEnablement.js';
import {
checkForExtensionUpdate,
type ExtensionUpdateInfo,
} from '@qwen-code/qwen-code-core';
import { getExtensionManager } from './utils.js';
interface UpdateArgs {
name?: string;

@@ -31,19 +22,9 @@ const updateOutput = (info: ExtensionUpdateInfo) =>
`Extension "${info.name}" successfully updated: ${info.originalVersion} → ${info.updatedVersion}.`;
export async function handleUpdate(args: UpdateArgs) {
const workingDir = process.cwd();
const extensionEnablementManager = new ExtensionEnablementManager(
ExtensionStorage.getUserExtensionsDir(),
// Force enable named extensions, otherwise we will only update the enabled
// ones.
args.name ? [args.name] : [],
);
const allExtensions = loadExtensions(extensionEnablementManager);
const extensions = annotateActiveExtensions(
allExtensions,
workingDir,
extensionEnablementManager,
);
const extensionManager = await getExtensionManager();
const extensions = extensionManager.getLoadedExtensions();
if (args.name) {
try {
const extension = extensions.find(

@@ -53,25 +34,23 @@ export async function handleUpdate(args: UpdateArgs) {
console.log(`Extension "${args.name}" not found.`);
return;
}
let updateState: ExtensionUpdateState | undefined;
if (!extension.installMetadata) {
console.log(
`Unable to install extension "${args.name}" due to missing install metadata`,
);
return;
}
await checkForExtensionUpdate(extension, (newState) => {
updateState = newState;
});
const updateState = await checkForExtensionUpdate(
extension,
extensionManager,
);
if (updateState !== ExtensionUpdateState.UPDATE_AVAILABLE) {
console.log(`Extension "${args.name}" is already up to date.`);
return;
}
// TODO(chrstnb): we should list extensions if the requested extension is not installed.
const updatedExtensionInfo = (await updateExtension(
const updatedExtensionInfo = (await extensionManager.updateExtension(
extension,
workingDir,
requestConsentNonInteractive,
updateState,
() => {},
))!;

@@ -92,18 +71,15 @@ export async function handleUpdate(args: UpdateArgs) {
if (args.all) {
try {
const extensionState = new Map();
await checkForAllExtensionUpdates(extensions, (action) => {
if (action.type === 'SET_STATE') {
extensionState.set(action.payload.name, {
status: action.payload.state,
await extensionManager.checkForAllExtensionUpdates(
(extensionName, state) => {
extensionState.set(extensionName, {
status: state,
processed: true, // No need to process as we will force the update.
});
}
});
let updateInfos = await updateAllUpdatableExtensions(
workingDir,
requestConsentNonInteractive,
extensions,
},
);
let updateInfos = await extensionManager.updateAllUpdatableExtensions(
extensionState,
() => {},
);

21
packages/cli/src/commands/extensions/utils.ts
Normal file
@@ -0,0 +1,21 @@
/**
* @license
* Copyright 2025 Google LLC
* SPDX-License-Identifier: Apache-2.0
*/

import { ExtensionManager } from '@qwen-code/qwen-code-core';
import { loadSettings } from '../../config/settings.js';
import { requestConsentNonInteractive } from './consent.js';
import { isWorkspaceTrusted } from '../../config/trustedFolders.js';

export async function getExtensionManager(): Promise<ExtensionManager> {
const workspaceDir = process.cwd();
const extensionManager = new ExtensionManager({
workspaceDir,
requestConsent: requestConsentNonInteractive,
isWorkspaceTrusted: !!isWorkspaceTrusted(loadSettings(workspaceDir).merged),
});
await extensionManager.refreshCache();
return extensionManager;
}

@@ -7,7 +7,8 @@
import { vi, describe, it, expect, beforeEach, afterEach } from 'vitest';
import { listMcpServers } from './list.js';
import { loadSettings } from '../../config/settings.js';
import { ExtensionStorage, loadExtensions } from '../../config/extension.js';
import { loadExtensions } from '../../config/extension.js';
import { ExtensionStorage } from '../../config/extensions/storage.js';
import { createTransport } from '@qwen-code/qwen-code-core';
import { Client } from '@modelcontextprotocol/sdk/client/index.js';

@@ -8,10 +8,13 @@
import type { CommandModule } from 'yargs';
import { loadSettings } from '../../config/settings.js';
import type { MCPServerConfig } from '@qwen-code/qwen-code-core';
import { MCPServerStatus, createTransport } from '@qwen-code/qwen-code-core';
import {
MCPServerStatus,
createTransport,
ExtensionManager,
} from '@qwen-code/qwen-code-core';
import { Client } from '@modelcontextprotocol/sdk/client/index.js';
import { ExtensionStorage, loadExtensions } from '../../config/extension.js';
import { ExtensionEnablementManager } from '../../config/extensions/extensionEnablement.js';
import { isWorkspaceTrusted } from '../../config/trustedFolders.js';

const COLOR_GREEN = '\u001b[32m';
const COLOR_YELLOW = '\u001b[33m';

@@ -22,22 +25,27 @@ async function getMcpServersFromConfig(): Promise<
Record<string, MCPServerConfig>
> {
const settings = loadSettings();
const extensions = loadExtensions(
new ExtensionEnablementManager(ExtensionStorage.getUserExtensionsDir()),
);
const extensionManager = new ExtensionManager({
isWorkspaceTrusted: !!isWorkspaceTrusted(settings.merged),
telemetrySettings: settings.merged.telemetry,
});
await extensionManager.refreshCache();
const extensions = extensionManager.getLoadedExtensions();
const mcpServers = { ...(settings.merged.mcpServers || {}) };
for (const extension of extensions) {
Object.entries(extension.config.mcpServers || {}).forEach(
([key, server]) => {
if (mcpServers[key]) {
return;
}
mcpServers[key] = {
...server,
extensionName: extension.config.name,
};
},
);
if (extension.isActive) {
Object.entries(extension.config.mcpServers || {}).forEach(
([key, server]) => {
if (mcpServers[key]) {
return;
}
mcpServers[key] = {
...server,
extensionName: extension.config.name,
};
},
);
}
}
return mcpServers;
}

@@ -16,7 +16,8 @@ import {
} from '@qwen-code/qwen-code-core';
import { loadCliConfig, parseArguments, type CliArgs } from './config.js';
import type { Settings } from './settings.js';
import { ExtensionStorage, type Extension } from './extension.js';
import type { Extension } from './extension.js';
import { ExtensionStorage } from './extensions/storage.js';
import * as ServerConfig from '@qwen-code/qwen-code-core';
import { isWorkspaceTrusted } from './trustedFolders.js';
import { ExtensionEnablementManager } from './extensions/extensionEnablement.js';

@@ -9,7 +9,6 @@ import {
AuthType,
Config,
DEFAULT_QWEN_EMBEDDING_MODEL,
DEFAULT_MEMORY_FILE_FILTERING_OPTIONS,
FileDiscoveryService,
getCurrentGeminiMdFilename,
loadServerHierarchicalMemory,

@@ -23,7 +22,6 @@ import {
SessionService,
type ResumedSessionData,
type FileFilteringOptions,
type MCPServerConfig,
type ToolName,
EditTool,
ShellTool,

@@ -43,14 +41,11 @@ import { homedir } from 'node:os';

import { resolvePath } from '../utils/resolvePath.js';
import { getCliVersion } from '../utils/version.js';
import type { Extension } from './extension.js';
import { annotateActiveExtensions } from './extension.js';
import { loadSandboxConfig } from './sandboxConfig.js';
import { appEvents } from '../utils/events.js';
import { mcpCommand } from '../commands/mcp.js';

import { isWorkspaceTrusted } from './trustedFolders.js';
import type { ExtensionEnablementManager } from './extensions/extensionEnablement.js';
import { buildWebSearchConfig } from './webSearch.js';

// Simple console logger for now - replace with actual logger if available

@@ -169,7 +164,7 @@ function normalizeOutputFormat(
return OutputFormat.TEXT;
}

export async function parseArguments(settings: Settings): Promise<CliArgs> {
export async function parseArguments(): Promise<CliArgs> {
let rawArgv = hideBin(process.argv);

// hack: if the first argument is the CLI entry point, remove it

@@ -560,11 +555,9 @@ export async function parseArguments(settings: Settings): Promise<CliArgs> {
}),
)
// Register MCP subcommands
.command(mcpCommand);

if (settings?.experimental?.extensionManagement ?? true) {
yargsInstance.command(extensionsCommand);
}
.command(mcpCommand)
// Register Extension subcommands
.command(extensionsCommand);

yargsInstance
.version(await getCliVersion()) // This will enable the --version flag based on package.json

@@ -639,11 +632,11 @@ export async function loadHierarchicalGeminiMemory(
includeDirectoriesToReadGemini: readonly string[] = [],
debugMode: boolean,
fileService: FileDiscoveryService,
settings: Settings,
extensionContextFilePaths: string[] = [],
folderTrust: boolean,
memoryImportFormat: 'flat' | 'tree' = 'tree',
fileFilteringOptions?: FileFilteringOptions,
maxDirs: number = 200,
): Promise<{ memoryContent: string; fileCount: number }> {
// FIX: Use real, canonical paths for a reliable comparison to handle symlinks.
const realCwd = fs.realpathSync(path.resolve(currentWorkingDirectory));

@@ -670,7 +663,7 @@ export async function loadHierarchicalGeminiMemory(
folderTrust,
memoryImportFormat,
fileFilteringOptions,
settings.context?.discoveryMaxDirs,
maxDirs,
);
}

@@ -685,30 +678,17 @@ export function isDebugMode(argv: CliArgs): boolean {

export async function loadCliConfig(
settings: Settings,
extensions: Extension[],
extensionEnablementManager: ExtensionEnablementManager,
argv: CliArgs,
cwd: string = process.cwd(),
overrideExtensions?: string[],
): Promise<Config> {
const debugMode = isDebugMode(argv);

const memoryImportFormat = settings.context?.importFormat || 'tree';

const ideMode = settings.ide?.enabled ?? false;

const folderTrust = settings.security?.folderTrust?.enabled ?? false;
const trustedFolder = isWorkspaceTrusted(settings)?.isTrusted ?? true;

const allExtensions = annotateActiveExtensions(
extensions,
cwd,
extensionEnablementManager,
);

const activeExtensions = extensions.filter(
(_, i) => allExtensions[i].isActive,
);

// Set the context filename in the server's memoryTool module BEFORE loading memory
// TODO(b/343434939): This is a bit of a hack. The contextFileName should ideally be passed
// directly to the Config constructor in core, and have core handle setGeminiMdFilename.

@@ -720,51 +700,27 @@ export async function loadCliConfig(
setServerGeminiMdFilename(getCurrentGeminiMdFilename());
}

const extensionContextFilePaths = activeExtensions.flatMap(
(e) => e.contextFiles,
);

// Automatically load output-language.md if it exists
const outputLanguageFilePath = path.join(
let outputLanguageFilePath: string | undefined = path.join(
Storage.getGlobalQwenDir(),
'output-language.md',
);
if (fs.existsSync(outputLanguageFilePath)) {
extensionContextFilePaths.push(outputLanguageFilePath);
if (debugMode) {
logger.debug(
`Found output-language.md, adding to context files: ${outputLanguageFilePath}`,
);
}
} else {
outputLanguageFilePath = undefined;
}

const fileService = new FileDiscoveryService(cwd);

const fileFiltering = {
...DEFAULT_MEMORY_FILE_FILTERING_OPTIONS,
...settings.context?.fileFiltering,
};

const includeDirectories = (settings.context?.includeDirectories || [])
.map(resolvePath)
.concat((argv.includeDirectories || []).map(resolvePath));

// Call the (now wrapper) loadHierarchicalGeminiMemory which calls the server's version
const { memoryContent, fileCount } = await loadHierarchicalGeminiMemory(
cwd,
settings.context?.loadMemoryFromIncludeDirectories
? includeDirectories
: [],
debugMode,
fileService,
settings,
extensionContextFilePaths,
trustedFolder,
memoryImportFormat,
fileFiltering,
);

let mcpServers = mergeMcpServers(settings, activeExtensions);
const question = argv.promptInteractive || argv.prompt || '';
const inputFormat: InputFormat =
(argv.inputFormat as InputFormat | undefined) ?? InputFormat.TEXT;

@@ -874,10 +830,11 @@ export async function loadCliConfig(
}
};

// ACP mode check: must include both --acp (current) and --experimental-acp (deprecated).
// Without this check, edit, write_file, run_shell_command would be excluded in ACP mode.
const isAcpMode = argv.acp || argv.experimentalAcp;
if (!interactive && !isAcpMode && inputFormat !== InputFormat.STREAM_JSON) {
if (
!interactive &&
!argv.experimentalAcp &&
inputFormat !== InputFormat.STREAM_JSON
) {
switch (approvalMode) {
case ApprovalMode.PLAN:
case ApprovalMode.DEFAULT:

@@ -902,38 +859,18 @@ export async function loadCliConfig(

const excludeTools = mergeExcludeTools(
settings,
activeExtensions,
extraExcludes.length > 0 ? extraExcludes : undefined,
argv.excludeTools,
);
const blockedMcpServers: Array<{ name: string; extensionName: string }> = [];

if (!argv.allowedMcpServerNames) {
if (settings.mcp?.allowed) {
mcpServers = allowedMcpServers(
mcpServers,
settings.mcp.allowed,
blockedMcpServers,
);
}

if (settings.mcp?.excluded) {
const excludedNames = new Set(settings.mcp.excluded.filter(Boolean));
if (excludedNames.size > 0) {
mcpServers = Object.fromEntries(
Object.entries(mcpServers).filter(([key]) => !excludedNames.has(key)),
);
}
}
}

if (argv.allowedMcpServerNames) {
mcpServers = allowedMcpServers(
mcpServers,
argv.allowedMcpServerNames,
blockedMcpServers,
);
}
const allowedMcpServers = argv.allowedMcpServerNames
? new Set(argv.allowedMcpServerNames.filter(Boolean))
: settings.mcp?.allowed
? new Set(settings.mcp.allowed.filter(Boolean))
: undefined;
const excludedMcpServers = settings.mcp?.excluded
? new Set(settings.mcp.excluded.filter(Boolean))
: undefined;

const selectedAuthType =
(argv.authType as AuthType | undefined) ||

@@ -1000,6 +937,8 @@ export async function loadCliConfig(
includeDirectories,
loadMemoryFromIncludeDirectories:
settings.context?.loadMemoryFromIncludeDirectories || false,
importFormat: settings.context?.importFormat || 'tree',
discoveryMaxDirs: settings.context?.discoveryMaxDirs || 200,
debugMode,
question,
fullContext: argv.allFiles || false,

@@ -1009,9 +948,13 @@ export async function loadCliConfig(
toolDiscoveryCommand: settings.tools?.discoveryCommand,
toolCallCommand: settings.tools?.callCommand,
mcpServerCommand: settings.mcp?.serverCommand,
mcpServers,
userMemory: memoryContent,
geminiMdFileCount: fileCount,
mcpServers: settings.mcpServers || {},
allowedMcpServers: allowedMcpServers
? Array.from(allowedMcpServers)
: undefined,
excludedMcpServers: excludedMcpServers
? Array.from(excludedMcpServers)
: undefined,
approvalMode,
showMemoryUsage:
argv.showMemoryUsage || settings.ui?.showMemoryUsage || false,

@@ -1034,15 +977,14 @@ export async function loadCliConfig(
fileDiscoveryService: fileService,
bugCommand: settings.advanced?.bugCommand,
model: resolvedModel,
extensionContextFilePaths,
outputLanguageFilePath,
sessionTokenLimit: settings.model?.sessionTokenLimit ?? -1,
maxSessionTurns:
argv.maxSessionTurns ?? settings.model?.maxSessionTurns ?? -1,
experimentalZedIntegration: argv.acp || argv.experimentalAcp || false,
experimentalSkills: argv.experimentalSkills || false,
listExtensions: argv.listExtensions || false,
extensions: allExtensions,
blockedMcpServers,
overrideExtensions: overrideExtensions || argv.extensions,
noBrowser: !!process.env['NO_BROWSER'],
authType: selectedAuthType,
inputFormat,

@@ -1084,61 +1026,8 @@ export async function loadCliConfig(
});
}

function allowedMcpServers(
mcpServers: { [x: string]: MCPServerConfig },
allowMCPServers: string[],
blockedMcpServers: Array<{ name: string; extensionName: string }>,
) {
const allowedNames = new Set(allowMCPServers.filter(Boolean));
if (allowedNames.size > 0) {
mcpServers = Object.fromEntries(
Object.entries(mcpServers).filter(([key, server]) => {
const isAllowed = allowedNames.has(key);
if (!isAllowed) {
blockedMcpServers.push({
name: key,
extensionName: server.extensionName || '',
});
}
return isAllowed;
}),
);
} else {
blockedMcpServers.push(
...Object.entries(mcpServers).map(([key, server]) => ({
name: key,
extensionName: server.extensionName || '',
})),
);
mcpServers = {};
}
return mcpServers;
}
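The `allowedMcpServers` helper in this hunk keeps only allowlisted servers and records every rejected one, together with the extension that supplied it, in `blockedMcpServers`. The standalone sketch below reproduces that filtering for illustration only; `ServerConfigSketch`, `BlockedServer`, and `filterByAllowlist` are hypothetical names, and the config type is a simplified stand-in for the core `MCPServerConfig`, not its real shape.

```typescript
// Hypothetical stand-in for MCPServerConfig: only the field this sketch reads.
interface ServerConfigSketch {
  extensionName?: string;
}

interface BlockedServer {
  name: string;
  extensionName: string;
}

// Keeps servers whose key is in the allowlist and records the rest as blocked.
// An allowlist that is empty after dropping falsy entries blocks every server,
// matching the else-branch of allowedMcpServers.
function filterByAllowlist(
  servers: Record<string, ServerConfigSketch>,
  allowlist: string[],
): { allowed: Record<string, ServerConfigSketch>; blocked: BlockedServer[] } {
  const allowedNames = new Set(allowlist.filter(Boolean));
  const allowed: Record<string, ServerConfigSketch> = {};
  const blocked: BlockedServer[] = [];
  for (const [name, server] of Object.entries(servers)) {
    if (allowedNames.has(name)) {
      allowed[name] = server;
    } else {
      // Servers defined directly in settings have no extensionName.
      blocked.push({ name, extensionName: server.extensionName || '' });
    }
  }
  return { allowed, blocked };
}

const { allowed, blocked } = filterByAllowlist(
  { github: {}, docs: { extensionName: 'docs-ext' } },
  ['github'],
);
console.log(Object.keys(allowed)); // [ 'github' ]
console.log(blocked); // [ { name: 'docs', extensionName: 'docs-ext' } ]
```

Because an empty allowlist blocks everything, a caller that wants no restriction has to skip the call, which is what the `if (settings.mcp?.allowed)` guard in `loadCliConfig` does when the setting is absent.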

function mergeMcpServers(settings: Settings, extensions: Extension[]) {
const mcpServers = { ...(settings.mcpServers || {}) };
for (const extension of extensions) {
Object.entries(extension.config.mcpServers || {}).forEach(
([key, server]) => {
if (mcpServers[key]) {
logger.warn(
`Skipping extension MCP config for server with key "${key}" as it already exists.`,
);
return;
}
mcpServers[key] = {
...server,
extensionName: extension.config.name,
};
},
);
}
return mcpServers;
}
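`mergeMcpServers`, like `getMcpServersFromConfig` earlier in this diff, gives settings precedence over extensions: an extension's server is added only if its key is still free, and the copy is tagged with the extension's name. A minimal sketch of that rule, assuming simplified hypothetical types (`McpServerSketch`, `ExtensionSketch`) rather than the real core types, and returning the skipped keys instead of calling `logger.warn`:

```typescript
// Hypothetical stand-in for MCPServerConfig: only the fields used here.
interface McpServerSketch {
  command?: string;
  extensionName?: string;
}

// Hypothetical stand-in for an extension's parsed config.
interface ExtensionSketch {
  name: string;
  mcpServers?: Record<string, McpServerSketch>;
}

// Settings-defined servers win; an extension server is added only when its key
// is still free, and the added copy records which extension supplied it.
function mergeServersSketch(
  settingsServers: Record<string, McpServerSketch>,
  extensions: ExtensionSketch[],
): { merged: Record<string, McpServerSketch>; skipped: string[] } {
  const merged: Record<string, McpServerSketch> = { ...settingsServers };
  const skipped: string[] = [];
  for (const ext of extensions) {
    for (const [key, server] of Object.entries(ext.mcpServers || {})) {
      if (merged[key]) {
        skipped.push(key); // the original logs a warning here instead
        continue;
      }
      merged[key] = { ...server, extensionName: ext.name };
    }
  }
  return { merged, skipped };
}
```

Because the check is first-come, the order of the extensions array decides which of two extensions defining the same key wins.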

function mergeExcludeTools(
settings: Settings,
extensions: Extension[],
extraExcludes?: string[] | undefined,
cliExcludeTools?: string[] | undefined,
): string[] {

@@ -1147,10 +1036,5 @@ function mergeExcludeTools(
...(settings.tools?.exclude || []),
...(extraExcludes || []),
]);
for (const extension of extensions) {
for (const tool of extension.config.excludeTools || []) {
allExcludeTools.add(tool);
}
}
return [...allExcludeTools];
}
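`mergeExcludeTools` collects tool names from settings, extra excludes, and each extension's `excludeTools` into one `Set`, so a tool named by several sources is listed only once. A generic sketch of that de-duplication, using a hypothetical `unionExcludes` helper that accepts any number of optional lists (it does not reproduce the elided part of the original function):

```typescript
// Hypothetical helper: merges any number of optional exclude lists into one
// de-duplicated array. A Set keeps insertion order, so each tool appears at the
// position where it was first seen.
function unionExcludes(...sources: Array<string[] | undefined>): string[] {
  const all = new Set<string>();
  for (const list of sources) {
    for (const tool of list || []) {
      all.add(tool);
    }
  }
  return Array.from(all);
}

// For example: settings excludes, no extra excludes, then one extension's list.
console.log(
  unionExcludes(['run_shell_command'], undefined, ['write_file', 'run_shell_command']),
); // [ 'run_shell_command', 'write_file' ]
```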

@@ -1,786 +0,0 @@
/**
* @license
* Copyright 2025 Google LLC
* SPDX-License-Identifier: Apache-2.0
*/

import type {
MCPServerConfig,
GeminiCLIExtension,
ExtensionInstallMetadata,
} from '@qwen-code/qwen-code-core';
import {
QWEN_DIR,
Storage,
Config,
ExtensionInstallEvent,
ExtensionUninstallEvent,
ExtensionDisableEvent,
ExtensionEnableEvent,
logExtensionEnable,
logExtensionInstallEvent,
logExtensionUninstall,
logExtensionDisable,
} from '@qwen-code/qwen-code-core';
import * as fs from 'node:fs';
import * as path from 'node:path';
import * as os from 'node:os';
import { SettingScope, loadSettings } from '../config/settings.js';
import { getErrorMessage } from '../utils/errors.js';
import { recursivelyHydrateStrings } from './extensions/variables.js';
import { isWorkspaceTrusted } from './trustedFolders.js';
import { resolveEnvVarsInObject } from '../utils/envVarResolver.js';
import {
cloneFromGit,
downloadFromGitHubRelease,
} from './extensions/github.js';
import type { LoadExtensionContext } from './extensions/variableSchema.js';
import { ExtensionEnablementManager } from './extensions/extensionEnablement.js';
import chalk from 'chalk';
import type { ConfirmationRequest } from '../ui/types.js';

export const EXTENSIONS_DIRECTORY_NAME = path.join(QWEN_DIR, 'extensions');

export const EXTENSIONS_CONFIG_FILENAME = 'qwen-extension.json';
export const INSTALL_METADATA_FILENAME = '.qwen-extension-install.json';

export interface Extension {
path: string;
config: ExtensionConfig;
contextFiles: string[];
installMetadata?: ExtensionInstallMetadata | undefined;
}

export interface ExtensionConfig {
name: string;
version: string;
mcpServers?: Record<string, MCPServerConfig>;
contextFileName?: string | string[];
excludeTools?: string[];
}

export interface ExtensionUpdateInfo {
name: string;
originalVersion: string;
updatedVersion: string;
}

export class ExtensionStorage {
private readonly extensionName: string;

constructor(extensionName: string) {
this.extensionName = extensionName;
}

getExtensionDir(): string {
return path.join(
ExtensionStorage.getUserExtensionsDir(),
this.extensionName,
);
}

getConfigPath(): string {