mirror of

https://github.com/QwenLM/qwen-code.git

synced 2026-01-21 08:16:21 +00:00

Compare commits

4 Commits

feat/suppo

...

v0.6.2-pre

| Author | SHA1 | Date |
|---|---|---|
| | 56e0d2fbbf | |
| | 5c884fd395 | |
| | 0073c77267 | |
| | 418aeb069d | |

3

.github/CODEOWNERS

vendored


@@ -1,3 +0,0 @@

|

||||

* @tanzhenxin @DennisYu07 @gwinthis @LaZzyMan @pomelo-nwu @Mingholy

|

||||

# SDK TypeScript package changes require review from Mingholy

|

||||

packages/sdk-typescript/** @Mingholy

|

||||

17

.github/workflows/release-sdk.yml

vendored


@@ -241,7 +241,7 @@ jobs:

|

||||

${{ steps.vars.outputs.is_dry_run == 'false' && steps.vars.outputs.is_nightly == 'false' && steps.vars.outputs.is_preview == 'false' }}

|

||||

id: 'pr'

|

||||

env:

|

||||

GITHUB_TOKEN: '${{ secrets.CI_BOT_PAT }}'

|

||||

GITHUB_TOKEN: '${{ secrets.GITHUB_TOKEN }}'

|

||||

RELEASE_BRANCH: '${{ steps.release_branch.outputs.BRANCH_NAME }}'

|

||||

RELEASE_TAG: '${{ steps.version.outputs.RELEASE_TAG }}'

|

||||

run: |-

|

||||

@@ -258,15 +258,26 @@ jobs:

|

||||

|

||||

echo "PR_URL=${pr_url}" >> "${GITHUB_OUTPUT}"

|

||||

|

||||

- name: 'Wait for CI checks to complete'

|

||||

if: |-

|

||||

${{ steps.vars.outputs.is_dry_run == 'false' && steps.vars.outputs.is_nightly == 'false' && steps.vars.outputs.is_preview == 'false' }}

|

||||

env:

|

||||

GITHUB_TOKEN: '${{ secrets.GITHUB_TOKEN }}'

|

||||

PR_URL: '${{ steps.pr.outputs.PR_URL }}'

|

||||

run: |-

|

||||

set -euo pipefail

|

||||

echo "Waiting for CI checks to complete..."

|

||||

gh pr checks "${PR_URL}" --watch --interval 30

|

||||

|

||||

- name: 'Enable auto-merge for release PR'

|

||||

if: |-

|

||||

${{ steps.vars.outputs.is_dry_run == 'false' && steps.vars.outputs.is_nightly == 'false' && steps.vars.outputs.is_preview == 'false' }}

|

||||

env:

|

||||

GITHUB_TOKEN: '${{ secrets.CI_BOT_PAT }}'

|

||||

GITHUB_TOKEN: '${{ secrets.GITHUB_TOKEN }}'

|

||||

PR_URL: '${{ steps.pr.outputs.PR_URL }}'

|

||||

run: |-

|

||||

set -euo pipefail

|

||||

gh pr merge "${PR_URL}" --merge --auto --delete-branch

|

||||

gh pr merge "${PR_URL}" --merge --auto

|

||||

|

||||

- name: 'Create Issue on Failure'

|

||||

if: |-

|

||||

|

||||

7

.vscode/settings.json

vendored


@@ -13,10 +13,5 @@

|

||||

"[javascript]": {

|

||||

"editor.defaultFormatter": "esbenp.prettier-vscode"

|

||||

},

|

||||

"vitest.disableWorkspaceWarning": true,

|

||||

"lsp": {

|

||||

"enabled": true,

|

||||

"allowed": ["typescript-language-server"],

|

||||

"excluded": ["gopls"]

|

||||

}

|

||||

"vitest.disableWorkspaceWarning": true

|

||||

}

|

||||

|

||||

10

README.md


@@ -25,7 +25,7 @@ Qwen Code is an open-source AI agent for the terminal, optimized for [Qwen3-Code

|

||||

- **OpenAI-compatible, OAuth free tier**: use an OpenAI-compatible API, or sign in with Qwen OAuth to get 2,000 free requests/day.

|

||||

- **Open-source, co-evolving**: both the framework and the Qwen3-Coder model are open-source—and they ship and evolve together.

|

||||

- **Agentic workflow, feature-rich**: rich built-in tools (Skills, SubAgents, Plan Mode) for a full agentic workflow and a Claude Code-like experience.

|

||||

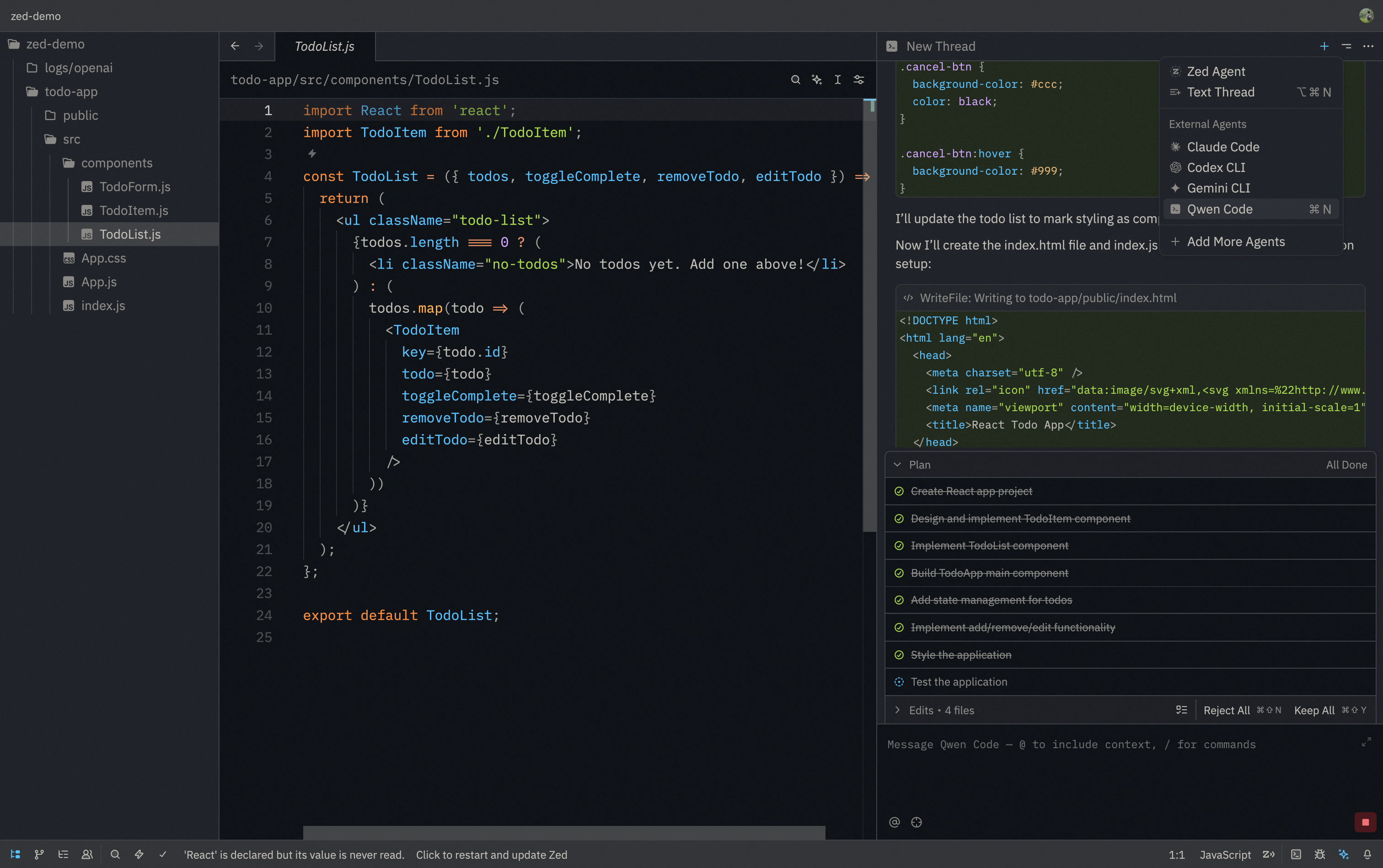

- **Terminal-first, IDE-friendly**: built for developers who live in the command line, with optional integration for VS Code, Zed, and JetBrains IDEs.

|

||||

- **Terminal-first, IDE-friendly**: built for developers who live in the command line, with optional integration for VS Code and Zed.

|

||||

|

||||

## Installation

|

||||

|

||||

@@ -137,11 +137,10 @@ Use `-p` to run Qwen Code without the interactive UI—ideal for scripts, automa

|

||||

|

||||

#### IDE integration

|

||||

|

||||

Use Qwen Code inside your editor (VS Code, Zed, and JetBrains IDEs):

|

||||

Use Qwen Code inside your editor (VS Code and Zed):

|

||||

|

||||

- [Use in VS Code](https://qwenlm.github.io/qwen-code-docs/en/users/integration-vscode/)

|

||||

- [Use in Zed](https://qwenlm.github.io/qwen-code-docs/en/users/integration-zed/)

|

||||

- [Use in JetBrains IDEs](https://qwenlm.github.io/qwen-code-docs/en/users/integration-jetbrains/)

|

||||

|

||||

#### TypeScript SDK

|

||||

|

||||

@@ -201,11 +200,6 @@ If you encounter issues, check the [troubleshooting guide](https://qwenlm.github

|

||||

|

||||

To report a bug from within the CLI, run `/bug` and include a short title and repro steps.

|

||||

|

||||

## Connect with Us

|

||||

|

||||

- Discord: https://discord.gg/ycKBjdNd

|

||||

- Dingtalk: https://qr.dingtalk.com/action/joingroup?code=v1,k1,+FX6Gf/ZDlTahTIRi8AEQhIaBlqykA0j+eBKKdhLeAE=&_dt_no_comment=1&origin=1

|

||||

|

||||

## Acknowledgments

|

||||

|

||||

This project is based on [Google Gemini CLI](https://github.com/google-gemini/gemini-cli). We acknowledge and appreciate the excellent work of the Gemini CLI team. Our main contribution focuses on parser-level adaptations to better support Qwen-Coder models.

|

||||

|

||||

@@ -1,147 +0,0 @@

|

||||

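# Qwen Code CLI LSP Integration: Implementation Plan Analysis

## 1. Project Overview

This plan aims to integrate LSP (Language Server Protocol) capabilities natively into the Qwen Code CLI so that the AI agent can use code navigation, go-to-definition, find-references, and similar features. LSP will be implemented as a first-class extension mechanism that runs in parallel with MCP.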

|

||||

|

||||

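## 2. Comparison of Technical Approaches

### 2.1 Piebald-AI/claude-code-lsps approach

- **Architecture**: the client communicates directly with each LSP server; a `.lsp.json` configuration file declares the server command/arguments, stdio transport, and file-extension routing
- **User configuration**: low friction; place a `.lsp.json` file and make sure the LSP binaries are installed
- **Security**: LSP child processes run with the user's permissions, with no built-in trust gating
- **Feature coverage**: can expose the full LSP surface (hover, diagnostics, code actions, rename, etc.)

### 2.2 Native LSP client approach (recommended)

- **Architecture**: Qwen Code CLI acts directly as the LSP client and establishes JSON-RPC connections with language servers
- **User configuration**: supports built-in presets plus user-defined `.lsp.json` configuration
- **Security**: shares the same security controls as MCP (trusted workspaces, allow/deny lists, confirmation prompts)
- **Feature coverage**: exposes full LSP functionality (streaming diagnostics, code actions, rename, semantic tokens, etc.)

### 2.3 cclsp + MCP approach (alternative)

- **Architecture**: calls cclsp as an LSP bridge through the MCP protocol
- **User configuration**: requires MCP configuration
- **Security**: governed by MCP security controls
- **Feature coverage**: depends on the MCP tools that cclsp maps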

|

||||

|

||||

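## 3. Detailed Plan for Native LSP Integration

### 3.1 Approach Selection

- **Recommended approach**: the native LSP client as the primary path, because it provides full LSP functionality, lower latency, and a better user experience
- **Compatibility layer**: keep cclsp+MCP as a compatibility bridge for existing MCP workflows
- **Parallel architecture**: LSP and MCP coexist as independent extension mechanisms and share security policy

### 3.2 Implementation Steps

#### 3.2.1 Create the native LSP service

Create a `NativeLspService` class under `packages/cli/src/services/lsp/` that handles:

- Workspace language detection
- Automatic discovery and startup of language servers
- Synchronization with the existing document/edit model
- Exposing LSP capabilities directly to the agent

#### 3.2.2 Configuration support

- Support built-in preset configurations (common language servers)
- Support user-defined `.lsp.json` configuration files
- Coexist with MCP configuration and share trust controls

#### 3.2.3 Integrate into the startup flow

- Integrate inside the `loadCliConfig` function in `packages/cli/src/config/config.ts`
- Ensure the LSP service shares the same security control mechanisms as the MCP service
- Handle the duplicate invocation caused by the sandbox preflight and the main run

#### 3.2.4 Feature flag configuration

- Add new settings in `packages/cli/src/config/settingsSchema.ts`
- Provide a global switch (e.g. `lsp.enabled=false`) that lets users disable LSP
- Respect `mcp.allowed`/`mcp.excluded` and the folder trust settings

#### 3.2.5 Security controls

- Share the same security control mechanisms as MCP
- Enable automatically in trusted workspaces; prompt the user in untrusted workspaces
- Implement a path allowlist and process-launch confirmation

#### 3.2.6 Error handling and user notification

- Detect missing language servers and provide installation commands
- Display error information through the existing MCP status UI
- Implement a retry/backoff mechanism; detect sandbox environments and suppress automatic startup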

|

||||

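### 3.3 Open Items to Confirm

1. **Startup integration point**: integrating the native LSP service in `loadCliConfig` requires coordination with the MCP service
2. **Configuration priority**: if the user already has a cclsp MCP configuration, should the two coexist, or should native LSP take precedence?
3. **Feature switch design**: the switch should be global, and LSP and MCP should be independently enabled/disabled
4. **Shared security model**: how to reuse MCP's trust/security control logic in code
5. **Language server management**: how to manage LSP server lifecycles and keep them in sync with the document editing model
6. **Dependency detection**: detect LSP server availability and offer fallback options on failure
7. **Testing strategy**: test LSP and MCP running in parallel, as well as the shared security controls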

|

||||

|

||||

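### 3.4 Security Considerations

- Share the same security control model as MCP
- Enable automatic LSP functionality only in trusted workspaces
- Provide a user confirmation mechanism for starting new LSP servers
- Prevent path hijacking by using safe path resolution

### 3.5 Advanced LSP Feature Support

- **Full LSP functionality**: support streaming diagnostics, code actions, rename, semantic highlighting, workspace edits, and more
- **Claude-compatible configuration**: support importing Claude Code-style `.lsp.json` configuration
- **Performance optimization**: optimize LSP server startup time and memory usage

### 3.6 User Experience

- Provide installation hints rather than installing automatically
- Show LSP and MCP server status in a unified status view
- Provide independent switches so users can control LSP and MCP separately
- Provide safe configuration handling and clear error messages for read-only/sandbox environments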

|

||||

|

||||

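## 4. Implementation Summary

### 4.1 Completed Work

1. **NativeLspService class**: created the core service class, covering language detection, configuration merging, and LSP connection management
2. **LSP connection factory**: implemented stdio-based LSP connection creation and management
3. **Language detection**: implemented automatic language detection based on file extensions and project configuration files
4. **Configuration system**: implemented merging of built-in presets, user configuration, and Claude-compatible configuration
5. **Security controls**: implemented security control mechanisms shared with MCP, including trust checks, user confirmation, and path safety validation
6. **CLI integration**: added the LSP service initialization point to the `loadCliConfig` function

### 4.2 Key Components

#### 4.2.1 LspConnectionFactory

- Uses `vscode-jsonrpc` and `vscode-languageserver-protocol` to implement LSP connections
- Supports stdio transport and can be extended to support TCP transport
- Provides full lifecycle management: connection creation, initialization, and shutdown

#### 4.2.2 NativeLspService

- **Language detection**: scans project files and configuration files to identify programming languages
- **Configuration merging**: merges built-in presets, user configuration, and compatibility-layer configuration by priority
- **LSP server management**: start, stop, and status management
- **Security controls**: trust and confirmation mechanisms shared with MCP

#### 4.2.3 Configuration architecture

- **Built-in presets**: provide default LSP server configurations for common languages
- **User configuration**: supports the `.lsp.json` file format
- **Claude compatibility**: can import Claude Code LSP configuration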
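The priority-based configuration merge described above could look roughly like the sketch below. It is only an illustration: the `LspServerConfig` fields, the `mergeLspConfigs` helper, the `my-ts-server` command, and the assumed priority order (user configuration over Claude-compatible configuration over built-in presets) are hypothetical and not taken from the project's code.

```typescript
// Hypothetical shape of one language-server entry; the field names are
// assumptions for illustration, not the project's actual schema.
interface LspServerConfig {
  command: string;
  args?: string[];
  extensions?: string[];
}

type LspConfigMap = Record<string, LspServerConfig>;

// Merge config sources listed from lowest to highest priority.
// A later source replaces an earlier entry that has the same server name.
function mergeLspConfigs(...sources: LspConfigMap[]): LspConfigMap {
  const merged: LspConfigMap = {};
  for (const source of sources) {
    for (const name of Object.keys(source)) {
      merged[name] = { ...source[name] };
    }
  }
  return merged;
}

const builtinPresets: LspConfigMap = {
  typescript: {
    command: "typescript-language-server",
    args: ["--stdio"],
    extensions: [".ts", ".tsx"],
  },
};
const claudeCompat: LspConfigMap = {
  go: { command: "gopls", extensions: [".go"] },
};
const userConfig: LspConfigMap = {
  // "my-ts-server" is a made-up command standing in for a user override.
  typescript: { command: "my-ts-server", args: ["--stdio"] },
};

// Assumed order: presets first, then the compatibility layer, then user config.
const effective = mergeLspConfigs(builtinPresets, claudeCompat, userConfig);
```

Replacing a whole entry per server name, instead of deep-merging fields, keeps the outcome easy to predict; whether the real service merges at entry level or field level is not stated in the plan.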

|

||||

|

||||

### 4.3 Dependency Management

- Uses `vscode-languageserver-protocol` for LSP protocol communication
- Uses `vscode-jsonrpc` for JSON-RPC message passing
- Uses `vscode-languageserver-textdocument` to manage document versions

### 4.4 Security Features

- Workspace trust checks
- User confirmation mechanism (for untrusted workspaces)
- Command existence validation
- Path safety checks

|

||||

|

||||

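## 5. Summary

The native LSP client is currently the option that best fits the Qwen Code architecture: it provides full LSP functionality, lower latency, and a better user experience. As a first-class extension mechanism parallel to MCP, LSP will share MCP's security control policy while offering richer code intelligence. cclsp+MCP can be kept as a compatibility layer to support existing MCP workflows.

This implementation plan will give the Qwen Code CLI full LSP functionality, including go-to-definition, find references, auto-completion, and code diagnostics, providing the AI agent with richer code understanding.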

|

||||

@@ -10,5 +10,4 @@ export default {

|

||||

'web-search': 'Web Search',

|

||||

memory: 'Memory',

|

||||

'mcp-server': 'MCP Servers',

|

||||

sandbox: 'Sandboxing',

|

||||

};

|

||||

|

||||

@@ -1,90 +0,0 @@

|

||||

## Customizing the sandbox environment (Docker/Podman)

|

||||

|

||||

### `BUILD_SANDBOX` is currently not supported when Qwen Code is installed from the npm package

|

||||

|

||||

1. Building a custom sandbox requires the build script (`scripts/build_sandbox.js`) from the source code repository.

|

||||

2. This build script is not included in the packages published to npm.

|

||||

3. The code contains hard-coded path checks that explicitly reject build requests made outside a source checkout.

|

||||

|

||||

If you need extra tools inside the container (e.g., `git`, `python`, `rg`), create a custom Dockerfile as follows:

|

||||

|

||||

#### 1. Clone the Qwen Code project first: https://github.com/QwenLM/qwen-code.git

|

||||

|

||||

#### 2. Run the following commands in the source code repository directory

|

||||

|

||||

```bash

|

||||

# 1. First, install the dependencies of the project

|

||||

npm install

|

||||

|

||||

# 2. Build the Qwen Code project

|

||||

npm run build

|

||||

|

||||

# 3. Verify that the dist directory has been generated

|

||||

ls -la packages/cli/dist/

|

||||

|

||||

# 4. Create a global link in the CLI package directory

|

||||

cd packages/cli

|

||||

npm link

|

||||

|

||||

# 5. Verify the link (it should now point to the source code)

|

||||

which qwen

|

||||

# Expected output: /xxx/xxx/.nvm/versions/node/v24.11.1/bin/qwen

|

||||

# Or similar paths, but it should be a symbolic link

|

||||

|

||||

# 6. Inspect the symbolic link to see the source code path it points to

|

||||

ls -la $(dirname $(which qwen))/../lib/node_modules/@qwen-code/qwen-code

|

||||

# It should show that this is a symbolic link pointing to your source code directory

|

||||

|

||||

# 7. Check the qwen version

|

||||

qwen -v

|

||||

# Note: npm link overwrites the global qwen. If both builds report the same version number, uninstall the global CLI first so you can tell them apart.

|

||||

```

|

||||

|

||||

#### 3. Create your sandbox Dockerfile in the root directory of your own project

|

||||

|

||||

- Path: `.qwen/sandbox.Dockerfile`

|

||||

|

||||

- Official image: https://github.com/QwenLM/qwen-code/pkgs/container/qwen-code

|

||||

|

||||

```dockerfile

|

||||

# Based on the official Qwen sandbox image (pinning an explicit version is recommended)

|

||||

FROM ghcr.io/qwenlm/qwen-code:sha-570ec43

|

||||

# Add your extra tools here

|

||||

RUN apt-get update && apt-get install -y \

|

||||

git \

|

||||

python3 \

|

||||

ripgrep

|

||||

```

|

||||

|

||||

#### 4. Build the first sandbox image from the root directory of your project

|

||||

|

||||

```bash

|

||||

GEMINI_SANDBOX=docker BUILD_SANDBOX=1 qwen -s

|

||||

# Check that the sandbox version reported at launch matches the version of your custom image. If they match, the startup succeeded.

|

||||

```

|

||||

|

||||

This builds a project-specific image based on the default sandbox image.

|

||||

|

||||

#### Remove npm link

|

||||

|

||||

- To restore the official qwen CLI, remove the npm link:

|

||||

|

||||

```bash

|

||||

# Method 1: Unlink globally

|

||||

npm unlink -g @qwen-code/qwen-code

|

||||

|

||||

# Method 2: Remove it in the packages/cli directory

|

||||

cd packages/cli

|

||||

npm unlink

|

||||

|

||||

# Verify that the link has been removed

|

||||

which qwen

|

||||

# It should display "qwen not found"

|

||||

|

||||

# Reinstall the global version if necessary

|

||||

npm install -g @qwen-code/qwen-code

|

||||

|

||||

# Verify the restored installation

|

||||

which qwen

|

||||

qwen --version

|

||||

```

|

||||

@@ -12,7 +12,6 @@ export default {

|

||||

},

|

||||

'integration-vscode': 'Visual Studio Code',

|

||||

'integration-zed': 'Zed IDE',

|

||||

'integration-jetbrains': 'JetBrains IDEs',

|

||||

'integration-github-action': 'Github Actions',

|

||||

'Code with Qwen Code': {

|

||||

type: 'separator',

|

||||

|

||||

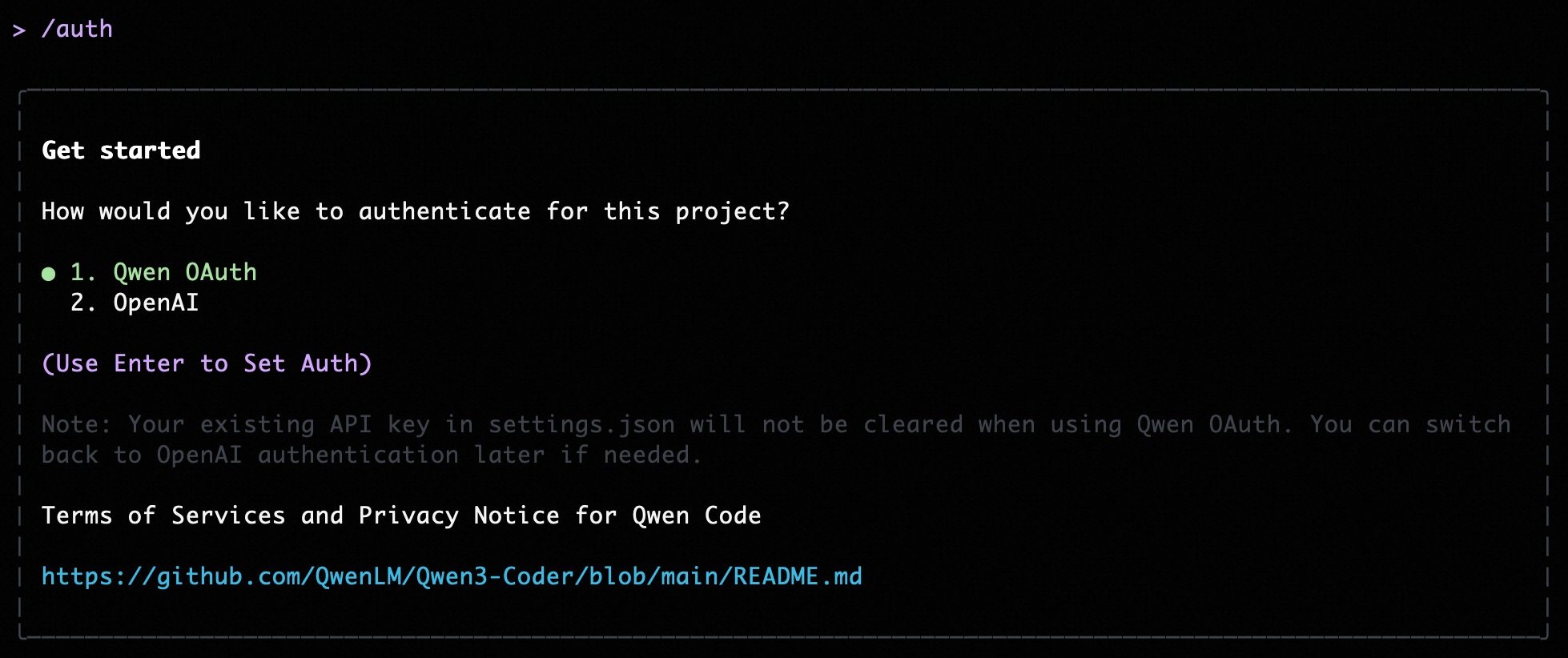

@@ -5,13 +5,11 @@ Qwen Code supports two authentication methods. Pick the one that matches how you

|

||||

- **Qwen OAuth (recommended)**: sign in with your `qwen.ai` account in a browser.

|

||||

- **OpenAI-compatible API**: use an API key (OpenAI or any OpenAI-compatible provider / endpoint).

|

||||

|

||||

|

||||

|

||||

## Option 1: Qwen OAuth (recommended & free) 👍

|

||||

|

||||

Use this if you want the simplest setup and you're using Qwen models.

|

||||

Use this if you want the simplest setup and you’re using Qwen models.

|

||||

|

||||

- **How it works**: on first start, Qwen Code opens a browser login page. After you finish, credentials are cached locally so you usually won't need to log in again.

|

||||

- **How it works**: on first start, Qwen Code opens a browser login page. After you finish, credentials are cached locally so you usually won’t need to log in again.

|

||||

- **Requirements**: a `qwen.ai` account + internet access (at least for the first login).

|

||||

- **Benefits**: no API key management, automatic credential refresh.

|

||||

- **Cost & quota**: free, with a quota of **60 requests/minute** and **2,000 requests/day**.

|

||||

@@ -26,54 +24,15 @@ qwen

|

||||

|

||||

Use this if you want to use OpenAI models or any provider that exposes an OpenAI-compatible API (e.g. OpenAI, Azure OpenAI, OpenRouter, ModelScope, Alibaba Cloud Bailian, or a self-hosted compatible endpoint).

|

||||

|

||||

### Recommended: Coding Plan (subscription-based) 🚀

|

||||

### Quick start (interactive, recommended for local use)

|

||||

|

||||

Use this if you want predictable costs with higher usage quotas for the qwen3-coder-plus model.

|

||||

When you choose the OpenAI-compatible option in the CLI, it will prompt you for:

|

||||

|

||||

> [!IMPORTANT]

|

||||

>

|

||||

> Coding Plan is only available to users in mainland China (Beijing region).

|

||||

- **API key**

|

||||

- **Base URL** (default: `https://api.openai.com/v1`)

|

||||

- **Model** (default: `gpt-4o`)

|

||||

|

||||

- **How it works**: subscribe to the Coding Plan with a fixed monthly fee, then configure Qwen Code to use the dedicated endpoint and your subscription API key.

|

||||

- **Requirements**: an active Coding Plan subscription from [Alibaba Cloud Bailian](https://bailian.console.aliyun.com/cn-beijing/?tab=globalset#/efm/coding_plan).

|

||||

- **Benefits**: higher usage quotas, predictable monthly costs, access to the latest qwen3-coder-plus model.

|

||||

- **Cost & quota**: varies by plan (see table below).

|

||||

|

||||

#### Coding Plan Pricing & Quotas

|

||||

|

||||

| Feature | Lite Basic Plan | Pro Advanced Plan |

|

||||

| :------------------ | :-------------------- | :-------------------- |

|

||||

| **Price** | ¥40/month | ¥200/month |

|

||||

| **5-Hour Limit** | Up to 1,200 requests | Up to 6,000 requests |

|

||||

| **Weekly Limit** | Up to 9,000 requests | Up to 45,000 requests |

|

||||

| **Monthly Limit** | Up to 18,000 requests | Up to 90,000 requests |

|

||||

| **Supported Model** | qwen3-coder-plus | qwen3-coder-plus |

|

||||

|

||||

#### Quick Setup for Coding Plan

|

||||

|

||||

When you select the OpenAI-compatible option in the CLI, enter these values:

|

||||

|

||||

- **API key**: `sk-sp-xxxxx`

|

||||

- **Base URL**: `https://coding.dashscope.aliyuncs.com/v1`

|

||||

- **Model**: `qwen3-coder-plus`

|

||||

|

||||

> **Note**: Coding Plan API keys have the format `sk-sp-xxxxx`, which is different from standard Alibaba Cloud API keys.

|

||||

|

||||

#### Configure via Environment Variables

|

||||

|

||||

Set these environment variables to use Coding Plan:

|

||||

|

||||

```bash

|

||||

export OPENAI_API_KEY="your-coding-plan-api-key" # Format: sk-sp-xxxxx

|

||||

export OPENAI_BASE_URL="https://coding.dashscope.aliyuncs.com/v1"

|

||||

export OPENAI_MODEL="qwen3-coder-plus"

|

||||

```

|

||||

|

||||

For more details about Coding Plan, including subscription options and troubleshooting, see the [full Coding Plan documentation](https://bailian.console.aliyun.com/cn-beijing/?tab=doc#/doc/?type=model&url=3005961).

|

||||

|

||||

### Other OpenAI-compatible Providers

|

||||

|

||||

If you are using other providers (OpenAI, Azure, local LLMs, etc.), use the following configuration methods.

|

||||

> **Note:** the CLI may display the key in plain text for verification. Make sure your terminal is not being recorded or shared.

|

||||

|

||||

### Configure via command-line arguments

|

||||

|

||||

|

||||

@@ -104,7 +104,7 @@ Settings are organized into categories. All settings should be placed within the

|

||||

| `model.name` | string | The Qwen model to use for conversations. | `undefined` |

|

||||

| `model.maxSessionTurns` | number | Maximum number of user/model/tool turns to keep in a session. -1 means unlimited. | `-1` |

|

||||

| `model.summarizeToolOutput` | object | Enables or disables the summarization of tool output. You can specify the token budget for the summarization using the `tokenBudget` setting. Note: Currently only the `run_shell_command` tool is supported. For example `{"run_shell_command": {"tokenBudget": 2000}}` | `undefined` |

|

||||

| `model.generationConfig` | object | Advanced overrides passed to the underlying content generator. Supports request controls such as `timeout`, `maxRetries`, `disableCacheControl`, and `customHeaders` (custom HTTP headers for API requests), along with fine-tuning knobs under `samplingParams` (for example `temperature`, `top_p`, `max_tokens`). Leave unset to rely on provider defaults. | `undefined` |

|

||||

| `model.generationConfig` | object | Advanced overrides passed to the underlying content generator. Supports request controls such as `timeout`, `maxRetries`, and `disableCacheControl`, along with fine-tuning knobs under `samplingParams` (for example `temperature`, `top_p`, `max_tokens`). Leave unset to rely on provider defaults. | `undefined` |

|

||||

| `model.chatCompression.contextPercentageThreshold` | number | Sets the threshold for chat history compression as a percentage of the model's total token limit. This is a value between 0 and 1 that applies to both automatic compression and the manual `/compress` command. For example, a value of `0.6` will trigger compression when the chat history exceeds 60% of the token limit. Use `0` to disable compression entirely. | `0.7` |

|

||||

| `model.skipNextSpeakerCheck` | boolean | Skip the next speaker check. | `false` |

|

||||

| `model.skipLoopDetection` | boolean | Disables loop detection checks. Loop detection prevents infinite loops in AI responses but can generate false positives that interrupt legitimate workflows. Enable this option if you experience frequent false positive loop detection interruptions. | `false` |

|

||||

@@ -114,16 +114,12 @@ Settings are organized into categories. All settings should be placed within the

|

||||

|

||||

**Example model.generationConfig:**

|

||||

|

||||

```json

|

||||

```

|

||||

{

|

||||

"model": {

|

||||

"generationConfig": {

|

||||

"timeout": 60000,

|

||||

"disableCacheControl": false,

|

||||

"customHeaders": {

|

||||

"X-Request-ID": "req-123",

|

||||

"X-User-ID": "user-456"

|

||||

},

|

||||

"samplingParams": {

|

||||

"temperature": 0.2,

|

||||

"top_p": 0.8,

|

||||

@@ -134,113 +130,19 @@ Settings are organized into categories. All settings should be placed within the

|

||||

}

|

||||

```

|

||||

|

||||

The `customHeaders` field allows you to add custom HTTP headers to all API requests. This is useful for request tracing, monitoring, API gateway routing, or when different models require different headers. If `customHeaders` is defined in `modelProviders[].generationConfig.customHeaders`, it will be used directly; otherwise, headers from `model.generationConfig.customHeaders` will be used. No merging occurs between the two levels.

|

||||

|

||||

**model.openAILoggingDir examples:**

|

||||

|

||||

- `"~/qwen-logs"` - Logs to `~/qwen-logs` directory

|

||||

- `"./custom-logs"` - Logs to `./custom-logs` relative to current directory

|

||||

- `"/tmp/openai-logs"` - Logs to absolute path `/tmp/openai-logs`

|

||||

|

||||

#### modelProviders

|

||||

|

||||

Use `modelProviders` to declare curated model lists per auth type that the `/model` picker can switch between. Keys must be valid auth types (`openai`, `anthropic`, `gemini`, `vertex-ai`, etc.). Each entry requires an `id` and **must include `envKey`**, with optional `name`, `description`, `baseUrl`, and `generationConfig`. Credentials are never persisted in settings; the runtime reads them from `process.env[envKey]`. Qwen OAuth models remain hard-coded and cannot be overridden.

|

||||

|

||||

##### Example

|

||||

|

||||

```json

|

||||

{

|

||||

"modelProviders": {

|

||||

"openai": [

|

||||

{

|

||||

"id": "gpt-4o",

|

||||

"name": "GPT-4o",

|

||||

"envKey": "OPENAI_API_KEY",

|

||||

"baseUrl": "https://api.openai.com/v1",

|

||||

"generationConfig": {

|

||||

"timeout": 60000,

|

||||

"maxRetries": 3,

|

||||

"customHeaders": {

|

||||

"X-Model-Version": "v1.0",

|

||||

"X-Request-Priority": "high"

|

||||

},

|

||||

"samplingParams": { "temperature": 0.2 }

|

||||

}

|

||||

}

|

||||

],

|

||||

"anthropic": [

|

||||

{

|

||||

"id": "claude-3-5-sonnet",

|

||||

"envKey": "ANTHROPIC_API_KEY",

|

||||

"baseUrl": "https://api.anthropic.com/v1"

|

||||

}

|

||||

],

|

||||

"gemini": [

|

||||

{

|

||||

"id": "gemini-2.0-flash",

|

||||

"name": "Gemini 2.0 Flash",

|

||||

"envKey": "GEMINI_API_KEY",

|

||||

"baseUrl": "https://generativelanguage.googleapis.com"

|

||||

}

|

||||

],

|

||||

"vertex-ai": [

|

||||

{

|

||||

"id": "gemini-1.5-pro-vertex",

|

||||

"envKey": "GOOGLE_API_KEY",

|

||||

"baseUrl": "https://generativelanguage.googleapis.com"

|

||||

}

|

||||

]

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

> [!note]

|

||||

> Only the `/model` command exposes non-default auth types. Anthropic, Gemini, Vertex AI, etc., must be defined via `modelProviders`. The `/auth` command intentionally lists only the built-in Qwen OAuth and OpenAI flows.

|

||||

|

||||

##### Resolution layers and atomicity

|

||||

|

||||

The effective auth/model/credential values are chosen per field using the following precedence (first present wins). You can combine `--auth-type` with `--model` to point directly at a provider entry; these CLI flags run before other layers.

|

||||

|

||||

| Layer (highest → lowest) | authType | model | apiKey | baseUrl | apiKeyEnvKey | proxy |

|

||||

| -------------------------- | ----------------------------------- | ----------------------------------------------- | --------------------------------------------------- | ---------------------------------------------------- | ---------------------- | --------------------------------- |

|

||||

| Programmatic overrides | `/auth ` | `/auth` input | `/auth` input | `/auth` input | — | — |

|

||||

| Model provider selection | — | `modelProvider.id` | `env[modelProvider.envKey]` | `modelProvider.baseUrl` | `modelProvider.envKey` | — |

|

||||

| CLI arguments | `--auth-type` | `--model` | `--openaiApiKey` (or provider-specific equivalents) | `--openaiBaseUrl` (or provider-specific equivalents) | — | — |

|

||||

| Environment variables | — | Provider-specific mapping (e.g. `OPENAI_MODEL`) | Provider-specific mapping (e.g. `OPENAI_API_KEY`) | Provider-specific mapping (e.g. `OPENAI_BASE_URL`) | — | — |

|

||||

| Settings (`settings.json`) | `security.auth.selectedType` | `model.name` | `security.auth.apiKey` | `security.auth.baseUrl` | — | — |

|

||||

| Default / computed | Falls back to `AuthType.QWEN_OAUTH` | Built-in default (OpenAI ⇒ `qwen3-coder-plus`) | — | — | — | `Config.getProxy()` if configured |

|

||||

|

||||

\*When present, CLI auth flags override settings. Otherwise, `security.auth.selectedType` or the implicit default determine the auth type. Qwen OAuth and OpenAI are the only auth types surfaced without extra configuration.

|

||||

|

||||

Model-provider sourced values are applied atomically: once a provider model is active, every field it defines is protected from lower layers until you manually clear credentials via `/auth`. The final `generationConfig` is the projection across all layers—lower layers only fill gaps left by higher ones, and the provider layer remains impenetrable.

|

||||

|

||||

The merge strategy for `modelProviders` is REPLACE: the entire `modelProviders` from project settings will override the corresponding section in user settings, rather than merging the two.
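As a rough illustration of the REPLACE strategy, the sketch below resolves `modelProviders` from two settings scopes. The `SettingsScope` type and the `resolveModelProviders` helper are hypothetical names introduced here; only the `id`, `envKey`, `name`, and `baseUrl` fields come from the text above.

```typescript
interface ModelProviderEntry {
  id: string;
  envKey: string;
  name?: string;
  baseUrl?: string;
}

type ModelProviders = Record<string, ModelProviderEntry[]>;

// Hypothetical stand-in for one settings scope (user or project).
interface SettingsScope {
  modelProviders?: ModelProviders;
}

// REPLACE: if the project scope defines modelProviders at all, it is used
// as a whole; nothing from the user scope is merged into it.
function resolveModelProviders(
  userScope: SettingsScope,
  projectScope: SettingsScope,
): ModelProviders {
  return projectScope.modelProviders ?? userScope.modelProviders ?? {};
}

const userScope: SettingsScope = {
  modelProviders: {
    openai: [{ id: "gpt-4o", envKey: "OPENAI_API_KEY" }],
    anthropic: [{ id: "claude-3-5-sonnet", envKey: "ANTHROPIC_API_KEY" }],
  },
};
const projectScope: SettingsScope = {
  modelProviders: {
    openai: [{ id: "qwen3-coder-plus", envKey: "OPENAI_API_KEY" }],
  },
};

// Only the project's "openai" list survives; the user's "anthropic"
// entry is dropped rather than merged in.
const resolved = resolveModelProviders(userScope, projectScope);
```

This is why the documentation recommends keeping the provider catalog in one scope: a partial project-level catalog hides everything defined at user level.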

|

||||

|

||||

##### Generation config layering

|

||||

|

||||

Per-field precedence for `generationConfig`:

|

||||

|

||||

1. Programmatic overrides (e.g. runtime `/model`, `/auth` changes)

|

||||

2. `modelProviders[authType][].generationConfig`

|

||||

3. `settings.model.generationConfig`

|

||||

4. Content-generator defaults (`getDefaultGenerationConfig` for OpenAI, `getParameterValue` for Gemini, etc.)

|

||||

|

||||

`samplingParams` and `customHeaders` are both treated atomically; provider values replace the entire object. If `modelProviders[].generationConfig` defines these fields, they are used directly; otherwise, values from `model.generationConfig` are used. No merging occurs between provider and global configuration levels. Defaults from the content generator apply last so each provider retains its tuned baseline.
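The per-field layering with atomic objects can be sketched as follows. This is a simplified model under stated assumptions, not the project's resolver: `firstDefined` and `layerGenerationConfig` are hypothetical helpers, and the layers are passed as a plain array ordered from highest to lowest precedence.

```typescript
interface GenerationConfig {
  timeout?: number;
  maxRetries?: number;
  disableCacheControl?: boolean;
  customHeaders?: Record<string, string>;
  samplingParams?: Record<string, number>;
}

// Return the value of `key` from the first layer that defines it.
function firstDefined<K extends keyof GenerationConfig>(
  layers: GenerationConfig[],
  key: K,
): GenerationConfig[K] | undefined {
  for (const layer of layers) {
    const value = layer[key];
    if (value !== undefined) return value;
  }
  return undefined;
}

// `layers` is ordered highest to lowest precedence. Object-valued fields
// (samplingParams, customHeaders) are taken whole from the first layer
// that defines them; their inner keys are never merged across layers.
function layerGenerationConfig(layers: GenerationConfig[]): GenerationConfig {
  return {
    timeout: firstDefined(layers, "timeout"),
    maxRetries: firstDefined(layers, "maxRetries"),
    disableCacheControl: firstDefined(layers, "disableCacheControl"),
    customHeaders: firstDefined(layers, "customHeaders"),
    samplingParams: firstDefined(layers, "samplingParams"),
  };
}

const providerLayer: GenerationConfig = {
  timeout: 60000,
  samplingParams: { temperature: 0.2 },
};
const settingsLayer: GenerationConfig = {
  maxRetries: 3,
  customHeaders: { "X-Request-ID": "req-123" },
  samplingParams: { temperature: 0.7, top_p: 0.8 },
};

const layered = layerGenerationConfig([providerLayer, settingsLayer]);
```

In this example `layered.samplingParams` is the provider's object only, so `top_p` from the settings layer does not leak through, while `maxRetries` and `customHeaders` fill the gaps the provider left open.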

|

||||

|

||||

##### Selection persistence and recommendations

|

||||

|

||||

> [!important]

|

||||

> Define `modelProviders` in the user-scope `~/.qwen/settings.json` whenever possible and avoid persisting credential overrides in any scope. Keeping the provider catalog in user settings prevents merge/override conflicts between project and user scopes and ensures `/auth` and `/model` updates always write back to a consistent scope.

|

||||

|

||||

- `/model` and `/auth` persist `model.name` (where applicable) and `security.auth.selectedType` to the closest writable scope that already defines `modelProviders`; otherwise they fall back to the user scope. This keeps workspace/user files in sync with the active provider catalog.

|

||||

- Without `modelProviders`, the resolver mixes CLI/env/settings layers, which is fine for single-provider setups but cumbersome when frequently switching. Define provider catalogs whenever multi-model workflows are common so that switches stay atomic, source-attributed, and debuggable.

|

||||

|

||||

#### context

|

||||

|

||||

| Setting | Type | Description | Default |

|

||||

| ------------------------------------------------- | -------------------------- | --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | ----------- |

|

||||

| `context.fileName` | string or array of strings | The name of the context file(s). | `undefined` |

|

||||

| `context.importFormat` | string | The format to use when importing memory. | `undefined` |

|

||||

| `context.discoveryMaxDirs` | number | Maximum number of directories to search for memory. | `200` |

|

||||

| `context.includeDirectories` | array | Additional directories to include in the workspace context. Specifies an array of additional absolute or relative paths to include in the workspace context. Missing directories will be skipped with a warning by default. Paths can use `~` to refer to the user's home directory. This setting can be combined with the `--include-directories` command-line flag. | `[]` |

|

||||

| `context.loadFromIncludeDirectories` | boolean | Controls the behavior of the `/memory refresh` command. If set to `true`, `QWEN.md` files should be loaded from all directories that are added. If set to `false`, `QWEN.md` should only be loaded from the current directory. | `false` |

|

||||

| `context.fileFiltering.respectGitIgnore` | boolean | Respect .gitignore files when searching. | `true` |

|

||||

@@ -287,26 +189,6 @@ If you are experiencing performance issues with file searching (e.g., with `@` c

|

||||

>

|

||||

> **Security Note for MCP servers:** These settings use simple string matching on MCP server names, which can be modified. If you're a system administrator looking to prevent users from bypassing this, consider configuring the `mcpServers` at the system settings level such that the user will not be able to configure any MCP servers of their own. This should not be used as an airtight security mechanism.

|

||||

|

||||

#### lsp

|

||||

|

||||

> [!warning]

|

||||

> **Experimental Feature**: LSP support is currently experimental and disabled by default. Enable it using the `--experimental-lsp` command line flag.

|

||||

|

||||

Language Server Protocol (LSP) settings for code intelligence features like go-to-definition, find references, and diagnostics. See the [LSP documentation](../features/lsp) for more details.

|

||||

|

||||

| Setting | Type | Description | Default |

|

||||

| ------------------ | ---------------- | ---------------------------------------------------------------------------------------------------- | ----------- |

|

||||

| `lsp.enabled` | boolean | Enable/disable LSP support. Has no effect unless `--experimental-lsp` is provided. | `false` |

|

||||

| `lsp.autoDetect` | boolean | Automatically detect and start language servers based on project files. | `true` |

|

||||

| `lsp.serverTimeout` | number | LSP server startup timeout in milliseconds. | `10000` |

|

||||

| `lsp.allowed` | array of strings | Allowlist of LSP server names. An empty list allows all detected servers. | `[]` |

|

||||

| `lsp.excluded` | array of strings | Denylist of LSP server names. A server listed in both `lsp.allowed` and `lsp.excluded` is excluded. | `[]` |

|

||||

| `lsp.languageServers` | object | Custom language server configurations. See the [LSP documentation](../features/lsp#custom-language-servers) for configuration format. | `{}` |

|

||||

|

||||

> [!note]

|

||||

>

|

||||

> **Security Note for LSP servers:** LSP servers run with your user permissions and can execute code. They are only started in trusted workspaces by default. You can configure per-server trust requirements in the `.lsp.json` configuration file.

|

||||

|

||||

#### security

|

||||

|

||||

| Setting | Type | Description | Default |

|

||||

@@ -330,12 +212,6 @@ Language Server Protocol (LSP) settings for code intelligence features like go-t

|

||||

>

|

||||

> **Note about advanced.tavilyApiKey:** This is a legacy configuration format. For Qwen OAuth users, DashScope provider is automatically available without any configuration. For other authentication types, configure Tavily or Google providers using the new `webSearch` configuration format.

|

||||

|

||||

#### experimental

|

||||

|

||||

| Setting | Type | Description | Default |

|

||||

| --------------------- | ------- | -------------------------------- | ------- |

|

||||

| `experimental.skills` | boolean | Enable experimental Agent Skills | `false` |

|

||||

|

||||

#### mcpServers

|

||||

|

||||

Configures connections to one or more Model-Context Protocol (MCP) servers for discovering and using custom tools. Qwen Code attempts to connect to each configured MCP server to discover available tools. If multiple MCP servers expose a tool with the same name, the tool names will be prefixed with the server alias you defined in the configuration (e.g., `serverAlias__actualToolName`) to avoid conflicts. Note that the system might strip certain schema properties from MCP tool definitions for compatibility. At least one of `command`, `url`, or `httpUrl` must be provided. If multiple are specified, the order of precedence is `httpUrl`, then `url`, then `command`.
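
The precedence and naming rules above can be sketched as follows. This is a minimal illustration, not Qwen Code's actual implementation: the `McpServerConfig` and `Transport` types and both function names are hypothetical, and only the `command`, `url`, and `httpUrl` keys and the `serverAlias__actualToolName` pattern come from the text above.

```typescript
// Hypothetical config shape: only `command`, `url`, and `httpUrl` are taken from the docs.
interface McpServerConfig {
  command?: string;
  url?: string;
  httpUrl?: string;
}

// Hypothetical result type describing which connection option was chosen.
interface Transport {
  kind: "httpUrl" | "url" | "command";
  target: string;
}

// Applies the documented order of precedence: httpUrl, then url, then command.
function pickTransport(config: McpServerConfig): Transport {
  if (config.httpUrl) return { kind: "httpUrl", target: config.httpUrl };
  if (config.url) return { kind: "url", target: config.url };
  if (config.command) return { kind: "command", target: config.command };
  throw new Error("At least one of command, url, or httpUrl must be provided");
}

// Builds the prefixed name used when several servers expose the same tool name.
function prefixedToolName(serverAlias: string, toolName: string): string {
  return `${serverAlias}__${toolName}`;
}
```

For example, a server entry that sets all three keys would resolve to its `httpUrl`, and a tool named `search` on a server aliased `docs` would be exposed as `docs__search` if another server also offers `search`.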

|

||||

@@ -505,9 +381,8 @@ Arguments passed directly when running the CLI can override other configurations

|

||||

| `--telemetry-otlp-protocol` | | Sets the OTLP protocol for telemetry (`grpc` or `http`). | | Defaults to `grpc`. See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--telemetry-log-prompts` | | Enables logging of prompts for telemetry. | | See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--checkpointing` | | Enables [checkpointing](../features/checkpointing). | | |

|

||||

| `--acp` | | Enables ACP mode (Agent Client Protocol). Useful for IDE/editor integrations like [Zed](../integration-zed). | | Stable. Replaces the deprecated `--experimental-acp` flag. |

|

||||

| `--acp` | | Enables ACP mode (Agent Control Protocol). Useful for IDE/editor integrations like [Zed](../integration-zed). | | Stable. Replaces the deprecated `--experimental-acp` flag. |

|

||||

| `--experimental-skills` | | Enables experimental [Agent Skills](../features/skills) (registers the `skill` tool and loads Skills from `.qwen/skills/` and `~/.qwen/skills/`). | | Experimental. |

|

||||

| `--experimental-lsp` | | Enables experimental [LSP (Language Server Protocol)](../features/lsp) feature for code intelligence (go-to-definition, find references, diagnostics, etc.). | | Experimental. Requires language servers to be installed. |

|

||||

| `--extensions` | `-e` | Specifies a list of extensions to use for the session. | Extension names | If not provided, all available extensions are used. Use the special term `qwen -e none` to disable all extensions. Example: `qwen -e my-extension -e my-other-extension` |

|

||||

| `--list-extensions` | `-l` | Lists all available extensions and exits. | | |

|

||||

| `--proxy` | | Sets the proxy for the CLI. | Proxy URL | Example: `--proxy http://localhost:7890`. |

|

||||

@@ -555,13 +430,16 @@ Here's a conceptual example of what a context file at the root of a TypeScript p

|

||||

|

||||

This example demonstrates how you can provide general project context, specific coding conventions, and even notes about particular files or components. The more relevant and precise your context files are, the better the AI can assist you. Project-specific context files are highly encouraged to establish conventions and context.

|

||||

|

||||

- **Hierarchical Loading and Precedence:** The CLI implements a hierarchical memory system by loading context files (e.g., `QWEN.md`) from several locations. Content from files lower in this list (more specific) typically overrides or supplements content from files higher up (more general). The exact concatenation order and final context can be inspected using the `/memory show` command. The typical loading order is:

|

||||

- **Hierarchical Loading and Precedence:** The CLI implements a sophisticated hierarchical memory system by loading context files (e.g., `QWEN.md`) from several locations. Content from files lower in this list (more specific) typically overrides or supplements content from files higher up (more general). The exact concatenation order and final context can be inspected using the `/memory show` command. The typical loading order is:

|

||||

1. **Global Context File:**

|

||||

- Location: `~/.qwen/<configured-context-filename>` (e.g., `~/.qwen/QWEN.md` in your user home directory).

|

||||

- Scope: Provides default instructions for all your projects.

|

||||

2. **Project Root & Ancestors Context Files:**

|

||||

- Location: The CLI searches for the configured context file in the current working directory and then in each parent directory up to either the project root (identified by a `.git` folder) or your home directory.

|

||||

- Scope: Provides context relevant to the entire project or a significant portion of it.

|

||||

3. **Sub-directory Context Files (Contextual/Local):**

|

||||

- Location: The CLI also scans for the configured context file in subdirectories _below_ the current working directory (respecting common ignore patterns like `node_modules`, `.git`, etc.). The breadth of this search is limited to 200 directories by default, but can be configured with the `context.discoveryMaxDirs` setting in your `settings.json` file.

|

||||

- Scope: Allows for highly specific instructions relevant to a particular component, module, or subsection of your project.

|

||||

- **Concatenation & UI Indication:** The contents of all found context files are concatenated (with separators indicating their origin and path) and provided as part of the system prompt. The CLI footer displays the count of loaded context files, giving you a quick visual cue about the active instructional context.

|

||||

- **Importing Content:** You can modularize your context files by importing other Markdown files using the `@path/to/file.md` syntax. For more details, see the [Memory Import Processor documentation](../configuration/memory).

|

||||

- **Commands for Memory Management:**

|

||||

|

||||

@@ -8,7 +8,6 @@ export default {

|

||||

},

|

||||

'approval-mode': 'Approval Mode',

|

||||

mcp: 'MCP',

|

||||

lsp: 'LSP (Language Server Protocol)',

|

||||

'token-caching': 'Token Caching',

|

||||

sandbox: 'Sandboxing',

|

||||

language: 'i18n',

|

||||

|

||||

@@ -59,7 +59,6 @@ Commands for managing AI tools and models.

|

||||

| ---------------- | --------------------------------------------- | --------------------------------------------- |

|

||||

| `/mcp` | List configured MCP servers and tools | `/mcp`, `/mcp desc` |

|

||||

| `/tools` | Display currently available tool list | `/tools`, `/tools desc` |

|

||||

| `/skills` | List and run available skills (experimental) | `/skills`, `/skills <name>` |

|

||||

| `/approval-mode` | Change approval mode for tool usage | `/approval-mode <mode (auto-edit)> --project` |

|

||||

| →`plan` | Analysis only, no execution | Secure review |

|

||||

| →`default` | Require approval for edits | Daily use |

|

||||

|

||||

@@ -1,383 +0,0 @@

|

||||

# Language Server Protocol (LSP) Support

|

||||

|

||||

Qwen Code provides native Language Server Protocol (LSP) support, enabling advanced code intelligence features like go-to-definition, find references, diagnostics, and code actions. This integration allows the AI agent to understand your code more deeply and provide more accurate assistance.

|

||||

|

||||

## Overview

|

||||

|

||||

LSP support in Qwen Code works by connecting to language servers that understand your code. When you work with TypeScript, Python, Go, or other supported languages, Qwen Code can automatically start the appropriate language server and use it to:

|

||||

|

||||

- Navigate to symbol definitions

|

||||

- Find all references to a symbol

|

||||

- Get hover information (documentation, type info)

|

||||

- View diagnostic messages (errors, warnings)

|

||||

- Access code actions (quick fixes, refactorings)

|

||||

- Analyze call hierarchies

|

||||

|

||||

## Quick Start

|

||||

|

||||

LSP support is experimental and disabled by default; start Qwen Code with the `--experimental-lsp` flag to enable it. Once enabled, Qwen Code automatically detects and starts the appropriate language server for most common languages, provided it is installed on your system.

|

||||

|

||||

### Prerequisites

|

||||

|

||||

You need to have the language server for your programming language installed:

|

||||

|

||||

| Language | Language Server | Install Command |

|

||||

|----------|----------------|-----------------|

|

||||

| TypeScript/JavaScript | typescript-language-server | `npm install -g typescript-language-server typescript` |

|

||||

| Python | pylsp | `pip install python-lsp-server` |

|

||||

| Go | gopls | `go install golang.org/x/tools/gopls@latest` |

|

||||

| Rust | rust-analyzer | [Installation guide](https://rust-analyzer.github.io/manual.html#installation) |

|

||||

|

||||

## Configuration

|

||||

|

||||

### Settings

|

||||

|

||||

You can configure LSP behavior in your `settings.json`:

|

||||

|

||||

```json

|

||||

{

|

||||

"lsp": {

|

||||

"enabled": true,

|

||||

"autoDetect": true,

|

||||

"serverTimeout": 10000,

|

||||

"allowed": [],

|

||||

"excluded": []

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

| Setting | Type | Default | Description |

|

||||

|---------|------|---------|-------------|

|

||||

| `lsp.enabled` | boolean | `true` | Enable/disable LSP support |

|

||||

| `lsp.autoDetect` | boolean | `true` | Automatically detect and start language servers |

|

||||

| `lsp.serverTimeout` | number | `10000` | Server startup timeout in milliseconds |

|

||||

| `lsp.allowed` | string[] | `[]` | Allow only these servers (empty = allow all) |

|

||||

| `lsp.excluded` | string[] | `[]` | Exclude these servers from starting |

|

||||

|

||||

### Custom Language Servers

|

||||

|

||||

You can configure custom language servers using a `.lsp.json` file in your project root:

|

||||

|

||||

```json

|

||||

{

|

||||

"languageServers": {

|

||||

"my-custom-lsp": {

|

||||

"languages": ["mylang"],

|

||||

"command": "my-lsp-server",

|

||||

"args": ["--stdio"],

|

||||

"transport": "stdio",

|

||||

"initializationOptions": {},

|

||||

"settings": {}

|

||||

}

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

#### Configuration Options

|

||||

|

||||

| Option | Type | Required | Description |

|

||||

|--------|------|----------|-------------|

|

||||

| `languages` | string[] | Yes | Languages this server handles |

|

||||

| `command` | string | Yes* | Command to start the server |

|

||||

| `args` | string[] | No | Command line arguments |

|

||||

| `transport` | string | No | Transport type: `stdio` (default), `tcp`, or `socket` |

|

||||

| `env` | object | No | Environment variables |

|

||||

| `initializationOptions` | object | No | LSP initialization options |

|

||||

| `settings` | object | No | Server settings |

|

||||

| `workspaceFolder` | string | No | Override workspace folder |

|

||||

| `startupTimeout` | number | No | Startup timeout in ms |

|

||||

| `shutdownTimeout` | number | No | Shutdown timeout in ms |

|

||||

| `restartOnCrash` | boolean | No | Auto-restart on crash |

|

||||

| `maxRestarts` | number | No | Maximum restart attempts |

|

||||

| `trustRequired` | boolean | No | Require trusted workspace |

|

||||

|

||||

*Required for `stdio` transport
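
As a rough sketch of how the required fields in the table could be checked before a server is started, the snippet below validates a single `.lsp.json` entry. The field names follow the table; the `validateServerConfig` helper, its error messages, and the rule that `languages` must be non-empty are assumptions made for illustration, not documented behavior.

```typescript
// Transport values as listed in the options table.
type LspTransport = "stdio" | "tcp" | "socket";

// Subset of the documented options that matter for this check.
interface LanguageServerConfig {
  languages: string[];
  command?: string;
  args?: string[];
  transport?: LspTransport;
}

// Returns a list of problems; an empty list means the entry passes these checks.
function validateServerConfig(name: string, config: LanguageServerConfig): string[] {
  const problems: string[] = [];
  // `stdio` is the documented default transport.
  const transport: LspTransport = config.transport ?? "stdio";
  // Assumption: a required `languages` array should name at least one language.
  if (config.languages.length === 0) {
    problems.push(`${name}: "languages" must list at least one language`);
  }
  // Documented rule: `command` is required for the stdio transport.
  if (transport === "stdio" && !config.command) {
    problems.push(`${name}: "command" is required for stdio transport`);
  }
  return problems;
}
```

Under these assumptions, an entry that sets `"transport": "tcp"` without a `command` passes, while the same entry with the default `stdio` transport is reported as incomplete.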

|

||||

|

||||

#### TCP/Socket Transport

|

||||

|

||||

For servers that use TCP or Unix socket transport:

|

||||

|

||||

```json

|

||||

{

|

||||

"languageServers": {

|

||||

"remote-lsp": {

|

||||

"languages": ["custom"],

|

||||

"transport": "tcp",

|

||||

"socket": {

|

||||

"host": "127.0.0.1",

|

||||

"port": 9999

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

## Available LSP Operations

|

||||

|

||||

Qwen Code exposes LSP functionality through the unified `lsp` tool. Here are the available operations:

|

||||

|

||||

### Code Navigation

|

||||

|

||||

#### Go to Definition

|

||||

Find where a symbol is defined.

|

||||

|

||||

```

|

||||

Operation: goToDefinition

|

||||

Parameters:

|

||||

- filePath: Path to the file

|

||||

- line: Line number (1-based)

|

||||

- character: Column number (1-based)

|

||||

```
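The parameters above are 1-based, whereas the LSP specification defines positions as 0-based line and character offsets. A conversion along the following lines would therefore be needed somewhere between the tool and the language server. This is only a sketch: the type and function names are hypothetical, and where Qwen Code performs this conversion is not stated in this document.

```typescript
// 1-based position, as the `lsp` tool parameters describe it.
interface ToolPosition {
  line: number;
  character: number;
}

// 0-based position, as defined by the LSP specification.
interface LspPosition {
  line: number;
  character: number;
}

// Converts a 1-based tool position to a 0-based LSP position.
function toLspPosition(pos: ToolPosition): LspPosition {
  if (pos.line < 1 || pos.character < 1) {
    throw new RangeError("line and character are 1-based and must be >= 1");
  }
  return { line: pos.line - 1, character: pos.character - 1 };
}

// Converts a 0-based LSP position back to the 1-based form shown to users.
function fromLspPosition(pos: LspPosition): ToolPosition {
  return { line: pos.line + 1, character: pos.character + 1 };
}
```

The same conversion would apply to every operation in this section that takes `line` and `character` parameters.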

|

||||

|

||||

#### Find References

|

||||

Find all references to a symbol.

|

||||

|

||||

```

|

||||

Operation: findReferences

|

||||

Parameters:

|

||||

- filePath: Path to the file

|

||||

- line: Line number (1-based)

|

||||

- character: Column number (1-based)

|

||||

- includeDeclaration: Include the declaration itself (optional)

|

||||

```

|

||||

|

||||

#### Go to Implementation

|

||||

Find implementations of an interface or abstract method.

|

||||

|

||||

```

|

||||

Operation: goToImplementation

|

||||

Parameters:

|

||||

- filePath: Path to the file

|

||||

- line: Line number (1-based)

|

||||

- character: Column number (1-based)

|

||||

```

|

||||

|

||||

### Symbol Information

|

||||

|

||||

#### Hover

|

||||

Get documentation and type information for a symbol.

|

||||

|

||||

```

|

||||

Operation: hover

|

||||

Parameters:

|

||||

- filePath: Path to the file

|

||||

- line: Line number (1-based)

|

||||

- character: Column number (1-based)

|

||||

```

|

||||

|

||||

#### Document Symbols

|

||||

Get all symbols in a document.

|

||||

|

||||

```

|

||||

Operation: documentSymbol

|

||||

Parameters:

|

||||

- filePath: Path to the file

|

||||

```

|

||||

|

||||

#### Workspace Symbol Search

|

||||

Search for symbols across the workspace.

|

||||

|

||||

```

|

||||

Operation: workspaceSymbol

|

||||

Parameters:

|

||||

- query: Search query string

|

||||

- limit: Maximum results (optional)

|

||||

```

|

||||

|

||||

### Call Hierarchy

|

||||

|

||||

#### Prepare Call Hierarchy

|

||||

Get the call hierarchy item at a position.

|

||||

|

||||

```

|

||||

Operation: prepareCallHierarchy

|

||||

Parameters:

|

||||

- filePath: Path to the file

|

||||

- line: Line number (1-based)

|

||||

- character: Column number (1-based)

|

||||

```

|

||||

|

||||

#### Incoming Calls

|

||||

Find all functions that call the given function.

|

||||

|

||||

```

|

||||

Operation: incomingCalls

|

||||

Parameters:

|

||||

- callHierarchyItem: Item from prepareCallHierarchy

|

||||

```

|

||||

|

||||

#### Outgoing Calls

|

||||

Find all functions called by the given function.

|

||||

|

||||

```

|

||||

Operation: outgoingCalls

|

||||

Parameters:

|

||||

- callHierarchyItem: Item from prepareCallHierarchy

|

||||

```

|

||||

|

||||

### Diagnostics

|

||||

|

||||

#### File Diagnostics

|

||||

Get diagnostic messages (errors, warnings) for a file.

|

||||

|

||||

```

|

||||

Operation: diagnostics

|

||||

Parameters:

|

||||

- filePath: Path to the file

|

||||

```

|

||||

|

||||

#### Workspace Diagnostics

|

||||

Get all diagnostic messages across the workspace.

|

||||

|

||||

```

|

||||

Operation: workspaceDiagnostics

|

||||

Parameters:

|

||||

- limit: Maximum results (optional)

|

||||

```

|

||||

|

||||

### Code Actions

|

||||

|

||||

#### Get Code Actions

|

||||

Get available code actions (quick fixes, refactorings) at a location.

|

||||

|

||||

```

|

||||

Operation: codeActions

|

||||

Parameters:

|

||||

- filePath: Path to the file

|

||||

- line: Start line number (1-based)

|

||||

- character: Start column number (1-based)

|

||||

- endLine: End line number (optional, defaults to line)

|

||||

- endCharacter: End column (optional, defaults to character)

|

||||

- diagnostics: Diagnostics to get actions for (optional)

|

||||

- codeActionKinds: Filter by action kind (optional)

|

||||

```

|

||||

|

||||

Code action kinds:

|

||||

- `quickfix` - Quick fixes for errors/warnings

|

||||

- `refactor` - Refactoring operations

|

||||

- `refactor.extract` - Extract to function/variable

|

||||

- `refactor.inline` - Inline function/variable

|

||||

- `source` - Source code actions

|

||||

- `source.organizeImports` - Organize imports

|

||||

- `source.fixAll` - Fix all auto-fixable issues

|

||||

|

||||

## Security

|

||||

|

||||

LSP servers are only started in trusted workspaces by default. This is because language servers run with your user permissions and can execute code.

|

||||

|

||||

### Trust Controls

|

||||

|

||||

- **Trusted Workspace**: LSP servers start automatically

|

||||

- **Untrusted Workspace**: LSP servers won't start unless `trustRequired: false`

|

||||

|

||||

To mark a workspace as trusted, use the `/trust` command or configure trusted folders in settings.
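
The trust rule above reduces to a single boolean check. The sketch below is illustrative only: `mayStartServer` is a hypothetical name, and treating `trustRequired` as `true` when it is omitted is an assumption based on the statement that servers are only started in trusted workspaces by default.

```typescript
// Decides whether a server may start, given workspace trust and its `trustRequired` option.
// Assumption: `trustRequired` behaves as `true` when the option is not set.
function mayStartServer(workspaceTrusted: boolean, trustRequired: boolean = true): boolean {
  // A trusted workspace always qualifies; an untrusted one only if trust is not required.
  return workspaceTrusted || !trustRequired;
}
```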

|

||||

|

||||

### Server Allowlists

|

||||

|

||||

You can restrict which servers are allowed to run:

|

||||

|

||||

```json

|

||||

{

|

||||

"lsp": {

|

||||

"allowed": ["typescript-language-server", "gopls"],

|

||||

"excluded": ["untrusted-server"]

|

||||

}

|

||||

}

|

||||

```
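How the two lists combine can be sketched as below, assuming the precedence given in the settings reference: an empty `allowed` list permits every detected server, and a server named in both lists is excluded. The `LspFilter` type and `isServerPermitted` function are hypothetical names used only for this illustration.

```typescript
// Mirrors the `lsp.allowed` and `lsp.excluded` settings.
interface LspFilter {
  allowed: string[];
  excluded: string[];
}

// Returns true if the named server may run under the given filter.
function isServerPermitted(name: string, filter: LspFilter): boolean {
  // Exclusion wins, even if the server is also on the allowlist.
  if (filter.excluded.includes(name)) return false;
  // An empty allowlist means every detected server is allowed.
  return filter.allowed.length === 0 || filter.allowed.includes(name);
}
```

With the configuration shown above, `gopls` would be permitted, while `untrusted-server` and any server missing from `allowed` (for example `pylsp`) would not.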

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

### Server Not Starting

|

||||

|

||||

1. **Check if the server is installed**: Run the command manually to verify

|

||||

2. **Check the PATH**: Ensure the server binary is in your system PATH

|

||||

3. **Check workspace trust**: The workspace must be trusted for LSP

|

||||

4. **Check logs**: Look for error messages in the console output

|

||||

|

||||

### Slow Performance

|

||||

|

||||

1. **Large projects**: Consider excluding `node_modules` and other large directories

|

||||

2. **Server timeout**: Increase `lsp.serverTimeout` for slow servers

|

||||

3. **Multiple servers**: Exclude unused language servers

|

||||

|

||||

### No Results

|

||||

|

||||

1. **Server not ready**: The server may still be indexing

|

||||

2. **File not saved**: Save your file for the server to pick up changes

|

||||

3. **Wrong language**: Check if the correct server is running for your language

|

||||

|

||||

### Debugging

|

||||

|

||||

Enable debug logging to see LSP communication:

|

||||

|

||||

```bash

|

||||

DEBUG=lsp* qwen

|

||||

```

|

||||

|

||||

Or check the LSP debugging guide at `packages/cli/LSP_DEBUGGING_GUIDE.md`.

|

||||

|

||||

## Claude Code Compatibility

|

||||

|

||||

Qwen Code supports Claude Code-style `.lsp.json` configuration files. If you're migrating from Claude Code, your existing LSP configuration should work with minimal changes.

|

||||

|

||||

### Legacy Format

|

||||

|

||||

The legacy format (used by earlier versions) is still supported but deprecated:

|

||||

|

||||

```json

|

||||

{

|

||||

"typescript": {

|

||||

"command": "typescript-language-server",

|

||||

"args": ["--stdio"],

|

||||

"transport": "stdio"

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

We recommend migrating to the new `languageServers` format:

|

||||

|

||||

```json

|

||||

{

|

||||

"languageServers": {

|

||||

"typescript-language-server": {

|

||||

"languages": ["typescript", "javascript"],

|

||||

"command": "typescript-language-server",

|

||||

"args": ["--stdio"],

|

||||

"transport": "stdio"

|

||||

}

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

## Best Practices

|

||||

|

||||

1. **Install language servers globally**: This ensures they're available in all projects

|

||||

2. **Use project-specific settings**: Configure server options per project when needed

|

||||

3. **Keep servers updated**: Update your language servers regularly for best results

|

||||

4. **Trust wisely**: Only trust workspaces from trusted sources

|

||||

|

||||

## FAQ

|

||||

|

||||

### Q: How do I know which language servers are running?

|

||||

|

||||

Use the `/lsp status` command to see all configured and running language servers.

|

||||

|

||||

### Q: Can I use multiple language servers for the same file type?

|

||||

|

||||

Yes, but only one will be used for each operation. The first server that returns results wins.

|

||||

|

||||

### Q: Does LSP work in sandbox mode?

|

||||

|

||||

LSP servers run outside the sandbox to access your code. They're subject to workspace trust controls.

|

||||

|

||||

### Q: How do I disable LSP for a specific project?

|

||||

|

||||

Add to your project's `.qwen/settings.json`:

|

||||

|

||||

```json

|

||||

{

|

||||

"lsp": {

|

||||

"enabled": false

|

||||

}

|

||||

}

|

||||

```

|

||||

@@ -49,8 +49,6 @@ Cross-platform sandboxing with complete process isolation.

|

||||

|

||||

By default, Qwen Code uses a published sandbox image (configured in the CLI package) and will pull it as needed.

|

||||

|

||||

The container sandbox mounts your workspace and your `~/.qwen` directory into the container so auth and settings persist between runs.

|

||||

|

||||

**Best for**: Strong isolation on any OS, consistent tooling inside a known image.

|

||||

|

||||

### Choosing a method

|

||||

@@ -159,13 +157,22 @@ For a working allowlist-style proxy example, see: [Example Proxy Script](/develo

|

||||

|

||||

## Linux UID/GID handling

|

||||

|

||||

On Linux, Qwen Code defaults to enabling UID/GID mapping so the sandbox runs as your user (and reuses the mounted `~/.qwen`). Override with:

|

||||

The sandbox automatically handles user permissions on Linux. Override these permissions with:

|

||||

|

||||

```bash

|

||||

export SANDBOX_SET_UID_GID=true # Force host UID/GID

|

||||

export SANDBOX_SET_UID_GID=false # Disable UID/GID mapping

|

||||

```

|

||||

|

||||

## Customizing the sandbox environment (Docker/Podman)

|

||||

|

||||

If you need extra tools inside the container (e.g., `git`, `python`, `rg`), create a custom Dockerfile:

|

||||

|

||||

- Path: `.qwen/sandbox.Dockerfile`

|

||||

- Then run with: `BUILD_SANDBOX=1 qwen -s ...`

|

||||

|

||||

This builds a project-specific image based on the default sandbox image.

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

### Common issues

|

||||

|

||||

@@ -11,29 +11,12 @@ This guide shows you how to create, use, and manage Agent Skills in **Qwen Code*

|

||||

## Prerequisites

|

||||

|

||||

- Qwen Code (recent version)

|

||||

|

||||

## How to enable

|

||||

|

||||

### Via CLI flag

|

||||

- Run with the experimental flag enabled:

|

||||

|

||||

```bash

|

||||

qwen --experimental-skills

|

||||

```

|

||||

|

||||

### Via settings.json

|

||||

|

||||

Add to your `~/.qwen/settings.json` or project's `.qwen/settings.json`:

|

||||

|

||||

```json

|

||||

{

|

||||

"tools": {

|

||||

"experimental": {

|

||||

"skills": true

|

||||

}

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

- Basic familiarity with Qwen Code ([Quickstart](../quickstart.md))

|

||||

|

||||

## What are Agent Skills?

|

||||

@@ -44,14 +27,6 @@ Agent Skills package expertise into discoverable capabilities. Each Skill consis

|

||||

|

||||

Skills are **model-invoked** — the model autonomously decides when to use them based on your request and the Skill’s description. This is different from slash commands, which are **user-invoked** (you explicitly type `/command`).

|

||||

|

||||

If you want to invoke a Skill explicitly, use the `/skills` slash command:

|

||||

|

||||

```bash

|

||||

/skills <skill-name>

|

||||

```

|

||||

|

||||

The `/skills` command is only available when you run with `--experimental-skills`. Use autocomplete to browse available Skills and descriptions.

|

||||

|

||||

### Benefits

|

||||

|

||||

- Extend Qwen Code for your workflows

|

||||

|

||||

@@ -1,57 +0,0 @@

|

||||

# JetBrains IDEs

|

||||

|

||||

> JetBrains IDEs provide native support for AI coding assistants through the Agent Client Protocol (ACP). This integration allows you to use Qwen Code directly within your JetBrains IDE with real-time code suggestions.

|

||||

|

||||

### Features

|

||||

|

||||

- **Native agent experience**: Integrated AI assistant panel within your JetBrains IDE

|

||||

- **Agent Client Protocol**: Full support for ACP enabling advanced IDE interactions

|

||||

- **Symbol management**: #-mention files to add them to the conversation context

|

||||

- **Conversation history**: Access to past conversations within the IDE

|

||||

|

||||

### Requirements

|

||||

|

||||

- JetBrains IDE with ACP support (IntelliJ IDEA, WebStorm, PyCharm, etc.)

|

||||

- Qwen Code CLI installed

|

||||

|

||||

### Installation

|

||||

|

||||

1. Install Qwen Code CLI:

|

||||

|

||||

```bash

|

||||

npm install -g @qwen-code/qwen-code

|

||||

```

|

||||

|

||||

2. Open your JetBrains IDE and navigate to the AI Chat tool window.

|

||||

|

||||

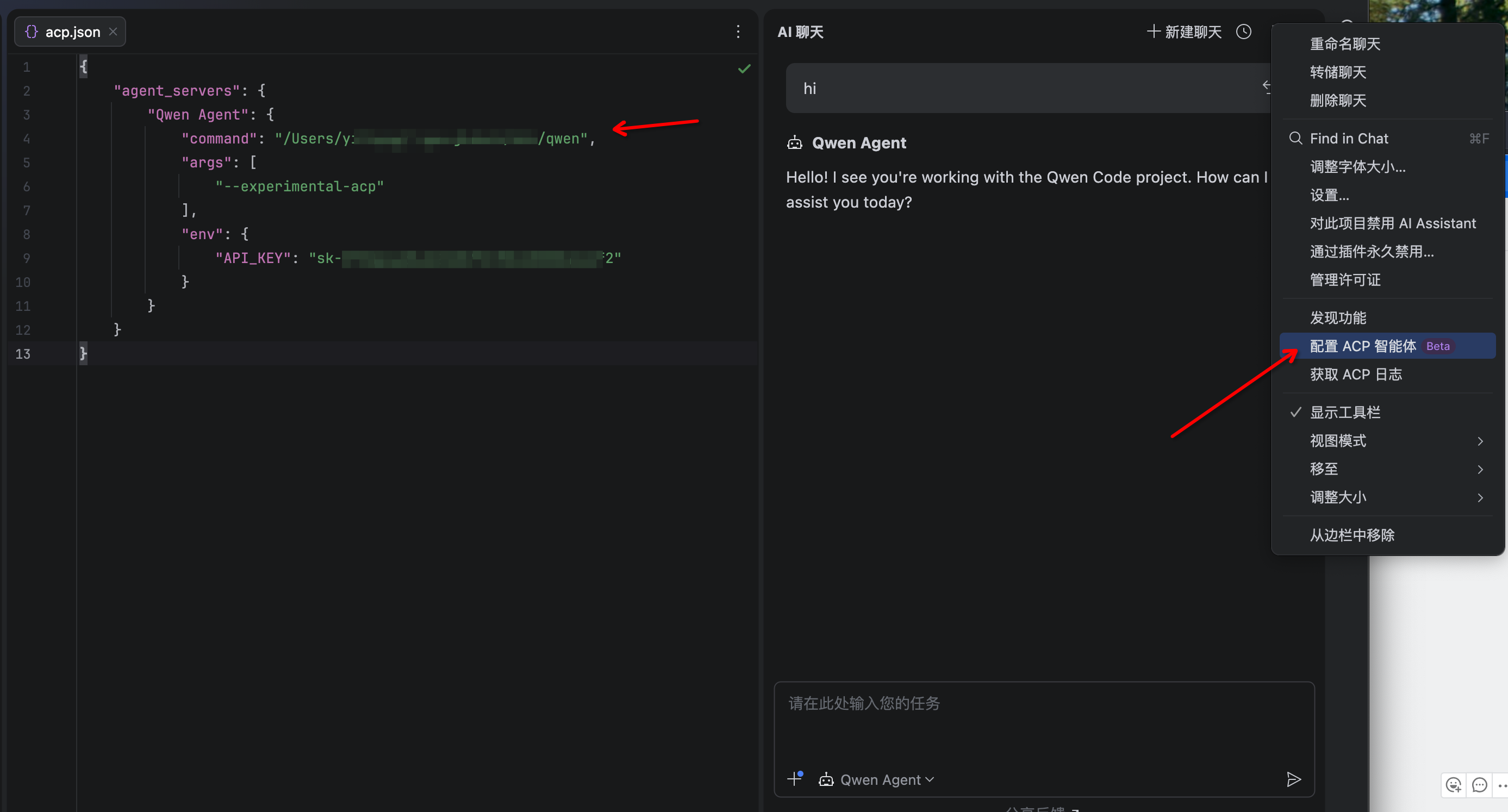

3. Click the 3-dot menu in the upper-right corner, select **Configure ACP Agent**, and configure Qwen Code with the following settings:

|

||||

|

||||

```json

|

||||

{

|

||||

"agent_servers": {

|

||||

"qwen": {

|

||||

"command": "/path/to/qwen",

|

||||

"args": ["--acp"],

|

||||

"env": {}

|

||||

}

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

4. The Qwen Code agent should now be available in the AI Assistant panel.

|

||||

|

||||

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

### Agent not appearing

|

||||

|

||||

- Run `qwen --version` in terminal to verify installation

|

||||

- Ensure your JetBrains IDE version supports ACP

|

||||

- Restart your JetBrains IDE

|

||||

|

||||

### Qwen Code not responding

|

||||

|

||||

- Check your internet connection

|

||||

- Verify CLI works by running `qwen` in terminal

|

||||

- [File an issue on GitHub](https://github.com/qwenlm/qwen-code/issues) if the problem persists

|

||||

@@ -18,17 +18,23 @@

|

||||

|

||||

### Requirements

|

||||

|

||||

- VS Code 1.85.0 or higher

|

||||

- VS Code 1.98.0 or higher

|

||||

|

||||

### Installation

|

||||

|

||||

Download and install the extension from the [Visual Studio Code Extension Marketplace](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion).

|

||||

1. Install Qwen Code CLI:

|

||||

|

||||

```bash

|

||||

npm install -g @qwen-code/qwen-code

|

||||

```

|

||||

|

||||

2. Download and install the extension from the [Visual Studio Code Extension Marketplace](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion).

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

### Extension not installing

|

||||

|

||||

- Ensure you have VS Code 1.85.0 or higher

|

||||

- Ensure you have VS Code 1.98.0 or higher

|

||||