mirror of

https://github.com/QwenLM/qwen-code.git

synced 2026-01-19 07:16:19 +00:00

Compare commits

3 Commits

feature/ad

...

feat/suppo

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

7475ffcbeb | ||

|

|

ccd51a6a00 | ||

|

|

a7e14255c3 |

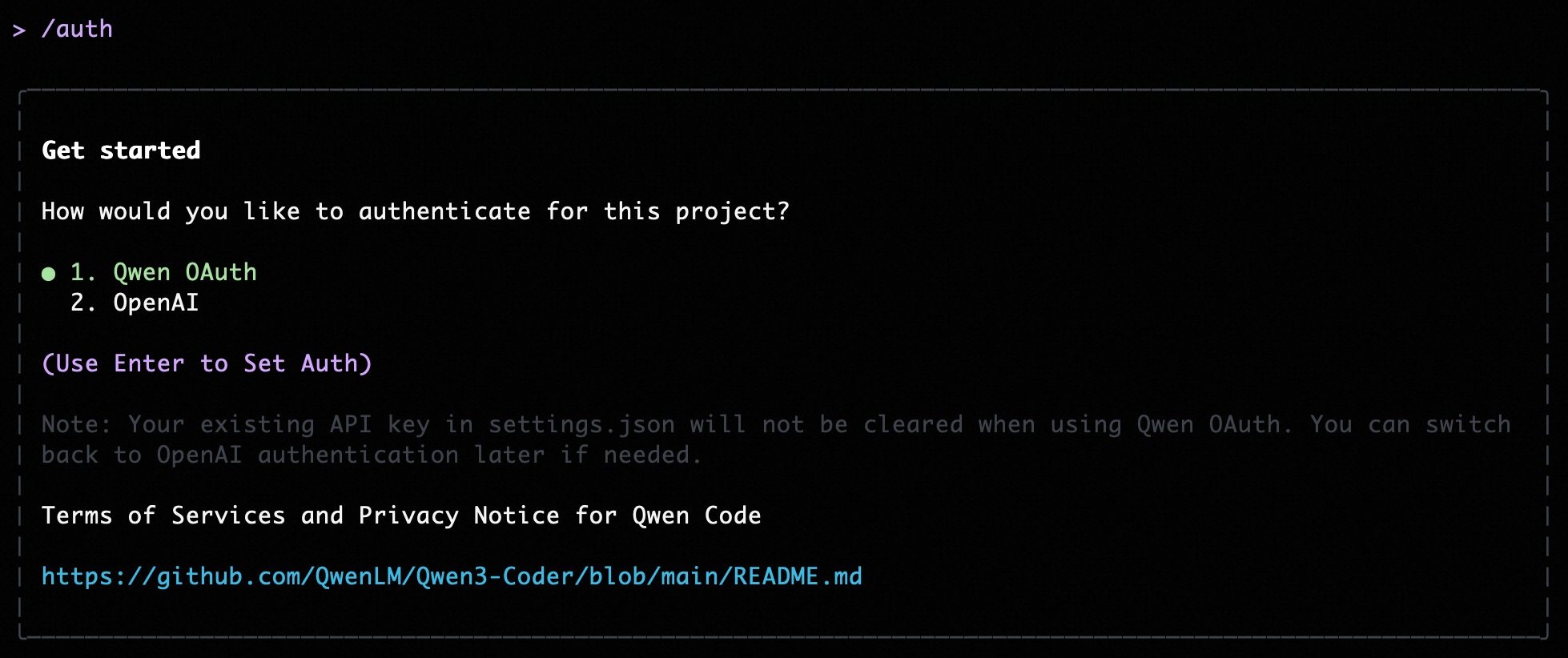

@@ -5,13 +5,11 @@ Qwen Code supports two authentication methods. Pick the one that matches how you
- **Qwen OAuth (recommended)**: sign in with your `qwen.ai` account in a browser.
- **OpenAI-compatible API**: use an API key (OpenAI or any OpenAI-compatible provider / endpoint).

## Option 1: Qwen OAuth (recommended & free) 👍

Use this if you want the simplest setup and you're using Qwen models.
Use this if you want the simplest setup and you’re using Qwen models.

- **How it works**: on first start, Qwen Code opens a browser login page. After you finish, credentials are cached locally so you usually won't need to log in again.
- **How it works**: on first start, Qwen Code opens a browser login page. After you finish, credentials are cached locally so you usually won’t need to log in again.
- **Requirements**: a `qwen.ai` account + internet access (at least for the first login).
- **Benefits**: no API key management, automatic credential refresh.
- **Cost & quota**: free, with a quota of **60 requests/minute** and **2,000 requests/day**.

@@ -26,54 +24,15 @@ qwen

Use this if you want to use OpenAI models or any provider that exposes an OpenAI-compatible API (e.g. OpenAI, Azure OpenAI, OpenRouter, ModelScope, Alibaba Cloud Bailian, or a self-hosted compatible endpoint).

### Recommended: Coding Plan (subscription-based) 🚀
### Quick start (interactive, recommended for local use)

Use this if you want predictable costs with higher usage quotas for the qwen3-coder-plus model.
When you choose the OpenAI-compatible option in the CLI, it will prompt you for:

> [!IMPORTANT]
>
> Coding Plan is only available to users in mainland China (Beijing region).
- **API key**
- **Base URL** (default: `https://api.openai.com/v1`)
- **Model** (default: `gpt-4o`)

- **How it works**: subscribe to the Coding Plan for a fixed monthly fee, then configure Qwen Code to use the dedicated endpoint and your subscription API key.
- **Requirements**: an active Coding Plan subscription from [Alibaba Cloud Bailian](https://bailian.console.aliyun.com/cn-beijing/?tab=globalset#/efm/coding_plan).
- **Benefits**: higher usage quotas, predictable monthly costs, and access to the latest qwen3-coder-plus model.
- **Cost & quota**: varies by plan (see table below).

#### Coding Plan Pricing & Quotas

| Feature             | Lite Basic Plan       | Pro Advanced Plan     |
| :------------------ | :-------------------- | :-------------------- |
| **Price**           | ¥40/month             | ¥200/month            |
| **5-Hour Limit**    | Up to 1,200 requests  | Up to 6,000 requests  |
| **Weekly Limit**    | Up to 9,000 requests  | Up to 45,000 requests |
| **Monthly Limit**   | Up to 18,000 requests | Up to 90,000 requests |
| **Supported Model** | qwen3-coder-plus      | qwen3-coder-plus      |

#### Quick Setup for Coding Plan

When you select the OpenAI-compatible option in the CLI, enter these values:

- **API key**: `sk-sp-xxxxx`
- **Base URL**: `https://coding.dashscope.aliyuncs.com/v1`
- **Model**: `qwen3-coder-plus`

> **Note**: Coding Plan API keys have the format `sk-sp-xxxxx`, which is different from standard Alibaba Cloud API keys.

#### Configure via Environment Variables

Set these environment variables to use Coding Plan:

```bash
export OPENAI_API_KEY="your-coding-plan-api-key" # Format: sk-sp-xxxxx
export OPENAI_BASE_URL="https://coding.dashscope.aliyuncs.com/v1"
export OPENAI_MODEL="qwen3-coder-plus"
```

For more details about Coding Plan, including subscription options and troubleshooting, see the [full Coding Plan documentation](https://bailian.console.aliyun.com/cn-beijing/?tab=doc#/doc/?type=model&url=3005961).
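As a reference point, the three variables describe a standard OpenAI-style call: `POST {OPENAI_BASE_URL}/chat/completions` with the key sent as a bearer token. The sketch below is hypothetical. The `buildChatRequest` helper is not part of Qwen Code, and it only assembles the request so it can be inspected. It assumes the Coding Plan endpoint accepts the usual `model`/`messages` body, which is worth confirming in the Bailian documentation.

```typescript
// Hypothetical helper, not part of Qwen Code: assembles (but does not send)
// an OpenAI-compatible chat-completions request from environment variables.
interface ChatRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

function buildChatRequest(
  env: Record<string, string | undefined>,
  prompt: string,
): ChatRequest {
  const apiKey = env.OPENAI_API_KEY;
  if (!apiKey) throw new Error('OPENAI_API_KEY is not set');
  // Fall back to the interactive prompt's defaults; strip trailing slashes.
  const baseUrl = (env.OPENAI_BASE_URL ?? 'https://api.openai.com/v1').replace(/\/+$/, '');
  const model = env.OPENAI_MODEL ?? 'gpt-4o';
  return {
    url: `${baseUrl}/chat/completions`,
    init: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({ model, messages: [{ role: 'user', content: prompt }] }),
    },
  };
}

// Example with the Coding Plan values (placeholder key).
const req = buildChatRequest(
  {
    OPENAI_API_KEY: 'sk-sp-xxxxx',
    OPENAI_BASE_URL: 'https://coding.dashscope.aliyuncs.com/v1',
    OPENAI_MODEL: 'qwen3-coder-plus',
  },
  'Hello',
);
console.log(req.url); // https://coding.dashscope.aliyuncs.com/v1/chat/completions
```

In a Node script, `buildChatRequest(process.env, '...')` would read the exported variables. Passing `req.url` and `req.init` to `fetch` would then send the request.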

### Other OpenAI-compatible Providers

If you are using other providers (OpenAI, Azure, local LLMs, etc.), use the following configuration methods.
> **Note:** the CLI may display the key in plain text for verification. Make sure your terminal is not being recorded or shared.

### Configure via command-line arguments

@@ -241,6 +241,7 @@ Per-field precedence for `generationConfig`:
| --- | --- | --- | --- |
| `context.fileName` | string or array of strings | The name of the context file(s). | `undefined` |
| `context.importFormat` | string | The format to use when importing memory. | `undefined` |
| `context.discoveryMaxDirs` | number | Maximum number of directories to search for memory. | `200` |
| `context.includeDirectories` | array | Additional absolute or relative paths to include in the workspace context. Missing directories are skipped with a warning by default. Paths can use `~` to refer to the user's home directory. This setting can be combined with the `--include-directories` command-line flag. | `[]` |
| `context.loadFromIncludeDirectories` | boolean | Controls the behavior of the `/memory refresh` command. If `true`, `QWEN.md` files are loaded from all added directories; if `false`, `QWEN.md` is loaded only from the current directory. | `false` |
| `context.fileFiltering.respectGitIgnore` | boolean | Respect `.gitignore` files when searching. | `true` |
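The `context.includeDirectories` row combines three rules: relative paths, `~` expansion, and merging with `--include-directories`. A minimal sketch of how those rules could fit together follows. `resolvePath` and `resolveIncludeDirectories` here are illustrative stand-ins. The CLI has its own `resolvePath`, whose exact behavior is not shown. Settings entries come before flag entries, matching the order used in `loadCliConfig` elsewhere in this compare.

```typescript
import * as fs from 'node:fs';
import * as os from 'node:os';
import * as path from 'node:path';

// Illustrative stand-in: expands a leading "~" and resolves relative paths
// against the working directory.
function resolvePath(p: string, cwd: string = process.cwd()): string {
  if (p === '~' || p.startsWith('~/')) {
    return path.join(os.homedir(), p.slice(1));
  }
  return path.resolve(cwd, p);
}

// Settings entries first, then CLI-flag entries. Missing directories are
// skipped with a warning rather than treated as errors.
function resolveIncludeDirectories(
  fromSettings: string[] = [],
  fromFlag: string[] = [],
  cwd: string = process.cwd(),
): string[] {
  return [...fromSettings, ...fromFlag]
    .map((p) => resolvePath(p, cwd))
    .filter((dir) => {
      const exists = fs.existsSync(dir) && fs.statSync(dir).isDirectory();
      if (!exists) console.warn(`Skipping missing include directory: ${dir}`);
      return exists;
    });
}

console.log(resolveIncludeDirectories(['~'], ['./does-not-exist']));
```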

@@ -274,7 +275,6 @@ If you are experiencing performance issues with file searching (e.g., with `@` c
| `tools.truncateToolOutputThreshold` | number | Truncate tool output if it is larger than this many characters. Applies to Shell, Grep, Glob, ReadFile and ReadManyFiles tools. | `25000` | Requires restart: Yes |
| `tools.truncateToolOutputLines` | number | Maximum lines or entries kept when truncating tool output. Applies to Shell, Grep, Glob, ReadFile and ReadManyFiles tools. | `1000` | Requires restart: Yes |
| `tools.autoAccept` | boolean | Controls whether the CLI automatically accepts and executes tool calls that are considered safe (e.g., read-only operations) without explicit user confirmation. If set to `true`, the CLI will bypass the confirmation prompt for tools deemed safe. | `false` | |
| `tools.experimental.skills` | boolean | Enable experimental Agent Skills feature | `false` | |
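The two truncation settings act on the same output, one counted in characters and one in lines. The sketch below shows one plausible way to combine them. It is not Qwen Code's implementation: the real tools might keep the tail of the output or store the full text elsewhere. The truncation marker text is made up.

```typescript
// Hypothetical sketch: apply a character threshold (like
// tools.truncateToolOutputThreshold) and a line cap (like
// tools.truncateToolOutputLines) to a tool's output.
function truncateToolOutput(
  output: string,
  threshold = 25000,
  maxLines = 1000,
): string {
  // Output under the threshold is passed through unchanged.
  if (output.length <= threshold) return output;

  // Keep at most maxLines lines, then enforce the character limit as well.
  let kept = output.split('\n').slice(0, maxLines).join('\n');
  if (kept.length > threshold) kept = kept.slice(0, threshold);

  const omitted = output.length - kept.length;
  return `${kept}\n... [truncated ${omitted} characters]`;
}

console.log(truncateToolOutput('short output')); // short output
console.log(truncateToolOutput('a\n'.repeat(10), 5, 2));
```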

#### mcp

@@ -529,13 +529,16 @@ Here's a conceptual example of what a context file at the root of a TypeScript p

This example demonstrates how you can provide general project context, specific coding conventions, and even notes about particular files or components. The more relevant and precise your context files are, the better the AI can assist you. Project-specific context files are strongly encouraged for establishing conventions and context.

- **Hierarchical Loading and Precedence:** The CLI implements a hierarchical memory system by loading context files (e.g., `QWEN.md`) from several locations. Content from files lower in this list (more specific) typically overrides or supplements content from files higher up (more general). The exact concatenation order and final context can be inspected using the `/memory show` command. The typical loading order is:
- **Hierarchical Loading and Precedence:** The CLI implements a sophisticated hierarchical memory system by loading context files (e.g., `QWEN.md`) from several locations. Content from files lower in this list (more specific) typically overrides or supplements content from files higher up (more general). The exact concatenation order and final context can be inspected using the `/memory show` command. The typical loading order is:
  1. **Global Context File:**
     - Location: `~/.qwen/<configured-context-filename>` (e.g., `~/.qwen/QWEN.md` in your user home directory).
     - Scope: Provides default instructions for all your projects.
  2. **Project Root & Ancestors Context Files:**
     - Location: The CLI searches for the configured context file in the current working directory and then in each parent directory up to either the project root (identified by a `.git` folder) or your home directory.
     - Scope: Provides context relevant to the entire project or a significant portion of it.
  3. **Sub-directory Context Files (Contextual/Local):**
     - Location: The CLI also scans for the configured context file in subdirectories _below_ the current working directory (respecting common ignore patterns like `node_modules`, `.git`, etc.). The breadth of this search is limited to 200 directories by default, but can be configured with the `context.discoveryMaxDirs` setting in your `settings.json` file.
     - Scope: Allows for highly specific instructions relevant to a particular component, module, or subsection of your project.
- **Concatenation & UI Indication:** The contents of all found context files are concatenated (with separators indicating their origin and path) and provided as part of the system prompt. The CLI footer displays the count of loaded context files, giving you a quick visual cue about the active instructional context.
- **Importing Content:** You can modularize your context files by importing other Markdown files using the `@path/to/file.md` syntax. For more details, see the [Memory Import Processor documentation](../configuration/memory).
- **Commands for Memory Management:**
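Step 2 of the loading order above walks upward from the working directory until it reaches a `.git` folder or the home directory. It can be sketched as follows. This illustrates the described rule and is not the CLI's code. The function name is hypothetical, the file name defaults to `QWEN.md`, and results are returned most-general-first so that more specific files come later, matching the precedence described above. The demo builds a throwaway directory tree in the OS temp folder.

```typescript
import * as fs from 'node:fs';
import * as os from 'node:os';
import * as path from 'node:path';

// Hypothetical sketch of the "Project Root & Ancestors" step.
function findAncestorContextFiles(
  cwd: string,
  fileName = 'QWEN.md',
  home = os.homedir(),
): string[] {
  const found: string[] = [];
  let dir = path.resolve(cwd);
  while (true) {
    const candidate = path.join(dir, fileName);
    if (fs.existsSync(candidate)) found.push(candidate);
    // Stop at the project root (.git), the home directory, or the filesystem root.
    const atProjectRoot = fs.existsSync(path.join(dir, '.git'));
    const parent = path.dirname(dir);
    if (atProjectRoot || dir === home || parent === dir) break;
    dir = parent;
  }
  // Most general (closest to the project root) first.
  return found.reverse();
}

// Demo: <tmp>/project/.git, <tmp>/project/QWEN.md, <tmp>/project/src/app/QWEN.md
const project = fs.mkdtempSync(path.join(os.tmpdir(), 'qwen-ctx-'));
fs.mkdirSync(path.join(project, '.git'));
fs.mkdirSync(path.join(project, 'src', 'app'), { recursive: true });
fs.writeFileSync(path.join(project, 'QWEN.md'), '# project rules\n');
fs.writeFileSync(path.join(project, 'src', 'app', 'QWEN.md'), '# app rules\n');

const ancestors = findAncestorContextFiles(path.join(project, 'src', 'app'));
console.log(ancestors);
```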

@@ -11,29 +11,12 @@ This guide shows you how to create, use, and manage Agent Skills in **Qwen Code*
## Prerequisites

- Qwen Code (recent version)

## How to enable

### Via CLI flag
- Run with the experimental flag enabled:

```bash
qwen --experimental-skills
```

### Via settings.json

Add to your `~/.qwen/settings.json` or project's `.qwen/settings.json`:

```json
{
  "tools": {
    "experimental": {
      "skills": true
    }
  }
}
```
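The flag and the setting switch on the same feature. One of the `parseArguments` hunks in this compare sets the flag's default to `settings.tools?.experimental?.skills ?? false`. That gives this precedence: an explicit flag wins, then `settings.json`, then `false`. The function below restates that rule as plain TypeScript. The names are illustrative. Treating an omitted flag as `undefined` is the sketch's simplification of how the yargs default behaves.

```typescript
// Illustrative shape of the relevant part of settings.json.
interface Settings {
  tools?: { experimental?: { skills?: boolean } };
}

// Explicit CLI flag > settings.json > false.
function isSkillsEnabled(cliFlag: boolean | undefined, settings: Settings): boolean {
  return cliFlag ?? settings.tools?.experimental?.skills ?? false;
}

const fromFile: Settings = { tools: { experimental: { skills: true } } };
console.log(isSkillsEnabled(undefined, {})); // false
console.log(isSkillsEnabled(undefined, fromFile)); // true
console.log(isSkillsEnabled(false, fromFile)); // false
```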

- Basic familiarity with Qwen Code ([Quickstart](../quickstart.md))

## What are Agent Skills?

@@ -26,6 +26,7 @@ export default tseslint.config(
      'dist/**',
      'docs-site/.next/**',
      'docs-site/out/**',
      'packages/cli/src/services/insight-page/**',
    ],
  },
  eslint.configs.recommended,

@@ -125,7 +125,7 @@
  "lint-staged": {
    "*.{js,jsx,ts,tsx}": [
      "prettier --write",
      "eslint --fix --max-warnings 0"
      "eslint --fix --max-warnings 0 --no-warn-ignored"
    ],
    "*.{json,md}": [
      "prettier --write"

@@ -1196,6 +1196,11 @@ describe('Hierarchical Memory Loading (config.ts) - Placeholder Suite', () => {
        ],
        true,
        'tree',
        {
          respectGitIgnore: false,
          respectQwenIgnore: true,
        },
        undefined, // maxDirs
      );
    });

@@ -9,6 +9,7 @@ import {
  AuthType,
  Config,
  DEFAULT_QWEN_EMBEDDING_MODEL,
  DEFAULT_MEMORY_FILE_FILTERING_OPTIONS,
  FileDiscoveryService,
  getCurrentGeminiMdFilename,
  loadServerHierarchicalMemory,
@@ -21,6 +22,7 @@ import {
  isToolEnabled,
  SessionService,
  type ResumedSessionData,
  type FileFilteringOptions,
  type MCPServerConfig,
  type ToolName,
  EditTool,

@@ -332,7 +334,7 @@ export async function parseArguments(settings: Settings): Promise<CliArgs> {
    .option('experimental-skills', {
      type: 'boolean',
      description: 'Enable experimental Skills feature',
      default: settings.tools?.experimental?.skills ?? false,
      default: false,
    })
    .option('channel', {
      type: 'string',

@@ -641,6 +643,7 @@ export async function loadHierarchicalGeminiMemory(
  extensionContextFilePaths: string[] = [],
  folderTrust: boolean,
  memoryImportFormat: 'flat' | 'tree' = 'tree',
  fileFilteringOptions?: FileFilteringOptions,
): Promise<{ memoryContent: string; fileCount: number }> {
  // FIX: Use real, canonical paths for a reliable comparison to handle symlinks.
  const realCwd = fs.realpathSync(path.resolve(currentWorkingDirectory));
@@ -666,6 +669,8 @@ export async function loadHierarchicalGeminiMemory(
    extensionContextFilePaths,
    folderTrust,
    memoryImportFormat,
    fileFilteringOptions,
    settings.context?.discoveryMaxDirs,
  );
}
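The new `settings.context?.discoveryMaxDirs` argument caps the downward scan described in the memory docs (200 directories by default). How `loadServerHierarchicalMemory` applies the cap is not shown in this compare. The following is a minimal breadth-first sketch under that assumption. The function name is hypothetical, a hard-coded ignore set stands in for the real ignore handling, and "directories visited" is this sketch's way of counting, which may differ from the real code.

```typescript
import * as fs from 'node:fs';
import * as os from 'node:os';
import * as path from 'node:path';

// Hypothetical sketch: breadth-first scan below `root` for context files,
// visiting at most `maxDirs` directories (the role of context.discoveryMaxDirs).
function findSubdirContextFiles(
  root: string,
  fileName = 'QWEN.md',
  maxDirs = 200,
  ignored: ReadonlySet<string> = new Set(['node_modules', '.git']),
): string[] {
  const found: string[] = [];
  const queue: string[] = [root];
  let visited = 0;
  while (queue.length > 0 && visited < maxDirs) {
    const dir = queue.shift()!;
    visited++;
    let entries: fs.Dirent[] = [];
    try {
      entries = fs.readdirSync(dir, { withFileTypes: true });
    } catch {
      continue; // unreadable directory: skip it
    }
    for (const entry of entries) {
      const full = path.join(dir, entry.name);
      if (entry.isDirectory() && !ignored.has(entry.name)) {
        queue.push(full);
      } else if (entry.isFile() && entry.name === fileName && dir !== root) {
        // The root's own file is covered by the ancestor step, so skip it here.
        found.push(full);
      }
    }
  }
  return found;
}

// Demo tree: <tmp>/pkg/QWEN.md is found; node_modules is never entered.
const root = fs.mkdtempSync(path.join(os.tmpdir(), 'qwen-bfs-'));
fs.mkdirSync(path.join(root, 'pkg'));
fs.mkdirSync(path.join(root, 'node_modules', 'dep'), { recursive: true });
fs.writeFileSync(path.join(root, 'pkg', 'QWEN.md'), '# pkg rules\n');
fs.writeFileSync(path.join(root, 'node_modules', 'dep', 'QWEN.md'), '# ignored\n');

const withDefaultCap = findSubdirContextFiles(root);
const withTinyCap = findSubdirContextFiles(root, 'QWEN.md', 1); // only root is visited
console.log(withDefaultCap, withTinyCap);
```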

@@ -735,6 +740,11 @@ export async function loadCliConfig(

  const fileService = new FileDiscoveryService(cwd);

  const fileFiltering = {
    ...DEFAULT_MEMORY_FILE_FILTERING_OPTIONS,
    ...settings.context?.fileFiltering,
  };

  const includeDirectories = (settings.context?.includeDirectories || [])
    .map(resolvePath)
    .concat((argv.includeDirectories || []).map(resolvePath));
@@ -751,6 +761,7 @@ export async function loadCliConfig(
    extensionContextFilePaths,
    trustedFolder,
    memoryImportFormat,
    fileFiltering,
  );

  let mcpServers = mergeMcpServers(settings, activeExtensions);
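The `fileFiltering` object in `loadCliConfig` relies on object-spread order. Keys from `settings.context?.fileFiltering` override the defaults, and keys the user omits keep their default values. The standalone sketch below shows that behavior. The option type is reduced to two fields. The default values are placeholders that mirror the object the updated test in this compare passes, because the real `DEFAULT_MEMORY_FILE_FILTERING_OPTIONS` is not shown.

```typescript
// Reduced, illustrative option type; the real FileFilteringOptions may have more fields.
interface FileFilteringOptions {
  respectGitIgnore: boolean;
  respectQwenIgnore: boolean;
}

// Placeholder defaults for the sketch.
const DEFAULTS: FileFilteringOptions = {
  respectGitIgnore: false,
  respectQwenIgnore: true,
};

function mergeFileFiltering(
  fromSettings?: Partial<FileFilteringOptions>,
): FileFilteringOptions {
  // Later spreads win; spreading undefined is a no-op.
  return { ...DEFAULTS, ...fromSettings };
}

console.log(mergeFileFiltering());
console.log(mergeFileFiltering({ respectGitIgnore: true }));
```

One caveat of this pattern is that spread copies keys whose value is explicitly `undefined`. As a result, a settings object like `{ respectGitIgnore: undefined }` would replace the default with `undefined`.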

@@ -106,6 +106,7 @@ const MIGRATION_MAP: Record<string, string> = {
  mcpServers: 'mcpServers',
  mcpServerCommand: 'mcp.serverCommand',
  memoryImportFormat: 'context.importFormat',
  memoryDiscoveryMaxDirs: 'context.discoveryMaxDirs',
  model: 'model.name',
  preferredEditor: 'general.preferredEditor',
  sandbox: 'tools.sandbox',
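`MIGRATION_MAP` pairs a legacy flat settings key with the dotted path it moves to in the nested layout. This compare adds `memoryDiscoveryMaxDirs` → `context.discoveryMaxDirs`. The code that consumes the map is not part of this diff. The function below is only a sketch of how such a map could be applied, using a subset of the entries shown above. Carrying unknown keys over unchanged is an assumption.

```typescript
// A subset of the MIGRATION_MAP entries shown in the hunk.
const MIGRATION_MAP: Record<string, string> = {
  memoryImportFormat: 'context.importFormat',
  memoryDiscoveryMaxDirs: 'context.discoveryMaxDirs',
  model: 'model.name',
  preferredEditor: 'general.preferredEditor',
};

type Json = Record<string, unknown>;

// Hypothetical: move each mapped flat key to its nested dotted path.
function migrateSettings(flat: Json): Json {
  const result: Json = {};
  for (const [key, value] of Object.entries(flat)) {
    // hasOwnProperty avoids picking up inherited names such as "toString".
    const target = Object.prototype.hasOwnProperty.call(MIGRATION_MAP, key)
      ? MIGRATION_MAP[key]
      : key;
    const parts = target.split('.');
    let node = result;
    for (const part of parts.slice(0, -1)) {
      if (typeof node[part] !== 'object' || node[part] === null) node[part] = {};
      node = node[part] as Json;
    }
    node[parts[parts.length - 1]] = value;
  }
  return result;
}

const migrated = migrateSettings({
  memoryDiscoveryMaxDirs: 50,
  model: 'qwen3-coder-plus',
  theme: 'dark',
});
console.log(JSON.stringify(migrated));
// {"context":{"discoveryMaxDirs":50},"model":{"name":"qwen3-coder-plus"},"theme":"dark"}
```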

@@ -690,18 +690,6 @@ const SETTINGS_SCHEMA = {
            { value: 'openapi_30', label: 'OpenAPI 3.0 Strict' },
          ],
        },
        contextWindowSize: {
          type: 'number',
          label: 'Context Window Size',
          category: 'Generation Configuration',
          requiresRestart: false,
          default: -1,
          description:
            'Override the automatic context window size detection. Set to -1 to use automatic detection based on the model. Set to a positive number to use a custom context window size.',
          parentKey: 'generationConfig',
          childKey: 'contextWindowSize',
          showInDialog: true,
        },
      },
    },
  },
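The `contextWindowSize` description defines a small rule: `-1` means "detect from the model", and a positive number is used as-is. A sketch of that rule follows. The detection function is injected because per-model limits are not part of this compare. Treating `0` or other negative values like `-1` is an assumption, since the description does not cover them.

```typescript
// Hypothetical resolver for a contextWindowSize-style override.
function effectiveContextWindow(
  model: string,
  override: number,
  detect: (model: string) => number,
): number {
  // Positive values are an explicit override; -1 (and, as an assumption,
  // any other non-positive value) falls back to per-model detection.
  return override > 0 ? override : detect(model);
}

// Placeholder detector for the demo; real limits depend on the model.
const detect = (_model: string): number => 131072;
console.log(effectiveContextWindow('some-model', -1, detect)); // 131072
console.log(effectiveContextWindow('some-model', 32000, detect)); // 32000
```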

@@ -734,6 +722,15 @@ const SETTINGS_SCHEMA = {
        description: 'The format to use when importing memory.',
        showInDialog: false,
      },
      discoveryMaxDirs: {
        type: 'number',
        label: 'Memory Discovery Max Dirs',
        category: 'Context',
        requiresRestart: false,
        default: 200,
        description: 'Maximum number of directories to search for memory.',
        showInDialog: true,
      },
      includeDirectories: {
        type: 'array',
        label: 'Include Directories',
@@ -984,27 +981,6 @@ const SETTINGS_SCHEMA = {
        description: 'The number of lines to keep when truncating tool output.',
        showInDialog: true,
      },
      experimental: {
        type: 'object',
        label: 'Experimental',
        category: 'Tools',
        requiresRestart: true,
        default: {},
        description: 'Experimental tool features.',
        showInDialog: false,
        properties: {
          skills: {
            type: 'boolean',
            label: 'Skills',
            category: 'Tools',
            requiresRestart: true,
            default: false,
            description:
              'Enable experimental Agent Skills feature. When enabled, Qwen Code can use Skills from .qwen/skills/ and ~/.qwen/skills/.',
            showInDialog: true,
          },
        },
      },
    },
  },

@@ -873,11 +873,11 @@ export default {
  'Session Stats': '会话统计',
  'Model Usage': '模型使用情况',
  Reqs: '请求数',
  'Input Tokens': '输入 token 数',
  'Output Tokens': '输出 token 数',
  'Input Tokens': '输入令牌',
  'Output Tokens': '输出令牌',
  'Savings Highlight:': '节省亮点:',
  'of input tokens were served from the cache, reducing costs.':
    '从缓存载入 token ,降低了成本',
    '的输入令牌来自缓存,降低了成本',
  'Tip: For a full token breakdown, run `/stats model`.':
    '提示:要查看完整的令牌明细,请运行 `/stats model`',
  'Model Stats For Nerds': '模型统计(技术细节)',

@@ -39,6 +39,7 @@ import { themeCommand } from '../ui/commands/themeCommand.js';
import { toolsCommand } from '../ui/commands/toolsCommand.js';
import { vimCommand } from '../ui/commands/vimCommand.js';
import { setupGithubCommand } from '../ui/commands/setupGithubCommand.js';
import { insightCommand } from '../ui/commands/insightCommand.js';

/**
 * Loads the core, hard-coded slash commands that are an integral part
@@ -88,6 +89,7 @@ export class BuiltinCommandLoader implements ICommandLoader {
      vimCommand,
      setupGithubCommand,
      terminalSetupCommand,
      insightCommand,
    ];

    return allDefinitions.filter((cmd): cmd is SlashCommand => cmd !== null);
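The `(cmd): cmd is SlashCommand => cmd !== null` callback is a TypeScript type predicate. Besides removing `null` entries, it tells the compiler that the filtered array is `SlashCommand[]`. A self-contained illustration follows. The `SlashCommand` shape and the command names are placeholders, not the CLI's real definitions, and why a given entry might be `null` is an assumption of the example.

```typescript
// Placeholder shape; the CLI's SlashCommand type has more fields.
interface SlashCommand {
  name: string;
  description: string;
}

// Some entries may be null, e.g. a command that is unavailable in the
// current environment (hypothetical data).
const allDefinitions: Array<SlashCommand | null> = [
  { name: 'example-a', description: 'placeholder' },
  null,
  { name: 'example-b', description: 'placeholder' },
];

// Before TypeScript 5.5, a plain `cmd => cmd !== null` callback left the element
// type as SlashCommand | null; the explicit predicate narrows it to SlashCommand.
const commands: SlashCommand[] = allDefinitions.filter(
  (cmd): cmd is SlashCommand => cmd !== null,
);

console.log(commands.map((c) => c.name)); // [ 'example-a', 'example-b' ]
```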

120 packages/cli/src/services/insight-page/README.md Normal file

@@ -0,0 +1,120 @@
# Qwen Code Insights Page

A React-based visualization dashboard for displaying coding activity insights and statistics.

## Development

This application consists of two parts:

1. **Backend (Express Server)**: Serves API endpoints and processes chat history data
2. **Frontend (Vite + React)**: Development server with HMR

### Running in Development Mode

You need to run both the backend and frontend servers:

**Terminal 1 - Backend Server (Port 3001):**

```bash
pnpm dev:server
```

**Terminal 2 - Frontend Dev Server (Port 3000):**

```bash
pnpm dev
```

Then open <http://localhost:3000> in your browser.

The Vite dev server will proxy `/api` requests to the backend server at port 3001.

### Building for Production

```bash
pnpm build
```

This compiles TypeScript and builds the React application. The output will be in the `dist/` directory.

In production, the Express server serves both the static files and API endpoints from a single port.

## Architecture

- **Frontend**: React + TypeScript + Vite + Chart.js
- **Backend**: Express + Node.js
- **Data Source**: JSONL chat history files from `~/.qwen/projects/*/chats/`
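The data flow above (JSONL chat files in, per-day activity out) can be sketched as two pure functions. The first buckets JSONL records into the `{ [date]: count }` shape used by `InsightData.heatmap` in `App.tsx`. The second derives the longest streak of consecutive active days. Both are illustrative assumptions. The real chat record schema is not shown in this compare, so the sketch expects an ISO `timestamp` field on each line and buckets by UTC day. The server's actual streak rules may also differ.

```typescript
// Assumed record shape; only the timestamp is used.
interface ChatRecord {
  timestamp: string; // ISO 8601, e.g. "2026-01-18T09:30:00Z"
}

// Count records per UTC calendar day: { "YYYY-MM-DD": count }.
function buildHeatmap(jsonl: string): Record<string, number> {
  const heatmap: Record<string, number> = {};
  for (const line of jsonl.split('\n')) {
    if (!line.trim()) continue;
    let parsed: unknown;
    try {
      parsed = JSON.parse(line);
    } catch {
      continue; // skip malformed lines instead of failing the whole file
    }
    const ts = (parsed as Partial<ChatRecord> | null)?.timestamp;
    if (typeof ts !== 'string') continue;
    const time = new Date(ts);
    if (Number.isNaN(time.getTime())) continue;
    const day = time.toISOString().slice(0, 10);
    heatmap[day] = (heatmap[day] ?? 0) + 1;
  }
  return heatmap;
}

// Longest run of consecutive days with at least one record.
function longestStreak(heatmap: Record<string, number>): number {
  const DAY_MS = 24 * 60 * 60 * 1000;
  const days = Object.keys(heatmap)
    .filter((day) => heatmap[day] > 0)
    .map((day) => Date.parse(`${day}T00:00:00Z`))
    .sort((a, b) => a - b);
  let best = 0;
  let run = 0;
  for (let i = 0; i < days.length; i++) {
    run = i > 0 && days[i] - days[i - 1] === DAY_MS ? run + 1 : 1;
    best = Math.max(best, run);
  }
  return best;
}

const sample = [
  '{"timestamp":"2026-01-01T10:00:00Z"}',
  '{"timestamp":"2026-01-01T12:00:00Z"}',
  '{"timestamp":"2026-01-02T08:00:00Z"}',
  'not json',
  '{"timestamp":"2026-01-05T08:00:00Z"}',
].join('\n');

const heatmap = buildHeatmap(sample);
console.log(heatmap); // { '2026-01-01': 2, '2026-01-02': 1, '2026-01-05': 1 }
console.log(longestStreak(heatmap)); // 2
```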

## Original Vite Template Info

This template provides a minimal setup to get React working in Vite with HMR and some ESLint rules.

Currently, two official plugins are available:

- [@vitejs/plugin-react](https://github.com/vitejs/vite-plugin-react/blob/main/packages/plugin-react) uses [Babel](https://babeljs.io/) (or [oxc](https://oxc.rs) when used in [rolldown-vite](https://vite.dev/guide/rolldown)) for Fast Refresh
- [@vitejs/plugin-react-swc](https://github.com/vitejs/vite-plugin-react/blob/main/packages/plugin-react-swc) uses [SWC](https://swc.rs/) for Fast Refresh

## React Compiler

The React Compiler is not enabled in this template because of its impact on development and build performance. To add it, see [this documentation](https://react.dev/learn/react-compiler/installation).

## Expanding the ESLint configuration

If you are developing a production application, we recommend updating the configuration to enable type-aware lint rules:

```js
import { defineConfig, globalIgnores } from 'eslint/config';
import tseslint from 'typescript-eslint';

export default defineConfig([
  globalIgnores(['dist']),
  {
    files: ['**/*.{ts,tsx}'],
    extends: [
      // Other configs...

      // Remove tseslint.configs.recommended and replace with this
      tseslint.configs.recommendedTypeChecked,
      // Alternatively, use this for stricter rules
      tseslint.configs.strictTypeChecked,
      // Optionally, add this for stylistic rules
      tseslint.configs.stylisticTypeChecked,

      // Other configs...
    ],
    languageOptions: {
      parserOptions: {
        project: ['./tsconfig.node.json', './tsconfig.app.json'],
        tsconfigRootDir: import.meta.dirname,
      },
      // other options...
    },
  },
]);
```

You can also install [eslint-plugin-react-x](https://github.com/Rel1cx/eslint-react/tree/main/packages/plugins/eslint-plugin-react-x) and [eslint-plugin-react-dom](https://github.com/Rel1cx/eslint-react/tree/main/packages/plugins/eslint-plugin-react-dom) for React-specific lint rules:

```js
// eslint.config.js
import { defineConfig, globalIgnores } from 'eslint/config';
import reactX from 'eslint-plugin-react-x';
import reactDom from 'eslint-plugin-react-dom';

export default defineConfig([
  globalIgnores(['dist']),
  {
    files: ['**/*.{ts,tsx}'],
    extends: [
      // Other configs...
      // Enable lint rules for React
      reactX.configs['recommended-typescript'],
      // Enable lint rules for React DOM
      reactDom.configs.recommended,
    ],
    languageOptions: {
      parserOptions: {
        project: ['./tsconfig.node.json', './tsconfig.app.json'],
        tsconfigRootDir: import.meta.dirname,
      },
      // other options...
    },
  },
]);
```

13 packages/cli/src/services/insight-page/index.html Normal file

@@ -0,0 +1,13 @@
<!doctype html>
<html lang="en">
  <head>
    <meta charset="UTF-8" />
    <link rel="icon" type="image/png" href="/qwen.png" />
    <meta name="viewport" content="width=device-width, initial-scale=1.0" />
    <title>Qwen Code Insight</title>
  </head>
  <body>
    <div id="root"></div>
    <script type="module" src="/src/main.tsx"></script>
  </body>
</html>

42 packages/cli/src/services/insight-page/package.json Normal file

@@ -0,0 +1,42 @@
{
  "name": "insight-page",
  "private": true,
  "version": "0.0.0",
  "type": "module",
  "scripts": {
    "dev": "vite",
    "dev:server": "BASE_DIR=$HOME/.qwen/projects PORT=3001 tsx ../insightServer.ts",
    "build": "tsc -b && vite build",
    "lint": "eslint .",
    "preview": "vite preview"
  },
  "dependencies": {
    "@uiw/react-heat-map": "^2.3.3",
    "chart.js": "^4.5.1",
    "html2canvas": "^1.4.1",
    "react": "^19.2.0",
    "react-dom": "^19.2.0"
  },
  "devDependencies": {
    "@eslint/js": "^9.39.1",
    "@types/node": "^24.10.1",
    "@types/react": "^19.2.5",
    "@types/react-dom": "^19.2.3",
    "@vitejs/plugin-react": "^5.1.1",
    "autoprefixer": "^10.4.20",
    "eslint": "^9.39.1",
    "eslint-plugin-react-hooks": "^7.0.1",
    "eslint-plugin-react-refresh": "^0.4.24",
    "globals": "^16.5.0",
    "postcss": "^8.4.49",
    "tailwindcss": "^3.4.17",
    "typescript": "~5.9.3",
    "typescript-eslint": "^8.46.4",
    "vite": "npm:rolldown-vite@7.2.5"
  },
  "pnpm": {
    "overrides": {
      "vite": "npm:rolldown-vite@7.2.5"
    }
  }
}

1968 packages/cli/src/services/insight-page/pnpm-lock.yaml generated Normal file

File diff suppressed because it is too large

6 packages/cli/src/services/insight-page/postcss.config.js Normal file

@@ -0,0 +1,6 @@
export default {
  plugins: {
    tailwindcss: {},
    autoprefixer: {},
  },
};

BIN packages/cli/src/services/insight-page/public/qwen.png Normal file

Binary file not shown (79 KiB).

395 packages/cli/src/services/insight-page/src/App.tsx Normal file

@@ -0,0 +1,395 @@
import { useEffect, useRef, useState, type CSSProperties } from 'react';
import {
  Chart,
  LineController,
  LineElement,
  BarController,
  BarElement,
  CategoryScale,
  LinearScale,
  PointElement,
  Legend,
  Title,
  Tooltip,
} from 'chart.js';
import type { ChartConfiguration } from 'chart.js';
import HeatMap from '@uiw/react-heat-map';
import html2canvas from 'html2canvas';

// Register Chart.js components
Chart.register(
  LineController,
  LineElement,
  BarController,
  BarElement,
  CategoryScale,
  LinearScale,
  PointElement,
  Legend,
  Title,
  Tooltip,
);

interface UsageMetadata {
  input: number;
  output: number;
  total: number;
}

interface InsightData {
  heatmap: { [date: string]: number };
  tokenUsage: { [date: string]: UsageMetadata };
  currentStreak: number;
  longestStreak: number;
  longestWorkDate: string | null;
  longestWorkDuration: number;
  activeHours: { [hour: number]: number };
  latestActiveTime: string | null;
  achievements: Array<{
    id: string;
    name: string;
    description: string;
  }>;
}

function App() {
  const [insights, setInsights] = useState<InsightData | null>(null);
  const [loading, setLoading] = useState(true);
  const [error, setError] = useState<string | null>(null);
  const hourChartRef = useRef<HTMLCanvasElement>(null);
  const hourChartInstance = useRef<Chart | null>(null);
  const containerRef = useRef<HTMLDivElement>(null);

  // Load insights data
  useEffect(() => {
    const loadInsights = async () => {
      try {
        setLoading(true);
        const response = await fetch('/api/insights');
        if (!response.ok) {
          throw new Error('Failed to fetch insights');
        }
        const data: InsightData = await response.json();
        setInsights(data);
        setError(null);
      } catch (err) {
        setError((err as Error).message);
        setInsights(null);
      } finally {
        setLoading(false);
      }
    };

    loadInsights();
  }, []);

  // Create hour chart when insights change
  useEffect(() => {
    if (!insights || !hourChartRef.current) return;

    // Destroy existing chart if it exists
    if (hourChartInstance.current) {
      hourChartInstance.current.destroy();
    }

    const labels = Array.from({ length: 24 }, (_, i) => `${i}:00`);
    const data = labels.map((_, i) => insights.activeHours[i] || 0);

    const ctx = hourChartRef.current.getContext('2d');
    if (!ctx) return;

    hourChartInstance.current = new Chart(ctx, {
      type: 'bar',
      data: {
        labels,
        datasets: [
          {
            label: 'Activity per Hour',
            data,
            backgroundColor: 'rgba(52, 152, 219, 0.7)',
            borderColor: 'rgba(52, 152, 219, 1)',
            borderWidth: 1,
          },
        ],
      },
      options: {
        indexAxis: 'y',
        responsive: true,
        maintainAspectRatio: false,
        scales: {
          x: {
            beginAtZero: true,
          },
        },
        plugins: {
          legend: {
            display: false,
          },
        },
      } as ChartConfiguration['options'],
    });
  }, [insights]);

  const handleExport = async () => {
    if (!containerRef.current) return;

    // Hide the export button so it does not appear in the captured image.
    const button = document.getElementById('export-btn');
    const previousDisplay = button?.style.display ?? '';
    if (button) button.style.display = 'none';

    try {
      const canvas = await html2canvas(containerRef.current, {
        scale: 2,
        useCORS: true,
        logging: false,
      });

      const imgData = canvas.toDataURL('image/png');
      const link = document.createElement('a');
      link.href = imgData;
      link.download = `qwen-insights-${new Date().toISOString().slice(0, 10)}.png`;
      link.click();
    } catch (err) {
      console.error('Error capturing image:', err);
      alert('Failed to export image. Please try again.');
    } finally {
      // Restore the button even if the capture failed.
      if (button) button.style.display = previousDisplay;
    }
  };


  if (loading) {
    return (
      <div className="flex min-h-screen items-center justify-center bg-gradient-to-br from-slate-50 via-white to-slate-100">
        <div className="glass-card px-8 py-6 text-center">
          <h2 className="text-xl font-semibold text-slate-900">
            Loading insights...
          </h2>
          <p className="mt-2 text-sm text-slate-600">
            Fetching your coding patterns
          </p>
        </div>
      </div>
    );
  }

  if (error || !insights) {
    return (
      <div className="flex min-h-screen items-center justify-center bg-gradient-to-br from-slate-50 via-white to-slate-100">
        <div className="glass-card px-8 py-6 text-center">
          <h2 className="text-xl font-semibold text-rose-700">
            Error loading insights
          </h2>
          <p className="mt-2 text-sm text-slate-600">
            {error || 'Please try again later.'}
          </p>
        </div>
      </div>
    );
  }

  // Prepare heatmap data for react-heat-map
  const heatmapData = Object.entries(insights.heatmap).map(([date, count]) => ({
    date,
    count,
  }));

  const cardClass = 'glass-card p-6';
  const sectionTitleClass =
    'text-lg font-semibold tracking-tight text-slate-900';
  const captionClass = 'text-sm font-medium text-slate-500';


  return (
    <div className="min-h-screen" ref={containerRef}>
      <div className="mx-auto max-w-6xl px-6 py-10 md:py-12">
        <header className="mb-8 space-y-3 text-center">
          <p className="text-xs font-semibold uppercase tracking-[0.2em] text-slate-500">
            Insights
          </p>
          <h1 className="text-3xl font-semibold text-slate-900 md:text-4xl">
            Qwen Code Insights
          </h1>
          <p className="text-sm text-slate-600">
            Your personalized coding journey and patterns
          </p>
        </header>

        <div className="grid gap-4 md:grid-cols-3 md:gap-6">
          <div className={`${cardClass} h-full`}>
            <div className="flex items-start justify-between">
              <div>
                <p className={captionClass}>Current Streak</p>
                <p className="mt-1 text-4xl font-bold text-slate-900">
                  {insights.currentStreak}
                  <span className="ml-2 text-base font-semibold text-slate-500">
                    days
                  </span>
                </p>
              </div>
              <span className="rounded-full bg-emerald-50 px-4 py-2 text-sm font-semibold text-emerald-700">
                Longest {insights.longestStreak}d
              </span>
            </div>
          </div>

          <div className={`${cardClass} h-full`}>
            <div className="flex items-center justify-between">
              <h3 className={sectionTitleClass}>Active Hours</h3>
              <span className="rounded-full bg-slate-100 px-3 py-1 text-xs font-semibold text-slate-600">
                24h
              </span>
            </div>
            <div className="mt-4 h-56 w-full">
              <canvas ref={hourChartRef}></canvas>
            </div>
          </div>

          <div className={`${cardClass} h-full space-y-3`}>
            <h3 className={sectionTitleClass}>Work Session</h3>
            <div className="grid grid-cols-2 gap-3 text-sm text-slate-700">
              <div className="rounded-xl bg-slate-50 px-3 py-2">
                <p className="text-xs font-semibold uppercase tracking-wide text-slate-500">
                  Longest
                </p>
                <p className="mt-1 text-lg font-semibold text-slate-900">
                  {insights.longestWorkDuration}m
                </p>
              </div>
              <div className="rounded-xl bg-slate-50 px-3 py-2">
                <p className="text-xs font-semibold uppercase tracking-wide text-slate-500">
                  Date
                </p>
                <p className="mt-1 text-lg font-semibold text-slate-900">
                  {insights.longestWorkDate || '-'}
                </p>
              </div>
              <div className="col-span-2 rounded-xl bg-slate-50 px-3 py-2">
                <p className="text-xs font-semibold uppercase tracking-wide text-slate-500">
                  Last Active
                </p>
                <p className="mt-1 text-lg font-semibold text-slate-900">
                  {insights.latestActiveTime || '-'}
                </p>
              </div>
            </div>
          </div>
        </div>


        <div className={`${cardClass} mt-4 space-y-4 md:mt-6`}>
          <div className="flex items-center justify-between">
            <h3 className={sectionTitleClass}>Activity Heatmap</h3>
            <span className="text-xs font-semibold text-slate-500">
              Past year
            </span>
          </div>
          <div className="overflow-x-auto">
            <div className="min-w-[720px] rounded-xl border border-slate-100 bg-white/70 p-4 shadow-inner shadow-slate-100">
              <HeatMap
                value={heatmapData}
                width={1000}
                style={{ color: '#0f172a' } satisfies CSSProperties}
                startDate={
                  new Date(new Date().setFullYear(new Date().getFullYear() - 1))
                }
                endDate={new Date()}
                rectSize={14}
                legendCellSize={12}
                rectProps={{
                  rx: 2,
                }}
                panelColors={{
                  0: '#e2e8f0',
                  2: '#a5d8ff',
                  4: '#74c0fc',
                  10: '#339af0',
                  20: '#1c7ed6',
                }}
              />
            </div>
          </div>
        </div>

        <div className={`${cardClass} mt-4 md:mt-6`}>
          <div className="space-y-3">
            <h3 className={sectionTitleClass}>Token Usage</h3>
            <div className="grid grid-cols-3 gap-3">
              <div className="rounded-xl bg-slate-50 px-4 py-3">
                <p className="text-xs font-semibold uppercase tracking-wide text-slate-500">
                  Input
                </p>
                <p className="mt-1 text-2xl font-bold text-slate-900">
                  {Object.values(insights.tokenUsage)
                    .reduce((acc, usage) => acc + usage.input, 0)
                    .toLocaleString()}
                </p>
              </div>
              <div className="rounded-xl bg-slate-50 px-4 py-3">
                <p className="text-xs font-semibold uppercase tracking-wide text-slate-500">
                  Output
                </p>
                <p className="mt-1 text-2xl font-bold text-slate-900">
                  {Object.values(insights.tokenUsage)
                    .reduce((acc, usage) => acc + usage.output, 0)
                    .toLocaleString()}
                </p>
              </div>
              <div className="rounded-xl bg-slate-50 px-4 py-3">
                <p className="text-xs font-semibold uppercase tracking-wide text-slate-500">
                  Total
                </p>
                <p className="mt-1 text-2xl font-bold text-slate-900">
                  {Object.values(insights.tokenUsage)
                    .reduce((acc, usage) => acc + usage.total, 0)
                    .toLocaleString()}
                </p>
              </div>
            </div>
          </div>
        </div>


        <div className={`${cardClass} mt-4 space-y-4 md:mt-6`}>
          <div className="flex items-center justify-between">
            <h3 className={sectionTitleClass}>Achievements</h3>
            <span className="text-xs font-semibold text-slate-500">
              {insights.achievements.length} total
            </span>
          </div>
          {insights.achievements.length === 0 ? (
            <p className="text-sm text-slate-600">
              No achievements yet. Keep coding!
            </p>
          ) : (
            <div className="divide-y divide-slate-200">
              {insights.achievements.map((achievement) => (
                <div
                  key={achievement.id}
                  className="flex flex-col gap-1 py-3 text-left"
                >
                  <span className="text-base font-semibold text-slate-900">
                    {achievement.name}
                  </span>
                  <p className="text-sm text-slate-600">
                    {achievement.description}
                  </p>
                </div>
              ))}
            </div>
          )}
        </div>

        <div className="mt-6 flex justify-center">
          <button
            id="export-btn"
            className="group inline-flex items-center gap-2 rounded-full bg-slate-900 px-5 py-3 text-sm font-semibold text-white shadow-soft transition focus-visible:outline focus-visible:outline-2 focus-visible:outline-offset-2 focus-visible:outline-slate-400 hover:-translate-y-[1px] hover:shadow-lg active:translate-y-[1px]"
            onClick={handleExport}
          >
            Export as Image
            <span className="text-slate-200 transition group-hover:translate-x-0.5">
              →
            </span>
          </button>
        </div>
      </div>
    </div>
  );
}

export default App;
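The Token Usage card walks `insights.tokenUsage` three times, once per figure. As a minimal sketch (not part of this commit), the three totals can be computed in a single pass; `sumTokenUsage` and `DailyTokenUsage` are hypothetical names, and the per-day `{ input, output, total }` shape is taken from the server's `TokenUsageData` type.

```typescript
// Per-day token counts, mirroring the shape returned by /api/insights.
interface DailyTokenUsage {
  input: number;
  output: number;
  total: number;
}

// Hypothetical helper: sum every day's counts in one pass over the map.
function sumTokenUsage(
  tokenUsage: Record<string, DailyTokenUsage>,
): DailyTokenUsage {
  const totals: DailyTokenUsage = { input: 0, output: 0, total: 0 };
  for (const usage of Object.values(tokenUsage)) {
    totals.input += usage.input;
    totals.output += usage.output;
    totals.total += usage.total;
  }
  return totals;
}

const totals = sumTokenUsage({
  '2025-01-01': { input: 1200, output: 300, total: 1500 },
  '2025-01-02': { input: 800, output: 200, total: 1000 },
});
console.log(totals); // { input: 2000, output: 500, total: 2500 }
```

In the component the result could be computed once after the loading and error guards and rendered with `totals.input.toLocaleString()` and so on; the displayed numbers are the same, the reduction just is not repeated.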


15

packages/cli/src/services/insight-page/src/index.css

Normal file

@@ -0,0 +1,15 @@

@tailwind base;
@tailwind components;
@tailwind utilities;

@layer base {
  body {
    @apply min-h-screen bg-gradient-to-br from-slate-50 via-white to-slate-100 text-slate-900 antialiased;
  }
}

@layer components {
  .glass-card {
    @apply rounded-2xl border border-slate-200 bg-white/80 shadow-soft backdrop-blur;
  }
}


10

packages/cli/src/services/insight-page/src/main.tsx

Normal file

@@ -0,0 +1,10 @@

import { StrictMode } from 'react';
import { createRoot } from 'react-dom/client';
import './index.css';
import App from './App.tsx';

createRoot(document.getElementById('root')!).render(
  <StrictMode>
    <App />
  </StrictMode>,
);


18

packages/cli/src/services/insight-page/tailwind.config.ts

Normal file

@@ -0,0 +1,18 @@

import type { Config } from 'tailwindcss';

const config: Config = {
  content: ['./index.html', './src/**/*.{ts,tsx}'],
  theme: {
    extend: {
      boxShadow: {
        soft: '0 10px 40px rgba(15, 23, 42, 0.08)',
      },
      borderRadius: {
        xl: '1.25rem',
      },
    },
  },
  plugins: [],
};

export default config;


28

packages/cli/src/services/insight-page/tsconfig.app.json

Normal file

@@ -0,0 +1,28 @@

{
  "compilerOptions": {
    "tsBuildInfoFile": "./node_modules/.tmp/tsconfig.app.tsbuildinfo",
    "target": "ES2022",
    "useDefineForClassFields": true,
    "lib": ["ES2022", "DOM", "DOM.Iterable"],
    "module": "ESNext",
    "types": ["vite/client"],
    "skipLibCheck": true,

    /* Bundler mode */
    "moduleResolution": "bundler",
    "allowImportingTsExtensions": true,
    "verbatimModuleSyntax": true,
    "moduleDetection": "force",
    "noEmit": true,
    "jsx": "react-jsx",

    /* Linting */
    "strict": true,
    "noUnusedLocals": true,
    "noUnusedParameters": true,
    "erasableSyntaxOnly": true,
    "noFallthroughCasesInSwitch": true,
    "noUncheckedSideEffectImports": true
  },
  "include": ["src"]
}


7

packages/cli/src/services/insight-page/tsconfig.json

Normal file

@@ -0,0 +1,7 @@

{
  "files": [],
  "references": [
    { "path": "./tsconfig.app.json" },
    { "path": "./tsconfig.node.json" }
  ]
}


26

packages/cli/src/services/insight-page/tsconfig.node.json

Normal file

@@ -0,0 +1,26 @@

{
  "compilerOptions": {
    "tsBuildInfoFile": "./node_modules/.tmp/tsconfig.node.tsbuildinfo",
    "target": "ES2023",
    "lib": ["ES2023"],
    "module": "ESNext",
    "types": ["node"],
    "skipLibCheck": true,

    /* Bundler mode */
    "moduleResolution": "bundler",
    "allowImportingTsExtensions": true,
    "verbatimModuleSyntax": true,
    "moduleDetection": "force",
    "noEmit": true,

    /* Linting */
    "strict": true,
    "noUnusedLocals": true,
    "noUnusedParameters": true,
    "erasableSyntaxOnly": true,
    "noFallthroughCasesInSwitch": true,
    "noUncheckedSideEffectImports": true
  },
  "include": ["vite.config.ts"]
}


File diff suppressed because one or more lines are too long

31210

packages/cli/src/services/insight-page/views/assets/index-D7obW1Jn.js

Normal file


File diff suppressed because one or more lines are too long

14

packages/cli/src/services/insight-page/views/index.html

Normal file

@@ -0,0 +1,14 @@

<!doctype html>
<html lang="en">
  <head>
    <meta charset="UTF-8" />
    <link rel="icon" type="image/svg+xml" href="/qwen.png" />
    <meta name="viewport" content="width=device-width, initial-scale=1.0" />
    <title>insight-page</title>
    <script type="module" crossorigin src="/assets/index-D7obW1Jn.js"></script>
    <link rel="stylesheet" crossorigin href="/assets/index-CV6J1oXz.css">
  </head>
  <body>
    <div id="root"></div>
  </body>
</html>


BIN

packages/cli/src/services/insight-page/views/qwen.png

Normal file

Binary file not shown (79 KiB).

19

packages/cli/src/services/insight-page/vite.config.ts

Normal file

@@ -0,0 +1,19 @@

import { defineConfig } from 'vite';
import react from '@vitejs/plugin-react';

// https://vite.dev/config/
export default defineConfig({
  plugins: [react()],
  build: {
    outDir: 'views',
  },
  server: {
    port: 3000,
    proxy: {
      '/api': {
        target: 'http://localhost:3001',
        changeOrigin: true,
      },
    },
  },
});


404

packages/cli/src/services/insightServer.ts

Normal file

@@ -0,0 +1,404 @@

/**
 * @license
 * Copyright 2025 Qwen Code
 * SPDX-License-Identifier: Apache-2.0
 */

import express from 'express';
import fs from 'fs/promises';
import path, { dirname } from 'path';
import { fileURLToPath } from 'url';
import type { ChatRecord } from '@qwen-code/qwen-code-core';
import { read } from '@qwen-code/qwen-code-core/src/utils/jsonl-utils.js';


interface StreakData {
  currentStreak: number;
  longestStreak: number;
  dates: string[];
}

// Heatmap data: number of chat records per day, keyed by YYYY-MM-DD
interface HeatMapData {
  [date: string]: number;
}

// Token usage per day, keyed by YYYY-MM-DD
interface TokenUsageData {
  [date: string]: {
    input: number;
    output: number;
    total: number;
  };
}

// Achievement data
interface AchievementData {
  id: string;
  name: string;
  description: string;
}

// Aggregated payload returned by /api/insights
interface InsightData {
  heatmap: HeatMapData;
  tokenUsage: TokenUsageData;
  currentStreak: number;
  longestStreak: number;
  longestWorkDate: string | null;
  longestWorkDuration: number; // in minutes
  activeHours: { [hour: number]: number };
  latestActiveTime: string | null;
  achievements: AchievementData[];
}
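`activeHours` is sparse: an hour with no records has no key, and after `res.json` the keys arrive as strings. The code that builds the chart's `labels` and `data` is outside this excerpt, so assuming the Active Hours chart wants one bar per hour of the day, a minimal sketch of expanding the map into 24 slots could look like this (`toHourlySeries` is a hypothetical helper, not part of the commit):

```typescript
// Sparse map as built by generateInsights: hour (0-23) -> record count.
type ActiveHours = { [hour: number]: number };

// Hypothetical helper: expand the sparse map into 24 labelled slots,
// using 0 for hours with no recorded activity.
function toHourlySeries(activeHours: ActiveHours): {
  labels: string[];
  data: number[];
} {
  const labels: string[] = [];
  const data: number[] = [];
  for (let hour = 0; hour < 24; hour++) {
    labels.push(`${String(hour).padStart(2, '0')}:00`);
    data.push(activeHours[hour] ?? 0);
  }
  return { labels, data };
}

const series = toHourlySeries({ 9: 12, 14: 30, 23: 4 });
console.log(series.data.length); // 24
console.log(series.data[9], series.data[10]); // 12 0
```

Indexing with a number still works on the JSON-decoded object, because JavaScript converts property keys to strings before the lookup.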


function debugLog(message: string) {
  const timestamp = new Date().toISOString();
  // console.log already appends a newline, so none is added here.
  const logMessage = `[${timestamp}] ${message}`;
  console.log(logMessage);
}

debugLog('Insight server starting...');

const __filename = fileURLToPath(import.meta.url);
const __dirname = dirname(__filename);

const app = express();
const PORT = process.env['PORT'];
const BASE_DIR = process.env['BASE_DIR'];

if (!BASE_DIR) {
  debugLog('BASE_DIR environment variable is required');
  process.exit(1);
}

// Serve static assets from the views/assets directory
app.use(
  '/assets',
  express.static(path.join(__dirname, 'insight-page', 'views', 'assets')),
);

app.get('/', (_req, res) => {
  res.sendFile(path.join(__dirname, 'insight-page', 'views', 'index.html'));
});

// API endpoint to get insight data
app.get('/api/insights', async (_req, res) => {
  try {
    debugLog('Received request for insights data');
    const insights = await generateInsights(BASE_DIR);
    res.json(insights);
  } catch (error) {
    debugLog(`Error generating insights: ${error}`);
    res.status(500).json({ error: 'Failed to generate insights' });
  }
});


// Process chat files from all projects in the base directory and generate insights
async function generateInsights(baseDir: string): Promise<InsightData> {
  // Initialize data structures
  const heatmap: HeatMapData = {};
  const tokenUsage: TokenUsageData = {};
  const activeHours: { [hour: number]: number } = {};
  const sessionStartTimes: { [sessionId: string]: Date } = {};
  const sessionEndTimes: { [sessionId: string]: Date } = {};

  try {
    // Get all project directories in the base directory
    const projectDirs = await fs.readdir(baseDir);

    // Process each project directory
    for (const projectDir of projectDirs) {
      const projectPath = path.join(baseDir, projectDir);
      const stats = await fs.stat(projectPath);

      // Only process if it's a directory
      if (stats.isDirectory()) {
        const chatsDir = path.join(projectPath, 'chats');

        let chatFiles: string[] = [];
        try {
          // Get all chat files in the chats directory
          const files = await fs.readdir(chatsDir);
          chatFiles = files.filter((file) => file.endsWith('.jsonl'));
        } catch (error) {
          if ((error as NodeJS.ErrnoException).code !== 'ENOENT') {
            debugLog(
              `Error reading chats directory for project ${projectDir}: ${error}`,
            );
          }
          // Skip to the next project if the chats directory is missing or unreadable
          continue;
        }

        // Process each chat file in this project
        for (const file of chatFiles) {
          const filePath = path.join(chatsDir, file);
          const records = await read<ChatRecord>(filePath);

          // Process each record
          for (const record of records) {
            const timestamp = new Date(record.timestamp);
            const dateKey = formatDate(timestamp);
            const hour = timestamp.getHours();

            // Update heatmap (count of records per day)
            heatmap[dateKey] = (heatmap[dateKey] || 0) + 1;

            // Update active hours
            activeHours[hour] = (activeHours[hour] || 0) + 1;

            // Update token usage
            if (record.usageMetadata) {
              const usage = tokenUsage[dateKey] || {
                input: 0,
                output: 0,
                total: 0,
              };

              usage.input += record.usageMetadata.promptTokenCount || 0;
              usage.output += record.usageMetadata.candidatesTokenCount || 0;
              usage.total += record.usageMetadata.totalTokenCount || 0;

              tokenUsage[dateKey] = usage;
            }

            // Track session times
            if (!sessionStartTimes[record.sessionId]) {
              sessionStartTimes[record.sessionId] = timestamp;
            }
            sessionEndTimes[record.sessionId] = timestamp;
          }
        }
      }
    }
  } catch (error) {
    if ((error as NodeJS.ErrnoException).code === 'ENOENT') {
      // Base directory doesn't exist, return empty insights
      debugLog(`Base directory does not exist: ${baseDir}`);
    } else {
      debugLog(`Error reading base directory: ${error}`);
    }
  }


  // Calculate streak data
  const streakData = calculateStreaks(Object.keys(heatmap));

  // Calculate longest work session (wall-clock time between a session's
  // first and last record)
  let longestWorkDuration = 0;
  let longestWorkDate: string | null = null;
  for (const sessionId in sessionStartTimes) {
    const start = sessionStartTimes[sessionId];
    const end = sessionEndTimes[sessionId];
    const durationMinutes = Math.round(
      (end.getTime() - start.getTime()) / (1000 * 60),
    );

    if (durationMinutes > longestWorkDuration) {
      longestWorkDuration = durationMinutes;
      longestWorkDate = formatDate(start);
    }
  }

  // Calculate latest active time from the last record seen in each session.
  // The heatmap keys are date-only strings, so parsing them always yields
  // midnight and cannot provide a time of day.
  let latestActiveTime: string | null = null;
  let latestTimestamp = new Date(0);
  for (const sessionId in sessionEndTimes) {
    const end = sessionEndTimes[sessionId];
    if (end > latestTimestamp) {
      latestTimestamp = end;
      latestActiveTime = end.toLocaleTimeString([], {
        hour: '2-digit',
        minute: '2-digit',
      });
    }
  }

  // Calculate achievements
  const achievements = calculateAchievements(activeHours, heatmap, tokenUsage);

  return {
    heatmap,
    tokenUsage,
    currentStreak: streakData.currentStreak,
    longestStreak: streakData.longestStreak,
    longestWorkDate,
    longestWorkDuration,
    activeHours,
    latestActiveTime,
    achievements,
  };
}
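The longest work session is the wall-clock span between the first and last record seen for a `sessionId`, so idle time inside a session counts toward it. A minimal, I/O-free sketch of the same calculation, assuming each record exposes only `sessionId` and an ISO `timestamp` (the real `ChatRecord` carries more fields); `MinimalRecord` and `longestSession` are hypothetical names. It tracks the earliest and latest timestamp per session, so unlike the loop above it does not depend on records being read in chronological order.

```typescript
// Only the two ChatRecord fields this calculation needs (assumed shape).
interface MinimalRecord {
  sessionId: string;
  timestamp: string; // ISO 8601
}

// Hypothetical helper: longest first-to-last-record span, in whole minutes.
function longestSession(records: MinimalRecord[]): {
  sessionId: string | null;
  minutes: number;
} {
  // Earliest and latest timestamp (ms since epoch) per session.
  const spans = new Map<string, { start: number; end: number }>();
  for (const record of records) {
    const t = new Date(record.timestamp).getTime();
    const span = spans.get(record.sessionId);
    if (!span) {
      spans.set(record.sessionId, { start: t, end: t });
    } else {
      span.start = Math.min(span.start, t);
      span.end = Math.max(span.end, t);
    }
  }

  let best: { sessionId: string | null; minutes: number } = {
    sessionId: null,
    minutes: 0,
  };
  for (const [sessionId, { start, end }] of spans) {
    const minutes = Math.round((end - start) / 60_000);
    if (minutes > best.minutes) best = { sessionId, minutes };
  }
  return best;
}

const result = longestSession([
  { sessionId: 'a', timestamp: '2025-01-01T09:00:00Z' },
  { sessionId: 'a', timestamp: '2025-01-01T09:45:00Z' },
  { sessionId: 'b', timestamp: '2025-01-01T10:00:00Z' },
  { sessionId: 'b', timestamp: '2025-01-01T12:30:00Z' },
]);
console.log(result); // { sessionId: 'b', minutes: 150 }
```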

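
// Helper function to format a date as YYYY-MM-DD in local time.
// toISOString() would use UTC, so a record made near midnight could land on
// a different day than the local getHours() value used for activeHours.
function formatDate(date: Date): string {
  const year = date.getFullYear();
  const month = String(date.getMonth() + 1).padStart(2, '0');
  const day = String(date.getDate()).padStart(2, '0');
  return `${year}-${month}-${day}`;
}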
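The day arithmetic in `calculateStreaks` is easiest to check as a pure function that takes `today` as a parameter instead of reading the clock. A minimal sketch under these assumptions: keys are local `YYYY-MM-DD` dates, and a streak counts as current when the last active day is today or yesterday, as the comment in `calculateStreaks` describes. `computeStreaks`, `parseLocalDate`, and `daysBetween` are hypothetical names.

```typescript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Parse 'YYYY-MM-DD' as local midnight (new Date('YYYY-MM-DD') would be UTC).
function parseLocalDate(key: string): Date {
  const [year, month, day] = key.split('-').map(Number);
  return new Date(year, month - 1, day);
}

// Whole calendar days from a to b; rounding absorbs 23/25-hour DST days.
function daysBetween(a: Date, b: Date): number {
  return Math.round((b.getTime() - a.getTime()) / MS_PER_DAY);
}

// Hypothetical helper: longest run of consecutive active days, plus the run
// ending at the last active day if that day is today or yesterday.
function computeStreaks(
  dateKeys: string[],
  today: Date,
): { currentStreak: number; longestStreak: number } {
  if (dateKeys.length === 0) return { currentStreak: 0, longestStreak: 0 };

  // Deduplicate and sort (zero-padded YYYY-MM-DD keys sort as strings).
  const days = [...new Set(dateKeys)].sort().map(parseLocalDate);

  let run = 1;
  let longest = 1;
  for (let i = 1; i < days.length; i++) {
    run = daysBetween(days[i - 1], days[i]) === 1 ? run + 1 : 1;
    longest = Math.max(longest, run);
  }

  const todayMidnight = new Date(
    today.getFullYear(),
    today.getMonth(),
    today.getDate(),
  );
  const sinceLast = daysBetween(days[days.length - 1], todayMidnight);
  return {
    currentStreak: sinceLast === 0 || sinceLast === 1 ? run : 0,
    longestStreak: longest,
  };
}

const streaks = computeStreaks(
  ['2025-03-01', '2025-03-02', '2025-03-03', '2025-03-05', '2025-03-06'],
  new Date(2025, 2, 7),
);
console.log(streaks); // { currentStreak: 2, longestStreak: 3 }
```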


// Calculate streaks from activity dates
function calculateStreaks(dates: string[]): StreakData {
  if (dates.length === 0) {
    return { currentStreak: 0, longestStreak: 0, dates: [] };
  }

  // Convert YYYY-MM-DD strings to local-midnight Date objects and sort them.
  // new Date('YYYY-MM-DD') parses as UTC midnight, which falls on the
  // previous local day in timezones behind UTC.
  const dateObjects = dates.map((dateStr) => {
    const [year, month, day] = dateStr.split('-').map(Number);
    return new Date(year, month - 1, day);
  });
  dateObjects.sort((a, b) => a.getTime() - b.getTime());

  let currentStreak = 1;
  let maxStreak = 1;
  let currentDate = new Date(dateObjects[0]);
  currentDate.setHours(0, 0, 0, 0); // Normalize to start of day

  for (let i = 1; i < dateObjects.length; i++) {
    const nextDate = new Date(dateObjects[i]);
    nextDate.setHours(0, 0, 0, 0); // Normalize to start of day

    // Calculate difference in days. Round rather than floor: a day that
    // spans a DST change is 23 or 25 hours long.
    const diffDays = Math.round(
      (nextDate.getTime() - currentDate.getTime()) / (1000 * 60 * 60 * 24),
    );

    if (diffDays === 1) {
      // Consecutive day
      currentStreak++;
      maxStreak = Math.max(maxStreak, currentStreak);
    } else if (diffDays > 1) {
      // Gap in streak
      currentStreak = 1;
    }
    // If diffDays === 0, same day, so streak continues

    currentDate = nextDate;
  }

  // Check if the streak is still ongoing (if last activity was yesterday or today)
  const today = new Date();
  today.setHours(0, 0, 0, 0);
  const yesterday = new Date(today);
  yesterday.setDate(yesterday.getDate() - 1);

  if (
    currentDate.getTime() === today.getTime() ||
    currentDate.getTime() === yesterday.getTime()
  ) {