Mirror of https://github.com/QwenLM/qwen-code.git, synced 2026-01-19 07:16:19 +00:00

Compare commits: feat/ide-t ... feat/exten (21 commits)

| SHA1 |
|---|
| f8e41fb7fa |
| 6e641b8def |
| a546e84887 |
| 706cdb2ac1 |
| df33029589 |
| c8b0efa4d9 |
| d0104dc487 |
| 592bf2bad1 |
| f10fcc8dc9 |
| f7fb624af9 |
| 2852f48a4a |
| f00f76456c |
| 4c7605d900 |
| b37ede07e8 |
| 0a88dd7861 |
| 70991e474f |
| 551e546974 |
| 74013bd8b2 |
| 18713ef2b0 |
| 50dac93c80 |
| 22504b0a5b |

.github/workflows/vscode-extension-test.yml (vendored, 279 lines deleted)

@@ -1,279 +0,0 @@
name: 'VSCode Extension Tests'

on:
  push:
    branches:
      - 'main'
      - 'release/**'
      - feat/ide-test-ci
    paths:
      - 'packages/vscode-ide-companion/**'
      - '.github/workflows/vscode-extension-test.yml'
  pull_request:
    branches:
      - 'main'
      - 'release/**'
    paths:
      - 'packages/vscode-ide-companion/**'
      - '.github/workflows/vscode-extension-test.yml'
  workflow_dispatch:

concurrency:
  group: '${{ github.workflow }}-${{ github.head_ref || github.ref }}'
  cancel-in-progress: true

permissions:
  contents: 'read'
  checks: 'write'
  pull-requests: 'write' # Needed to comment on PRs

jobs:
  unit-test:
    name: 'Unit Tests'
    runs-on: '${{ matrix.os }}'
    strategy:
      fail-fast: false
      matrix:
        os:
          - 'ubuntu-latest'
        node-version:
          - '20.x'

    steps:
      - name: 'Checkout'
        uses: 'actions/checkout@v4'

      - name: 'Setup Node.js'
        uses: 'actions/setup-node@v4'
        with:
          node-version: '${{ matrix.node-version }}'
          cache: 'npm'

      - name: 'Install dependencies'
        run: 'npm ci'

      - name: 'Build project'
        run: 'npm run build'
        working-directory: 'packages/vscode-ide-companion'

      - name: 'Run unit tests'
        run: 'npm run test:ci'
        working-directory: 'packages/vscode-ide-companion'

      - name: 'Upload coverage'
        if: matrix.os == 'ubuntu-latest' && matrix.node-version == '20.x'
        uses: 'actions/upload-artifact@v4'
        with:
          name: 'coverage-unit-test'
          path: 'packages/vscode-ide-companion/coverage'

  integration-test:
    name: 'Integration Tests'
    runs-on: 'ubuntu-latest'
    needs: 'unit-test'
    if: needs.unit-test.result == 'success'

    steps:
      - name: 'Checkout'
        uses: 'actions/checkout@v4'

      - name: 'Setup Node.js'
        uses: 'actions/setup-node@v4'
        with:
          node-version: '20.x'
          cache: 'npm'

      - name: 'Install dependencies'
        run: 'npm ci'

      - name: 'Build project'
        run: 'npm run build'
        working-directory: 'packages/vscode-ide-companion'

      - name: 'Bundle CLI'
        run: 'node scripts/prepackage.js'
        working-directory: 'packages/vscode-ide-companion'

      - name: 'Run integration tests'
        run: 'xvfb-run -a npm run test:integration'
        working-directory: 'packages/vscode-ide-companion'

  e2e-test:
    name: 'E2E Tests'
    runs-on: 'ubuntu-latest'
    needs: 'integration-test'
    if: needs.integration-test.result == 'success'

    steps:
      - name: 'Checkout'
        uses: 'actions/checkout@v4'

      - name: 'Setup Node.js'
        uses: 'actions/setup-node@v4'
        with:
          node-version: '20.x'
          cache: 'npm'

      - name: 'Install dependencies'
        run: 'npm ci'

      - name: 'Install Playwright browsers'
        run: 'npx playwright install --with-deps chromium'
        working-directory: 'packages/vscode-ide-companion'

      - name: 'Build project'
        run: 'npm run build'
        working-directory: 'packages/vscode-ide-companion'

      - name: 'Bundle CLI'
        run: 'node scripts/prepackage.js'
        working-directory: 'packages/vscode-ide-companion'

      - name: 'Run E2E tests'
        run: 'xvfb-run -a npm run test:e2e'
        working-directory: 'packages/vscode-ide-companion'

      - name: 'Upload E2E test results'
        if: always()
        uses: 'actions/upload-artifact@v4'
        with:
          name: 'e2e-test-results'
          path: 'packages/vscode-ide-companion/e2e/test-results'

      - name: 'Upload Playwright report'
        if: always()
        uses: 'actions/upload-artifact@v4'
        with:
          name: 'playwright-report'
          path: 'packages/vscode-ide-companion/e2e/playwright-report'

  e2e-vscode-test:
    name: 'VSCode E2E Tests'
    runs-on: 'ubuntu-latest'
    needs: 'e2e-test'
    if: needs.e2e-test.result == 'success'

    steps:
      - name: 'Checkout'
        uses: 'actions/checkout@v4'

      - name: 'Setup Node.js'
        uses: 'actions/setup-node@v4'
        with:
          node-version: '20.x'
          cache: 'npm'

      - name: 'Install dependencies'
        run: 'npm ci'

      - name: 'Install Playwright browsers'
        run: 'npx playwright install --with-deps'
        working-directory: 'packages/vscode-ide-companion'

      - name: 'Build project'
        run: 'npm run build'
        working-directory: 'packages/vscode-ide-companion'

      - name: 'Bundle CLI'
        run: 'node scripts/prepackage.js'
        working-directory: 'packages/vscode-ide-companion'

      - name: 'Run VSCode E2E tests'
        run: 'xvfb-run -a npm run test:e2e:vscode'
        working-directory: 'packages/vscode-ide-companion'

      - name: 'Upload VSCode E2E test results'
        if: always()
        uses: 'actions/upload-artifact@v4'
        with:
          name: 'vscode-e2e-test-results'
          path: 'packages/vscode-ide-companion/e2e-vscode/test-results'

      - name: 'Upload VSCode Playwright report'
        if: always()
        uses: 'actions/upload-artifact@v4'
        with:
          name: 'vscode-playwright-report'
          path: 'packages/vscode-ide-companion/e2e-vscode/playwright-report'

  # Job to comment test results on PR if tests fail
  comment-on-pr:
    name: 'Comment PR with Test Results'
    runs-on: 'ubuntu-latest'
    needs: [unit-test, integration-test, e2e-test, e2e-vscode-test]
    if: always() && github.event_name == 'pull_request' && (needs.unit-test.result == 'failure' || needs.integration-test.result == 'failure' || needs.e2e-test.result == 'failure' || needs.e2e-vscode-test.result == 'failure')

    steps:
      - name: 'Checkout'
        uses: 'actions/checkout@v4'

      - name: 'Find Comment'
        uses: 'peter-evans/find-comment@v3'
        id: 'find-comment'
        with:
          issue-number: '${{ github.event.pull_request.number }}'
          comment-author: 'github-actions[bot]'
          body-includes: 'VSCode Extension Test Results'

      - name: 'Comment on PR'
        uses: 'peter-evans/create-or-update-comment@v4'
        with:
          comment-id: '${{ steps.find-comment.outputs.comment-id }}'
          issue-number: '${{ github.event.pull_request.number }}'
          edit-mode: 'replace'
          body: |
            ## VSCode Extension Test Results

            Tests have failed for this pull request. Please check the following jobs:

            - Unit Tests: `${{ needs.unit-test.result }}`
            - Integration Tests: `${{ needs.integration-test.result }}`
            - E2E Tests: `${{ needs.e2e-test.result }}`
            - VSCode E2E Tests: `${{ needs.e2e-vscode-test.result }}`

            [Check the workflow run](${{ github.server_url }}/${{ github.repository }}/actions/runs/${{ github.run_id }}) for details.

  # Job to create an issue if tests fail when not on a PR (e.g. direct push to main)
  create-issue:
    name: 'Create Issue for Failed Tests'
    runs-on: 'ubuntu-latest'
    needs: [unit-test, integration-test, e2e-test, e2e-vscode-test]
    if: always() && github.event_name == 'push' && (needs.unit-test.result == 'failure' || needs.integration-test.result == 'failure' || needs.e2e-test.result == 'failure' || needs.e2e-vscode-test.result == 'failure')

    steps:
      - name: 'Checkout'
        uses: 'actions/checkout@v4'

      - name: 'Create Issue'
        uses: 'actions/github-script@v7'
        with:
          script: |
            const { owner, repo } = context.repo;
            const result = await github.rest.issues.create({
              owner,
              repo,
              title: `VSCode Extension Tests Failed - ${context.sha.substring(0, 7)}`,
              body: `VSCode Extension Tests failed on commit ${context.sha}\n\nResults:\n- Unit Tests: ${{ needs.unit-test.result }}\n- Integration Tests: ${{ needs.integration-test.result }}\n- E2E Tests: ${{ needs.e2e-test.result }}\n- VSCode E2E Tests: ${{ needs.e2e-vscode-test.result }}\n\nWorkflow run: ${{ github.server_url }}/${{ github.repository }}/actions/runs/${{ github.run_id }}`
            });

  # Summary job to pass/fail the entire workflow based on test results
  vscode-extension-tests:
    name: 'VSCode Extension Tests Summary'
    runs-on: 'ubuntu-latest'
    needs:
      - 'unit-test'
      - 'integration-test'
      - 'e2e-test'
      - 'e2e-vscode-test'
    if: always()
    steps:
      - name: 'Check test results'
        run: |
          if [[ "${{ needs.unit-test.result }}" == "failure" ]] || \
             [[ "${{ needs.integration-test.result }}" == "failure" ]] || \
             [[ "${{ needs.e2e-test.result }}" == "failure" ]] || \
             [[ "${{ needs.e2e-vscode-test.result }}" == "failure" ]]; then
            echo "One or more test jobs failed"
            exit 1
          fi
          echo "All tests passed!"
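The follow-up jobs in the deleted workflow react only to a `failure` result. Because each test job is gated on `needs.<job>.result == 'success'`, one upstream failure leaves the later test jobs `skipped`, and neither `skipped` nor `cancelled` is treated as a failure by the comment, issue, or summary checks. The JavaScript sketch below mirrors those `if:` expressions so the behaviour can be checked by hand; it assumes the four result strings GitHub Actions reports (`success`, `failure`, `cancelled`, `skipped`), and the helper names `anyTestFailed` and `followUpJobs` are hypothetical, not part of any Actions API.

```javascript
// Result strings exposed through `needs.<job>.result` (assumed complete).
const RESULTS = new Set(["success", "failure", "cancelled", "skipped"]);

// Job ids copied from the deleted workflow.
const TEST_JOBS = ["unit-test", "integration-test", "e2e-test", "e2e-vscode-test"];

// Mirrors the repeated `needs.X.result == 'failure' || ...` expression.
function anyTestFailed(needs) {
  return TEST_JOBS.some((job) => {
    const result = needs[job];
    if (!RESULTS.has(result)) {
      throw new Error(`unexpected result for ${job}: ${result}`);
    }
    return result === "failure";
  });
}

// In the workflow these jobs use `always()`, so they are evaluated even
// after an upstream failure; the sketch simply assumes they are evaluated.
function followUpJobs(eventName, needs) {
  const failed = anyTestFailed(needs);
  return {
    commentOnPr: failed && eventName === "pull_request",
    createIssue: failed && eventName === "push",
    // The summary step exits 1 only on "failure"; skipped/cancelled pass.
    summaryExitCode: failed ? 1 : 0,
  };
}

// Example: integration tests fail, so the later test jobs are skipped.
const needs = {
  "unit-test": "success",
  "integration-test": "failure",
  "e2e-test": "skipped",
  "e2e-vscode-test": "skipped",
};
console.log(followUpJobs("pull_request", needs));
```

If this workflow were restored, one detail worth checking: the top-level `permissions` block grants `contents`, `checks`, and `pull-requests` but not `issues: write`, which the `github.rest.issues.create` call in `create-issue` normally requires when running with the default `GITHUB_TOKEN`.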

.gitignore (vendored, 5 lines deleted)

@@ -63,8 +63,3 @@ patch_output.log
docs-site/.next
# content is a symlink to ../docs
docs-site/content

# vscode-ida-companion test files
.vscode-test/
test-results/
e2e-vscode/

@@ -4,11 +4,25 @@ Qwen Code extensions package prompts, MCP servers, and custom commands into a fa

## Extension management

We offer a suite of extension management tools using `qwen extensions` commands.
We offer a suite of extension management tools using both `qwen extensions` CLI commands and `/extensions` slash commands within the interactive CLI.

Note that these commands are not supported from within the CLI, although you can list installed extensions using the `/extensions list` subcommand.
### Runtime Extension Management (Slash Commands)

Note that all of these commands will only be reflected in active CLI sessions on restart.
You can manage extensions at runtime within the interactive CLI using `/extensions` slash commands. These commands support hot-reloading, meaning changes take effect immediately without restarting the application.

| Command | Description |
| ------------------------------------------------------ | --------------------------------------------------------------- |
| `/extensions` or `/extensions list` | List all installed extensions with their status |
| `/extensions install <source>` | Install an extension from a git URL, local path, or marketplace |
| `/extensions uninstall <name>` | Uninstall an extension |
| `/extensions enable <name> --scope <user\|workspace>` | Enable an extension |
| `/extensions disable <name> --scope <user\|workspace>` | Disable an extension |
| `/extensions update <name>` | Update a specific extension |
| `/extensions update --all` | Update all extensions with available updates |

### CLI Extension Management

You can also manage extensions using `qwen extensions` CLI commands. Note that changes made via CLI commands will be reflected in active CLI sessions on restart.

### Installing an extension


@@ -98,7 +112,18 @@ The `qwen-extension.json` file contains the configuration for the extension. The
    }
  },
  "contextFileName": "QWEN.md",
  "excludeTools": ["run_shell_command"]
  "excludeTools": ["run_shell_command"],
  "commands": "commands",
  "skills": "skills",
  "agents": "agents",
  "settings": [
    {
      "name": "API Key",
      "description": "Your API key for the service",
      "envVar": "MY_API_KEY",
      "sensitive": true
    }
  ]
}
```

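The `settings` array in the manifest above declares values the user is prompted for at install time, which are then passed to MCP servers as environment variables. The JavaScript sketch below shows one way that mapping could work, using the field names from the example (`name`, `description`, `envVar`, `sensitive`). The function `settingsToEnv`, keying user input by `envVar`, the error on a missing value, and masking sensitive values for display are all illustrative assumptions, not Qwen Code's actual implementation.

```javascript
// Hypothetical helper: combine a manifest's `settings` array with the values
// a user entered at install time into an environment map for an MCP server.
function settingsToEnv(settings, userValues) {
  const env = {};
  const display = {};
  for (const setting of settings) {
    const value = userValues[setting.envVar];
    if (value === undefined || value === "") {
      throw new Error(`Missing value for setting "${setting.name}" (${setting.envVar})`);
    }
    env[setting.envVar] = value;
    // Never echo sensitive values back to the terminal.
    display[setting.envVar] = setting.sensitive ? "*".repeat(8) : value;
  }
  return { env, display };
}

// Same shape as the `settings` entry in qwen-extension.json above.
const manifestSettings = [
  {
    name: "API Key",
    description: "Your API key for the service",
    envVar: "MY_API_KEY",
    sensitive: true,
  },
];

const { env, display } = settingsToEnv(manifestSettings, { MY_API_KEY: "abc123" });
// A server process could then be started with { ...process.env, ...env }.
console.log(display);
```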

@@ -108,12 +133,18 @@ The `qwen-extension.json` file contains the configuration for the extension. The
- Note that all MCP server configuration options are supported except for `trust`.
- `contextFileName`: The name of the file that contains the context for the extension. This will be used to load the context from the extension directory. If this property is not used but a `QWEN.md` file is present in your extension directory, then that file will be loaded.
- `excludeTools`: An array of tool names to exclude from the model. You can also specify command-specific restrictions for tools that support it, like the `run_shell_command` tool. For example, `"excludeTools": ["run_shell_command(rm -rf)"]` will block the `rm -rf` command. Note that this differs from the MCP server `excludeTools` functionality, which can be listed in the MCP server config. **Important:** Tools specified in `excludeTools` will be disabled for the entire conversation context and will affect all subsequent queries in the current session.
- `commands`: The directory containing custom commands (default: `commands`). Commands are `.md` files that define prompts.
- `skills`: The directory containing custom skills (default: `skills`). Skills are discovered automatically and become available via the `/skills` command.
- `agents`: The directory containing custom subagents (default: `agents`). Subagents are `.yaml` or `.md` files that define specialized AI assistants.
- `settings`: An array of settings that the extension requires. When installing, users will be prompted to provide values for these settings. The values are stored securely and passed to MCP servers as environment variables.

When Qwen Code starts, it loads all the extensions and merges their configurations. If there are any conflicts, the workspace configuration takes precedence.

### Custom commands

Extensions can provide [custom commands](./cli/commands.md#custom-commands) by placing TOML files in a `commands/` subdirectory within the extension directory. These commands follow the same format as user and project custom commands and use standard naming conventions.
Extensions can provide [custom commands](./cli/commands.md#custom-commands) by placing Markdown files in a `commands/` subdirectory within the extension directory. These commands follow the same format as user and project custom commands and use standard naming conventions.

> **Note:** The command format has been updated from TOML to Markdown. TOML files are deprecated but still supported. You can migrate existing TOML commands using the automatic migration prompt that appears when TOML files are detected.

**Example**

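The `excludeTools` description above accepts either a bare tool name or a tool name with a command restriction such as `run_shell_command(rm -rf)`. The JavaScript sketch below illustrates one plausible reading of that syntax, assuming the text in parentheses is matched as a prefix of the (trimmed) shell command. Qwen Code's real matching rules may differ, and `parseExclusion` and `isExcluded` are hypothetical names.

```javascript
// Parse entries like "run_shell_command" or "run_shell_command(rm -rf)".
// Assumption: the text in parentheses is a command prefix to block.
function parseExclusion(entry) {
  const match = /^([A-Za-z0-9_]+)(?:\((.*)\))?$/.exec(entry.trim());
  if (!match) throw new Error(`Unrecognized excludeTools entry: ${entry}`);
  return { tool: match[1], commandPrefix: match[2]?.trim() };
}

// Returns true if a call to `toolName` with `command` should be blocked.
function isExcluded(excludeTools, toolName, command = "") {
  return excludeTools.map(parseExclusion).some(({ tool, commandPrefix }) => {
    if (tool !== toolName) return false;
    // A bare tool name blocks the tool entirely.
    if (commandPrefix === undefined) return true;
    return command.trim().startsWith(commandPrefix);
  });
}

const excludeTools = ["run_shell_command(rm -rf)"];
console.log(isExcluded(excludeTools, "run_shell_command", "rm -rf build/"));
console.log(isExcluded(excludeTools, "run_shell_command", "ls -la"));
console.log(isExcluded(excludeTools, "read_file"));
```

A naive prefix check like this would not catch equivalent spellings such as `rm -fr`, which is one reason to treat command-level exclusions as a guard rail rather than a security boundary.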

@@ -123,15 +154,46 @@ An extension named `gcp` with the following structure:
.qwen/extensions/gcp/
├── qwen-extension.json
└── commands/
    ├── deploy.toml
    ├── deploy.md
    └── gcs/
        └── sync.toml
        └── sync.md
```

Would provide these commands:

- `/deploy` - Shows as `[gcp] Custom command from deploy.toml` in help
- `/gcs:sync` - Shows as `[gcp] Custom command from sync.toml` in help
- `/deploy` - Shows as `[gcp] Custom command from deploy.md` in help
- `/gcs:sync` - Shows as `[gcp] Custom command from sync.md` in help

### Custom skills

Extensions can provide custom skills by placing skill files in a `skills/` subdirectory within the extension directory. Each skill should have a `SKILL.md` file with YAML frontmatter defining the skill's name and description.

**Example**

```
.qwen/extensions/my-extension/
├── qwen-extension.json
└── skills/
    └── pdf-processor/
        └── SKILL.md
```

The skill will be available via the `/skills` command when the extension is active.

### Custom subagents

Extensions can provide custom subagents by placing agent configuration files in an `agents/` subdirectory within the extension directory. Agents are defined using YAML or Markdown files.

**Example**

```
.qwen/extensions/my-extension/
├── qwen-extension.json
└── agents/
    └── testing-expert.yaml
```

Extension subagents appear in the subagent manager dialog under "Extension Agents" section.

### Conflict resolution

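The `gcp` example above maps `commands/deploy.md` to `/deploy` and `commands/gcs/sync.md` to `/gcs:sync`: the path under `commands/` becomes the command name, the file extension is dropped, and directory separators (`/` or `\`, per the naming rules in the commands documentation) become colons. A small JavaScript sketch of that rule, assuming only `.md` and the deprecated `.toml` files are considered; `commandNameFor` is a hypothetical name.

```javascript
// Hypothetical helper: derive a slash-command name from a path relative to
// an extension's `commands/` directory. Returns null for other file types.
function commandNameFor(relativePath) {
  const match = /^(.+)\.(md|toml)$/.exec(relativePath);
  if (!match) return null; // Assumption: non-command files are ignored.
  // Both "/" and "\" separators turn into ":" in the command name.
  return "/" + match[1].split(/[\\/]+/).join(":");
}

for (const file of ["deploy.md", "gcs/sync.md", "gcs\\sync.toml", "README.txt"]) {
  console.log(file, "->", commandNameFor(file));
}
```

Because the extension is stripped before naming, `deploy.toml` and `deploy.md` in the same directory would both claim `/deploy`, which is why the example lists each command twice across the old and new formats.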

@@ -148,22 +148,119 @@ Custom commands provide a way to create shortcuts for complex prompts. Let's add
mkdir -p commands/fs
```

2. Create a file named `commands/fs/grep-code.toml`:
2. Create a file named `commands/fs/grep-code.md`:

```markdown
---
description: Search for a pattern in code and summarize findings
---

```toml
prompt = """
Please summarize the findings for the pattern `{{args}}`.

Search Results:
!{grep -r {{args}} .}
"""
```

This command, `/fs:grep-code`, will take an argument, run the `grep` shell command with it, and pipe the results into a prompt for summarization.

> **Note:** Commands use Markdown format with optional YAML frontmatter. TOML format is deprecated but still supported for backwards compatibility.

After saving the file, restart the Qwen Code. You can now run `/fs:grep-code "some pattern"` to use your new command.

## Step 5: Add a Custom `QWEN.md`
## Step 5: Add Custom Skills and Subagents (Optional)

Extensions can also provide custom skills and subagents to extend Qwen Code's capabilities.

### Adding a Custom Skill

Skills are model-invoked capabilities that the AI can automatically use when relevant.

1. Create a `skills` directory with a skill subdirectory:

```bash
mkdir -p skills/code-analyzer
```

2. Create a `skills/code-analyzer/SKILL.md` file:

```markdown
---
name: code-analyzer
description: Analyzes code structure and provides insights about complexity, dependencies, and potential improvements
---

# Code Analyzer

## Instructions

When analyzing code, focus on:

- Code complexity and maintainability
- Dependencies and coupling
- Potential performance issues
- Suggestions for improvements

## Examples

- "Analyze the complexity of this function"
- "What are the dependencies of this module?"
```

### Adding a Custom Subagent

Subagents are specialized AI assistants for specific tasks.

1. Create an `agents` directory:

```bash
mkdir -p agents
```

2. Create an `agents/refactoring-expert.md` file:

```markdown
---
name: refactoring-expert
description: Specialized in code refactoring, improving code structure and maintainability
tools:
- read_file
- write_file
- read_many_files
---

You are a refactoring specialist focused on improving code quality.

Your expertise includes:

- Identifying code smells and anti-patterns
- Applying SOLID principles
- Improving code readability and maintainability
- Safe refactoring with minimal risk

For each refactoring task:

1. Analyze the current code structure
2. Identify areas for improvement
3. Propose refactoring steps
4. Implement changes incrementally
5. Verify functionality is preserved
```

3. Update your `qwen-extension.json` to include the new directories:

```json
{
  "name": "my-first-extension",
  "version": "1.0.0",
  "skills": "skills",
  "agents": "agents",
  "mcpServers": { ... }
}
```

After restarting Qwen Code, your custom skills will be available via `/skills` and subagents via `/agents manage`.

## Step 6: Add a Custom `QWEN.md`

You can provide persistent context to the model by adding a `QWEN.md` file to your extension. This is useful for giving the model instructions on how to behave or information about your extension's tools. Note that you may not always need this for extensions built to expose commands and prompts.

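Both the `SKILL.md` and the `agents/refactoring-expert.md` files in the tutorial hunk above begin with a YAML frontmatter block between `---` lines: the skill declares `name` and `description`, and the subagent adds a `tools` list. The JavaScript sketch below parses only that small subset (`key: value` pairs and `- item` lists) to make the structure concrete, and treats `name` and `description` as required. It is not a YAML parser (a real loader would use one), and `parseFrontmatter` is a hypothetical name.

```javascript
// Minimal frontmatter reader for the subset used in the examples:
// scalar `key: value` lines and `- item` lists under a bare `key:`.
// Quoting, nesting, and multi-line strings are not handled.
function parseFrontmatter(text) {
  const lines = text.split(/\r?\n/);
  if (lines[0] !== "---") return { data: {}, body: text };
  const end = lines.indexOf("---", 1);
  if (end === -1) throw new Error("Unterminated frontmatter");

  const data = {};
  let currentListKey = null;
  for (const line of lines.slice(1, end)) {
    const item = /^\s*-\s+(.*)$/.exec(line);
    if (item && currentListKey) {
      data[currentListKey].push(item[1].trim());
      continue;
    }
    const pair = /^([A-Za-z_][\w-]*):\s*(.*)$/.exec(line);
    if (pair) {
      const [, key, value] = pair;
      if (value === "") {
        data[key] = []; // A bare `key:` starts a list in this subset.
        currentListKey = key;
      } else {
        data[key] = value.trim();
        currentListKey = null;
      }
    }
  }
  return { data, body: lines.slice(end + 1).join("\n").trim() };
}

const agentFile = `---
name: refactoring-expert
description: Specialized in code refactoring, improving code structure and maintainability
tools:
- read_file
- write_file
- read_many_files
---

You are a refactoring specialist focused on improving code quality.`;

const { data, body } = parseFrontmatter(agentFile);
// This sketch treats name and description as required for skills and agents.
for (const field of ["name", "description"]) {
  if (!data[field]) throw new Error(`Missing required field: ${field}`);
}
console.log(data.name, data.tools);
```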

@@ -194,7 +291,7 @@ You can provide persistent context to the model by adding a `QWEN.md` file to yo

Restart the CLI again. The model will now have the context from your `QWEN.md` file in every session where the extension is active.

## Step 6: Releasing Your Extension
## Step 7: Releasing Your Extension

Once you are happy with your extension, you can share it with others. The two primary ways of releasing extensions are via a Git repository or through GitHub Releases. Using a public Git repository is the simplest method.


@@ -207,6 +304,7 @@ You've successfully created a Qwen Code extension! You learned how to:
- Bootstrap a new extension from a template.
- Add custom tools with an MCP server.
- Create convenient custom commands.
- Add custom skills and subagents.
- Provide persistent context to the model.
- Link your extension for local development.


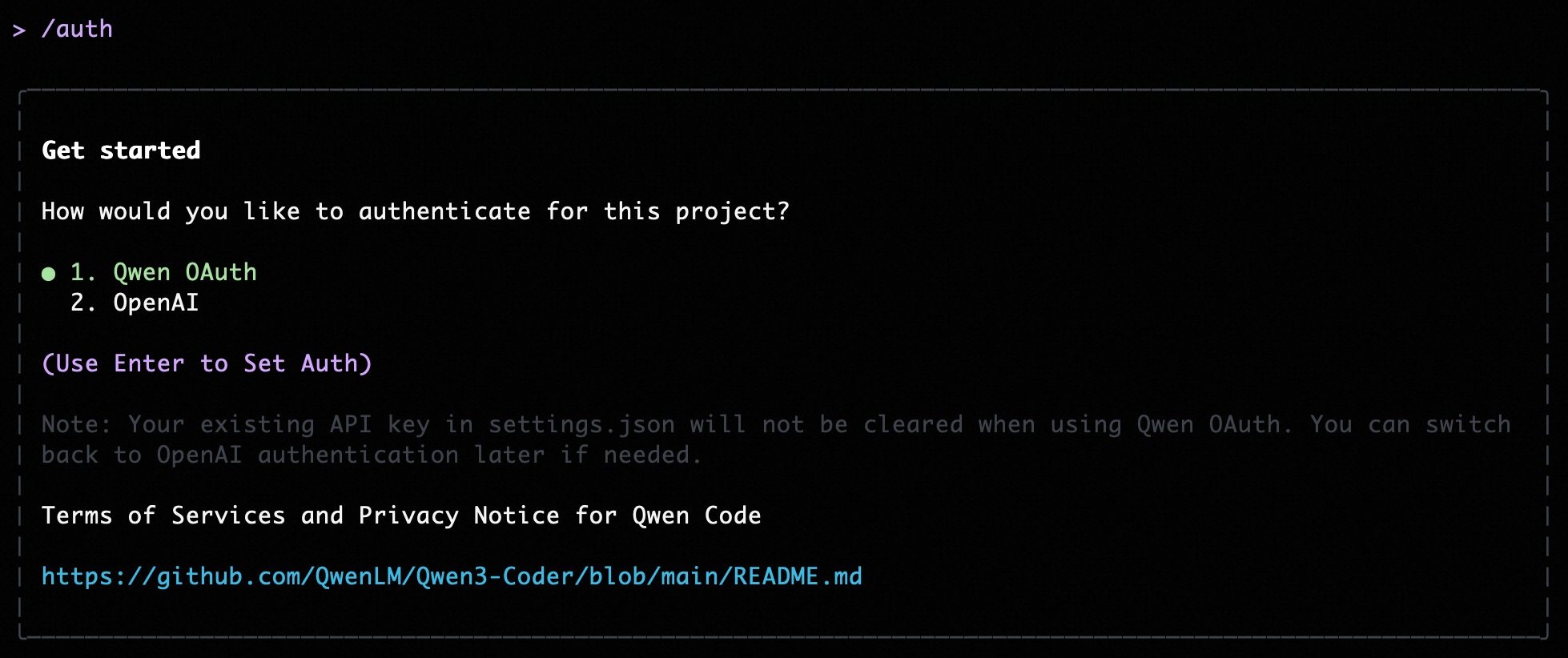

@@ -5,11 +5,13 @@ Qwen Code supports two authentication methods. Pick the one that matches how you
- **Qwen OAuth (recommended)**: sign in with your `qwen.ai` account in a browser.
- **OpenAI-compatible API**: use an API key (OpenAI or any OpenAI-compatible provider / endpoint).

## Option 1: Qwen OAuth (recommended & free) 👍

Use this if you want the simplest setup and you’re using Qwen models.
Use this if you want the simplest setup and you're using Qwen models.

- **How it works**: on first start, Qwen Code opens a browser login page. After you finish, credentials are cached locally so you usually won’t need to log in again.
- **How it works**: on first start, Qwen Code opens a browser login page. After you finish, credentials are cached locally so you usually won't need to log in again.
- **Requirements**: a `qwen.ai` account + internet access (at least for the first login).
- **Benefits**: no API key management, automatic credential refresh.
- **Cost & quota**: free, with a quota of **60 requests/minute** and **2,000 requests/day**.

@@ -24,15 +26,54 @@ qwen

Use this if you want to use OpenAI models or any provider that exposes an OpenAI-compatible API (e.g. OpenAI, Azure OpenAI, OpenRouter, ModelScope, Alibaba Cloud Bailian, or a self-hosted compatible endpoint).

### Quick start (interactive, recommended for local use)
### Recommended: Coding Plan (subscription-based) 🚀

When you choose the OpenAI-compatible option in the CLI, it will prompt you for:
Use this if you want predictable costs with higher usage quotas for the qwen3-coder-plus model.

- **API key**
- **Base URL** (default: `https://api.openai.com/v1`)
- **Model** (default: `gpt-4o`)
> [!IMPORTANT]
>
> Coding Plan is only available for users in China mainland (Beijing region).

> **Note:** the CLI may display the key in plain text for verification. Make sure your terminal is not being recorded or shared.
- **How it works**: subscribe to the Coding Plan with a fixed monthly fee, then configure Qwen Code to use the dedicated endpoint and your subscription API key.
- **Requirements**: an active Coding Plan subscription from [Alibaba Cloud Bailian](https://bailian.console.aliyun.com/cn-beijing/?tab=globalset#/efm/coding_plan).
- **Benefits**: higher usage quotas, predictable monthly costs, access to latest qwen3-coder-plus model.
- **Cost & quota**: varies by plan (see table below).

#### Coding Plan Pricing & Quotas

| Feature | Lite Basic Plan | Pro Advanced Plan |
| :------------------ | :-------------------- | :-------------------- |
| **Price** | ¥40/month | ¥200/month |
| **5-Hour Limit** | Up to 1,200 requests | Up to 6,000 requests |
| **Weekly Limit** | Up to 9,000 requests | Up to 45,000 requests |
| **Monthly Limit** | Up to 18,000 requests | Up to 90,000 requests |
| **Supported Model** | qwen3-coder-plus | qwen3-coder-plus |

#### Quick Setup for Coding Plan

When you select the OpenAI-compatible option in the CLI, enter these values:

- **API key**: `sk-sp-xxxxx`
- **Base URL**: `https://coding.dashscope.aliyuncs.com/v1`
- **Model**: `qwen3-coder-plus`

> **Note**: Coding Plan API keys have the format `sk-sp-xxxxx`, which is different from standard Alibaba Cloud API keys.

#### Configure via Environment Variables

Set these environment variables to use Coding Plan:

```bash
export OPENAI_API_KEY="your-coding-plan-api-key" # Format: sk-sp-xxxxx
export OPENAI_BASE_URL="https://coding.dashscope.aliyuncs.com/v1"
export OPENAI_MODEL="qwen3-coder-plus"
```

For more details about Coding Plan, including subscription options and troubleshooting, see the [full Coding Plan documentation](https://bailian.console.aliyun.com/cn-beijing/?tab=doc#/doc/?type=model&url=3005961).

### Other OpenAI-compatible Providers

If you are using other providers (OpenAI, Azure, local LLMs, etc.), use the following configuration methods.

### Configure via command-line arguments

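The Coding Plan setup in the hunk above relies on three environment variables and an API key in the `sk-sp-` format. The JavaScript sketch below is a pre-flight check someone might run before launching `qwen`, using the variable names, endpoint, and model from that section. The function `checkCodingPlanEnv` and its messages are hypothetical; Qwen Code may validate these values differently or not at all.

```javascript
// Values documented in the Coding Plan section.
const CODING_PLAN_BASE_URL = "https://coding.dashscope.aliyuncs.com/v1";
const CODING_PLAN_MODEL = "qwen3-coder-plus";

// Hypothetical pre-flight check; returns a list of human-readable problems.
function checkCodingPlanEnv(env) {
  const problems = [];
  const key = env.OPENAI_API_KEY ?? "";
  if (!key) {
    problems.push("OPENAI_API_KEY is not set");
  } else if (!key.startsWith("sk-sp-")) {
    problems.push("OPENAI_API_KEY does not look like a Coding Plan key (sk-sp-...)");
  }
  // Ignore trailing slashes when comparing the base URL.
  const baseUrl = (env.OPENAI_BASE_URL ?? "").replace(/\/+$/, "");
  if (baseUrl !== CODING_PLAN_BASE_URL) {
    problems.push(`OPENAI_BASE_URL should be ${CODING_PLAN_BASE_URL}`);
  }
  if (env.OPENAI_MODEL !== CODING_PLAN_MODEL) {
    problems.push(`OPENAI_MODEL should be ${CODING_PLAN_MODEL}`);
  }
  return problems;
}

// Example: a standard (non-Coding Plan) key with otherwise correct settings.
const problems = checkCodingPlanEnv({
  OPENAI_API_KEY: "sk-1234",
  OPENAI_BASE_URL: "https://coding.dashscope.aliyuncs.com/v1/",
  OPENAI_MODEL: "qwen3-coder-plus",
});
console.log(problems);
```

In a real shell session the same function could be called as `checkCodingPlanEnv(process.env)`.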

@@ -275,7 +275,7 @@ If you are experiencing performance issues with file searching (e.g., with `@` c
| `tools.truncateToolOutputThreshold` | number | Truncate tool output if it is larger than this many characters. Applies to Shell, Grep, Glob, ReadFile and ReadManyFiles tools. | `25000` | Requires restart: Yes |
| `tools.truncateToolOutputLines` | number | Maximum lines or entries kept when truncating tool output. Applies to Shell, Grep, Glob, ReadFile and ReadManyFiles tools. | `1000` | Requires restart: Yes |
| `tools.autoAccept` | boolean | Controls whether the CLI automatically accepts and executes tool calls that are considered safe (e.g., read-only operations) without explicit user confirmation. If set to `true`, the CLI will bypass the confirmation prompt for tools deemed safe. | `false` | |
| `tools.experimental.skills` | boolean | Enable experimental Agent Skills feature | `false` | |
| `tools.experimental.skills` | boolean | Enable experimental Agent Skills feature | `false` | |

#### mcp

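The two truncation settings in the table above combine a character limit (`tools.truncateToolOutputThreshold`, default `25000`) with a cap on the lines or entries kept (`tools.truncateToolOutputLines`, default `1000`). The table does not say exactly how output is cut, so the JavaScript sketch below assumes one simple policy: act only when the output exceeds the character threshold, then keep the first N lines, cap the result at the threshold, and append a marker. `truncateToolOutput` and the marker text are hypothetical.

```javascript
// Defaults taken from the settings table.
const DEFAULT_THRESHOLD = 25000; // characters
const DEFAULT_MAX_LINES = 1000;

// Hypothetical policy: only truncate output longer than `threshold`
// characters; then keep at most `maxLines` lines and `threshold` characters.
function truncateToolOutput(output, threshold = DEFAULT_THRESHOLD, maxLines = DEFAULT_MAX_LINES) {
  if (output.length <= threshold) {
    return { text: output, truncated: false };
  }
  let kept = output.split("\n").slice(0, maxLines).join("\n");
  if (kept.length > threshold) {
    kept = kept.slice(0, threshold);
  }
  const omitted = output.length - kept.length;
  return {
    text: `${kept}\n[... ${omitted} characters omitted ...]`,
    truncated: true,
  };
}

// 2,000 lines of 20 characters each: 41,999 characters including newlines.
const bigOutput = Array.from({ length: 2000 }, (_, i) => `line ${String(i).padStart(15, "0")}`).join("\n");
const result = truncateToolOutput(bigOutput);
console.log(result.truncated, result.text.split("\n").length);
```

Applying the line cap before the character cap keeps whole lines in the common case, which tends to be easier for a model to read than output cut mid-line.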

@@ -121,6 +121,8 @@ Environment Variables: Commands executed via `!` will set the `QWEN_CODE=1` envi

Save frequently used prompts as shortcut commands to improve work efficiency and ensure consistency.

> **Note:** Custom commands now use Markdown format with optional YAML frontmatter. TOML format is deprecated but still supported for backwards compatibility. When TOML files are detected, an automatic migration prompt will be displayed.

### Quick Overview

| Function | Description | Advantages | Priority | Applicable Scenarios |

@@ -135,14 +137,34 @@ Priority Rules: Project commands > User commands (project command used when name

|

||||

|

||||

#### File Path to Command Name Mapping Table

|

||||

|

||||

| File Location | Generated Command | Example Call |

|

||||

| ---------------------------- | ----------------- | --------------------- |

|

||||

| `~/.qwen/commands/test.toml` | `/test` | `/test Parameter` |

|

||||

| `<project>/git/commit.toml` | `/git:commit` | `/git:commit Message` |

|

||||

| File Location | Generated Command | Example Call |

|

||||

| -------------------------- | ----------------- | --------------------- |

|

||||

| `~/.qwen/commands/test.md` | `/test` | `/test Parameter` |

|

||||

| `<project>/git/commit.md` | `/git:commit` | `/git:commit Message` |

|

||||

|

||||

Naming Rules: Path separator (`/` or `\`) converted to colon (`:`)

### TOML File Format Specification
### Markdown File Format Specification (Recommended)

Custom commands use Markdown files with optional YAML frontmatter:

```markdown
---
description: Optional description (displayed in /help)
---

Your prompt content here.
Use {{args}} for parameter injection.
```

| Field | Required | Description | Example |
| ------------- | -------- | ---------------------------------------- | ------------------------------------------ |
| `description` | Optional | Command description (displayed in /help) | `description: Code analysis tool` |
| Prompt body | Required | Prompt content sent to model | Any Markdown content after the frontmatter |
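The two fields above can be illustrated with a small sketch that separates the optional frontmatter from the prompt body and then substitutes `{{args}}`. This is a hypothetical simplification, not Qwen Code's loader: `parseCommandMarkdown` and `injectArgs` are illustrative names, only flat `key: value` frontmatter lines are read, and a real implementation would use a YAML parser (and may treat `{{args}}` inside `!{...}` differently).

```typescript
// Hypothetical sketch: split optional YAML frontmatter from the prompt body.
// Only flat "key: value" lines are handled; real YAML needs a proper parser.
interface ParsedCommand {
  description?: string;
  prompt: string;
}

function parseCommandMarkdown(source: string): ParsedCommand {
  // Frontmatter must open with "---" on line 1 and close with a later "---" line.
  const match = /^---\r?\n([\s\S]*?)\r?\n---[ \t]*(?:\r?\n|$)([\s\S]*)$/.exec(source);
  if (!match) {
    return { prompt: source.trim() }; // No frontmatter: the whole file is the prompt.
  }
  const header = match[1] ?? "";
  const body = match[2] ?? "";
  const result: ParsedCommand = { prompt: body.trim() };
  for (const line of header.split(/\r?\n/)) {
    const colon = line.indexOf(":");
    if (colon === -1) continue;
    if (line.slice(0, colon).trim() === "description") {
      result.description = line.slice(colon + 1).trim();
    }
  }
  return result;
}

// Replace every {{args}} placeholder with the text typed after the command.
function injectArgs(prompt: string, args: string): string {
  return prompt.split("{{args}}").join(args);
}

const cmd = parseCommandMarkdown(
  "---\ndescription: Code analysis tool\n---\n\nAnalyze {{args}} for bugs.\n",
);
console.log(cmd.description); // Code analysis tool
console.log(injectArgs(cmd.prompt, "src/app.ts")); // Analyze src/app.ts for bugs.
```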

### TOML File Format (Deprecated)

> **Deprecated:** TOML format is still supported but will be removed in a future version. Please migrate to Markdown format.
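Migrating by hand amounts to moving the TOML `description` into frontmatter and the TOML `prompt` into the Markdown body. The sketch below shows that mapping under the assumption that the TOML file has already been parsed into those two fields; `tomlCommandToMarkdown` is a hypothetical name and is not the automatic migration that Qwen Code performs.

```typescript
// Hypothetical sketch of a TOML-to-Markdown conversion. Assumes the TOML file
// has already been parsed into its two documented fields.
interface TomlCommand {
  description?: string;
  prompt: string;
}

function tomlCommandToMarkdown(cmd: TomlCommand): string {
  const body = cmd.prompt.trim() + "\n";
  if (!cmd.description) {
    return body; // Frontmatter is optional, so omit it when there is no description.
  }
  // JSON-style double quoting is valid YAML and keeps characters like ":" or "#" safe.
  const description = JSON.stringify(cmd.description);
  return `---\ndescription: ${description}\n---\n\n${body}`;
}

console.log(
  tomlCommandToMarkdown({
    description: "Refactor code into a pure function",
    prompt: "\nPlease analyze the code in the current context.\n",
  }),
);
```

Quoting the description is a defensive choice; the unquoted form used in the examples works as long as the value contains no YAML-significant characters.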

| Field | Required | Description | Example |
| ------------- | -------- | ---------------------------------------- | ------------------------------------------ |
@@ -191,15 +213,19 @@ Naming Rules: Path separator (`/` or `\`) converted to colon (`:`)

Example: Git Commit Message Generation

```
# git/commit.toml
description = "Generate Commit message based on staged changes"
prompt = """
````markdown
---
description: Generate Commit message based on staged changes
---

Please generate a Commit message based on the following diff:
diff

```diff
!{git diff --staged}
"""
```
````

````

#### 4. File Content Injection (`@{...}`)

@@ -212,36 +238,38 @@ diff

Example: Code Review Command

```
# review.toml
description = "Code review based on best practices"
prompt = """
```markdown
---
description: Code review based on best practices
---

Review {{args}} against the following reference standards:

@{docs/code-standards.md}
"""
```
````

### Practical Creation Example

#### "Pure Function Refactoring" Command Creation Steps Table

| Operation | Command/Code |
| ----------------------------- | ------------------------------------------- |
| 1. Create directory structure | `mkdir -p ~/.qwen/commands/refactor` |
| 2. Create command file | `touch ~/.qwen/commands/refactor/pure.toml` |
| 3. Edit command content | Refer to the complete code below. |
| 4. Test command | `@file.js` → `/refactor:pure` |
| Operation | Command/Code |
| ----------------------------- | ----------------------------------------- |
| 1. Create directory structure | `mkdir -p ~/.qwen/commands/refactor` |
| 2. Create command file | `touch ~/.qwen/commands/refactor/pure.md` |
| 3. Edit command content | Refer to the complete code below. |
| 4. Test command | `@file.js` → `/refactor:pure` |

```# ~/.qwen/commands/refactor/pure.toml
description = "Refactor code into a pure function"
prompt = """
Please analyze the code in the current context and refactor it into a pure function.
Requirements:
1. Provide refactored code
2. Explain the key changes and how the pure-function properties are achieved
3. Keep the behavior unchanged
"""
```markdown
---
description: Refactor code into a pure function
---

Please analyze the code in the current context and refactor it into a pure function.
Requirements:

1. Provide refactored code
2. Explain the key changes and how the pure-function properties are achieved
3. Keep the behavior unchanged
```

### Custom Command Best Practices Summary

@@ -157,6 +157,18 @@ When `--experimental-skills` is enabled, Qwen Code discovers Skills from:

- Personal Skills: `~/.qwen/skills/`
- Project Skills: `.qwen/skills/`
- Extension Skills: Skills provided by installed extensions

### Extension Skills

Extensions can provide custom skills that become available when the extension is enabled. These skills are stored in the extension's `skills/` directory and follow the same format as personal and project skills.

Extension skills are automatically discovered and loaded when:

- The extension is installed and enabled
- The `--experimental-skills` flag is enabled

To see which extensions provide skills, check the extension's `qwen-extension.json` file for a `skills` field.

To view available Skills, ask Qwen Code directly:

@@ -6,11 +6,11 @@ Subagents are specialized AI assistants that handle specific types of tasks with

Subagents are independent AI assistants that:

- **Specialize in specific tasks** - Each Subagent is configured with a focused system prompt for particular types of work
- **Have separate context** - They maintain their own conversation history, separate from your main chat
- **Use controlled tools** - You can configure which tools each Subagent has access to
- **Work autonomously** - Once given a task, they work independently until completion or failure
- **Provide detailed feedback** - You can see their progress, tool usage, and execution statistics in real-time
- **Specialize in specific tasks** - Each Subagent is configured with a focused system prompt for particular types of work
- **Have separate context** - They maintain their own conversation history, separate from your main chat
- **Use controlled tools** - You can configure which tools each Subagent has access to
- **Work autonomously** - Once given a task, they work independently until completion or failure
- **Provide detailed feedback** - You can see their progress, tool usage, and execution statistics in real-time

## Key Benefits

@@ -59,7 +59,7 @@ AI: I'll delegate this to your testing specialist Subagents.

### CLI Commands

Subagents are managed through the `/agents` slash command and its subcommands:
Subagents are managed through the `/agents` slash command and its subcommands:

**Usage:** `/agents create`. Creates a new Subagent through a guided step-by-step wizard.

@@ -67,12 +67,26 @@ Subagents are managed through the `/agents` slash command and its subcommands:

### Storage Locations

Subagents are stored as Markdown files in two locations:
Subagents are stored as Markdown files in multiple locations:

- **Project-level**: `.qwen/agents/` (takes precedence)
- **User-level**: `~/.qwen/agents/` (fallback)
- **Project-level**: `.qwen/agents/` (highest precedence)
- **User-level**: `~/.qwen/agents/` (fallback)
- **Extension-level**: Provided by installed extensions

This allows you to have both project-specific agents and personal agents that work across all projects.
This allows you to have project-specific agents, personal agents that work across all projects, and extension-provided agents that add specialized capabilities.
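The storage precedence can be sketched as a name-keyed merge in which the higher-precedence definition wins. The project-over-user order comes from the list above; ranking extension-provided agents lowest is an assumption, since the list names that level without stating its priority. `resolveAgents` and the types below are hypothetical.

```typescript
// Hypothetical sketch of resolving same-named agents across storage levels.
type AgentLevel = "project" | "user" | "extension";

interface AgentDefinition {
  name: string;
  level: AgentLevel;
  description: string;
}

// Lower number = higher precedence. "extension" being lowest is an assumption.
const PRECEDENCE: Record<AgentLevel, number> = { project: 0, user: 1, extension: 2 };

function resolveAgents(all: AgentDefinition[]): Map<string, AgentDefinition> {
  const resolved = new Map<string, AgentDefinition>();
  for (const agent of all) {
    const existing = resolved.get(agent.name);
    // Keep whichever definition comes from the higher-precedence level.
    if (!existing || PRECEDENCE[agent.level] < PRECEDENCE[existing.level]) {
      resolved.set(agent.name, agent);
    }
  }
  return resolved;
}

const resolved = resolveAgents([
  { name: "tester", level: "user", description: "personal tester" },
  { name: "tester", level: "project", description: "project tester" },
]);
console.log(resolved.get("tester")?.level); // project
```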

### Extension Subagents

Extensions can provide custom subagents that become available when the extension is enabled. These agents are stored in the extension's `agents/` directory and follow the same format as personal and project agents.

Extension subagents:

- Are automatically discovered when the extension is enabled
- Appear in the `/agents manage` dialog under the "Extension Agents" section
- Cannot be edited directly (edit the extension source instead)
- Follow the same configuration format as user-defined agents

To see which extensions provide subagents, check the extension's `qwen-extension.json` file for an `agents` field.

### File Format

@@ -398,7 +412,7 @@ description: Helps with testing, documentation, code review, and deployment
---
```

**Why:** Focused agents produce better results and are easier to maintain.
**Why:** Focused agents produce better results and are easier to maintain.

#### Clear Specialization

@@ -422,7 +436,7 @@ description: Works on frontend development tasks
---
```

**Why:** Specific expertise leads to more targeted and effective assistance.
**Why:** Specific expertise leads to more targeted and effective assistance.

#### Actionable Descriptions

@@ -440,7 +454,7 @@ description: Reviews code for security vulnerabilities, performance issues, and
description: A helpful code reviewer
```

**Why:** Clear descriptions help the main AI choose the right agent for each task.
**Why:** Clear descriptions help the main AI choose the right agent for each task.

### Configuration Best Practices

1132

package-lock.json

generated

File diff suppressed because it is too large

@@ -46,6 +46,7 @@
    "comment-json": "^4.2.5",
    "diff": "^7.0.0",
    "dotenv": "^17.1.0",
    "prompts": "^2.4.2",
    "fzf": "^0.5.2",
    "glob": "^10.5.0",
    "highlight.js": "^11.11.1",
@@ -79,6 +80,7 @@
    "@types/command-exists": "^1.2.3",
    "@types/diff": "^7.0.2",
    "@types/dotenv": "^6.1.1",
    "@types/prompts": "^2.4.9",
    "@types/node": "^20.11.24",
    "@types/react": "^19.1.8",
    "@types/react-dom": "^19.1.6",

@@ -27,10 +27,8 @@ import { Readable, Writable } from 'node:stream';
import type { LoadedSettings } from '../config/settings.js';
import { SettingScope } from '../config/settings.js';
import { z } from 'zod';
import { ExtensionStorage, type Extension } from '../config/extension.js';
import type { CliArgs } from '../config/config.js';
import { loadCliConfig } from '../config/config.js';
import { ExtensionEnablementManager } from '../config/extensions/extensionEnablement.js';

// Import the modular Session class
import { Session } from './session/Session.js';
@@ -38,7 +36,6 @@ import { Session } from './session/Session.js';
export async function runAcpAgent(
  config: Config,
  settings: LoadedSettings,
  extensions: Extension[],
  argv: CliArgs,
) {
  const stdout = Writable.toWeb(process.stdout) as WritableStream;
@@ -51,8 +48,7 @@ export async function runAcpAgent(
  console.debug = console.error;

  new acp.AgentSideConnection(
    (client: acp.Client) =>
      new GeminiAgent(config, settings, extensions, argv, client),
    (client: acp.Client) => new GeminiAgent(config, settings, argv, client),
    stdout,
    stdin,
  );
@@ -65,7 +61,6 @@ class GeminiAgent {
  constructor(
    private config: Config,
    private settings: LoadedSettings,
    private extensions: Extension[],
    private argv: CliArgs,
    private client: acp.Client,
  ) {}
@@ -215,16 +210,7 @@ class GeminiAgent {
      continue: false,
    };

    const config = await loadCliConfig(
      settings,
      this.extensions,
      new ExtensionEnablementManager(
        ExtensionStorage.getUserExtensionsDir(),
        this.argv.extensions,
      ),
      argvForSession,
      cwd,
    );
    const config = await loadCliConfig(settings, argvForSession, cwd);

    await config.initialize();
    return config;

218

packages/cli/src/commands/extensions/consent.ts

Normal file

@@ -0,0 +1,218 @@
import type {
  ExtensionConfig,
  ExtensionRequestOptions,
  SkillConfig,
  SubagentConfig,
} from '@qwen-code/qwen-code-core';
import type { ConfirmationRequest } from '../../ui/types.js';
import chalk from 'chalk';
import { t } from '../../i18n/index.js';

/**
 * Requests consent from the user to perform an action, by reading a Y/n
 * character from stdin.
 *
 * This should not be called from interactive mode as it will break the CLI.
 *
 * @param consentDescription The description of the thing they will be consenting to.
 * @returns boolean, whether they consented or not.
 */
export async function requestConsentNonInteractive(
  consentDescription: string,
): Promise<boolean> {
  console.info(consentDescription);
  const result = await promptForConsentNonInteractive(
    t('Do you want to continue? [Y/n]: '),
  );
  return result;
}

/**
 * Requests consent from the user to perform an action, in interactive mode.
 *
 * This should not be called from non-interactive mode as it will not work.
 *
 * @param consentDescription The description of the thing they will be consenting to.
 * @param addExtensionUpdateConfirmationRequest A function to actually add a prompt to the UI.
 * @returns boolean, whether they consented or not.
 */
export async function requestConsentInteractive(
  consentDescription: string,
  addExtensionUpdateConfirmationRequest: (value: ConfirmationRequest) => void,
): Promise<boolean> {
  return promptForConsentInteractive(
    consentDescription + '\n\n' + t('Do you want to continue?'),
    addExtensionUpdateConfirmationRequest,
  );
}

/**
 * Asks the user a prompt and awaits a y/n response on stdin.
 *
 * This should not be called from interactive mode as it will break the CLI.
 *
 * @param prompt A yes/no prompt to ask the user
 * @returns Whether or not the user answers 'y' (yes). Defaults to 'yes' on enter.
 */
async function promptForConsentNonInteractive(
  prompt: string,
): Promise<boolean> {
  const readline = await import('node:readline');
  const rl = readline.createInterface({
    input: process.stdin,
    output: process.stdout,
  });

  return new Promise((resolve) => {
    rl.question(prompt, (answer) => {
      rl.close();
      resolve(['y', ''].includes(answer.trim().toLowerCase()));
    });
  });
}

/**
 * Asks the user an interactive yes/no prompt.
 *
 * This should not be called from non-interactive mode as it will break the CLI.
 *
 * @param prompt A markdown prompt to ask the user
 * @param addExtensionUpdateConfirmationRequest Function to update the UI state with the confirmation request.
 * @returns Whether or not the user answers yes.
 */
async function promptForConsentInteractive(
  prompt: string,
  addExtensionUpdateConfirmationRequest: (value: ConfirmationRequest) => void,
): Promise<boolean> {
  return new Promise<boolean>((resolve) => {
    addExtensionUpdateConfirmationRequest({
      prompt,
      onConfirm: (resolvedConfirmed) => {
        resolve(resolvedConfirmed);
      },
    });
  });
}

/**
 * Builds a consent string for installing an extension based on its
 * extensionConfig.
 */
export function extensionConsentString(
  extensionConfig: ExtensionConfig,
  commands: string[] = [],
  skills: SkillConfig[] = [],
  subagents: SubagentConfig[] = [],
): string {
  const output: string[] = [];
  const mcpServerEntries = Object.entries(extensionConfig.mcpServers || {});
  output.push(
    t('Installing extension "{{name}}".', { name: extensionConfig.name }),
  );
  output.push(
    t(
      '**Extensions may introduce unexpected behavior. Ensure you have investigated the extension source and trust the author.**',
    ),
  );

  if (mcpServerEntries.length) {
    output.push(t('This extension will run the following MCP servers:'));
    for (const [key, mcpServer] of mcpServerEntries) {
      const isLocal = !!mcpServer.command;
      const source =
        mcpServer.httpUrl ??
        `${mcpServer.command || ''}${mcpServer.args ? ' ' + mcpServer.args.join(' ') : ''}`;
      output.push(
        ` * ${key} (${isLocal ? t('local') : t('remote')}): ${source}`,
      );
    }
  }
  if (commands && commands.length > 0) {
    output.push(
      t('This extension will add the following commands: {{commands}}.', {
        commands: commands.join(', '),
      }),
    );
  }
  if (extensionConfig.contextFileName) {
    const fileName = Array.isArray(extensionConfig.contextFileName)
      ? extensionConfig.contextFileName.join(', ')
      : extensionConfig.contextFileName;
    output.push(
      t(
        'This extension will append info to your QWEN.md context using {{fileName}}',
        { fileName },
      ),
    );
  }
  if (extensionConfig.excludeTools) {
    output.push(
      t('This extension will exclude the following core tools: {{tools}}', {
        tools: extensionConfig.excludeTools.join(', '),
      }),
    );
  }
  if (skills.length > 0) {
    output.push(t('This extension will install the following skills:'));
    for (const skill of skills) {
      output.push(` * ${chalk.bold(skill.name)}: ${skill.description}`);
    }
  }
  if (subagents.length > 0) {
    output.push(t('This extension will install the following subagents:'));
    for (const subagent of subagents) {
      output.push(` * ${chalk.bold(subagent.name)}: ${subagent.description}`);
    }
  }
  return output.join('\n');
}

/**
 * Requests consent from the user to install an extension (extensionConfig), if
 * there is any difference between the consent string for `extensionConfig` and
 * `previousExtensionConfig`.
 *
 * Always requests consent if previousExtensionConfig is null.
 *
 * Throws if the user does not consent.
 */
export const requestConsentOrFail = async (
  requestConsent: (consent: string) => Promise<boolean>,
  options?: ExtensionRequestOptions,
) => {
  if (!options) return;
  const {
    extensionConfig,
    commands = [],
    skills = [],
    subagents = [],
    previousExtensionConfig,
    previousCommands = [],
    previousSkills = [],
    previousSubagents = [],
  } = options;
  const extensionConsent = extensionConsentString(
    extensionConfig,
    commands,
    skills,
    subagents,
  );
  if (previousExtensionConfig) {
    const previousExtensionConsent = extensionConsentString(
      previousExtensionConfig,
      previousCommands,
      previousSkills,
      previousSubagents,
    );
    if (previousExtensionConsent === extensionConsent) {
      return;
    }
  }
  if (!(await requestConsent(extensionConsent))) {
    throw new Error(
      t('Installation cancelled for "{{name}}".', {
        name: extensionConfig.name,
      }),
    );
  }
};
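The key idea in `requestConsentOrFail` is that consent is keyed to the rendered summary text: on an update, the user is prompted again only if the summary for the new version differs from the summary for the previous one. The self-contained sketch below reproduces that idea with hypothetical stand-ins (`Summary`, `summarize`, `consentOrFail`) in place of `ExtensionConfig`, `extensionConsentString`, and `requestConsentOrFail`; it omits i18n, chalk, skills, and subagents.

```typescript
// Hypothetical, simplified stand-ins for ExtensionConfig / extensionConsentString.
interface Summary {
  name: string;
  mcpServers: string[];
  commands: string[];
}

function summarize(s: Summary): string {
  const lines = [`Installing extension "${s.name}".`];
  if (s.mcpServers.length > 0) lines.push(`MCP servers: ${s.mcpServers.join(", ")}`);
  if (s.commands.length > 0) lines.push(`Commands: ${s.commands.join(", ")}`);
  return lines.join("\n");
}

// Mirrors the control flow of requestConsentOrFail: skip the prompt when the
// summary is unchanged, otherwise ask and throw if the user declines.
async function consentOrFail(
  ask: (text: string) => Promise<boolean>,
  next: Summary,
  previous?: Summary,
): Promise<void> {
  const text = summarize(next);
  if (previous && summarize(previous) === text) return; // Nothing new to approve.
  if (!(await ask(text))) {
    throw new Error(`Installation cancelled for "${next.name}".`);
  }
}
```

Comparing rendered strings rather than raw configs means any change the user would actually see (a new MCP server, command, skill, or subagent) triggers a fresh prompt, while changes outside the summary do not. Note also that the non-interactive prompt in this file accepts only `y` or an empty answer, so typing `yes` is treated as a refusal.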

@@ -5,21 +5,22 @@
 */

import { type CommandModule } from 'yargs';
import { disableExtension } from '../../config/extension.js';
import { SettingScope } from '../../config/settings.js';
import { getErrorMessage } from '../../utils/errors.js';
import { getExtensionManager } from './utils.js';

interface DisableArgs {
  name: string;
  scope?: string;
}

export function handleDisable(args: DisableArgs) {
export async function handleDisable(args: DisableArgs) {
  const extensionManager = await getExtensionManager();
  try {
    if (args.scope?.toLowerCase() === 'workspace') {
      disableExtension(args.name, SettingScope.Workspace);
      extensionManager.disableExtension(args.name, SettingScope.Workspace);
    } else {
      disableExtension(args.name, SettingScope.User);
      extensionManager.disableExtension(args.name, SettingScope.User);
    }
    console.log(
      `Extension "${args.name}" successfully disabled for scope "${args.scope}".`,
@@ -61,8 +62,8 @@ export const disableCommand: CommandModule = {
      }
      return true;
    }),
  handler: (argv) => {
    handleDisable({
  handler: async (argv) => {
    await handleDisable({
      name: argv['name'] as string,
      scope: argv['scope'] as string,
    });

@@ -6,20 +6,22 @@

import { type CommandModule } from 'yargs';
import { FatalConfigError, getErrorMessage } from '@qwen-code/qwen-code-core';
import { enableExtension } from '../../config/extension.js';
import { SettingScope } from '../../config/settings.js';
import { getExtensionManager } from './utils.js';

interface EnableArgs {
  name: string;
  scope?: string;
}

export function handleEnable(args: EnableArgs) {
export async function handleEnable(args: EnableArgs) {
  const extensionManager = await getExtensionManager();

  try {
    if (args.scope?.toLowerCase() === 'workspace') {
      enableExtension(args.name, SettingScope.Workspace);
      extensionManager.enableExtension(args.name, SettingScope.Workspace);
    } else {
      enableExtension(args.name, SettingScope.User);
      extensionManager.enableExtension(args.name, SettingScope.User);
    }
    if (args.scope) {
      console.log(
@@ -66,8 +68,8 @@ export const enableCommand: CommandModule = {
      }
      return true;
    }),
  handler: (argv) => {
    handleEnable({
  handler: async (argv) => {
    await handleEnable({
      name: argv['name'] as string,
      scope: argv['scope'] as string,
    });

@@ -1,6 +1,3 @@
prompt = """
Please summarize the findings for the pattern `{{args}}`.

Search Results:
!{grep -r {{args}} .}
"""
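In the prompt above, `{{args}}` is placed inside a `!{...}` shell command, so a pattern containing spaces, quotes, or `;` would change the command if substituted verbatim. One common safeguard is POSIX single-quote escaping, sketched below. Whether and how Qwen Code escapes these arguments is not shown here; `shellQuote` and `buildShellCommand` are hypothetical illustrations of the technique.

```typescript
// POSIX single-quote escaping: wrap the value in single quotes and turn each
// embedded ' into '\'' (close quote, escaped quote, reopen quote).
function shellQuote(value: string): string {
  return "'" + value.replace(/'/g, "'\\''") + "'";
}

// Hypothetical helper: substitute a quoted {{args}} into a !{...} command template.
function buildShellCommand(template: string, args: string): string {
  return template.split("{{args}}").join(shellQuote(args));
}

console.log(buildShellCommand("grep -r {{args}} .", "it's here")); // grep -r 'it'\''s here' .
```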

@@ -5,58 +5,67 @@
 */

import type { CommandModule } from 'yargs';

import {
  installExtension,
  requestConsentNonInteractive,
} from '../../config/extension.js';
import type { ExtensionInstallMetadata } from '@qwen-code/qwen-code-core';
  ExtensionManager,
  parseInstallSource,
} from '@qwen-code/qwen-code-core';
import { getErrorMessage } from '../../utils/errors.js';
import { stat } from 'node:fs/promises';
import { isWorkspaceTrusted } from '../../config/trustedFolders.js';
import { loadSettings } from '../../config/settings.js';
import {
  requestConsentOrFail,
  requestConsentNonInteractive,
} from './consent.js';

interface InstallArgs {
  source: string;
  ref?: string;
  autoUpdate?: boolean;
  allowPreRelease?: boolean;
  consent?: boolean;
}

export async function handleInstall(args: InstallArgs) {
  try {
    let installMetadata: ExtensionInstallMetadata;
    const { source } = args;
    const installMetadata = await parseInstallSource(args.source);

    if (
      source.startsWith('http://') ||
      source.startsWith('https://') ||
      source.startsWith('git@') ||
      source.startsWith('sso://')
      installMetadata.type !== 'git' &&
      installMetadata.type !== 'github-release'
    ) {
      installMetadata = {
        source,
        type: 'git',
        ref: args.ref,
        autoUpdate: args.autoUpdate,
      };
    } else {
      if (args.ref || args.autoUpdate) {
        throw new Error(
          '--ref and --auto-update are not applicable for local extensions.',
          '--ref and --auto-update are not applicable for marketplace extensions.',
        );
      }
      try {
        await stat(source);
        installMetadata = {
          source,
          type: 'local',
        };
      } catch {
        throw new Error('Install source not found.');
      }
    }

    const name = await installExtension(
      installMetadata,
      requestConsentNonInteractive,