mirror of

https://github.com/QwenLM/qwen-code.git

synced 2026-01-19 07:16:19 +00:00

Compare commits

46 Commits

release/v0

...

main

| Author | SHA1 | Date | |
|---|---|---|---|
| | ec2aa6d86d | | |
| | 66ad936c31 | | |
| | 8b5f198e3c | | |
| | 79cce84280 | | |
| | b9207c5884 | | |
| | baf848a4d9 | | |
| | d0104dc487 | | |
| | 531062aeaf | | |
| | 0681c71894 | | |
| | 155c4b9728 | | |
| | 57ca2823b3 | | |
| | 620341eeae | | |
| | 2852f48a4a | | |
| | c6c33233c5 | | |
| | 106b69e5c0 | | |
| | 6afe0f8c29 | | |
| | 0b3be1a82c | | |
| | 8af43e3ac3 | | |
| | 04a11aa111 | | |
| | d5ad3aebe4 | | |
| | 98c680642f | | |
| | e4efd3a15d | | |
| | 886f914fb3 | | |
| | 90365af2f8 | | |
| | cbef5ffd89 | | |
| | 63406b4ba4 | | |
| | 52db3a766d | | |
| | 5e80e80387 | | |
| | 985f65f8fa | | |
| | 9b9c5fadd5 | | |
| | 372c67cad4 | | |
| | af3864b5de | | |
| | 1e3791f30a | | |
| | 9bf626d051 | | |
| | 6f33d92b2c | | |
| | a35af6550f | | |
| | d6607e134e | | |
| | 9024a41723 | | |
| | bde056b62e | | |
| | ff5ea3c6d7 | | |
| | 0faaac8fa4 | | |
| | b923acd278 | | |
| | c2e62b9122 | | |
| | f54b62cda3 | | |
| | 9521987a09 | | |
| | 97497457a8 | | |

@@ -201,6 +201,11 @@ If you encounter issues, check the [troubleshooting guide](https://qwenlm.github

|

||||

|

||||

To report a bug from within the CLI, run `/bug` and include a short title and repro steps.

|

||||

|

||||

## Connect with Us

|

||||

|

||||

- Discord: https://discord.gg/ycKBjdNd

|

||||

- Dingtalk: https://qr.dingtalk.com/action/joingroup?code=v1,k1,+FX6Gf/ZDlTahTIRi8AEQhIaBlqykA0j+eBKKdhLeAE=&_dt_no_comment=1&origin=1

|

||||

|

||||

## Acknowledgments

|

||||

|

||||

This project is based on [Google Gemini CLI](https://github.com/google-gemini/gemini-cli). We acknowledge and appreciate the excellent work of the Gemini CLI team. Our main contribution focuses on parser-level adaptations to better support Qwen-Coder models.

|

||||

|

||||

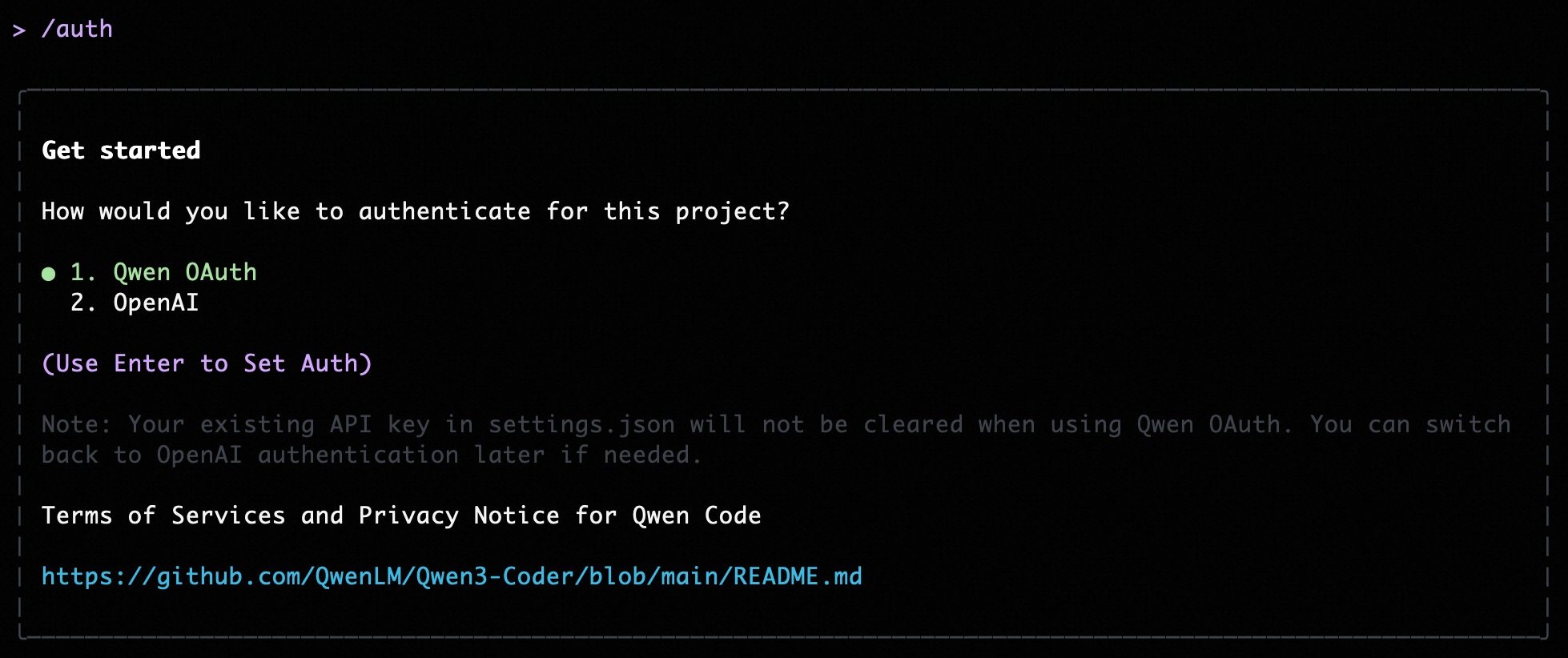

@@ -5,11 +5,13 @@ Qwen Code supports two authentication methods. Pick the one that matches how you

|

||||

- **Qwen OAuth (recommended)**: sign in with your `qwen.ai` account in a browser.

|

||||

- **OpenAI-compatible API**: use an API key (OpenAI or any OpenAI-compatible provider / endpoint).

|

||||

|

||||

|

||||

|

||||

## Option 1: Qwen OAuth (recommended & free) 👍

|

||||

|

||||

Use this if you want the simplest setup and you’re using Qwen models.

|

||||

Use this if you want the simplest setup and you're using Qwen models.

|

||||

|

||||

- **How it works**: on first start, Qwen Code opens a browser login page. After you finish, credentials are cached locally so you usually won’t need to log in again.

|

||||

- **How it works**: on first start, Qwen Code opens a browser login page. After you finish, credentials are cached locally so you usually won't need to log in again.

|

||||

- **Requirements**: a `qwen.ai` account + internet access (at least for the first login).

|

||||

- **Benefits**: no API key management, automatic credential refresh.

|

||||

- **Cost & quota**: free, with a quota of **60 requests/minute** and **2,000 requests/day**.

|

||||

@@ -24,15 +26,54 @@ qwen

|

||||

|

||||

Use this if you want to use OpenAI models or any provider that exposes an OpenAI-compatible API (e.g. OpenAI, Azure OpenAI, OpenRouter, ModelScope, Alibaba Cloud Bailian, or a self-hosted compatible endpoint).

|

||||

|

||||

### Quick start (interactive, recommended for local use)

|

||||

### Recommended: Coding Plan (subscription-based) 🚀

|

||||

|

||||

When you choose the OpenAI-compatible option in the CLI, it will prompt you for:

|

||||

Use this if you want predictable costs with higher usage quotas for the qwen3-coder-plus model.

|

||||

|

||||

- **API key**

|

||||

- **Base URL** (default: `https://api.openai.com/v1`)

|

||||

- **Model** (default: `gpt-4o`)

|

||||

> [!IMPORTANT]

|

||||

>

|

||||

> Coding Plan is only available for users in China mainland (Beijing region).

|

||||

|

||||

> **Note:** the CLI may display the key in plain text for verification. Make sure your terminal is not being recorded or shared.

|

||||

- **How it works**: subscribe to the Coding Plan with a fixed monthly fee, then configure Qwen Code to use the dedicated endpoint and your subscription API key.

|

||||

- **Requirements**: an active Coding Plan subscription from [Alibaba Cloud Bailian](https://bailian.console.aliyun.com/cn-beijing/?tab=globalset#/efm/coding_plan).

|

||||

- **Benefits**: higher usage quotas, predictable monthly costs, access to latest qwen3-coder-plus model.

|

||||

- **Cost & quota**: varies by plan (see table below).

|

||||

|

||||

#### Coding Plan Pricing & Quotas

|

||||

|

||||

| Feature | Lite Basic Plan | Pro Advanced Plan |

|

||||

| :------------------ | :-------------------- | :-------------------- |

|

||||

| **Price** | ¥40/month | ¥200/month |

|

||||

| **5-Hour Limit** | Up to 1,200 requests | Up to 6,000 requests |

|

||||

| **Weekly Limit** | Up to 9,000 requests | Up to 45,000 requests |

|

||||

| **Monthly Limit** | Up to 18,000 requests | Up to 90,000 requests |

|

||||

| **Supported Model** | qwen3-coder-plus | qwen3-coder-plus |

|

||||

|

||||

#### Quick Setup for Coding Plan

|

||||

|

||||

When you select the OpenAI-compatible option in the CLI, enter these values:

|

||||

|

||||

- **API key**: `sk-sp-xxxxx`

|

||||

- **Base URL**: `https://coding.dashscope.aliyuncs.com/v1`

|

||||

- **Model**: `qwen3-coder-plus`

|

||||

|

||||

> **Note**: Coding Plan API keys have the format `sk-sp-xxxxx`, which is different from standard Alibaba Cloud API keys.

|

||||

|

||||

#### Configure via Environment Variables

|

||||

|

||||

Set these environment variables to use Coding Plan:

|

||||

|

||||

```bash

|

||||

export OPENAI_API_KEY="your-coding-plan-api-key" # Format: sk-sp-xxxxx

|

||||

export OPENAI_BASE_URL="https://coding.dashscope.aliyuncs.com/v1"

|

||||

export OPENAI_MODEL="qwen3-coder-plus"

|

||||

```

|

||||

|

||||

For more details about Coding Plan, including subscription options and troubleshooting, see the [full Coding Plan documentation](https://bailian.console.aliyun.com/cn-beijing/?tab=doc#/doc/?type=model&url=3005961).

|

||||

|

||||

### Other OpenAI-compatible Providers

|

||||

|

||||

If you are using other providers (OpenAI, Azure, local LLMs, etc.), use the following configuration methods.

|

||||

|

||||

### Configure via command-line arguments

|

||||

|

||||

|

||||

@@ -241,7 +241,6 @@ Per-field precedence for `generationConfig`:

|

||||

| ------------------------------------------------- | -------------------------- | --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | ----------- |

|

||||

| `context.fileName` | string or array of strings | The name of the context file(s). | `undefined` |

|

||||

| `context.importFormat` | string | The format to use when importing memory. | `undefined` |

|

||||

| `context.discoveryMaxDirs` | number | Maximum number of directories to search for memory. | `200` |

|

||||

| `context.includeDirectories` | array | Additional directories to include in the workspace context. Specifies an array of additional absolute or relative paths to include in the workspace context. Missing directories will be skipped with a warning by default. Paths can use `~` to refer to the user's home directory. This setting can be combined with the `--include-directories` command-line flag. | `[]` |

|

||||

| `context.loadFromIncludeDirectories` | boolean | Controls the behavior of the `/memory refresh` command. If set to `true`, `QWEN.md` files should be loaded from all directories that are added. If set to `false`, `QWEN.md` should only be loaded from the current directory. | `false` |

|

||||

| `context.fileFiltering.respectGitIgnore` | boolean | Respect .gitignore files when searching. | `true` |

|

||||

@@ -275,6 +274,7 @@ If you are experiencing performance issues with file searching (e.g., with `@` c

|

||||

| `tools.truncateToolOutputThreshold` | number | Truncate tool output if it is larger than this many characters. Applies to Shell, Grep, Glob, ReadFile and ReadManyFiles tools. | `25000` | Requires restart: Yes |

|

||||

| `tools.truncateToolOutputLines` | number | Maximum lines or entries kept when truncating tool output. Applies to Shell, Grep, Glob, ReadFile and ReadManyFiles tools. | `1000` | Requires restart: Yes |

|

||||

| `tools.autoAccept` | boolean | Controls whether the CLI automatically accepts and executes tool calls that are considered safe (e.g., read-only operations) without explicit user confirmation. If set to `true`, the CLI will bypass the confirmation prompt for tools deemed safe. | `false` | |

|

||||

| `tools.experimental.skills` | boolean | Enable experimental Agent Skills feature | `false` | |

|

||||

|

||||

#### mcp

|

||||

|

||||

@@ -480,7 +480,7 @@ Arguments passed directly when running the CLI can override other configurations

|

||||

| `--telemetry-otlp-protocol` | | Sets the OTLP protocol for telemetry (`grpc` or `http`). | | Defaults to `grpc`. See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--telemetry-log-prompts` | | Enables logging of prompts for telemetry. | | See [telemetry](../../developers/development/telemetry) for more information. |

|

||||

| `--checkpointing` | | Enables [checkpointing](../features/checkpointing). | | |

|

||||

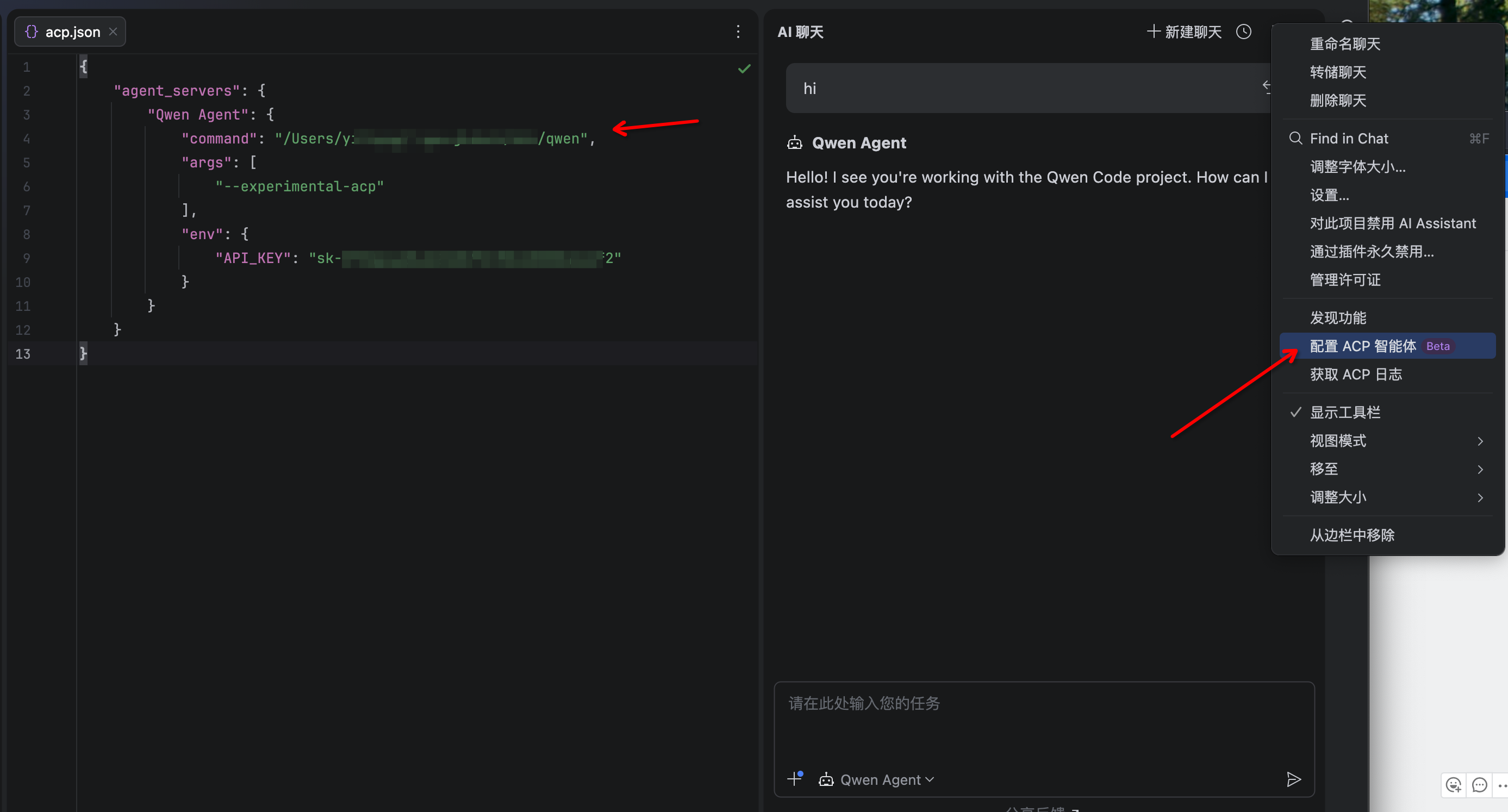

| `--acp` | | Enables ACP mode (Agent Control Protocol). Useful for IDE/editor integrations like [Zed](../integration-zed). | | Stable. Replaces the deprecated `--experimental-acp` flag. |

|

||||

| `--acp` | | Enables ACP mode (Agent Client Protocol). Useful for IDE/editor integrations like [Zed](../integration-zed). | | Stable. Replaces the deprecated `--experimental-acp` flag. |

|

||||

| `--experimental-skills` | | Enables experimental [Agent Skills](../features/skills) (registers the `skill` tool and loads Skills from `.qwen/skills/` and `~/.qwen/skills/`). | | Experimental. |

|

||||

| `--extensions` | `-e` | Specifies a list of extensions to use for the session. | Extension names | If not provided, all available extensions are used. Use the special term `qwen -e none` to disable all extensions. Example: `qwen -e my-extension -e my-other-extension` |

|

||||

| `--list-extensions` | `-l` | Lists all available extensions and exits. | | |

|

||||

@@ -529,16 +529,13 @@ Here's a conceptual example of what a context file at the root of a TypeScript p

|

||||

|

||||

This example demonstrates how you can provide general project context, specific coding conventions, and even notes about particular files or components. The more relevant and precise your context files are, the better the AI can assist you. Project-specific context files are highly encouraged to establish conventions and context.

|

||||

|

||||

- **Hierarchical Loading and Precedence:** The CLI implements a sophisticated hierarchical memory system by loading context files (e.g., `QWEN.md`) from several locations. Content from files lower in this list (more specific) typically overrides or supplements content from files higher up (more general). The exact concatenation order and final context can be inspected using the `/memory show` command. The typical loading order is:

|

||||

- **Hierarchical Loading and Precedence:** The CLI implements a hierarchical memory system by loading context files (e.g., `QWEN.md`) from several locations. Content from files lower in this list (more specific) typically overrides or supplements content from files higher up (more general). The exact concatenation order and final context can be inspected using the `/memory show` command. The typical loading order is:

|

||||

1. **Global Context File:**

|

||||

- Location: `~/.qwen/<configured-context-filename>` (e.g., `~/.qwen/QWEN.md` in your user home directory).

|

||||

- Scope: Provides default instructions for all your projects.

|

||||

2. **Project Root & Ancestors Context Files:**

|

||||

- Location: The CLI searches for the configured context file in the current working directory and then in each parent directory up to either the project root (identified by a `.git` folder) or your home directory.

|

||||

- Scope: Provides context relevant to the entire project or a significant portion of it.

|

||||

3. **Sub-directory Context Files (Contextual/Local):**

|

||||

- Location: The CLI also scans for the configured context file in subdirectories _below_ the current working directory (respecting common ignore patterns like `node_modules`, `.git`, etc.). The breadth of this search is limited to 200 directories by default, but can be configured with the `context.discoveryMaxDirs` setting in your `settings.json` file.

|

||||

- Scope: Allows for highly specific instructions relevant to a particular component, module, or subsection of your project.

|

||||

- **Concatenation & UI Indication:** The contents of all found context files are concatenated (with separators indicating their origin and path) and provided as part of the system prompt. The CLI footer displays the count of loaded context files, giving you a quick visual cue about the active instructional context.

|

||||

- **Importing Content:** You can modularize your context files by importing other Markdown files using the `@path/to/file.md` syntax. For more details, see the [Memory Import Processor documentation](../configuration/memory).

|

||||

- **Commands for Memory Management:**

|

||||

|

||||
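The loading order described in this hunk can be sketched as a pure function over path strings. This is only an illustration under stated assumptions: `candidateContextFiles` and its helpers are hypothetical names, not functions from the repository; the general-to-specific ordering of ancestor files is assumed from the "more specific overrides more general" wording; and the sub-directory scan (step 3) is left out because it needs real filesystem access.

```typescript
// Hypothetical helper: returns the parent of a POSIX-style absolute path.
function parentDir(dir: string): string {
  const idx = dir.lastIndexOf('/');
  return idx <= 0 ? '/' : dir.slice(0, idx);
}

// Hypothetical helper: joins a directory and a file name without doubling "/".
function joinPath(dir: string, name: string): string {
  return dir === '/' ? `/${name}` : `${dir}/${name}`;
}

// Sketch: lists candidate context files from most general to most specific.
// 1. the global file under ~/.qwen/
// 2. ancestor directories, from the project root (or home) down to cwd
function candidateContextFiles(
  homeDir: string,
  projectRoot: string,
  cwd: string,
  fileName: string = 'QWEN.md',
): string[] {
  const globalFile = joinPath(joinPath(homeDir, '.qwen'), fileName);
  const ancestors: string[] = [];
  let dir = cwd;
  while (true) {
    // Prepend so that directories closer to the root come first.
    ancestors.unshift(joinPath(dir, fileName));
    if (dir === projectRoot || dir === homeDir) break;
    const parent = parentDir(dir);
    if (parent === dir) break; // reached the filesystem root
    dir = parent;
  }
  return [globalFile, ...ancestors];
}

const files = candidateContextFiles('/home/u', '/home/u/proj', '/home/u/proj/src/app');
console.log(files.join('\n'));
```

In the CLI itself, the `/memory show` command remains the way to inspect the actual concatenation order.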

@@ -11,12 +11,29 @@ This guide shows you how to create, use, and manage Agent Skills in **Qwen Code*

|

||||

## Prerequisites

|

||||

|

||||

- Qwen Code (recent version)

|

||||

- Run with the experimental flag enabled:

|

||||

|

||||

## How to enable

|

||||

|

||||

### Via CLI flag

|

||||

|

||||

```bash

|

||||

qwen --experimental-skills

|

||||

```

|

||||

|

||||

### Via settings.json

|

||||

|

||||

Add to your `~/.qwen/settings.json` or project's `.qwen/settings.json`:

|

||||

|

||||

```json

|

||||

{

|

||||

"tools": {

|

||||

"experimental": {

|

||||

"skills": true

|

||||

}

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

- Basic familiarity with Qwen Code ([Quickstart](../quickstart.md))

|

||||

|

||||

## What are Agent Skills?

|

||||

|

||||

Binary file not shown.

|

Before Width: | Height: | Size: 36 KiB |

@@ -1,11 +1,11 @@

|

||||

# JetBrains IDEs

|

||||

|

||||

> JetBrains IDEs provide native support for AI coding assistants through the Agent Control Protocol (ACP). This integration allows you to use Qwen Code directly within your JetBrains IDE with real-time code suggestions.

|

||||

> JetBrains IDEs provide native support for AI coding assistants through the Agent Client Protocol (ACP). This integration allows you to use Qwen Code directly within your JetBrains IDE with real-time code suggestions.

|

||||

|

||||

### Features

|

||||

|

||||

- **Native agent experience**: Integrated AI assistant panel within your JetBrains IDE

|

||||

- **Agent Control Protocol**: Full support for ACP enabling advanced IDE interactions

|

||||

- **Agent Client Protocol**: Full support for ACP enabling advanced IDE interactions

|

||||

- **Symbol management**: #-mention files to add them to the conversation context

|

||||

- **Conversation history**: Access to past conversations within the IDE

|

||||

|

||||

@@ -40,7 +40,7 @@

|

||||

|

||||

4. The Qwen Code agent should now be available in the AI Assistant panel

|

||||

|

||||

|

||||

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

|

||||

@@ -22,13 +22,7 @@

|

||||

|

||||

### Installation

|

||||

|

||||

1. Install Qwen Code CLI:

|

||||

|

||||

```bash

|

||||

npm install -g qwen-code

|

||||

```

|

||||

|

||||

2. Download and install the extension from the [Visual Studio Code Extension Marketplace](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion).

|

||||

Download and install the extension from the [Visual Studio Code Extension Marketplace](https://marketplace.visualstudio.com/items?itemName=qwenlm.qwen-code-vscode-ide-companion).

|

||||

|

||||

## Troubleshooting

|

||||

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

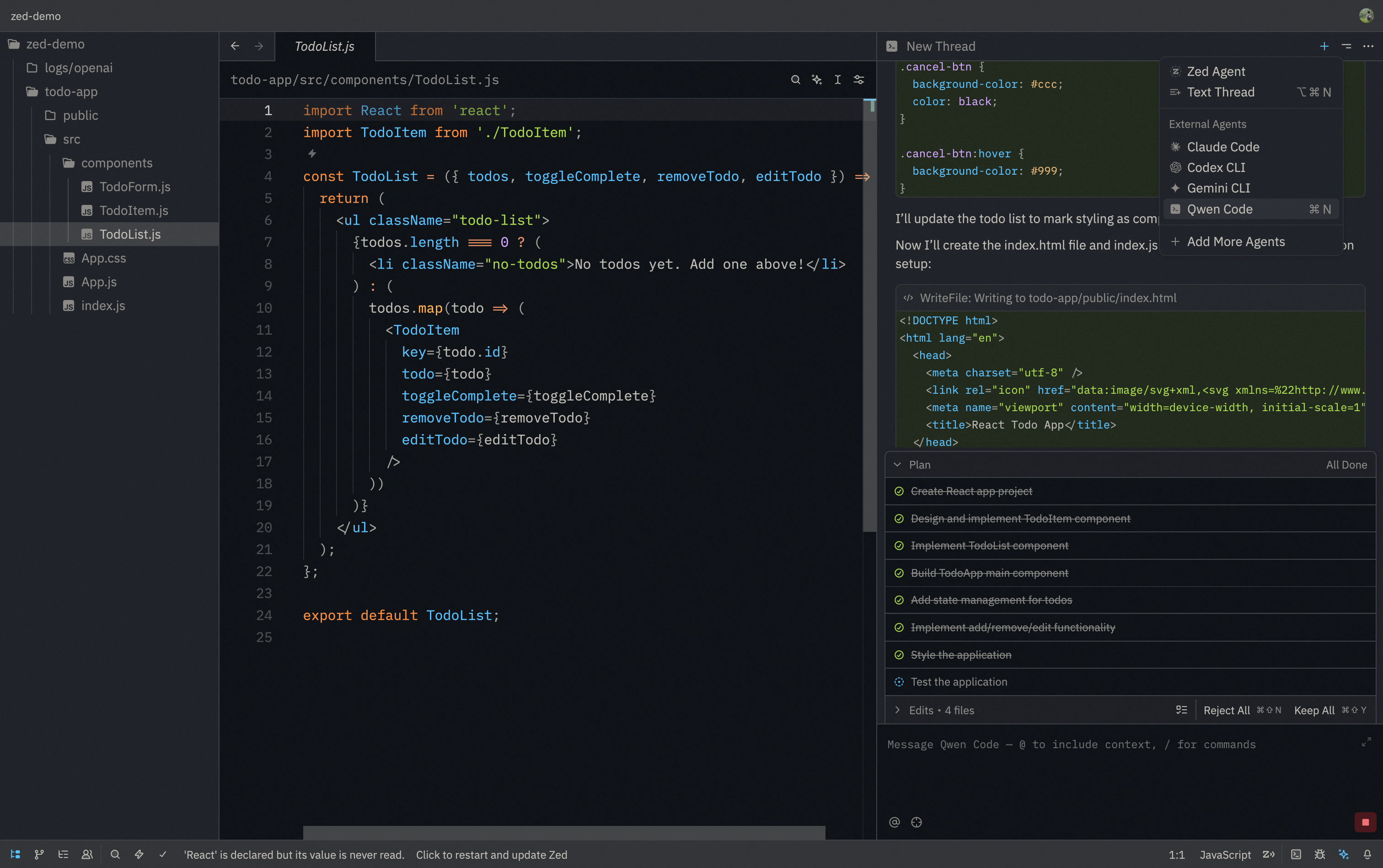

# Zed Editor

|

||||

|

||||

> Zed Editor provides native support for AI coding assistants through the Agent Control Protocol (ACP). This integration allows you to use Qwen Code directly within Zed's interface with real-time code suggestions.

|

||||

> Zed Editor provides native support for AI coding assistants through the Agent Client Protocol (ACP). This integration allows you to use Qwen Code directly within Zed's interface with real-time code suggestions.

|

||||

|

||||

|

||||

|

||||

@@ -20,9 +20,9 @@

|

||||

|

||||

1. Install Qwen Code CLI:

|

||||

|

||||

```bash

|

||||

npm install -g qwen-code

|

||||

```

|

||||

```bash

|

||||

npm install -g @qwen-code/qwen-code

|

||||

```

|

||||

|

||||

2. Download and install [Zed Editor](https://zed.dev/)

|

||||

|

||||

|

||||

12

package-lock.json

generated


@@ -1,12 +1,12 @@

|

||||

{

|

||||

"name": "@qwen-code/qwen-code",

|

||||

"version": "0.7.0-preview.1",

|

||||

"version": "0.7.1",

|

||||

"lockfileVersion": 3,

|

||||

"requires": true,

|

||||

"packages": {

|

||||

"": {

|

||||

"name": "@qwen-code/qwen-code",

|

||||

"version": "0.7.0-preview.1",

|

||||

"version": "0.7.1",

|

||||

"workspaces": [

|

||||

"packages/*"

|

||||

],

|

||||

@@ -17310,7 +17310,7 @@

|

||||

},

|

||||

"packages/cli": {

|

||||

"name": "@qwen-code/qwen-code",

|

||||

"version": "0.7.0-preview.1",

|

||||

"version": "0.7.1",

|

||||

"dependencies": {

|

||||

"@google/genai": "1.30.0",

|

||||

"@iarna/toml": "^2.2.5",

|

||||

@@ -17947,7 +17947,7 @@

|

||||

},

|

||||

"packages/core": {

|

||||

"name": "@qwen-code/qwen-code-core",

|

||||

"version": "0.7.0-preview.1",

|

||||

"version": "0.7.1",

|

||||

"hasInstallScript": true,

|

||||

"dependencies": {

|

||||

"@anthropic-ai/sdk": "^0.36.1",

|

||||

@@ -21408,7 +21408,7 @@

|

||||

},

|

||||

"packages/test-utils": {

|

||||

"name": "@qwen-code/qwen-code-test-utils",

|

||||

"version": "0.7.0-preview.1",

|

||||

"version": "0.7.1",

|

||||

"dev": true,

|

||||

"license": "Apache-2.0",

|

||||

"devDependencies": {

|

||||

@@ -21420,7 +21420,7 @@

|

||||

},

|

||||

"packages/vscode-ide-companion": {

|

||||

"name": "qwen-code-vscode-ide-companion",

|

||||

"version": "0.7.0-preview.1",

|

||||

"version": "0.7.1",

|

||||

"license": "LICENSE",

|

||||

"dependencies": {

|

||||

"@modelcontextprotocol/sdk": "^1.25.1",

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

{

|

||||

"name": "@qwen-code/qwen-code",

|

||||

"version": "0.7.0-preview.1",

|

||||

"version": "0.7.1",

|

||||

"engines": {

|

||||

"node": ">=20.0.0"

|

||||

},

|

||||

@@ -13,7 +13,7 @@

|

||||

"url": "git+https://github.com/QwenLM/qwen-code.git"

|

||||

},

|

||||

"config": {

|

||||

"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.0-preview.1"

|

||||

"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.1"

|

||||

},

|

||||

"scripts": {

|

||||

"start": "cross-env node scripts/start.js",

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

{

|

||||

"name": "@qwen-code/qwen-code",

|

||||

"version": "0.7.0-preview.1",

|

||||

"version": "0.7.1",

|

||||

"description": "Qwen Code",

|

||||

"repository": {

|

||||

"type": "git",

|

||||

@@ -33,7 +33,7 @@

|

||||

"dist"

|

||||

],

|

||||

"config": {

|

||||

"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.0-preview.1"

|

||||

"sandboxImageUri": "ghcr.io/qwenlm/qwen-code:0.7.1"

|

||||

},

|

||||

"dependencies": {

|

||||

"@google/genai": "1.30.0",

|

||||

|

||||

@@ -1196,11 +1196,6 @@ describe('Hierarchical Memory Loading (config.ts) - Placeholder Suite', () => {

|

||||

],

|

||||

true,

|

||||

'tree',

|

||||

{

|

||||

respectGitIgnore: false,

|

||||

respectQwenIgnore: true,

|

||||

},

|

||||

undefined, // maxDirs

|

||||

);

|

||||

});

|

||||

|

||||

|

||||

@@ -9,7 +9,6 @@ import {

|

||||

AuthType,

|

||||

Config,

|

||||

DEFAULT_QWEN_EMBEDDING_MODEL,

|

||||

DEFAULT_MEMORY_FILE_FILTERING_OPTIONS,

|

||||

FileDiscoveryService,

|

||||

getCurrentGeminiMdFilename,

|

||||

loadServerHierarchicalMemory,

|

||||

@@ -22,7 +21,6 @@ import {

|

||||

isToolEnabled,

|

||||

SessionService,

|

||||

type ResumedSessionData,

|

||||

type FileFilteringOptions,

|

||||

type MCPServerConfig,

|

||||

type ToolName,

|

||||

EditTool,

|

||||

@@ -334,7 +332,7 @@ export async function parseArguments(settings: Settings): Promise<CliArgs> {

|

||||

.option('experimental-skills', {

|

||||

type: 'boolean',

|

||||

description: 'Enable experimental Skills feature',

|

||||

default: false,

|

||||

default: settings.tools?.experimental?.skills ?? false,

|

||||

})

|

||||

.option('channel', {

|

||||

type: 'string',

|

||||
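The changed `default` above makes the `--experimental-skills` flag fall back to the `tools.experimental.skills` setting instead of a hard-coded `false`. A minimal sketch of the resulting precedence, assuming an explicitly passed flag wins over the setting (`SettingsSketch` and `resolveExperimentalSkills` are hypothetical names used only for illustration):

```typescript
// Hypothetical, trimmed-down shape of the settings object.
interface SettingsSketch {
  tools?: { experimental?: { skills?: boolean } };
}

// Sketch: explicit CLI flag > settings.json value > false.
function resolveExperimentalSkills(
  cliFlag: boolean | undefined,
  settings: SettingsSketch,
): boolean {
  return cliFlag ?? settings.tools?.experimental?.skills ?? false;
}

// Flag omitted, setting enabled: the setting applies.
console.log(resolveExperimentalSkills(undefined, { tools: { experimental: { skills: true } } }));
// Flag omitted, nothing configured: falls back to false.
console.log(resolveExperimentalSkills(undefined, {}));
```

Using `??` rather than `||` keeps an explicit `false` from being overridden by a later fallback.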

@@ -643,7 +641,6 @@ export async function loadHierarchicalGeminiMemory(

|

||||

extensionContextFilePaths: string[] = [],

|

||||

folderTrust: boolean,

|

||||

memoryImportFormat: 'flat' | 'tree' = 'tree',

|

||||

fileFilteringOptions?: FileFilteringOptions,

|

||||

): Promise<{ memoryContent: string; fileCount: number }> {

|

||||

// FIX: Use real, canonical paths for a reliable comparison to handle symlinks.

|

||||

const realCwd = fs.realpathSync(path.resolve(currentWorkingDirectory));

|

||||

@@ -669,8 +666,6 @@ export async function loadHierarchicalGeminiMemory(

|

||||

extensionContextFilePaths,

|

||||

folderTrust,

|

||||

memoryImportFormat,

|

||||

fileFilteringOptions,

|

||||

settings.context?.discoveryMaxDirs,

|

||||

);

|

||||

}

|

||||

|

||||

@@ -740,11 +735,6 @@ export async function loadCliConfig(

|

||||

|

||||

const fileService = new FileDiscoveryService(cwd);

|

||||

|

||||

const fileFiltering = {

|

||||

...DEFAULT_MEMORY_FILE_FILTERING_OPTIONS,

|

||||

...settings.context?.fileFiltering,

|

||||

};

|

||||

|

||||

const includeDirectories = (settings.context?.includeDirectories || [])

|

||||

.map(resolvePath)

|

||||

.concat((argv.includeDirectories || []).map(resolvePath));

|

||||

@@ -761,7 +751,6 @@ export async function loadCliConfig(

|

||||

extensionContextFilePaths,

|

||||

trustedFolder,

|

||||

memoryImportFormat,

|

||||

fileFiltering,

|

||||

);

|

||||

|

||||

let mcpServers = mergeMcpServers(settings, activeExtensions);

|

||||

@@ -874,11 +863,10 @@ export async function loadCliConfig(

|

||||

}

|

||||

};

|

||||

|

||||

if (

|

||||

!interactive &&

|

||||

!argv.experimentalAcp &&

|

||||

inputFormat !== InputFormat.STREAM_JSON

|

||||

) {

|

||||

// ACP mode check: must include both --acp (current) and --experimental-acp (deprecated).

|

||||

// Without this check, edit, write_file, run_shell_command would be excluded in ACP mode.

|

||||

const isAcpMode = argv.acp || argv.experimentalAcp;

|

||||

if (!interactive && !isAcpMode && inputFormat !== InputFormat.STREAM_JSON) {

|

||||

switch (approvalMode) {

|

||||

case ApprovalMode.PLAN:

|

||||

case ApprovalMode.DEFAULT:

|

||||

|

||||
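The condition introduced in this hunk can be read in isolation as a small predicate. The sketch below is an assumption-laden restatement, not the repository's code: `RunOptionsSketch` and `shouldRestrictTools` are hypothetical names, and the string values used for the input format stand in for the real `InputFormat` enum members.

```typescript
// Hypothetical stand-in for the real InputFormat enum.
type InputFormatSketch = 'text' | 'stream-json';

// Hypothetical subset of the parsed CLI arguments.
interface RunOptionsSketch {
  interactive: boolean;
  acp?: boolean; // current flag
  experimentalAcp?: boolean; // deprecated flag, still honoured
  inputFormat: InputFormatSketch;
}

// Sketch: tool restrictions apply only to non-interactive runs that are
// neither in ACP mode (either flag) nor reading stream-JSON input.
function shouldRestrictTools(opts: RunOptionsSketch): boolean {
  const isAcpMode = Boolean(opts.acp || opts.experimentalAcp);
  return !opts.interactive && !isAcpMode && opts.inputFormat !== 'stream-json';
}

console.log(shouldRestrictTools({ interactive: false, acp: true, inputFormat: 'text' }));
console.log(shouldRestrictTools({ interactive: false, inputFormat: 'text' }));
```

Checking both flags matters because, per the comment in the hunk, a run started with `--acp` alone would otherwise lose `edit`, `write_file`, and `run_shell_command`.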

@@ -106,7 +106,6 @@ const MIGRATION_MAP: Record<string, string> = {

|

||||

mcpServers: 'mcpServers',

|

||||

mcpServerCommand: 'mcp.serverCommand',

|

||||

memoryImportFormat: 'context.importFormat',

|

||||

memoryDiscoveryMaxDirs: 'context.discoveryMaxDirs',

|

||||

model: 'model.name',

|

||||

preferredEditor: 'general.preferredEditor',

|

||||

sandbox: 'tools.sandbox',

|

||||

|

||||

@@ -722,15 +722,6 @@ const SETTINGS_SCHEMA = {

|

||||

description: 'The format to use when importing memory.',

|

||||

showInDialog: false,

|

||||

},

|

||||

discoveryMaxDirs: {

|

||||

type: 'number',

|

||||

label: 'Memory Discovery Max Dirs',

|

||||

category: 'Context',

|

||||

requiresRestart: false,

|

||||

default: 200,

|

||||

description: 'Maximum number of directories to search for memory.',

|

||||

showInDialog: true,

|

||||

},

|

||||

includeDirectories: {

|

||||

type: 'array',

|

||||

label: 'Include Directories',

|

||||

@@ -981,6 +972,27 @@ const SETTINGS_SCHEMA = {

|

||||

description: 'The number of lines to keep when truncating tool output.',

|

||||

showInDialog: true,

|

||||

},

|

||||

experimental: {

|

||||

type: 'object',

|

||||

label: 'Experimental',

|

||||

category: 'Tools',

|

||||

requiresRestart: true,

|

||||

default: {},

|

||||

description: 'Experimental tool features.',

|

||||

showInDialog: false,

|

||||

properties: {

|

||||

skills: {

|

||||

type: 'boolean',

|

||||

label: 'Skills',

|

||||

category: 'Tools',

|

||||

requiresRestart: true,

|

||||

default: false,

|

||||

description:

|

||||

'Enable experimental Agent Skills feature. When enabled, Qwen Code can use Skills from .qwen/skills/ and ~/.qwen/skills/.',

|

||||

showInDialog: true,

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

},

|

||||

|

||||

|

||||

@@ -873,11 +873,11 @@ export default {

|

||||

'Session Stats': '会话统计',

|

||||

'Model Usage': '模型使用情况',

|

||||

Reqs: '请求数',

|

||||

'Input Tokens': '输入令牌',

|

||||

'Output Tokens': '输出令牌',

|

||||

'Input Tokens': '输入 token 数',

|

||||

'Output Tokens': '输出 token 数',

|

||||

'Savings Highlight:': '节省亮点:',

|

||||

'of input tokens were served from the cache, reducing costs.':

|

||||

'的输入令牌来自缓存,降低了成本',

|

||||

'从缓存载入 token ,降低了成本',

|

||||

'Tip: For a full token breakdown, run `/stats model`.':

|

||||

'提示:要查看完整的令牌明细,请运行 `/stats model`',

|

||||

'Model Stats For Nerds': '模型统计(技术细节)',

|

||||

|

||||

@@ -575,7 +575,6 @@ export const AppContainer = (props: AppContainerProps) => {

|

||||

config.getExtensionContextFilePaths(),

|

||||

config.isTrustedFolder(),

|

||||

settings.merged.context?.importFormat || 'tree', // Use setting or default to 'tree'

|

||||

config.getFileFilteringOptions(),

|

||||

);

|

||||

|

||||

config.setUserMemory(memoryContent);

|

||||

|

||||

@@ -54,9 +54,7 @@ describe('directoryCommand', () => {

|

||||

services: {

|

||||

config: mockConfig,

|

||||

settings: {

|

||||

merged: {

|

||||

memoryDiscoveryMaxDirs: 1000,

|

||||

},

|

||||

merged: {},

|

||||

},

|

||||

},

|

||||

ui: {

|

||||

|

||||

@@ -119,8 +119,6 @@ export const directoryCommand: SlashCommand = {

|

||||

config.getFolderTrust(),

|

||||

context.services.settings.merged.context?.importFormat ||

|

||||

'tree', // Use setting or default to 'tree'

|

||||

config.getFileFilteringOptions(),

|

||||

context.services.settings.merged.context?.discoveryMaxDirs,

|

||||

);

|

||||

config.setUserMemory(memoryContent);

|

||||

config.setGeminiMdFileCount(fileCount);

|

||||

|

||||

@@ -299,9 +299,7 @@ describe('memoryCommand', () => {

|

||||

services: {

|

||||

config: mockConfig,

|

||||

settings: {

|

||||

merged: {

|

||||

memoryDiscoveryMaxDirs: 1000,

|

||||

},

|

||||

merged: {},

|

||||

} as LoadedSettings,

|

||||

},

|

||||

});

|

||||

|

||||

@@ -315,8 +315,6 @@ export const memoryCommand: SlashCommand = {

|

||||

config.getFolderTrust(),

|

||||

context.services.settings.merged.context?.importFormat ||

|

||||

'tree', // Use setting or default to 'tree'

|

||||

config.getFileFilteringOptions(),

|

||||

context.services.settings.merged.context?.discoveryMaxDirs,

|

||||

);

|

||||

config.setUserMemory(memoryContent);

|

||||

config.setGeminiMdFileCount(fileCount);

|

||||

|

||||

@@ -1331,9 +1331,7 @@ describe('SettingsDialog', () => {

|

||||

truncateToolOutputThreshold: 50000,

|

||||

truncateToolOutputLines: 1000,

|

||||

},

|

||||

context: {

|

||||

discoveryMaxDirs: 500,

|

||||

},

|

||||

context: {},

|

||||

model: {

|

||||

maxSessionTurns: 100,

|

||||

skipNextSpeakerCheck: false,

|

||||

@@ -1466,7 +1464,6 @@ describe('SettingsDialog', () => {

|

||||

disableFuzzySearch: true,

|

||||

},

|

||||

loadMemoryFromIncludeDirectories: true,

|

||||

discoveryMaxDirs: 100,

|

||||

},

|

||||

});

|

||||

const onSelect = vi.fn();

|

||||

|

||||

@@ -8,7 +8,10 @@ import { describe, it, expect, beforeEach, afterEach, vi } from 'vitest';

|

||||

import * as fs from 'node:fs';

|

||||

import * as path from 'node:path';

|

||||

import * as os from 'node:os';

|

||||

import { updateSettingsFilePreservingFormat } from './commentJson.js';

|

||||

import {

|

||||

updateSettingsFilePreservingFormat,

|

||||

applyUpdates,

|

||||

} from './commentJson.js';

|

||||

|

||||

describe('commentJson', () => {

|

||||

let tempDir: string;

|

||||

@@ -180,3 +183,18 @@ describe('commentJson', () => {

|

||||

});

|

||||

});

|

||||

});

|

||||

|

||||

describe('applyUpdates', () => {

|

||||

it('should apply updates correctly', () => {

|

||||

const original = { a: 1, b: { c: 2 } };

|

||||

const updates = { b: { c: 3 } };

|

||||

const result = applyUpdates(original, updates);

|

||||

expect(result).toEqual({ a: 1, b: { c: 3 } });

|

||||

});

|

||||

it('should apply updates correctly when empty', () => {

|

||||

const original = { a: 1, b: { c: 2 } };

|

||||

const updates = { b: {} };

|

||||

const result = applyUpdates(original, updates);

|

||||

expect(result).toEqual({ a: 1, b: {} });

|

||||

});

|

||||

});

|

||||

|

||||

@@ -38,7 +38,7 @@ export function updateSettingsFilePreservingFormat(

|

||||

fs.writeFileSync(filePath, updatedContent, 'utf-8');

|

||||

}

|

||||

|

||||

function applyUpdates(

|

||||

export function applyUpdates(

|

||||

current: Record<string, unknown>,

|

||||

updates: Record<string, unknown>,

|

||||

): Record<string, unknown> {

|

||||

@@ -50,6 +50,7 @@ function applyUpdates(

|

||||

typeof value === 'object' &&

|

||||

value !== null &&

|

||||

!Array.isArray(value) &&

|

||||

Object.keys(value).length > 0 &&

|

||||

typeof result[key] === 'object' &&

|

||||

result[key] !== null &&

|

||||

!Array.isArray(result[key])

|

||||

|

||||
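The added `Object.keys(value).length > 0` guard changes how an empty object in `updates` is treated: it no longer recurses into the existing value, so it replaces it. The following is a simplified sketch of that behaviour on plain objects, assuming the non-recursive branch simply assigns the new value; `applyUpdatesSketch` is a hypothetical name, and the real `applyUpdates` may differ in how it handles comment-preserving JSON.

```typescript
type JsonObject = Record<string, unknown>;

// Type guard for non-null, non-array objects.
function isPlainObject(value: unknown): value is JsonObject {
  return typeof value === 'object' && value !== null && !Array.isArray(value);
}

// Sketch: recursive merge in which an empty-object update overwrites the
// existing value instead of being merged into it.
function applyUpdatesSketch(current: JsonObject, updates: JsonObject): JsonObject {
  const result: JsonObject = { ...current };
  for (const [key, value] of Object.entries(updates)) {
    const existing = result[key];
    if (isPlainObject(value) && Object.keys(value).length > 0 && isPlainObject(existing)) {
      result[key] = applyUpdatesSketch(existing, value);
    } else {
      result[key] = value;
    }
  }
  return result;
}

console.log(JSON.stringify(applyUpdatesSketch({ a: 1, b: { c: 2 } }, { b: { c: 3 } })));
console.log(JSON.stringify(applyUpdatesSketch({ a: 1, b: { c: 2 } }, { b: {} })));
```

The two calls mirror the two test cases added to the `applyUpdates` suite in this diff.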

@@ -1,6 +1,6 @@

|

||||

{

|

||||

"name": "@qwen-code/qwen-code-core",

|

||||

"version": "0.7.0-preview.1",

|

||||

"version": "0.7.1",

|

||||

"description": "Qwen Code Core",

|

||||

"repository": {

|

||||

"type": "git",

|

||||

|

||||

@@ -404,7 +404,7 @@ export class Config {

|

||||

private toolRegistry!: ToolRegistry;

|

||||

private promptRegistry!: PromptRegistry;

|

||||

private subagentManager!: SubagentManager;

|

||||

private skillManager!: SkillManager;

|

||||

private skillManager: SkillManager | null = null;

|

||||

private fileSystemService: FileSystemService;

|

||||

private contentGeneratorConfig!: ContentGeneratorConfig;

|

||||

private contentGeneratorConfigSources: ContentGeneratorConfigSources = {};

|

||||

@@ -672,8 +672,10 @@ export class Config {
     }
     this.promptRegistry = new PromptRegistry();
     this.subagentManager = new SubagentManager(this);
-    this.skillManager = new SkillManager(this);
-    await this.skillManager.startWatching();
+    if (this.getExperimentalSkills()) {
+      this.skillManager = new SkillManager(this);
+      await this.skillManager.startWatching();
+    }

     // Load session subagents if they were provided before initialization
     if (this.sessionSubagents.length > 0) {

@@ -1439,7 +1441,7 @@ export class Config {
     return this.subagentManager;
   }

-  getSkillManager(): SkillManager {
+  getSkillManager(): SkillManager | null {
     return this.skillManager;
   }


@@ -270,28 +270,28 @@ export function createContentGeneratorConfig(
 }

 export async function createContentGenerator(
-  config: ContentGeneratorConfig,
-  gcConfig: Config,
+  generatorConfig: ContentGeneratorConfig,
+  config: Config,
   isInitialAuth?: boolean,
 ): Promise<ContentGenerator> {
-  const validation = validateModelConfig(config, false);
+  const validation = validateModelConfig(generatorConfig, false);
   if (!validation.valid) {
     throw new Error(validation.errors.map((e) => e.message).join('\n'));
   }

-  if (config.authType === AuthType.USE_OPENAI) {
-    // Import OpenAIContentGenerator dynamically to avoid circular dependencies
+  const authType = generatorConfig.authType;
+  if (!authType) {
+    throw new Error('ContentGeneratorConfig must have an authType');
+  }
+
+  let baseGenerator: ContentGenerator;
+
+  if (authType === AuthType.USE_OPENAI) {
     const { createOpenAIContentGenerator } = await import(
       './openaiContentGenerator/index.js'
     );
-
-    // Always use OpenAIContentGenerator, logging is controlled by enableOpenAILogging flag
-    const generator = createOpenAIContentGenerator(config, gcConfig);
-    return new LoggingContentGenerator(generator, gcConfig);
-  }
-
-  if (config.authType === AuthType.QWEN_OAUTH) {
-    // Import required classes dynamically
+    baseGenerator = createOpenAIContentGenerator(generatorConfig, config);
+  } else if (authType === AuthType.QWEN_OAUTH) {
     const { getQwenOAuthClient: getQwenOauthClient } = await import(
       '../qwen/qwenOAuth2.js'
     );

@@ -300,44 +300,38 @@ export async function createContentGenerator(
     );

     try {
-      // Get the Qwen OAuth client (now includes integrated token management)
-      // If this is initial auth, require cached credentials to detect missing credentials
       const qwenClient = await getQwenOauthClient(
-        gcConfig,
+        config,
         isInitialAuth ? { requireCachedCredentials: true } : undefined,
       );
-
-      // Create the content generator with dynamic token management
-      const generator = new QwenContentGenerator(qwenClient, config, gcConfig);
-      return new LoggingContentGenerator(generator, gcConfig);
+      baseGenerator = new QwenContentGenerator(
+        qwenClient,
+        generatorConfig,
+        config,
+      );
     } catch (error) {
       throw new Error(
         `${error instanceof Error ? error.message : String(error)}`,
       );
     }
-  }
-
-  if (config.authType === AuthType.USE_ANTHROPIC) {
+  } else if (authType === AuthType.USE_ANTHROPIC) {
     const { createAnthropicContentGenerator } = await import(
       './anthropicContentGenerator/index.js'
     );
-
-    const generator = createAnthropicContentGenerator(config, gcConfig);
-    return new LoggingContentGenerator(generator, gcConfig);
-  }
-
-  if (
-    config.authType === AuthType.USE_GEMINI ||
-    config.authType === AuthType.USE_VERTEX_AI
+    baseGenerator = createAnthropicContentGenerator(generatorConfig, config);
+  } else if (
+    authType === AuthType.USE_GEMINI ||
+    authType === AuthType.USE_VERTEX_AI
   ) {
     const { createGeminiContentGenerator } = await import(
       './geminiContentGenerator/index.js'
     );
-    const generator = createGeminiContentGenerator(config, gcConfig);
-    return new LoggingContentGenerator(generator, gcConfig);
+    baseGenerator = createGeminiContentGenerator(generatorConfig, config);
+  } else {
+    throw new Error(
+      `Error creating contentGenerator: Unsupported authType: ${authType}`,
+    );
   }

-  throw new Error(
-    `Error creating contentGenerator: Unsupported authType: ${config.authType}`,
-  );
+  return new LoggingContentGenerator(baseGenerator, config, generatorConfig);
 }
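The refactor above replaces four early returns, each wrapping its own generator, with a single `if / else if` chain that assigns `baseGenerator` and one wrapping call at the end. The shape of that pattern can be sketched in isolation as below. Every name in this block (`TextGenerator`, `EchoGenerator`, `UpperGenerator`, `LoggingGenerator`, `createGenerator`, the `kind` strings) is hypothetical and stands in for the real generators and `AuthType` values, which are not reproduced here.

```typescript
interface TextGenerator {
  generate(prompt: string): string;
}

class EchoGenerator implements TextGenerator {
  generate(prompt: string): string {
    return prompt;
  }
}

class UpperGenerator implements TextGenerator {
  generate(prompt: string): string {
    return prompt.toUpperCase();
  }
}

// Decorator that records each prompt before delegating to the wrapped generator.
class LoggingGenerator implements TextGenerator {
  readonly log: string[] = [];
  private readonly wrapped: TextGenerator;

  constructor(wrapped: TextGenerator) {
    this.wrapped = wrapped;
  }

  generate(prompt: string): string {
    this.log.push(prompt);
    return this.wrapped.generate(prompt);
  }
}

// Pick the base generator in one chain, fail fast on a missing or unknown
// kind, and wrap exactly once at the end.
function createGenerator(kind: string | undefined): LoggingGenerator {
  if (!kind) {
    throw new Error('kind is required');
  }

  let base: TextGenerator;
  if (kind === 'echo') {
    base = new EchoGenerator();
  } else if (kind === 'upper') {
    base = new UpperGenerator();
  } else {
    throw new Error(`Unsupported kind: ${kind}`);
  }

  return new LoggingGenerator(base);
}

const upper = createGenerator('upper');
const upperOutput = upper.generate('hi');
```

Keeping the wrapping call in one place means a change to the wrapper's constructor, such as the extra `generatorConfig` argument in this diff, only has to be made once.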

@@ -12,6 +12,7 @@ import type {
 import { GenerateContentResponse } from '@google/genai';
 import type { Config } from '../../config/config.js';
 import type { ContentGenerator } from '../contentGenerator.js';
+import { AuthType } from '../contentGenerator.js';
 import { LoggingContentGenerator } from './index.js';
 import { OpenAIContentConverter } from '../openaiContentGenerator/converter.js';
 import {

@@ -50,14 +51,17 @@ const convertGeminiResponseToOpenAISpy = vi
     choices: [],
   } as OpenAI.Chat.ChatCompletion);

-const createConfig = (overrides: Record<string, unknown> = {}): Config =>
-  ({
-    getContentGeneratorConfig: () => ({
-      authType: 'openai',
-      enableOpenAILogging: false,
-      ...overrides,
-    }),
-  }) as Config;
+const createConfig = (overrides: Record<string, unknown> = {}): Config => {
+  const configContent = {
+    authType: 'openai',
+    enableOpenAILogging: false,
+    ...overrides,
+  };
+  return {
+    getContentGeneratorConfig: () => configContent,
+    getAuthType: () => configContent.authType as AuthType | undefined,
+  } as Config;
+};

 const createWrappedGenerator = (
   generateContent: ContentGenerator['generateContent'],

@@ -124,13 +128,17 @@ describe('LoggingContentGenerator', () => {
       ),
       vi.fn(),
     );
+    const generatorConfig = {
+      model: 'test-model',
+      authType: AuthType.USE_OPENAI,
+      enableOpenAILogging: true,
+      openAILoggingDir: 'logs',
+      schemaCompliance: 'openapi_30' as const,
+    };
     const generator = new LoggingContentGenerator(
       wrapped,
-      createConfig({
-        enableOpenAILogging: true,
-        openAILoggingDir: 'logs',
-        schemaCompliance: 'openapi_30',
-      }),
+      createConfig(),
+      generatorConfig,
     );

     const request = {

@@ -225,9 +233,15 @@ describe('LoggingContentGenerator', () => {
       vi.fn().mockRejectedValue(error),
       vi.fn(),
     );
+    const generatorConfig = {
+      model: 'test-model',
+      authType: AuthType.USE_OPENAI,
+      enableOpenAILogging: true,
+    };
     const generator = new LoggingContentGenerator(
       wrapped,
-      createConfig({ enableOpenAILogging: true }),
+      createConfig(),
+      generatorConfig,
     );

     const request = {

@@ -293,9 +307,15 @@ describe('LoggingContentGenerator', () => {
         })(),
       ),
     );
+    const generatorConfig = {
+      model: 'test-model',
+      authType: AuthType.USE_OPENAI,
+      enableOpenAILogging: true,
+    };
     const generator = new LoggingContentGenerator(
       wrapped,
-      createConfig({ enableOpenAILogging: true }),
+      createConfig(),
+      generatorConfig,
     );

     const request = {

@@ -345,9 +365,15 @@ describe('LoggingContentGenerator', () => {
         })(),
       ),
     );
+    const generatorConfig = {
+      model: 'test-model',
+      authType: AuthType.USE_OPENAI,
+      enableOpenAILogging: true,
+    };
     const generator = new LoggingContentGenerator(
       wrapped,
-      createConfig({ enableOpenAILogging: true }),
+      createConfig(),
+      generatorConfig,
     );

     const request = {

@@ -31,7 +31,10 @@ import {
   logApiRequest,
   logApiResponse,
 } from '../../telemetry/loggers.js';
-import type { ContentGenerator } from '../contentGenerator.js';
+import type {
+  ContentGenerator,
+  ContentGeneratorConfig,
+} from '../contentGenerator.js';
 import { isStructuredError } from '../../utils/quotaErrorDetection.js';
 import { OpenAIContentConverter } from '../openaiContentGenerator/converter.js';
 import { OpenAILogger } from '../../utils/openaiLogger.js';

@@ -50,9 +53,11 @@ export class LoggingContentGenerator implements ContentGenerator {
   constructor(
     private readonly wrapped: ContentGenerator,
     private readonly config: Config,
+    generatorConfig: ContentGeneratorConfig,
   ) {
-    const generatorConfig = this.config.getContentGeneratorConfig();
-    if (generatorConfig?.enableOpenAILogging) {
+    // Extract fields needed for initialization from passed config
+    // (config.getContentGeneratorConfig() may not be available yet during refreshAuth)
+    if (generatorConfig.enableOpenAILogging) {
       this.openaiLogger = new OpenAILogger(generatorConfig.openAILoggingDir);
       this.schemaCompliance = generatorConfig.schemaCompliance;
     }

@@ -89,7 +94,7 @@ export class LoggingContentGenerator implements ContentGenerator {
         model,
         durationMs,
         prompt_id,
-        this.config.getContentGeneratorConfig()?.authType,
+        this.config.getAuthType(),
         usageMetadata,
         responseText,
       ),

@@ -126,7 +131,7 @@ export class LoggingContentGenerator implements ContentGenerator {
         errorMessage,
         durationMs,
         prompt_id,
-        this.config.getContentGeneratorConfig()?.authType,
+        this.config.getAuthType(),
         errorType,
         errorStatus,
       ),

@@ -112,6 +112,62 @@ You are a helpful assistant with this skill.
       expect(config.filePath).toBe(validSkillConfig.filePath);
     });

+    it('should parse markdown with CRLF line endings', () => {
+      const markdownCrlf = `---\r
+name: test-skill\r
+description: A test skill\r
+---\r
+\r
+You are a helpful assistant with this skill.\r
+`;
+
+      const config = manager.parseSkillContent(
+        markdownCrlf,
+        validSkillConfig.filePath,
+        'project',
+      );
+
+      expect(config.name).toBe('test-skill');
+      expect(config.description).toBe('A test skill');
+      expect(config.body).toBe('You are a helpful assistant with this skill.');
+    });
+
+    it('should parse markdown with UTF-8 BOM', () => {
+      const markdownWithBom = `\uFEFF---
+name: test-skill
+description: A test skill
+---
+
+You are a helpful assistant with this skill.
+`;
+
+      const config = manager.parseSkillContent(
+        markdownWithBom,
+        validSkillConfig.filePath,
+        'project',
+      );
+
+      expect(config.name).toBe('test-skill');
+      expect(config.description).toBe('A test skill');
+    });
+
+    it('should parse markdown when body is empty and file ends after frontmatter', () => {
+      const frontmatterOnly = `---
+name: test-skill
+description: A test skill
+---`;
+
+      const config = manager.parseSkillContent(
+        frontmatterOnly,
+        validSkillConfig.filePath,
+        'project',
+      );
+
+      expect(config.name).toBe('test-skill');
+      expect(config.description).toBe('A test skill');
+      expect(config.body).toBe('');
+    });
+
     it('should parse content with allowedTools', () => {
       const markdownWithTools = `---
 name: test-skill

@@ -235,6 +235,7 @@ export class SkillManager {
     }

     this.watchStarted = true;
+    await this.ensureUserSkillsDir();
     await this.refreshCache();
     this.updateWatchersFromCache();
   }

@@ -306,9 +307,11 @@ export class SkillManager {
     level: SkillLevel,
   ): SkillConfig {
     try {
+      const normalizedContent = normalizeSkillFileContent(content);
+
       // Split frontmatter and content
-      const frontmatterRegex = /^---\n([\s\S]*?)\n---\n([\s\S]*)$/;
-      const match = content.match(frontmatterRegex);
+      const frontmatterRegex = /^---\n([\s\S]*?)\n---(?:\n|$)([\s\S]*)$/;
+      const match = normalizedContent.match(frontmatterRegex);

       if (!match) {
         throw new Error('Invalid format: missing YAML frontmatter');

@@ -486,29 +489,14 @@ export class SkillManager {
   }

   private updateWatchersFromCache(): void {
-    const desiredPaths = new Set<string>();
-
-    for (const level of ['project', 'user'] as const) {
-      const baseDir = this.getSkillsBaseDir(level);
-      const parentDir = path.dirname(baseDir);
-      if (fsSync.existsSync(parentDir)) {
-        desiredPaths.add(parentDir);
-      }
-      if (fsSync.existsSync(baseDir)) {
-        desiredPaths.add(baseDir);
-      }
-
-      const levelSkills = this.skillsCache?.get(level) || [];
-      for (const skill of levelSkills) {
-        const skillDir = path.dirname(skill.filePath);
-        if (fsSync.existsSync(skillDir)) {
-          desiredPaths.add(skillDir);
-        }
-      }
-    }
+    const watchTargets = new Set<string>(
+      (['project', 'user'] as const)
+        .map((level) => this.getSkillsBaseDir(level))
+        .filter((baseDir) => fsSync.existsSync(baseDir)),
+    );

     for (const existingPath of this.watchers.keys()) {
-      if (!desiredPaths.has(existingPath)) {
+      if (!watchTargets.has(existingPath)) {
         void this.watchers
           .get(existingPath)
           ?.close()

@@ -522,7 +510,7 @@ export class SkillManager {
       }
     }

-    for (const watchPath of desiredPaths) {
+    for (const watchPath of watchTargets) {
       if (this.watchers.has(watchPath)) {
         continue;
       }

@@ -557,4 +545,26 @@ export class SkillManager {
       void this.refreshCache().then(() => this.updateWatchersFromCache());
     }, 150);
   }
+
+  private async ensureUserSkillsDir(): Promise<void> {
+    const baseDir = this.getSkillsBaseDir('user');
+    try {
+      await fs.mkdir(baseDir, { recursive: true });
+    } catch (error) {
+      console.warn(
+        `Failed to create user skills directory at ${baseDir}:`,
+        error,
+      );
+    }
+  }
+}
+
+function normalizeSkillFileContent(content: string): string {
+  // Strip UTF-8 BOM to ensure frontmatter starts at the first character.
+  let normalized = content.replace(/^\uFEFF/, '');
+
+  // Normalize line endings so skills authored on Windows (CRLF) parse correctly.
+  normalized = normalized.replace(/\r\n/g, '\n').replace(/\r/g, '\n');
+
+  return normalized;
 }
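Taken together, the normalization helper and the relaxed frontmatter regex let a skill file parse whether it starts with a BOM, uses CRLF line endings, or ends right after the closing `---`. A minimal, self-contained sketch of that pipeline follows. `normalizeContent` and `splitFrontmatter` are hypothetical names for illustration; the two `replace` steps and the regex are taken from the diff, while trimming the body is an assumption made here to match the expectations in the new tests.

```typescript
// Strip a leading UTF-8 BOM, then convert CRLF and lone CR to LF.
function normalizeContent(content: string): string {
  return content
    .replace(/^\uFEFF/, '')
    .replace(/\r\n/g, '\n')
    .replace(/\r/g, '\n');
}

// The closing delimiter may be followed by a newline or by end of input,
// so a file that ends immediately after the frontmatter still matches.
const FRONTMATTER = /^---\n([\s\S]*?)\n---(?:\n|$)([\s\S]*)$/;

// Hypothetical splitter: returns null when no frontmatter block is found.
function splitFrontmatter(
  content: string,
): { frontmatter: string; body: string } | null {
  const match = normalizeContent(content).match(FRONTMATTER);
  if (!match) {
    return null;
  }
  // Trimming the body is an assumption of this sketch.
  return { frontmatter: match[1] ?? '', body: (match[2] ?? '').trim() };
}

const windowsFile = '\uFEFF---\r\nname: test-skill\r\n---\r\n\r\nBody text.\r\n';
const parsedWindows = splitFrontmatter(windowsFile);
const parsedBare = splitFrontmatter('---\nname: test-skill\n---');
const parsedInvalid = splitFrontmatter('no frontmatter here');
```

With the previous regex, which required `\n` after the closing `---`, the frontmatter-only input would not have matched at all.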

@@ -53,7 +53,7 @@ export class SkillTool extends BaseDeclarativeTool<SkillParams, ToolResult> {
       false, // canUpdateOutput
     );

-    this.skillManager = config.getSkillManager();
+    this.skillManager = config.getSkillManager()!;
     this.skillManager.addChangeListener(() => {
       void this.refreshSkills();
     });

@@ -1,232 +0,0 @@

|

||||

/**

|

||||

* @license

|

||||

* Copyright 2025 Google LLC

|

||||

* SPDX-License-Identifier: Apache-2.0

|

||||

*/

|

||||

|

||||

import { describe, it, expect, beforeEach, afterEach } from 'vitest';

|

||||

import * as fsPromises from 'node:fs/promises';

|

||||

import * as path from 'node:path';

|

||||

import * as os from 'node:os';

|

||||

import { bfsFileSearch } from './bfsFileSearch.js';

|

||||

import { FileDiscoveryService } from '../services/fileDiscoveryService.js';

|

||||

|

||||

describe('bfsFileSearch', () => {

|

||||

let testRootDir: string;

|

||||

|

||||

async function createEmptyDir(...pathSegments: string[]) {

|

||||

const fullPath = path.join(testRootDir, ...pathSegments);

|

||||

await fsPromises.mkdir(fullPath, { recursive: true });

|

||||

return fullPath;

|

||||

}

|

||||

|

||||

async function createTestFile(content: string, ...pathSegments: string[]) {

|

||||

const fullPath = path.join(testRootDir, ...pathSegments);

|

||||

await fsPromises.mkdir(path.dirname(fullPath), { recursive: true });

|

||||

await fsPromises.writeFile(fullPath, content);

|

||||

return fullPath;

|

||||

}

|

||||

|

||||

beforeEach(async () => {

|

||||

testRootDir = await fsPromises.mkdtemp(

|

||||

path.join(os.tmpdir(), 'bfs-file-search-test-'),

|

||||

);

|

||||

});

|

||||

|

||||

afterEach(async () => {

|

||||

await fsPromises.rm(testRootDir, { recursive: true, force: true });

|

||||

});

|

||||

|

||||

it('should find a file in the root directory', async () => {

|

||||

const targetFilePath = await createTestFile('content', 'target.txt');

|

||||

const result = await bfsFileSearch(testRootDir, { fileName: 'target.txt' });

|

||||

expect(result).toEqual([targetFilePath]);

|

||||

});

|

||||

|

||||

it('should find a file in a nested directory', async () => {

|

||||

const targetFilePath = await createTestFile(

|

||||

'content',

|

||||

'a',

|

||||

'b',

|

||||

'target.txt',

|

||||

);

|

||||

const result = await bfsFileSearch(testRootDir, { fileName: 'target.txt' });

|

||||

expect(result).toEqual([targetFilePath]);

|

||||

});

|

||||

|

||||

it('should find multiple files with the same name', async () => {

|

||||

const targetFilePath1 = await createTestFile('content1', 'a', 'target.txt');

|

||||

const targetFilePath2 = await createTestFile('content2', 'b', 'target.txt');

|

||||

const result = await bfsFileSearch(testRootDir, { fileName: 'target.txt' });

|

||||

result.sort();

|

||||

expect(result).toEqual([targetFilePath1, targetFilePath2].sort());

|

||||

});

|

||||

|

||||

it('should return an empty array if no file is found', async () => {

|

||||

await createTestFile('content', 'other.txt');

|

||||

const result = await bfsFileSearch(testRootDir, { fileName: 'target.txt' });

|

||||

expect(result).toEqual([]);

|

||||

});

|

||||

|

||||

it('should ignore directories specified in ignoreDirs', async () => {

|

||||

await createTestFile('content', 'ignored', 'target.txt');

|

||||

const targetFilePath = await createTestFile(

|

||||

'content',

|

||||

'not-ignored',

|

||||

'target.txt',

|

||||

);

|

||||

const result = await bfsFileSearch(testRootDir, {

|

||||

fileName: 'target.txt',

|

||||

ignoreDirs: ['ignored'],

|

||||

});

|

||||

expect(result).toEqual([targetFilePath]);

|

||||

});

|

||||

|

||||

it('should respect the maxDirs limit and not find the file', async () => {

|

||||

await createTestFile('content', 'a', 'b', 'c', 'target.txt');

|

||||

const result = await bfsFileSearch(testRootDir, {

|

||||

fileName: 'target.txt',

|

||||

maxDirs: 3,

|

||||

});

|

||||

expect(result).toEqual([]);

|

||||

});

|

||||

|

||||

it('should respect the maxDirs limit and find the file', async () => {

|

||||

const targetFilePath = await createTestFile(

|

||||

'content',

|

||||

'a',

|

||||

'b',

|

||||

'c',

|

||||

'target.txt',

|

||||

);

|

||||

const result = await bfsFileSearch(testRootDir, {

|

||||

fileName: 'target.txt',

|

||||

maxDirs: 4,

|

||||

});

|

||||

expect(result).toEqual([targetFilePath]);

|

||||

});

|

||||

|

||||

describe('with FileDiscoveryService', () => {

|

||||

let projectRoot: string;

|

||||

|

||||

beforeEach(async () => {

|

||||

projectRoot = await createEmptyDir('project');

|

||||

});

|

||||

|

||||

it('should ignore gitignored files', async () => {

|

||||

await createEmptyDir('project', '.git');

|

||||

await createTestFile('node_modules/', 'project', '.gitignore');

|

||||

await createTestFile('content', 'project', 'node_modules', 'target.txt');

|

||||

const targetFilePath = await createTestFile(

|

||||

'content',

|

||||

'project',

|

||||

'not-ignored',

|

||||

'target.txt',

|

||||

);

|

||||

|

||||

const fileService = new FileDiscoveryService(projectRoot);

|

||||

const result = await bfsFileSearch(projectRoot, {

|

||||

fileName: 'target.txt',

|

||||

fileService,

|

||||

fileFilteringOptions: {

|

||||

respectGitIgnore: true,

|

||||

respectQwenIgnore: true,

|

||||

},

|

||||

});

|

||||

|

||||

expect(result).toEqual([targetFilePath]);

|

||||

});

|

||||

|

||||

it('should ignore qwenignored files', async () => {

|

||||

await createTestFile('node_modules/', 'project', '.qwenignore');

|

||||

await createTestFile('content', 'project', 'node_modules', 'target.txt');

|

||||

const targetFilePath = await createTestFile(

|

||||

'content',

|

||||

'project',

|

||||

'not-ignored',

|

||||

'target.txt',

|

||||

);

|

||||

|

||||

const fileService = new FileDiscoveryService(projectRoot);

|

||||

const result = await bfsFileSearch(projectRoot, {

|

||||

fileName: 'target.txt',

|

||||

fileService,

|

||||

fileFilteringOptions: {

|

||||

respectGitIgnore: false,

|

||||

respectQwenIgnore: true,

|

||||

},

|

||||

});

|

||||

|

||||

expect(result).toEqual([targetFilePath]);

|

||||

});

|

||||

|

||||

it('should not ignore files if respect flags are false', async () => {

|

||||

await createEmptyDir('project', '.git');

|

||||

await createTestFile('node_modules/', 'project', '.gitignore');

|

||||

const target1 = await createTestFile(

|

||||

'content',

|

||||

'project',

|

||||

'node_modules',

|

||||

'target.txt',

|

||||

);

|

||||

const target2 = await createTestFile(

|

||||

'content',

|

||||

'project',

|

||||

'not-ignored',

|

||||

'target.txt',

|

||||

);

|

||||

|

||||

const fileService = new FileDiscoveryService(projectRoot);

|

||||

const result = await bfsFileSearch(projectRoot, {

|

||||

fileName: 'target.txt',

|

||||

fileService,

|

||||

fileFilteringOptions: {

|

||||

respectGitIgnore: false,

|

||||

respectQwenIgnore: false,

|

||||

},

|

||||

});

|

||||

|

||||

expect(result.sort()).toEqual([target1, target2].sort());

|

||||

});

|

||||

});

|

||||

|

||||

it('should find all files in a complex directory structure', async () => {

|

||||

// Create a complex directory structure to test correctness at scale

|

||||

// without flaky performance checks.

|

||||

const numDirs = 50;

|

||||

const numFilesPerDir = 2;

|

||||

const numTargetDirs = 10;

|

||||

|

||||

const dirCreationPromises: Array<Promise<unknown>> = [];

|

||||

for (let i = 0; i < numDirs; i++) {

|

||||

dirCreationPromises.push(createEmptyDir(`dir${i}`));

|

||||

dirCreationPromises.push(createEmptyDir(`dir${i}`, 'subdir1'));

|

||||

dirCreationPromises.push(createEmptyDir(`dir${i}`, 'subdir2'));

|

||||

dirCreationPromises.push(createEmptyDir(`dir${i}`, 'subdir1', 'deep'));

|

||||

}

|

||||

await Promise.all(dirCreationPromises);

|

||||

|

||||

const fileCreationPromises: Array<Promise<string>> = [];

|

||||

for (let i = 0; i < numTargetDirs; i++) {

|

||||

// Add target files in some directories

|

||||

fileCreationPromises.push(

|

||||

createTestFile('content', `dir${i}`, 'QWEN.md'),

|

||||

);

|

||||

fileCreationPromises.push(

|

||||

createTestFile('content', `dir${i}`, 'subdir1', 'QWEN.md'),

|

||||

);

|

||||

}

|

||||

const expectedFiles = await Promise.all(fileCreationPromises);

|

||||

|

||||

const result = await bfsFileSearch(testRootDir, {

|

||||

fileName: 'QWEN.md',

|

||||

// Provide a generous maxDirs limit to ensure it doesn't prematurely stop

|

||||

// in this large test case. Total dirs created is 200.

|

||||

maxDirs: 250,

|

||||

});

|

||||

|

||||

// Verify we found the exact files we created

|

||||

expect(result.length).toBe(numTargetDirs * numFilesPerDir);

|

||||

expect(result.sort()).toEqual(expectedFiles.sort());

|

||||

});

|

||||

});

|

||||

@@ -1,131 +0,0 @@

|

||||

/**

|

||||

* @license

|

||||

* Copyright 2025 Google LLC

|

||||

* SPDX-License-Identifier: Apache-2.0

|

||||

*/

|

||||

|

||||